Synthesis AI for Teleconferencing / Web-conferencing

Overview

COVID-19 accelerated the switch to remote work. According to this survey, 92% of respondents expect to work from home at least one day per week after COVID-19, and 80% expect to work from home at least three days per week. That means a lot more web conferencing calls.

Unfortunately, the quality of many video conferencing applications leaves much to be desired, particularly for employees who call in from home and other non-office environments. Poor lighting, faulty background removal, cropped arms and hair, and lag are the norm. To improve this, Synthesis AI offers enhanced capabilities to simulate web conferencing across a broad set of environments and scenarios without compromising customer privacy. We've also shown that our synthetic data "just works" for many of the machine learning applications related to video conferencing (read more in our Synthetic Data Case Studies: It Just Works blog post).

Our API provides the ability to mimic conferencing behavior in office, conference room, and home environments so you can quickly test and iterate machine learning models across a broader set of settings and situations without spinning up a massive data collection effort. This includes thousands of unique identities with granular control of mouth opening, gaze angle, head pose, accessories, gestures, lighting environments, camera systems (e.g. multi-camera modules), and more.

This document aims to provide copy-and-paste input examples to our API that serve many of the web-conferencing scenarios, including:

- Matting

- Gesture Recognition

- Attention Level

Workflow

The standard workflow for the Synthesis AI Face API is:

- Register Account, Download & Setup CLI

- Select Identities for the Face API with the Identities API

- Create Face API job with input JSON

- Download job output with CLI

- Parse outputs into your ML pipeline

Hello Teleconferencing World

Video conferences take place in a narrow set of human settings compared to the rest of everyday life. They usually happen at home or in the office, and only occasionally outdoors on a phone. In addition, participants commonly wear headphones and prescription glasses but rarely wear sunglasses. To make it easy to get these prototypical video conference settings, the JSON provided contains the following features:

- 2 identities with 1 render per identity (selected via Identities API)

- Camera placement similar to a desktop monitor

- Primarily indoor environments & lighting

- Transparent Glasses (20% of images)

- Headphones (20% of images)

- Facial Expressions: Eyes closing (60% of images)

- Hand Gestures: 8 gestures related to web conferencing (60% of images)

- Head Turn: head turn left (40°), 40% of images

- Facial Hair (40% of images)

- Variety of clothing

To process the outputs more easily, we provide a synthesisai library in Python, and output documentation.

Teleconferencing Quickstart JSON

{

"humans": [{

"identities": {

"ids": [

293

],

"renders_per_identity": 1

},

"clothing": [{

"outfit": ["longsleeve_dress_brown"],

"sex_matched_only": true,

"percent": 100

}],

"skin": {

"highest_resolution": true

},

"facial_attributes": {

"expression": [{

"name": [

"eyes_squint"

],

"intensity": {

"type": "range",

"values": {

"min": 0.75,

"max": 0.75

}

},

"percent": 100

}]

},

"accessories": {

"glasses": [{

"style": [

"female_square_sunglasses"

],

"lens_color": [

"default"

],

"transparency": {

"type": "range",

"values": {

"min": 1,

"max": 1

}

},

"metalness": {

"type": "range",

"values": {

"min": 0.03,

"max": 0.03

}

},

"sex_matched_only": true,

"percent": 100

}]

},

"gesture": [{

"name": [

"thumbs_up"

],

"keyframe_only": true,

"percent": 100

}],

"environment": {

"hdri": {

"name": [

"lythwood_lounge"

],

"intensity": {

"type": "list",

"values": [

0.1

]

}

}

},

"camera_and_light_rigs": [{

"type": "head_orbit",

"lights": [{

"type": "omni",

"color": {

"red": {

"type": "list",

"values": [142]

},

"blue": {

"type": "list",

"values": [234]

},

"green": {

"type": "list",

"values": [188]

}

},

"intensity": {

"type": "list",

"values": [0.11]

},

"wavelength": "visible",

"size_meters": {

"type": "list",

"values": [0.5]

},

"relative_location": {

"x": {

"type": "list",

"values": [0.03]

},

"y": {

"type": "list",

"values": [-0.33]

},

"z": {

"type": "list",

"values": [-0.63]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"pitch": {

"type": "list",

"values": [0]

}

}

}],

"cameras": [{

"name": "default",

"advanced": {

"denoise": true,

"noise_threshold": 0.017

},

"specifications": {

"wavelength": "visible",

"focal_length": {

"type": "list",

"values": [150]

},

"resolution_h": 1024,

"resolution_w": 1024,

"sensor_width": {

"type": "list",

"values": [150]

}

}

}],

"location": {

"x": {

"type": "list",

"values": [0]

},

"y": {

"type": "list",

"values": [0]

},

"z": {

"type": "list",

"values": [0.85]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"pitch": {

"type": "list",

"values": [0]

}

}

}]

},

{

"identities": {

"ids": [

222

],

"renders_per_identity": 1

},

"skin": {

"highest_resolution": true

},

"facial_attributes": {

"facial_hair": [{

"style": [

"beard_dutch_01"

],

"match_hair_color": true,

"relative_length": {

"type": "list",

"values": [

0.5

]

},

"percent": 100

}],

"head_turn": [{

"yaw": {

"type": "list",

"values": [

-30

]

},

"percent": 100

}]

},

"environment": {

"hdri": {

"name": [

"lebombo"

]

}

},

"gesture": [{

"name": [

"hand_talking_3"

],

"keyframe_only": true,

"percent": 100

}],

"camera_and_light_rigs": [{

"cameras": [{

"name": "default",

"specifications": {

"focal_length": {

"type": "list",

"values": [

150.0

]

},

"sensor_width": {

"type": "list",

"values": [

150.0

]

},

"wavelength": "visible",

"resolution_w": 1280,

"resolution_h": 960

}

}],

"lights": [{

"wavelength": "visible"

}],

"location": {

"z": {

"type": "list",

"values": [

0.55

]

}

},

"type": "head_orbit_with_tracking"

}]

},

{

"identities": {

"ids": [

55

],

"renders_per_identity": 1

},

"skin": {

"highest_resolution": true

},

"facial_attributes": {

"head_turn": [{

"yaw": {

"type": "list",

"values": [

30

]

},

"percent": 100

}]

},

"environment": {

"hdri": {

"name": [

"lebombo"

]

}

},

"gesture": [{

"name": [

"ok"

],

"keyframe_only": true,

"percent": 100

}],

"camera_and_light_rigs": [{

"cameras": [{

"name": "default",

"specifications": {

"focal_length": {

"type": "list",

"values": [

150.0

]

},

"sensor_width": {

"type": "list",

"values": [

150.0

]

},

"wavelength": "visible",

"resolution_w": 1280,

"resolution_h": 960

}

}],

"lights": [{

"wavelength": "visible"

}],

"location": {

"z": {

"type": "list",

"values": [

0.55

]

}

},

"type": "head_orbit_with_tracking"

}]

},

{

"identities": {

"ids": [

222

],

"renders_per_identity": 1

},

"skin": {

"highest_resolution": true

},

"facial_attributes": {

"facial_hair": [{

"style": [

"full_generic_01"

],

"match_hair_color": true,

"relative_length": {

"type": "list",

"values": [

0.5

]

},

"percent": 100

}],

"expression": [{

"name": [

"eyes_squint"

],

"intensity": {

"type": "range",

"values": {

"min": 0.75,

"max": 0.75

}

},

"percent": 100

}]

},

"environment": {

"hdri": {

"name": [

"lebombo"

]

}

},

"camera_and_light_rigs": [{

"cameras": [{

"name": "default",

"specifications": {

"focal_length": {

"type": "list",

"values": [

150.0

]

},

"sensor_width": {

"type": "list",

"values": [

150.0

]

},

"wavelength": "visible",

"resolution_w": 1280,

"resolution_h": 960

}

}],

"lights": [{

"wavelength": "visible"

}],

"location": {

"z": {

"type": "list",

"values": [

0.45

]

}

}

}]

},

{

"identities": {

"ids": [

55

],

"renders_per_identity": 1

},

"skin": {

"highest_resolution": true

},

"facial_attributes": {

"expression": [{

"name": [

"eyes_squint"

],

"intensity": {

"type": "range",

"values": {

"min": 0.75,

"max": 0.75

}

},

"percent": 100

}]

},

"environment": {

"hdri": {

"name": [

"lebombo"

]

}

},

"camera_and_light_rigs": [{

"cameras": [{

"name": "default",

"specifications": {

"focal_length": {

"type": "list",

"values": [

150.0

]

},

"sensor_width": {

"type": "list",

"values": [

150.0

]

},

"wavelength": "visible",

"resolution_w": 1280,

"resolution_h": 960

}

}],

"lights": [{

"wavelength": "visible"

}],

"location": {

"z": {

"type": "list",

"values": [

0.45

]

}

}

}]

}

]

}
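Before submitting a job, it can help to check that the mix of features matches the percentages you intended. The following is a minimal Python sketch, not part of the synthesisai library: `feature_coverage` is a hypothetical helper that reports the share of "humans" entries configuring each feature. It assumes every entry renders the same number of images and uses "percent": 100, as in the quickstart above.

```python
import json


def feature_coverage(job: dict) -> dict:
    """Return the percentage of "humans" entries that configure each feature."""
    humans = job["humans"]
    # Each check looks for a key path used in the quickstart JSON.
    checks = {
        "glasses": lambda h: "glasses" in h.get("accessories", {}),
        "headphones": lambda h: "headphones" in h.get("accessories", {}),
        "expression": lambda h: "expression" in h.get("facial_attributes", {}),
        "facial_hair": lambda h: "facial_hair" in h.get("facial_attributes", {}),
        "head_turn": lambda h: "head_turn" in h.get("facial_attributes", {}),
        "gesture": lambda h: "gesture" in h,
    }
    return {
        name: 100.0 * sum(1 for h in humans if check(h)) / len(humans)
        for name, check in checks.items()
    }


# A trimmed-down job with the same top-level shape as the quickstart.
example_job = json.loads("""
{
  "humans": [
    {"identities": {"ids": [293], "renders_per_identity": 1},
     "accessories": {"glasses": [{"percent": 100}]},
     "gesture": [{"name": ["thumbs_up"], "keyframe_only": true, "percent": 100}]},
    {"identities": {"ids": [222], "renders_per_identity": 1},
     "facial_attributes": {"head_turn": [{"percent": 100}]}}
  ]
}
""")

coverage = feature_coverage(example_job)
print(coverage)
```

Parsing the file with `json.loads` first also catches syntax slips such as a missing or trailing comma before the job is submitted.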

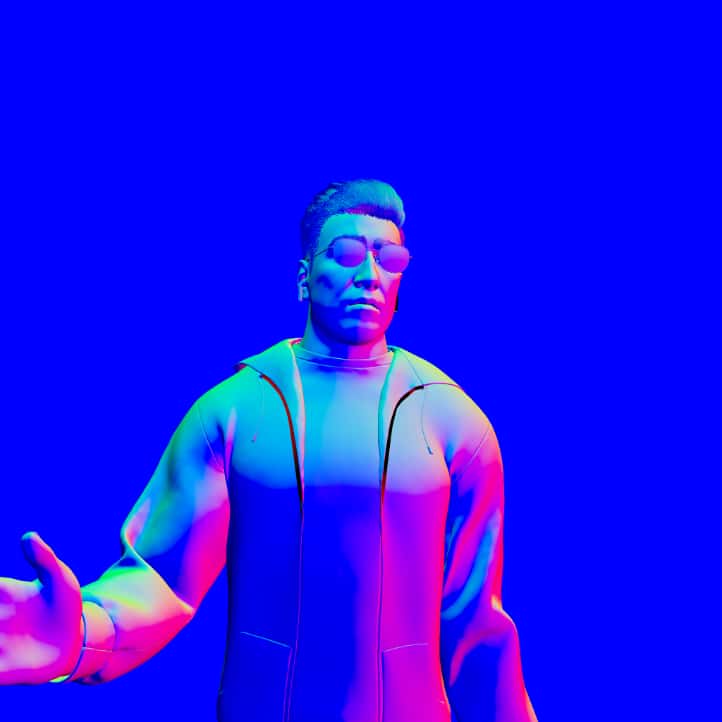

Visual examples of Hello Teleconferencing World quickstart:

Camera Pose

Three of the most common positions for the camera in the web conferencing scenario are:

- Desktop/External Monitor Camera

- Laptop Camera

- Mobile Camera

A camera on a desktop or external monitor typically sits a little above the person and angles down. A vertical angle of 0° to -15° usually works. You'll also want to offset the camera vertically so the subject sits higher in the image (see camera location documentation).

A laptop camera, on the other hand, pivots with the lid, which produces a range of effects. The subject is likely above the camera, so a vertical angle of 0° to +20° usually works (see camera location documentation).

A mobile phone presents the most interesting case: the camera is usually in front of the face, but the person has often turned their head relative to their body. In this case, we suggest enabling a wide variety of head_turn values, setting the camera's "follow_head_orientation" to true, and then adding vertical and horizontal camera angle ranges of +/-20°. With "follow_head_orientation", the camera first follows the front of the face as the head turn is applied; the additional vertical and horizontal camera range is applied afterwards.
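The three camera positions can be sketched as presets that generate the rig's "location" block. This is an illustrative Python sketch under several assumptions: `fixed`, `between`, and `camera_rig` are hypothetical helper names, the "vertical angle" is assumed to map to "pitch" and the horizontal angle to "yaw", and the "head_orbit_with_tracking" rig type (taken from the JSON examples in this document) is assumed to provide the head-following behavior described above. The z distance of 0.55 is copied from those examples.

```python
def fixed(value):
    """Wrap a single value in the "list" distribution shape used by the API JSON."""
    return {"type": "list", "values": [value]}


def between(low, high):
    """Wrap a min/max pair in the "range" distribution shape used by the API JSON."""
    return {"type": "range", "values": {"min": low, "max": high}}


def camera_rig(preset: str) -> dict:
    """Build a camera_and_light_rigs entry for a conferencing camera preset."""
    if preset == "desktop":
        # Camera above the subject, angled down: vertical angle 0 to -15.
        rig_type, pitch, yaw = "head_orbit", between(-15, 0), fixed(0)
    elif preset == "laptop":
        # Subject above the camera: vertical angle 0 to +20.
        rig_type, pitch, yaw = "head_orbit", between(0, 20), fixed(0)
    elif preset == "mobile":
        # Camera follows the head, then varies +/-20 degrees on both axes.
        rig_type, pitch, yaw = (
            "head_orbit_with_tracking", between(-20, 20), between(-20, 20)
        )
    else:
        raise ValueError(f"unknown preset: {preset}")
    return {
        "type": rig_type,
        "location": {
            "pitch": pitch,
            "yaw": yaw,
            "roll": fixed(0),
            "x": fixed(0),
            "y": fixed(0),
            "z": fixed(0.55),
        },
    }


laptop_rig = camera_rig("laptop")
mobile_rig = camera_rig("mobile")
```

A "cameras" list and any "lights" would still need to be added to each rig before use, as in the full JSON examples.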

JSON example of camera on a desktop/external monitor

"facial_attributes": {

"head_turn": [{

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"percent": 100

}]

},

"camera_and_light_rigs": [{

"type": "head_orbit",

"location": {

"pitch": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"yaw": {

"type": "range",

"values": {

"min": -9.5,

"max": -9.5

}

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"y": {

"type": "range",

"values": {

"min": 0.13,

"max": 0.13

}

},

"z": {

"type": "list",

"values": [0.55]

}

},

"cameras": [{

"name": "default",

"specifications": {

"resolution_h": 960,

"resolution_w": 1280,

"focal_length": {

"type": "list",

"values": [150.0]

},

"sensor_width": {

"type": "list",

"values": [150.0]

},

"wavelength": "visible"

},

"relative_location": {

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "list",

"values": [0]

},

"y": {

"type": "list",

"values": [0]

},

"z": {

"type": "list",

"values": [0]

}

},

"advanced": {

"denoise": true,

"noise_threshold": 0.017,

"lens_shift_vertical": {

"type": "list",

"values": [0]

},

"lens_shift_horizontal": {

"type": "list",

"values": [0]

},

"window_offset_vertical": {

"type": "list",

"values": [0]

},

"window_offset_horizontal": {

"type": "list",

"values": [0]

}

}

}]

}]

JSON example of laptop camera

...

"facial_attributes": {

"head_turn": [{

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"percent": 100

}]

},

"camera_and_light_rigs": [{

"type": "head_orbit",

"location": {

"pitch": {

"type": "range",

"values": {

"min": 14,

"max": 14

}

},

"yaw": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"y": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"z": {

"type": "list",

"values": [0.55]

}

},

"cameras": [{

"name": "default",

"specifications": {

"resolution_h": 960,

"resolution_w": 1280,

"focal_length": {

"type": "list",

"values": [150.0]

},

"sensor_width": {

"type": "list",

"values": [150.0]

},

"wavelength": "visible"

},

"relative_location": {

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "list",

"values": [0]

},

"y": {

"type": "list",

"values": [0]

},

"z": {

"type": "list",

"values": [0]

}

},

"advanced": {

"denoise": true,

"noise_threshold": 0.017,

"lens_shift_vertical": {

"type": "list",

"values": [0]

},

"lens_shift_horizontal": {

"type": "list",

"values": [0]

},

"window_offset_vertical": {

"type": "list",

"values": [0]

},

"window_offset_horizontal": {

"type": "list",

"values": [0]

}

}

}]

}]...

JSON example of mobile phone camera

...

"facial_attributes": {

"head_turn": [{

"pitch": {

"type": "list",

"values": [-20]

},

"yaw": {

"type": "list",

"values": [-20]

},

"roll": {

"type": "list",

"values": [0]

},

"percent": 100

}]

},

"camera_and_light_rigs": [{

"type": "head_orbit_with_tracking",

"location": {

"pitch": {

"type": "range",

"values": {

"min": 5.76,

"max": 5.76

}

},

"yaw": {

"type": "range",

"values": {

"min": -11.6,

"max": -11.6

}

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"y": {

"type": "range",

"values": {

"min": 0,

"max": 0

}

},

"z": {

"type": "list",

"values": [0.55]

}

},

"cameras": [{

"name": "default",

"specifications": {

"resolution_h": 960,

"resolution_w": 1280,

"focal_length": {

"type": "list",

"values": [150.0]

},

"sensor_width": {

"type": "list",

"values": [150.0]

},

"wavelength": "visible"

},

"relative_location": {

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"x": {

"type": "list",

"values": [0]

},

"y": {

"type": "list",

"values": [0]

},

"z": {

"type": "list",

"values": [0]

}

},

"advanced": {

"denoise": true,

"noise_threshold": 0.017,

"lens_shift_vertical": {

"type": "list",

"values": [0]

},

"lens_shift_horizontal": {

"type": "list",

"values": [0]

},

"window_offset_vertical": {

"type": "list",

"values": [0]

},

"window_offset_horizontal": {

"type": "list",

"values": [0]

}

}

}]

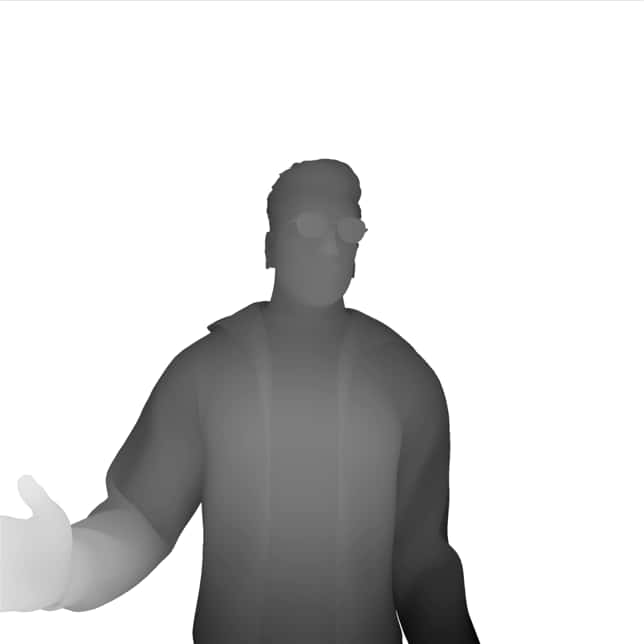

}]Visual examples of teleconferencing camera angles:

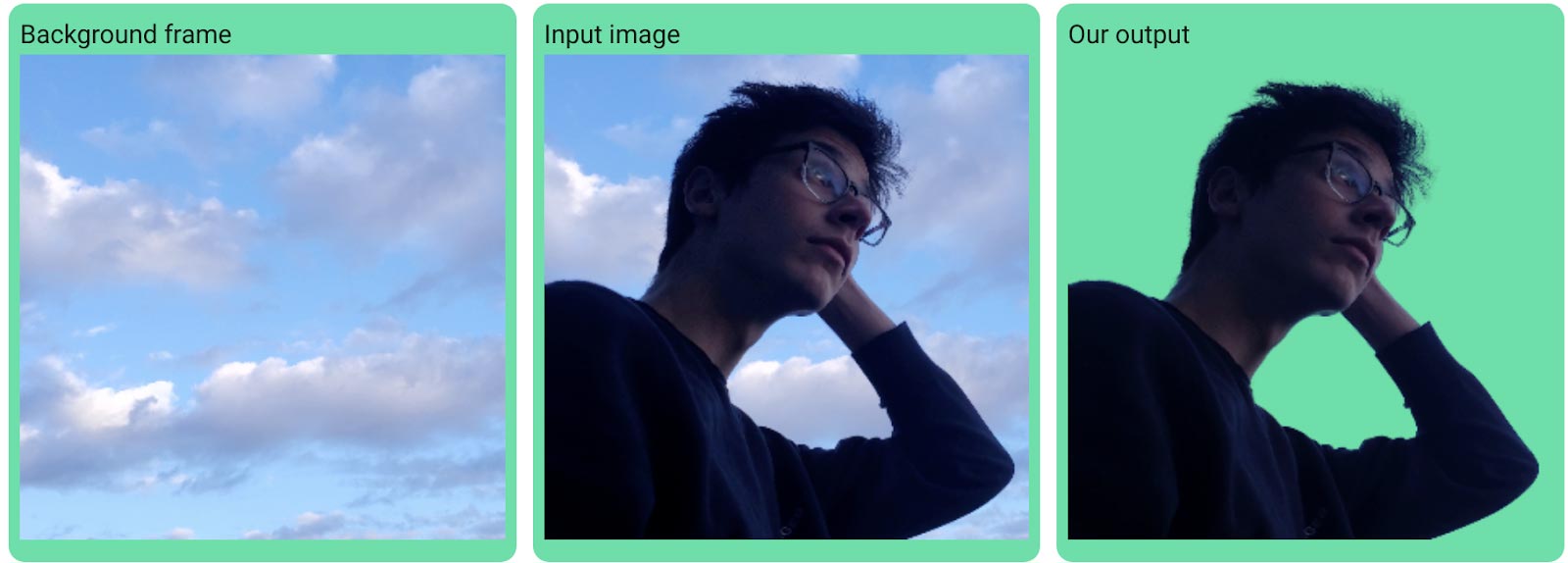

Matting

Background matting, the task of cutting out a foreground person from any background, is a key computer vision problem in the current era of online videoconferencing. The complexity of the machine vision task lies in predicting not just a binary segmentation mask but also the alpha (opacity) value, so the result is a “soft” segmentation mask with values in the [0, 1] range. This is very important for blending the subject cleanly into new backgrounds.
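To see why the soft alpha value matters, consider how a matte is used to place the subject on a new background. The sketch below is plain Python for illustration only; `composite_pixel` and `composite_image` are hypothetical names, and images are represented as nested lists of RGB tuples rather than any particular library's format. Each output pixel is alpha × foreground + (1 - alpha) × background.

```python
def composite_pixel(foreground, background, alpha):
    """Blend one RGB pixel: alpha * foreground + (1 - alpha) * background."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(
        alpha * f + (1.0 - alpha) * b for f, b in zip(foreground, background)
    )


def composite_image(foreground, background, matte):
    """Blend two same-sized images (rows of RGB tuples) with a per-pixel matte."""
    return [
        [composite_pixel(f, b, a) for f, b, a in zip(f_row, b_row, a_row)]
        for f_row, b_row, a_row in zip(foreground, background, matte)
    ]


# One row of three pixels: solid subject, a wispy hair strand, pure background.
foreground = [[(200, 100, 0), (200, 100, 0), (200, 100, 0)]]
background = [[(0, 0, 100), (0, 0, 100), (0, 0, 100)]]
matte = [[1.0, 0.5, 0.0]]

result = composite_image(foreground, background, matte)
print(result)
```

The middle pixel, with an alpha of 0.5, comes out as an even mix of subject and background. A binary mask would force that pixel to one side or the other, which is what produces hard, jagged edges around hair.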

We provide a sample JSON describing scenarios that are challenging for most models trained for matting:

- Loose strands of hair

- Multiple hand positions via gestures

- Environment diversity, both indoor and outdoor

- Emulating a “glow” from a monitor using directional lights

{

"humans": [

{

"identities": {

"ids": [

1870

],

"renders_per_identity": 1

},

"skin":{"highest_resolution":true},

"facial_attributes": {

"expression": [{

"name": [

"eyes_squint"

],

"intensity": {

"type": "range",

"values": {

"min": 0.75,

"max": 0.75

}

},

"percent": 100

}]

},

"accessories": {

"glasses": [{

"style": [

"male_metal_aviator"

],

"lens_color": [

"default"

],

"transparency": {

"type": "range",

"values": {

"min": 1,

"max": 1

}

},

"metalness": {

"type": "range",

"values": {

"min": 0.03,

"max": 0.03

}

},

"sex_matched_only": true,

"percent": 100

}],

"headphones": [{

"style": [

"all"

],

"percent": 100

}]

},

"gesture": [{

"name": [

"screen_wipe"

],

"keyframe_only": true,

"percent": 100

}],

"environment": {

"hdri": {

"name": [

"st_fagans_interior"

],

"intensity": {

"type": "list",

"values": [

0.1

]

}

}

},

"camera_and_light_rigs": [{

"type": "head_orbit",

"lights": [{

"type": "omni",

"color": {

"red": {"type": "list","values": [142]},

"blue": {"type": "list","values": [234]},

"green": {"type": "list","values": [188]}

},

"intensity": {"type": "list","values": [0.11]},

"wavelength": "visible",

"size_meters": {"type": "list","values": [0.5]},

"relative_location": {

"x": {"type": "list","values": [0.03]},

"y": {"type": "list","values": [-0.33]},

"z": {"type": "list","values": [-0.63]},

"yaw": {"type": "list","values": [0]},

"roll": {"type": "list","values": [0]},

"pitch": {"type": "list","values": [0]}

}

}],

"cameras": [{

"name": "default",

"advanced": {

"denoise": true,

"noise_threshold": 0.017

},

"specifications": {

"wavelength": "visible",

"focal_length": {"type": "list","values": [150]},

"resolution_h": 1024,

"resolution_w": 1024,

"sensor_width": {"type": "list","values": [150]}

}

}],

"location": {

"x": {"type": "list","values": [0]},

"y": {"type": "list","values": [0]},

"z": {"type": "list","values": [0.85]},

"yaw": {"type": "list","values": [0]},

"roll": {"type": "list","values": [0]},

"pitch": {"type": "list","values": [0]}

}

}]

},

{

"identities": {

"ids": [

1749

],

"renders_per_identity": 1

},

"skin":{"highest_resolution":true},

"facial_attributes": {

"expression": [{

"name": [

"eyes_squint"

],

"intensity": {

"type": "range",

"values": {

"min": 0.75,

"max": 0.75

}

},

"percent": 100

}]

},

"accessories": {

"glasses": [{

"style": [

"female_cat_eye_frame_in_tortoise"

],

"lens_color": [

"default"

],

"transparency": {

"type": "range",

"values": {

"min": 1,

"max": 1

}

},

"metalness": {

"type": "range",

"values": {

"min": 0.03,

"max": 0.03

}

},

"sex_matched_only": true,

"percent": 100

}]

},

"gesture": [{

"name": [

"thumbs_up"

],

"keyframe_only": true,

"percent": 100

}],

"environment": {

"hdri": {

"name": [

"colorful_studio"

],

"intensity": {

"type": "list",

"values": [

0.1

]

}

}

},

"camera_and_light_rigs": [{

"type": "head_orbit",

"lights": [{

"type": "omni",

"color": {

"red": {"type": "list","values": [142]},

"blue": {"type": "list","values": [234]},

"green": {"type": "list","values": [188]}

},

"intensity": {"type": "list","values": [0.11]},

"wavelength": "visible",

"size_meters": {"type": "list","values": [0.5]},

"relative_location": {

"x": {"type": "list","values": [0.03]},

"y": {"type": "list","values": [-0.33]},

"z": {"type": "list","values": [-0.63]},

"yaw": {"type": "list","values": [0]},

"roll": {"type": "list","values": [0]},

"pitch": {"type": "list","values": [0]}

}

}],

"cameras": [{

"name": "default",

"advanced": {

"denoise": true,

"noise_threshold": 0.017

},

"specifications": {

"wavelength": "visible",

"focal_length": {"type": "list","values": [150]},

"resolution_h": 1024,

"resolution_w": 1024,

"sensor_width": {"type": "list","values": [150]}

}

}],

"location": {

"x": {"type": "list","values": [0]},

"y": {"type": "list","values": [0]},

"z": {"type": "list","values": [0.85]},

"yaw": {"type": "list","values": [0]},

"roll": {"type": "list","values": [0]},

"pitch": {"type": "list","values": [0]}

}

}]

}

]

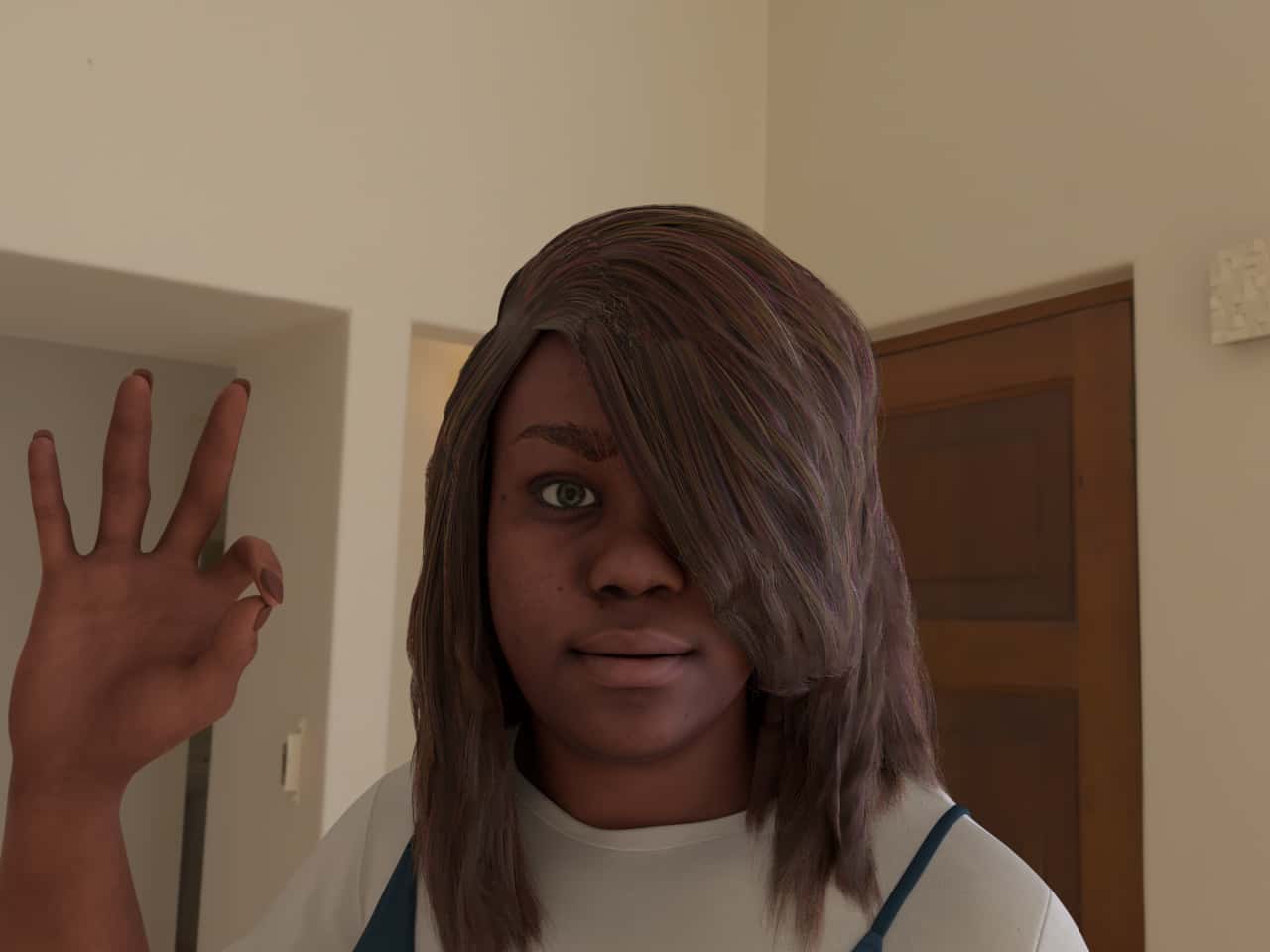

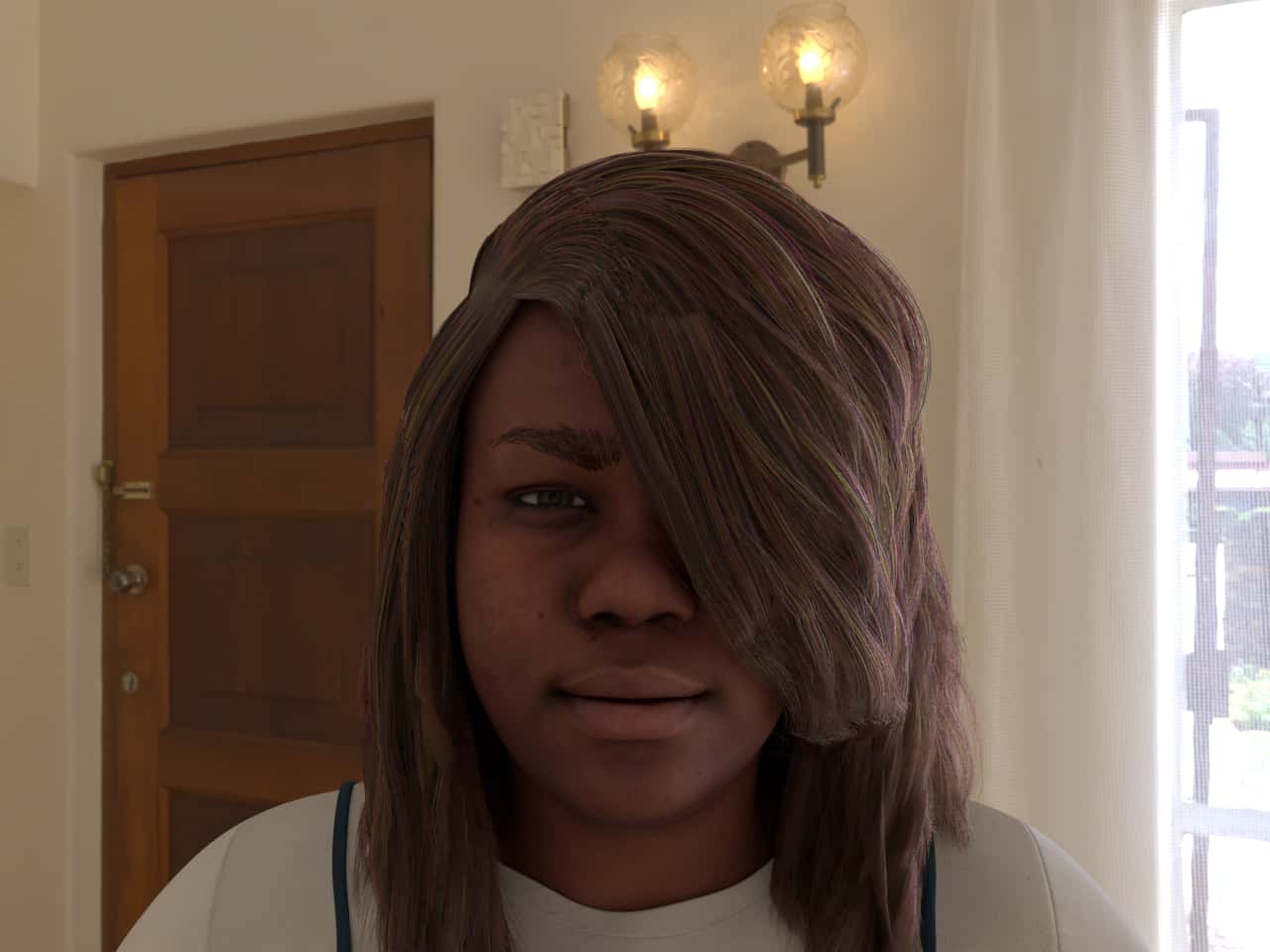

}Visual teleconferencing matte examples:

Gesture Recognition

More advanced videoconferencing systems employ gesture recognition as part of contextual scene understanding and transcription. Our API provides both static gestures and full-animation gesture sequences that can be combined with all other API features.

For example, you can combine a "wave_hello" with every type of skin tone and lighting environment to ensure your model is fully robust in its detection of a waving gesture.

On the output side, we provide sub-segmentation as well as both face and body 3D landmarks.

The following JSON example shows 11 static image gestures. For a full animation output, set "keyframe_only" to false.

JSON example of using 11 gestures

"gesture": [{

"name": [

"answer_phone_talk",

"two_hand_phone_usage",

"high_five",

"hand_talking_4",

"stretch",

"screen_wipe",

"writing_horizontal",

"hand_talking_1",

"pointing_vertical_surface",

"air_swipe",

"hands_behind_head"

],

"keyframe_only": true,

"percent": 100

}]
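If you generate job files from a script, the gesture block can be built programmatically. The following is a minimal Python sketch: `gesture_block` is a hypothetical helper, not part of the synthesisai library, and it only reproduces the three fields shown in the JSON above. Its `animated` flag flips "keyframe_only" to false for full animation output.

```python
import json


def gesture_block(names, animated=False, percent=100):
    """Build a "gesture" entry; animated=True sets "keyframe_only" to false."""
    if not names:
        raise ValueError("at least one gesture name is required")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return [{
        "name": list(names),
        "keyframe_only": not animated,
        "percent": percent,
    }]


# Static keyframes for three of the gestures listed above.
static = gesture_block(["high_five", "screen_wipe", "air_swipe"])

# Full animation sequence for a single gesture.
animated = gesture_block(["high_five"], animated=True)
print(json.dumps(animated, indent=2))
```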

Visual examples of teleconferencing gestures:

Eye Gaze & Expressions

Participant attentiveness monitoring relies on gaze estimation. Our API provides programmatic control of eye gaze, which allows for limitless combinations of pupil direction, and the output comes with 3D gaze vectors and 3D landmarks.

These gazes are compatible with head turns and glasses, which all serve as effective confounds to train against.

JSON example of gaze direction

"facial_attributes": {

"gaze": [{

"horizontal_angle": { "type": "range", "values": {"min": -30, "max": 30 }},

"vertical_angle": { "type": "range", "values": {"min": -10.0, "max": 10.0 }},

"percent": 100

}]

...

}
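To relate the horizontal and vertical gaze angles to a 3D gaze direction, the conversion can be sketched as below. This is an illustration under an assumed coordinate convention (+x right, +y up, +z straight ahead), which may differ from the convention used in the API output; `gaze_vector` and `angle_from_forward` are hypothetical names.

```python
import math


def gaze_vector(horizontal_deg, vertical_deg):
    """Convert gaze angles in degrees to a unit 3D direction vector.

    Assumed convention (not taken from the API docs): +x is to the right,
    +y is up, +z points straight ahead, so angles (0, 0) give (0, 0, 1).
    """
    h = math.radians(horizontal_deg)
    v = math.radians(vertical_deg)
    x = math.cos(v) * math.sin(h)
    y = math.sin(v)
    z = math.cos(v) * math.cos(h)
    return (x, y, z)


def angle_from_forward(horizontal_deg, vertical_deg):
    """Angle in degrees between the gaze direction and straight ahead."""
    _, _, z = gaze_vector(horizontal_deg, vertical_deg)
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, z))))


# The extreme corner of the ranges in the JSON example above.
corner = gaze_vector(30, 10)
off_axis = angle_from_forward(30, 10)
print(corner, off_axis)
```

A single off-axis angle like this is one possible input to an attentiveness heuristic, though any threshold would need to be tuned on your own data.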

JSON example of facial expressions

"facial_attributes": {

"expression": [{

"name": ["eyes_squint"],

"intensity": { "type": "range", "values": {"min": 0.75, "max": 0.75} },

"percent": 100

}]

...

}

Visual examples of eye gaze:

Visual examples of facial expressions: