Human API: Input Specifications

Overview

The Human API is a data-generation service that can create millions of labeled images of humans, with a specifiable variety of detailed humans placed into different contexts.

A request (also known as a “job”) is typically submitted through the command line client application provided by Synthesis AI or through the web interface. Submitting a job returns a job ID, which then appears in the web interface and is used as the reference for downloading images via the CLI. After the job is complete, the user receives an email confirming that the job and all of its tasks have completed.

Job requests are described in JSON format, and a single JSON can describe many scenes (aka tasks).

Workflow

The standard workflow for the Synthesis AI Human API is:

- Register Account, Download & Setup CLI

- Select Identities for the Human API with the Identities API

- Create Human API job with input JSON

- Download job output with CLI

- Parse outputs into your ML pipeline

Important Concepts

Percent Groups per Parameter

Almost all parameters take an array of settings groups. Each group carries a percent value, which is the share of scenes/images that the group's settings should apply to.

Example:

You might want mouth open & eyes closed to be applied to 70% of images, several other expressions to be applied to 20% of images, and then the remaining 10% of images having neutral.

Note: The percentage allocated across all groups in each section must add up to 100% — an error will be returned if they do not.

In order to accomplish this, 2 percent array elements would be specified, the first for 70% with certain expression settings, and the second for 20% of images with different expression settings. The remaining 10% of images would be implicitly the neutral expression, because that parameter would not be set on them.
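A job can be checked locally before it is submitted. The sketch below is a minimal, hypothetical helper (it is not part of the Synthesis AI CLI) and assumes a section such as accessories has been loaded into a Python dict that maps each parameter name to its array of percent groups. It reports any parameter whose percent values do not total 100, which is the rule stated in the note above; the service performs its own validation and may differ in detail.

```python
def percent_total(groups):
    """Return the sum of the `percent` fields in one parameter's group array."""
    return sum(group["percent"] for group in groups)


def check_percent_groups(section):
    """Map each parameter whose percent total is not 100 to that total."""
    return {
        name: percent_total(groups)
        for name, groups in section.items()
        if percent_total(groups) != 100
    }


# Style names are taken from the mask example in this document.
accessories = {
    "masks": [
        {"style": ["Duckbill", "DuckbillFlat"], "percent": 40},
        {"style": ["none"], "percent": 60},
    ]
}
incomplete = {"masks": [{"style": ["Duckbill"], "percent": 40}]}

print(check_percent_groups(accessories))  # {}
print(check_percent_groups(incomplete))   # {'masks': 40}
```

An empty result means every parameter in the section totals 100.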

Parameters with percent groups are:

- Body

- Clothing

- Expressions

- Gesture

- Head Hair

- Facial Hair

- Eye Gaze

- Eyebrows

- Head Turn

- Headphones

- Headwear

- Masks

- Glasses

Example JSON of a request with percent groups follows. In this example, 100 renders will be produced, with 40% of them containing 2 types of face masks, and 60% of them containing no face masks:

40% Have Masks, 60% No Masks

{

"version": 1,

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 100},

"accessories": {

"masks": [{

"style": ["Duckbill","DuckbillFlat"],

"percent": 40

},

{

"style": ["none"],

"percent": 60

}]

}

}

]

}

Value lists vs. Min/Max Ranges

For parameters with numerical settings, there are two types of collections of values that can be specified: the list, and the range/interval.

Lists are a discrete list of values (repeating allowed) that are randomly selected from for each scene, like [10, 20, 23]. They are not randomly “shuffled”, but rather randomly selected, so duplicates can occur.

To further control distribution in a list, values can be repeated in the proportion they are desired. For example, a list with values [3, 3, 3, 3, 4, 4] would have a two-to-one ratio (on average), of scenes with the value of 3 rather than 4 for that parameter.

Ranges, on the other hand, are specified with a min and a max value. Any valid number between the two, including the min and max values themselves, can be selected at random. The resulting distribution is usually uniform, and duplicates can occur.

Within a single percent array item, only a list or a min/max range can be used for a given parameter. However, two separate percent array items may use different choices for lists vs. ranges.
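To make the difference between the two collection types concrete, the following sketch draws values from a list spec and a range spec. The service's own sampler is not documented here, so Python's random module is used as a stand-in, and the helper name sample_value is hypothetical.

```python
import random


def sample_value(spec, rng=random):
    """Draw one value from a {"type": "list" | "range", "values": ...} spec."""
    if spec["type"] == "list":
        # Random selection with replacement, so duplicates can occur.
        return rng.choice(spec["values"])
    if spec["type"] == "range":
        bounds = spec["values"]
        return rng.uniform(bounds["min"], bounds["max"])
    raise ValueError(f"unknown spec type: {spec['type']!r}")


rng = random.Random(0)  # seeded only to make the illustration repeatable
as_list = {"type": "list", "values": [3, 3, 3, 3, 4, 4]}
as_range = {"type": "range", "values": {"min": 0.0, "max": 1.0}}

draws = [sample_value(as_list, rng) for _ in range(6000)]
print(draws.count(3) / draws.count(4))  # close to 2.0 on average
print(sample_value(as_range, rng))      # a float between 0.0 and 1.0
```

Repeating a value in the list raises its share of the draws, which is how the two-to-one ratio described above comes about.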

Example of a JSON request with value lists and ranges for a parameter in 2 different percent arrays follows. In this example, the intensity of the expressions for 20% of the renders will be randomly and evenly distributed between 0 and 1. In the other 80% of the renders, the intensity will be 0.25, 0.5, or 0.75, with 0.75 twice as likely as either of the other two values (because it appears twice in the list).

Value lists vs. Min/Max Ranges JSON Example

{

"version": 1,

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 100},

"facial_attributes": {

"expression": [{

"name": ["all"],

"intensity": {"type": "range","values": {"min": 0.0,"max": 1.0}},

"percent": 20

},

{

"name": ["all"],

"intensity": {"type": "list","values": [0.25, 0.5, 0.75, 0.75]},

"percent": 80

}]

}

}

]

}

Top Level Entries

The JSON request file has two parts: a version section and a humans array section. The version section specifies the version of the Human API to target and should be set to 1. The humans array is a collection of scene parameter groupings that describe how to adjust the subjects in the renders, as well as the camera and environment.

Full, minimal example of a valid request:

{

"version": 1,

"humans": [

{

"identities": {

"ids": [80],

"renders_per_identity": 1

}

}

]

}

Humans Array

The humans array is a list of sets of scenes to render. Each element of the array follows the specification documented in the following sections.

A minimal "multiple scene-set" JSON

{

"humans": [

{

"identities": {

"ids": [80],

"renders_per_identity": 1

}

},

{

"identities": {

"ids": [3],

"renders_per_identity": 1

}

}

]

}

Identities & Renders Per Identity

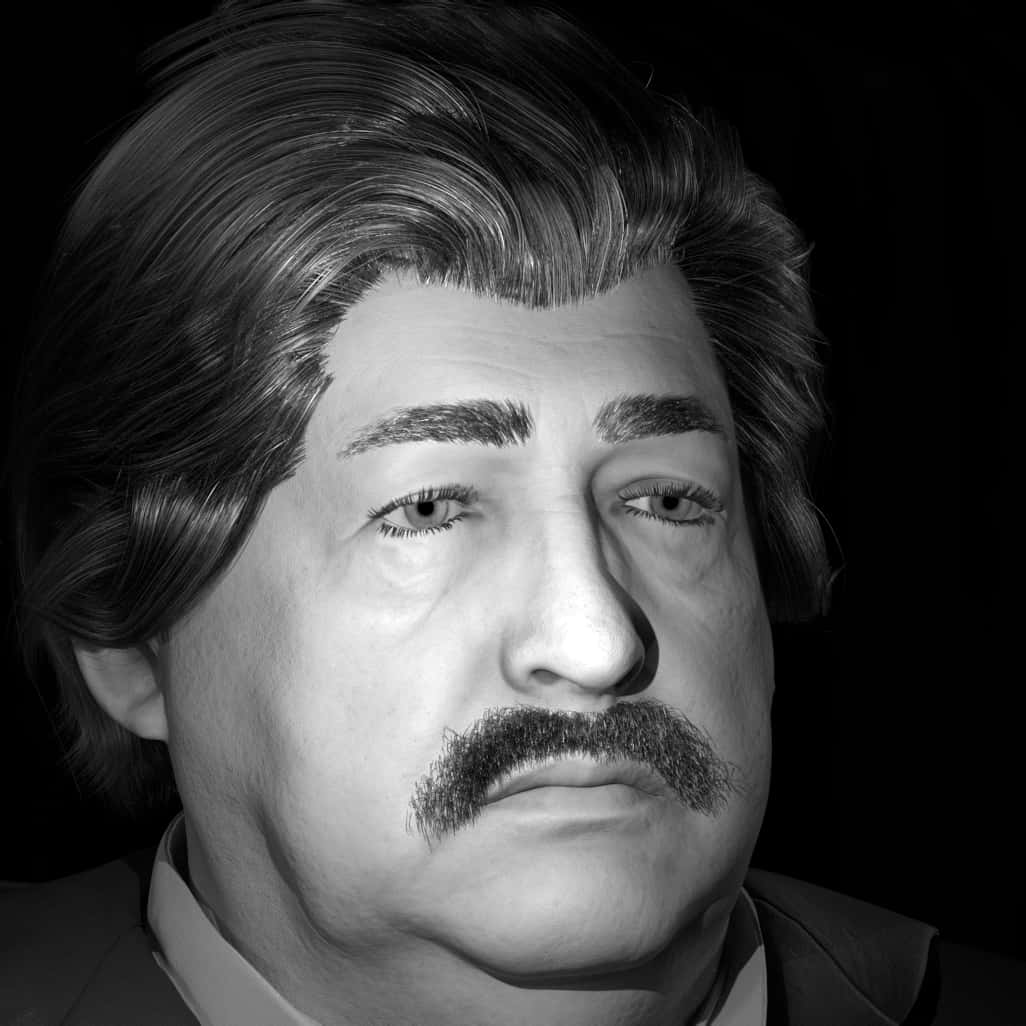

Our facial identities each have a numerical ID (e.g. 79, 80) that is consistent between runs. Each ID also carries consistent demographic information (sex, ethnicity, age) that is returned in the output. See more in the output documentation.

NOTE: IDs are not contiguous. Some IDs do not exist and will yield a job error if requested.

To get a list of IDs that match your demographic requirements, our command line tool provides a humans ids subcommand (read more) to query for ids matching a set of selection parameters.

One or more IDs can be sent in per scene specification (per humans array element). The total number of images generated for the array element will be, in pseudocode:

length(humans.identities.ids) x humans.identities.renders_per_identity.

Identities ids Array List

- Default: no default, required

- Note: maximum length of 10,000 values

Identities renders_per_identity Value

- Default: 1

- Min: 1

- Max: 1000
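The image count formula and the limits listed above can be sketched in Python as follows. The function names are hypothetical, the minimum of one ID is an assumption drawn from ids being required, and summing over every element of the humans array to get a job total is an inference from the per-element formula rather than a documented rule.

```python
def images_for_element(element):
    """Images for one humans array element: len(ids) * renders_per_identity."""
    identities = element["identities"]
    ids = identities["ids"]
    renders = identities.get("renders_per_identity", 1)  # documented default is 1
    if not 1 <= len(ids) <= 10_000:
        raise ValueError("ids must hold between 1 and 10,000 values")
    if not 1 <= renders <= 1000:
        raise ValueError("renders_per_identity must be between 1 and 1000")
    return len(ids) * renders


def images_for_job(job):
    """Total images across every element of the humans array (assumed additive)."""
    return sum(images_for_element(element) for element in job["humans"])


job = {
    "version": 1,
    "humans": [
        {"identities": {"ids": [80], "renders_per_identity": 100}},
        {"identities": {"ids": [3, 79]}},
    ],
}
print(images_for_job(job))  # 102
```

The first element contributes 1 x 100 images and the second contributes 2 x 1, using the default renders_per_identity.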

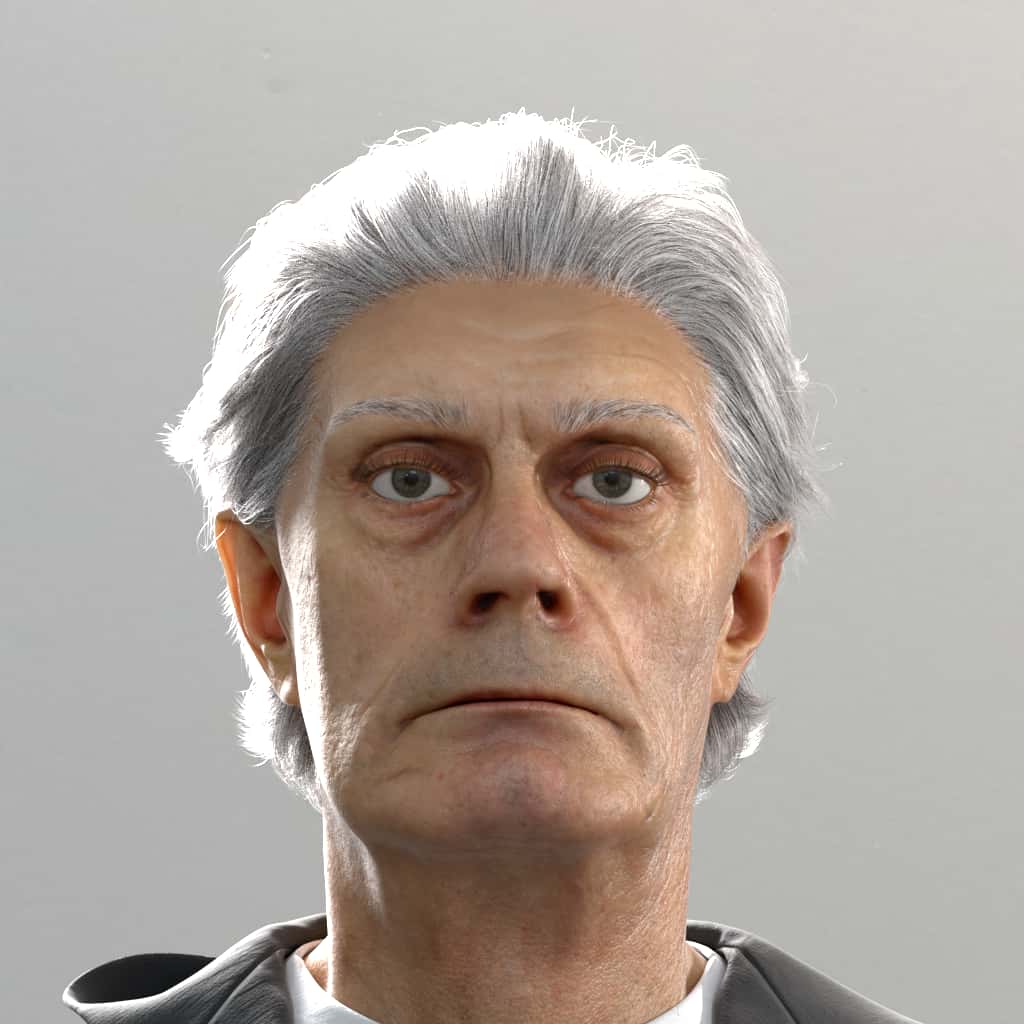

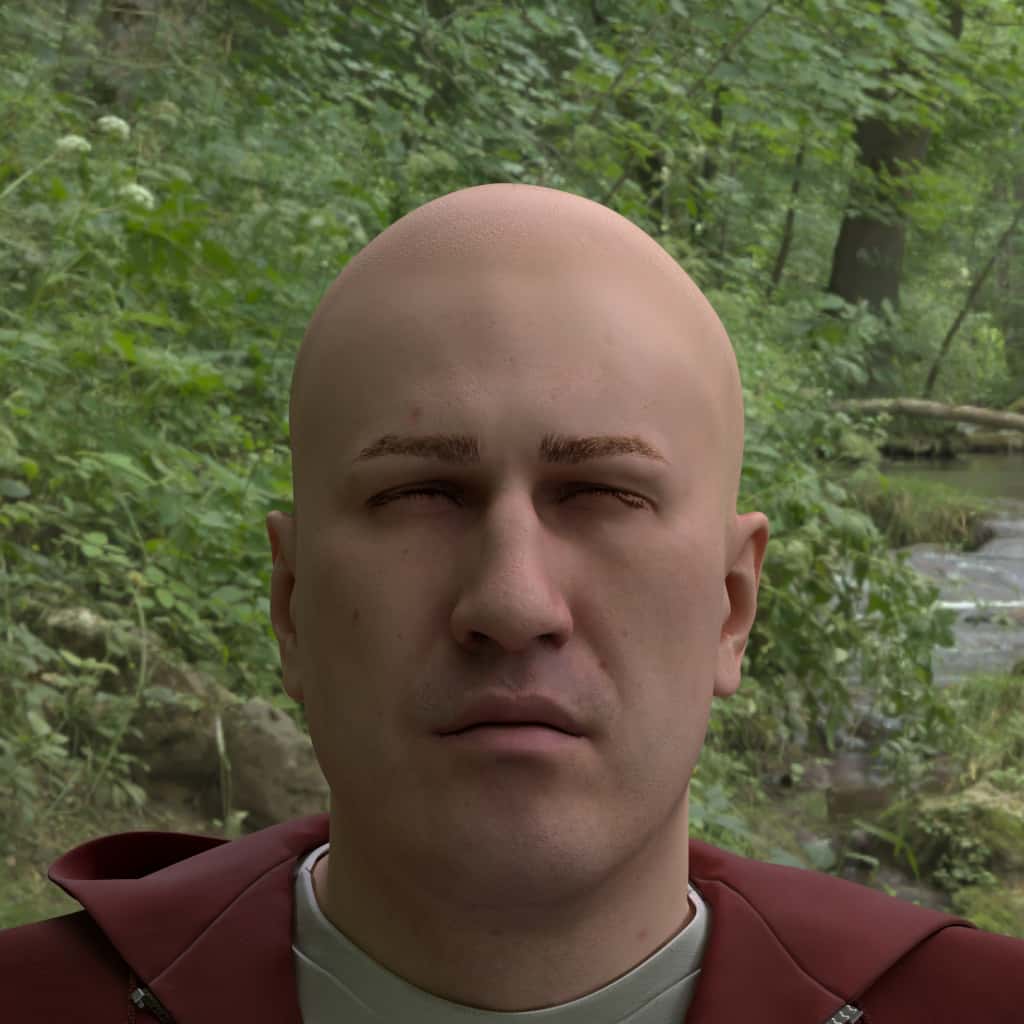

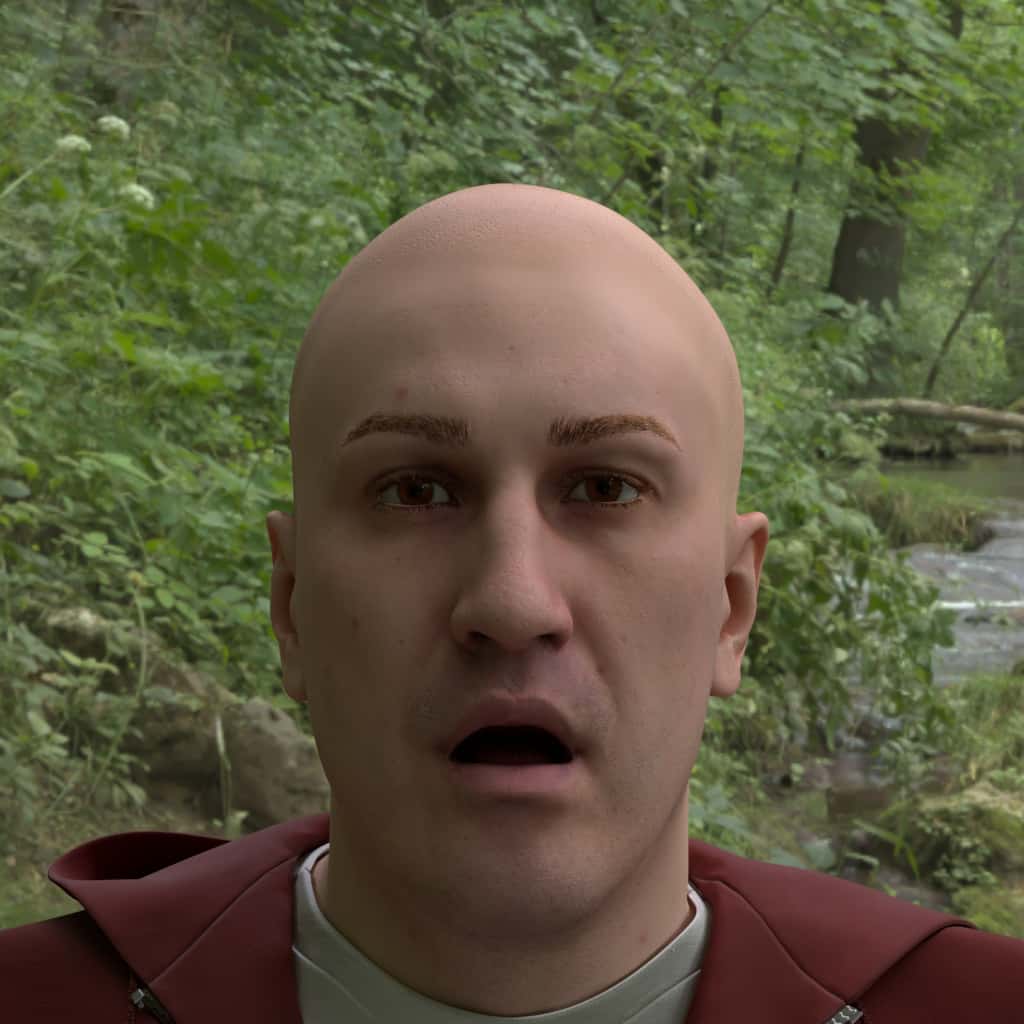

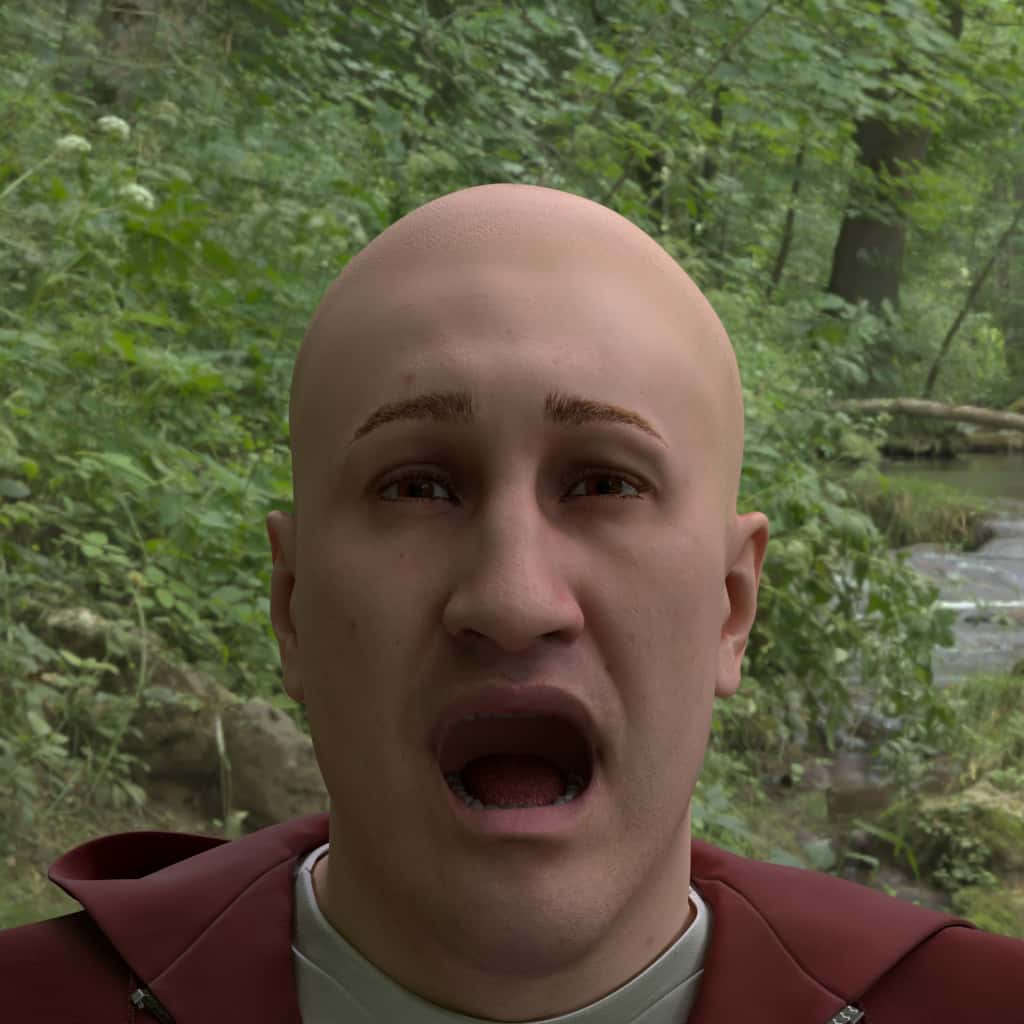

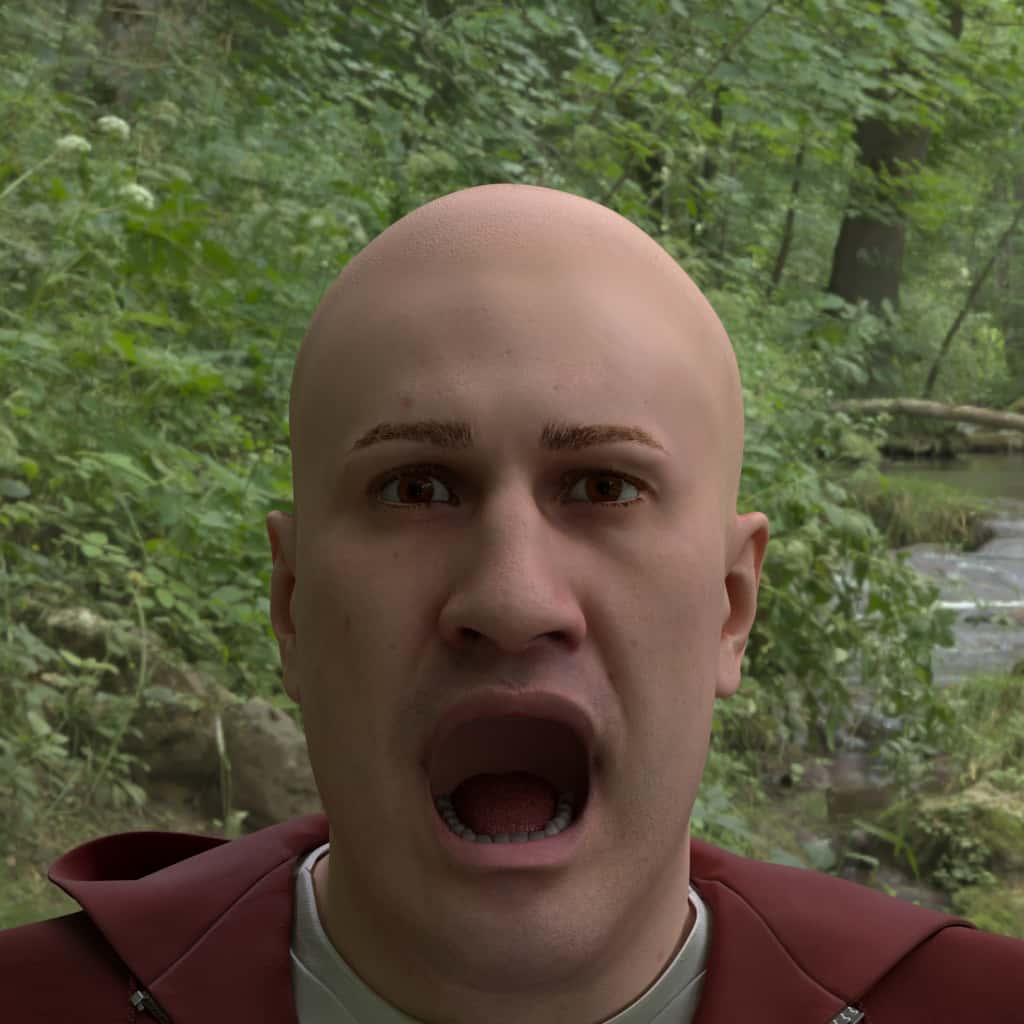

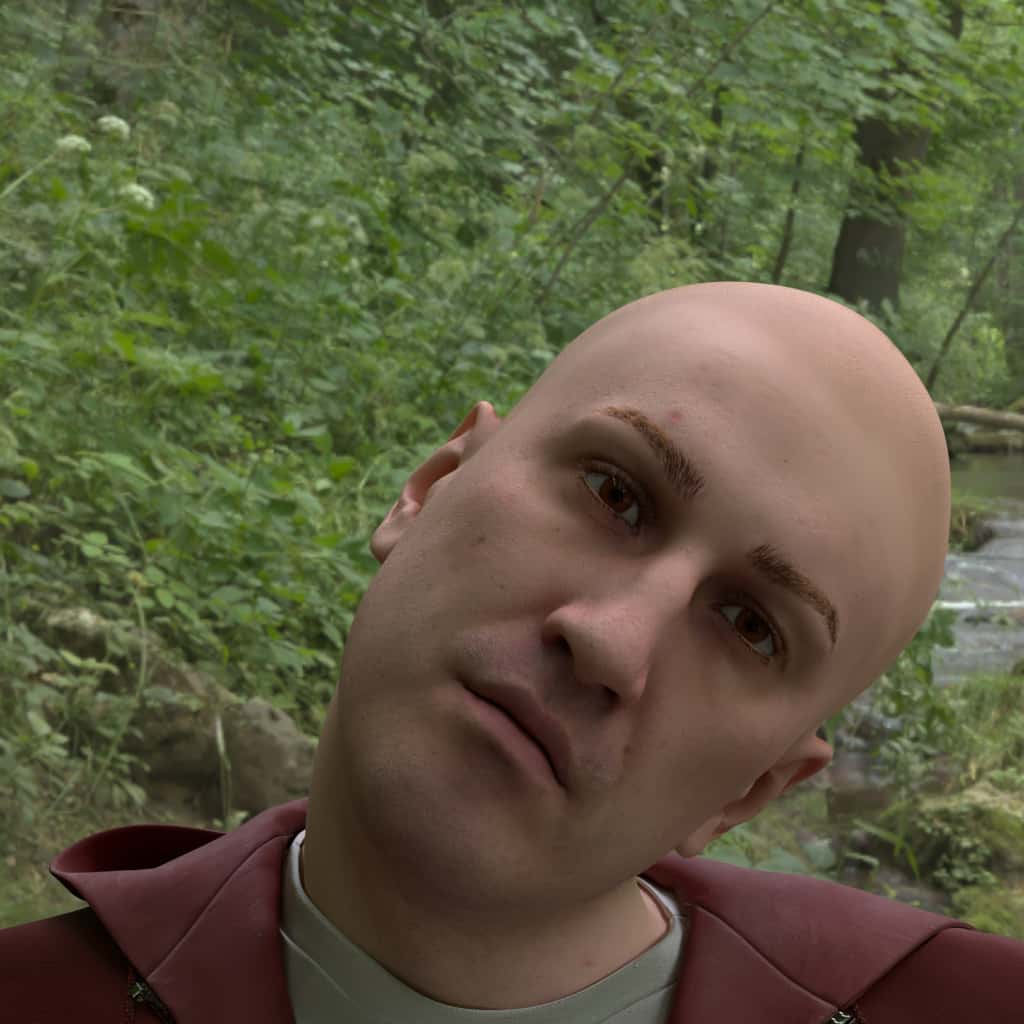

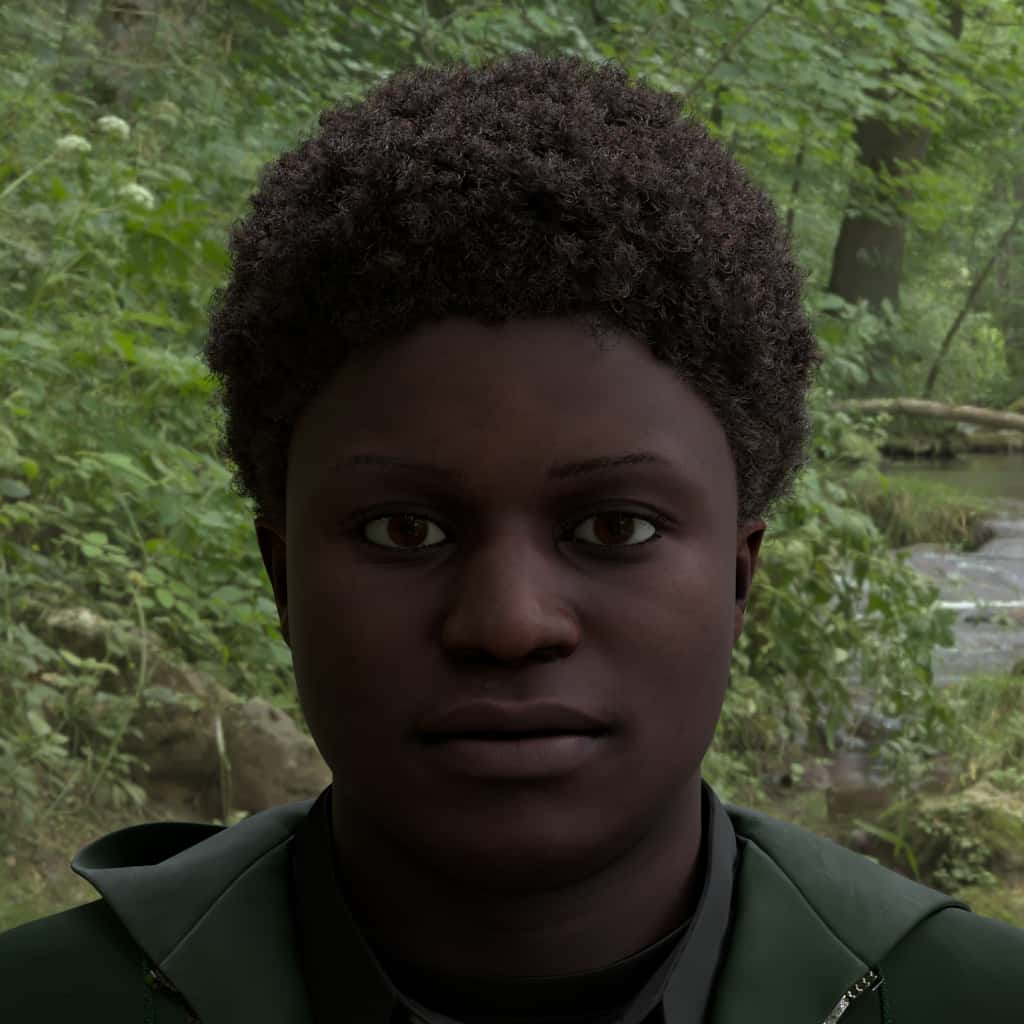

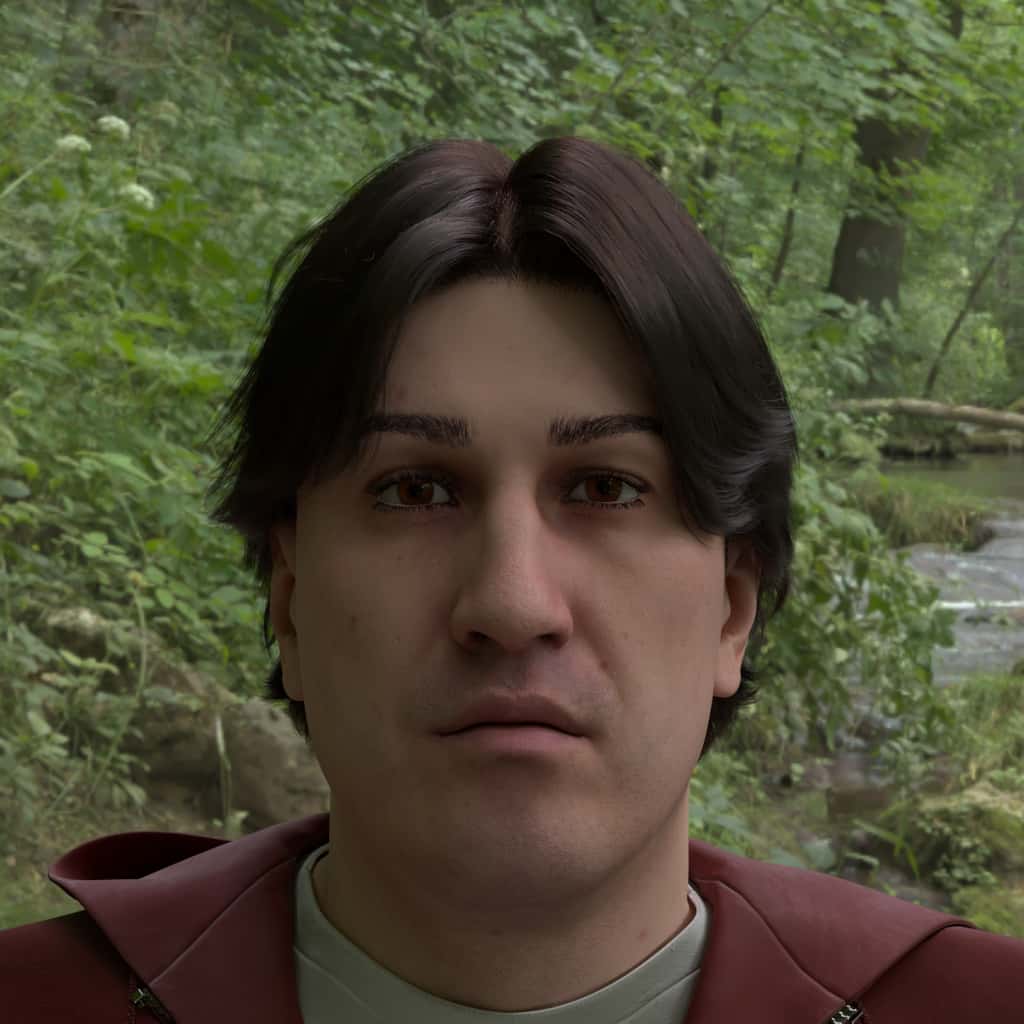

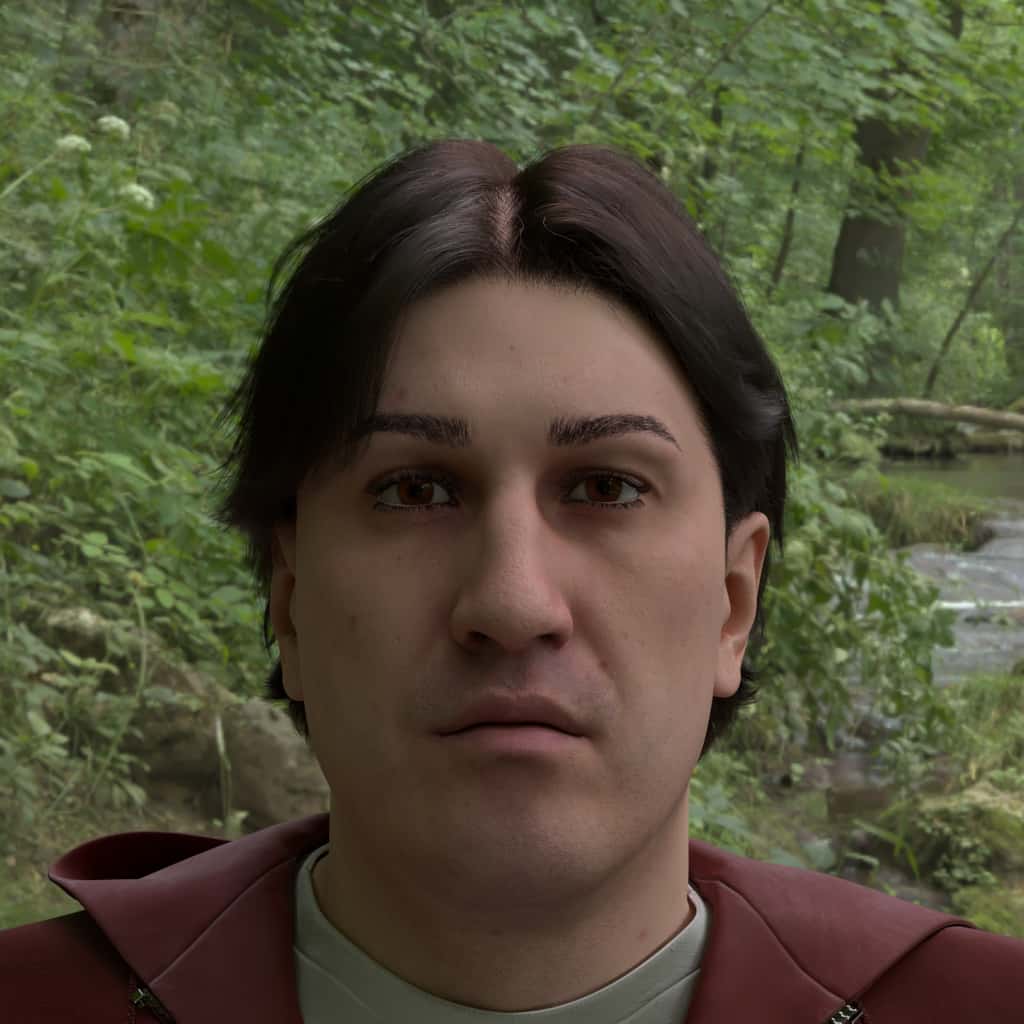

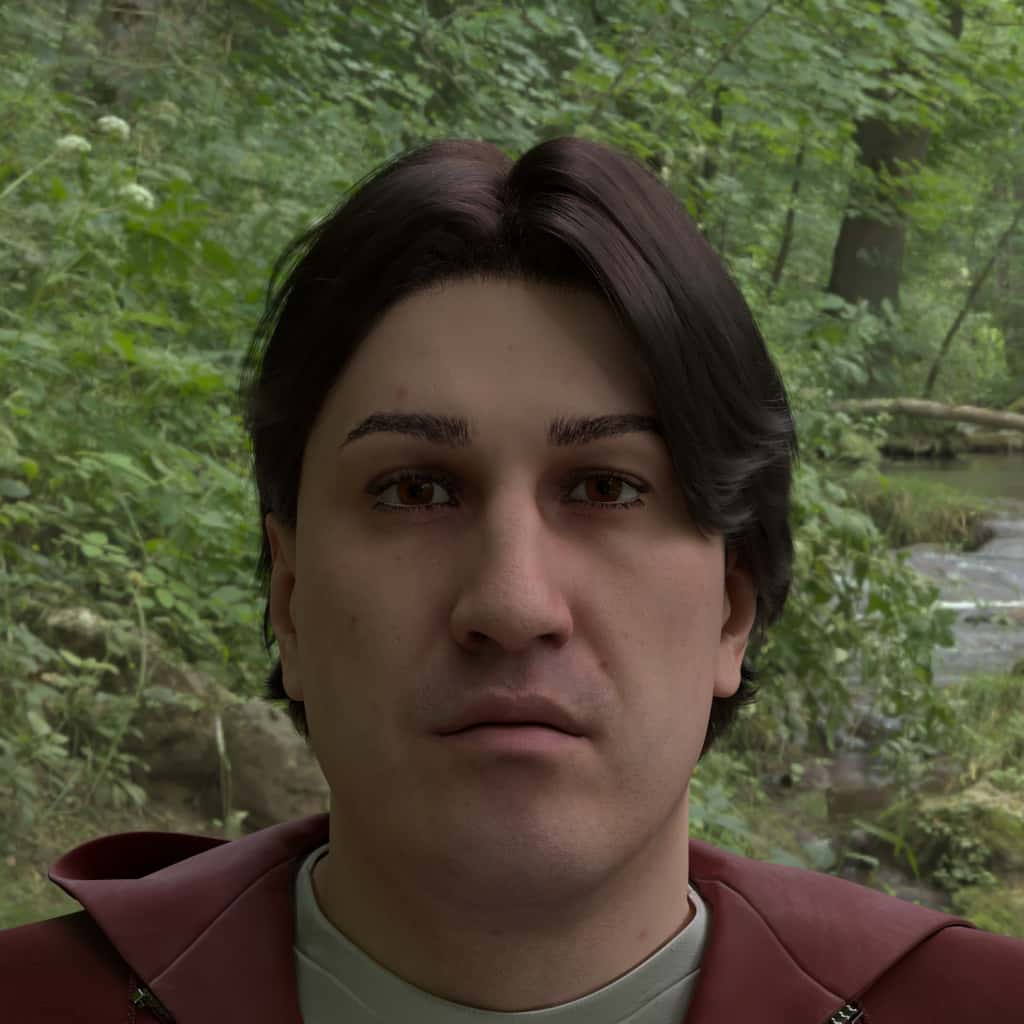

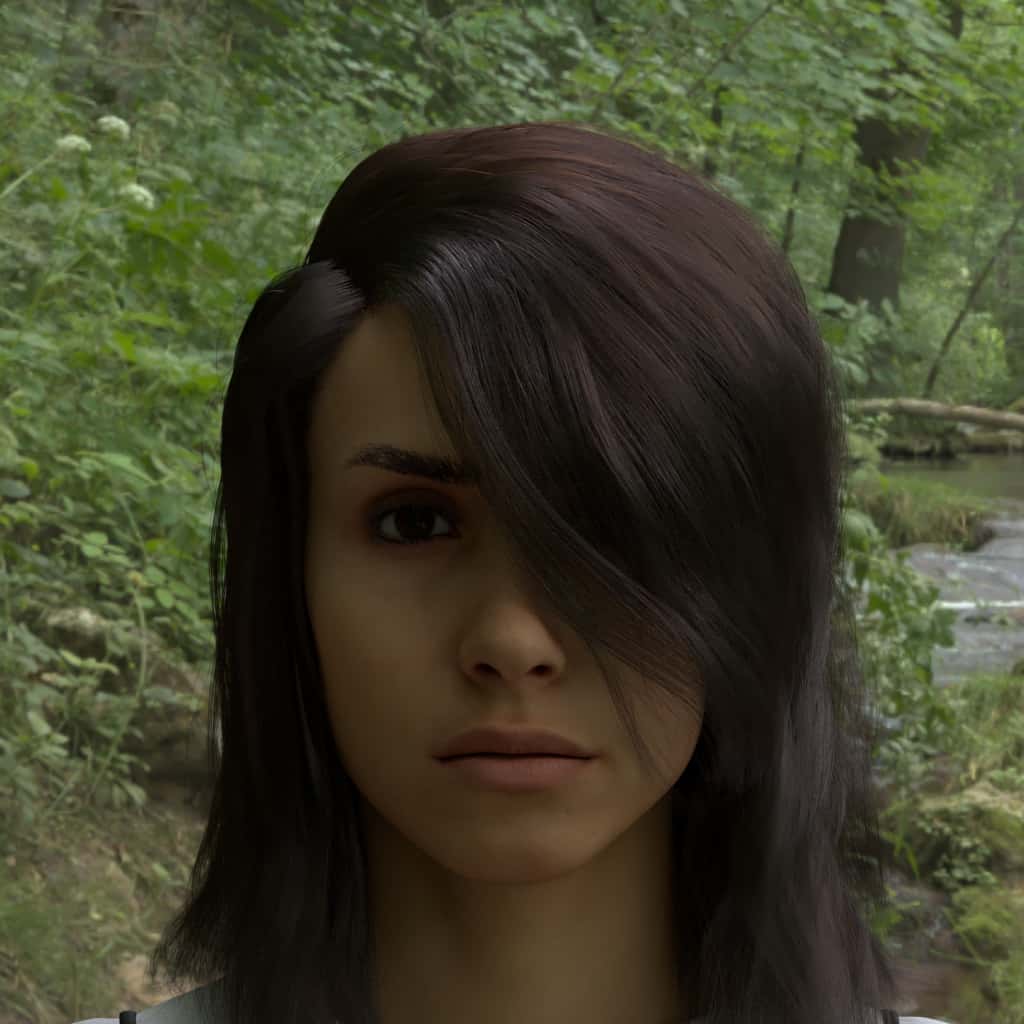

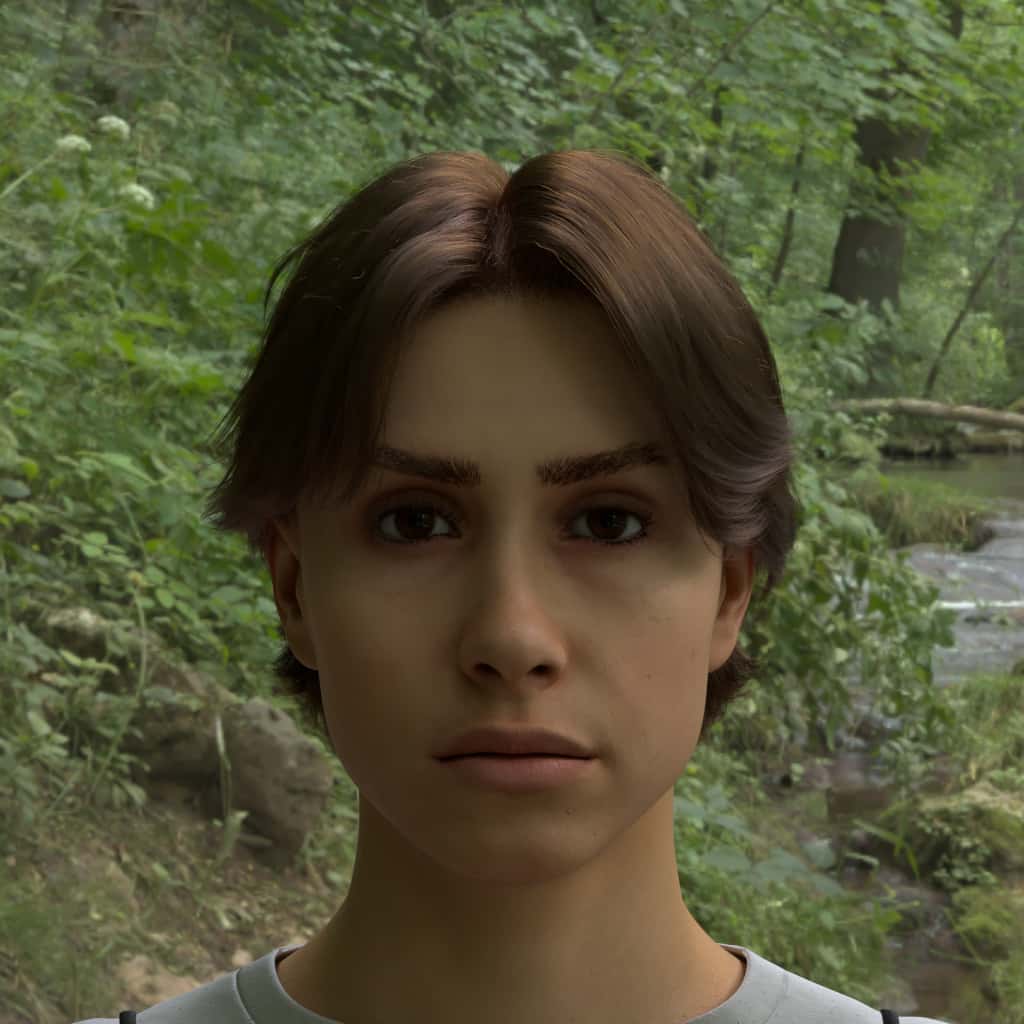

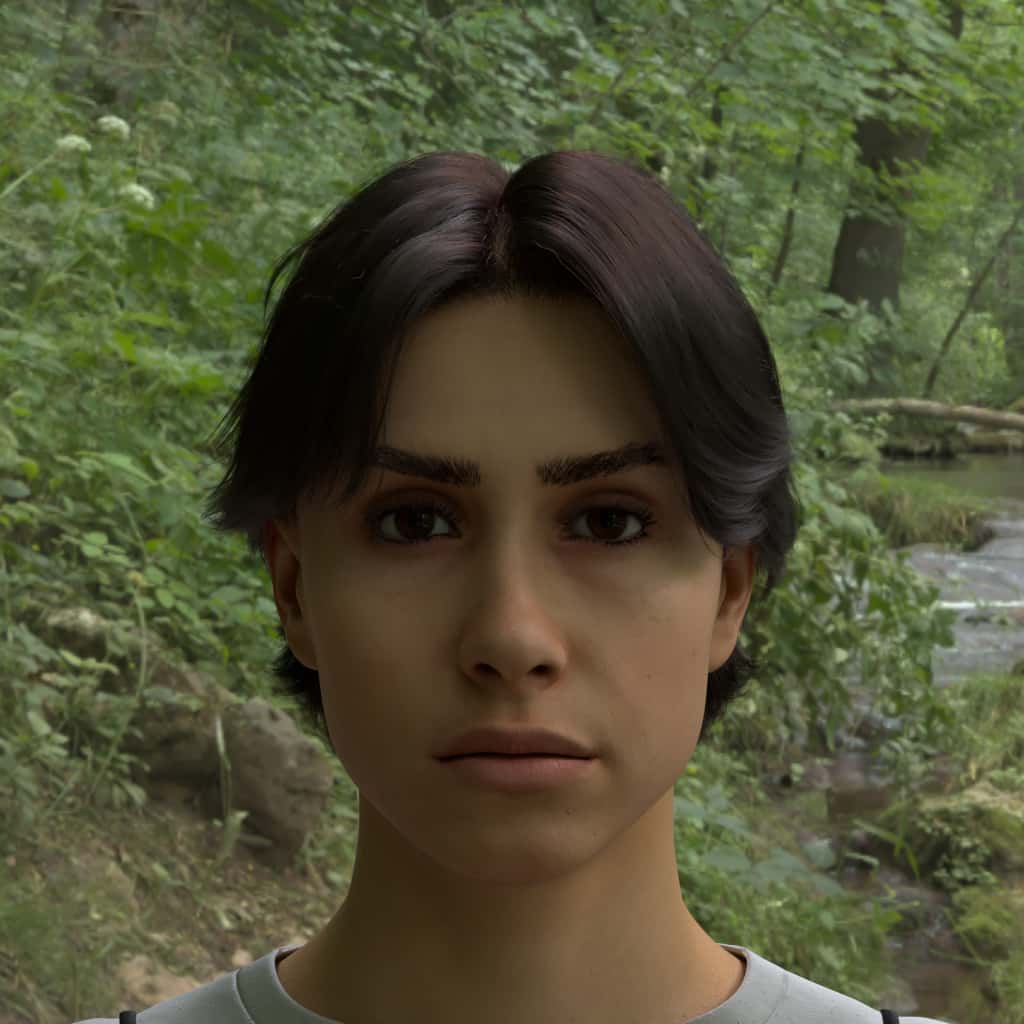

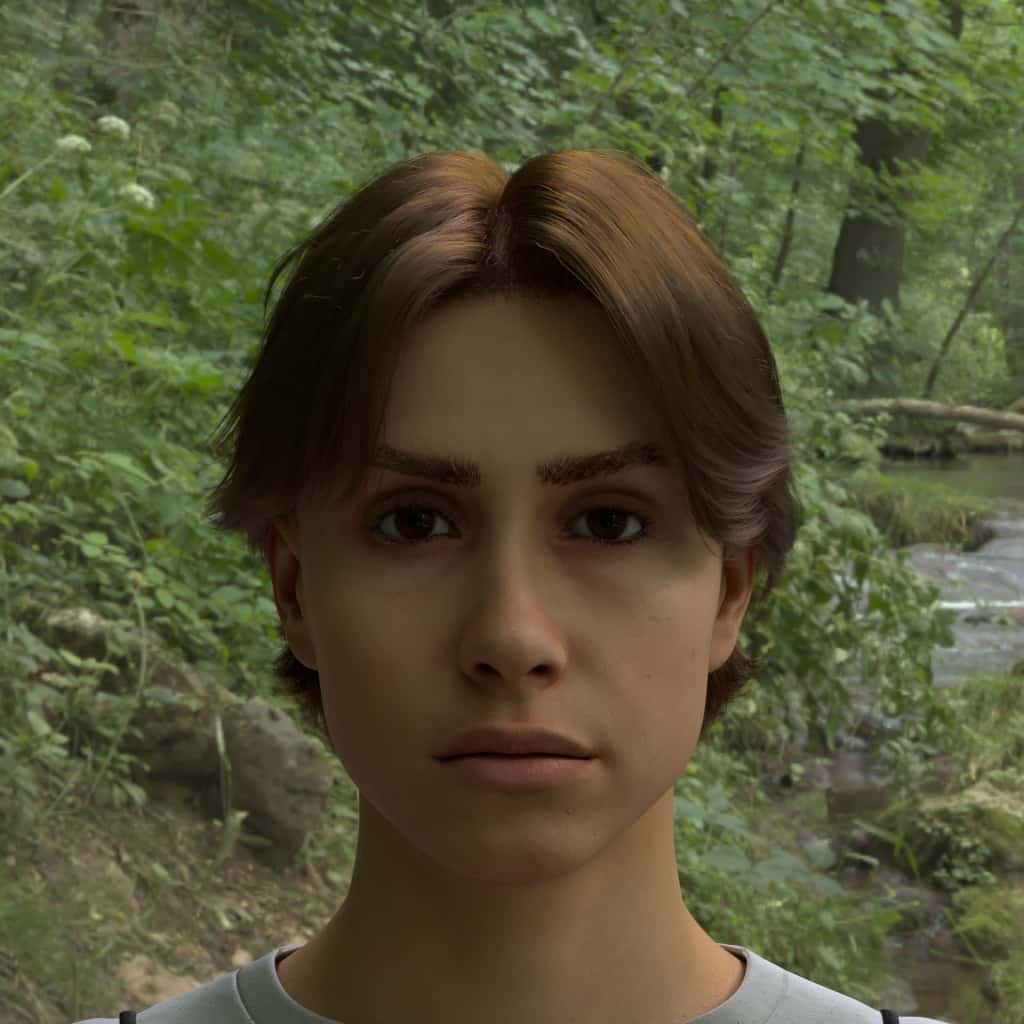

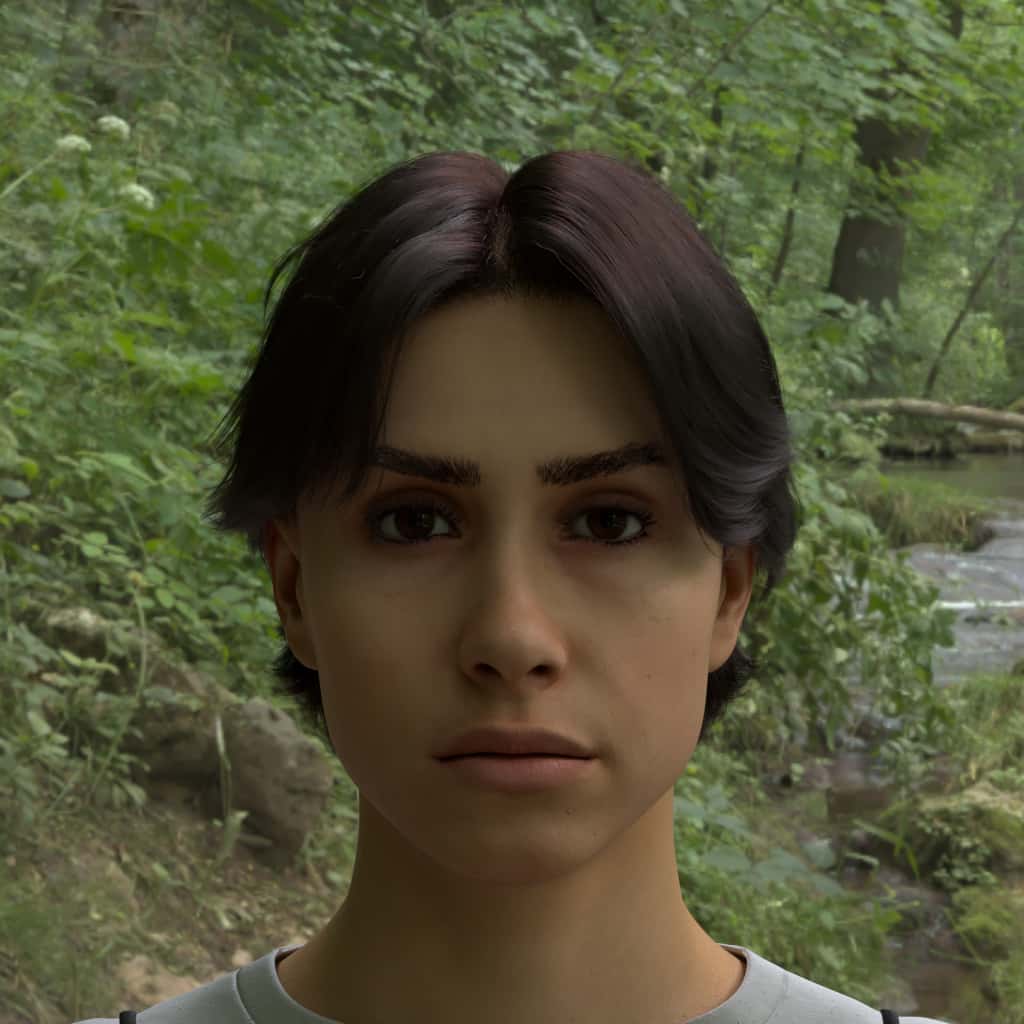

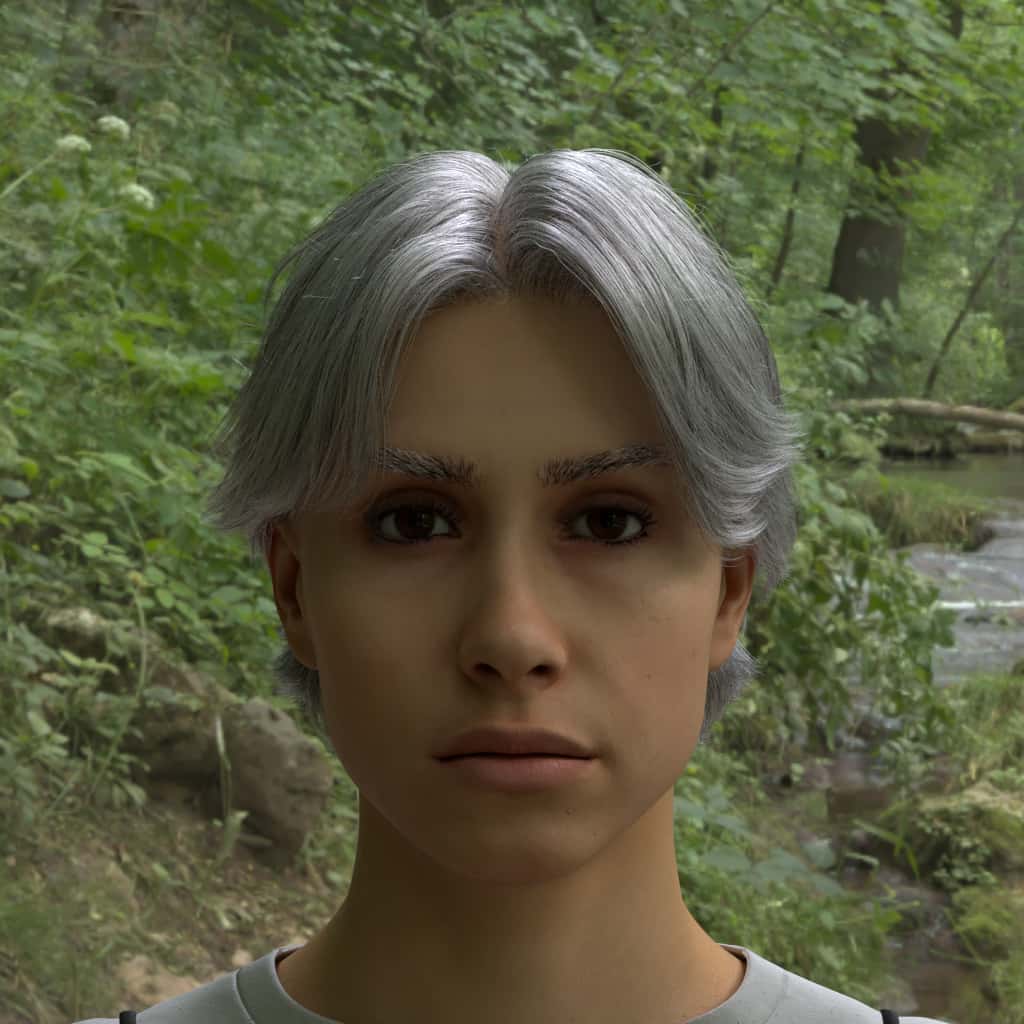

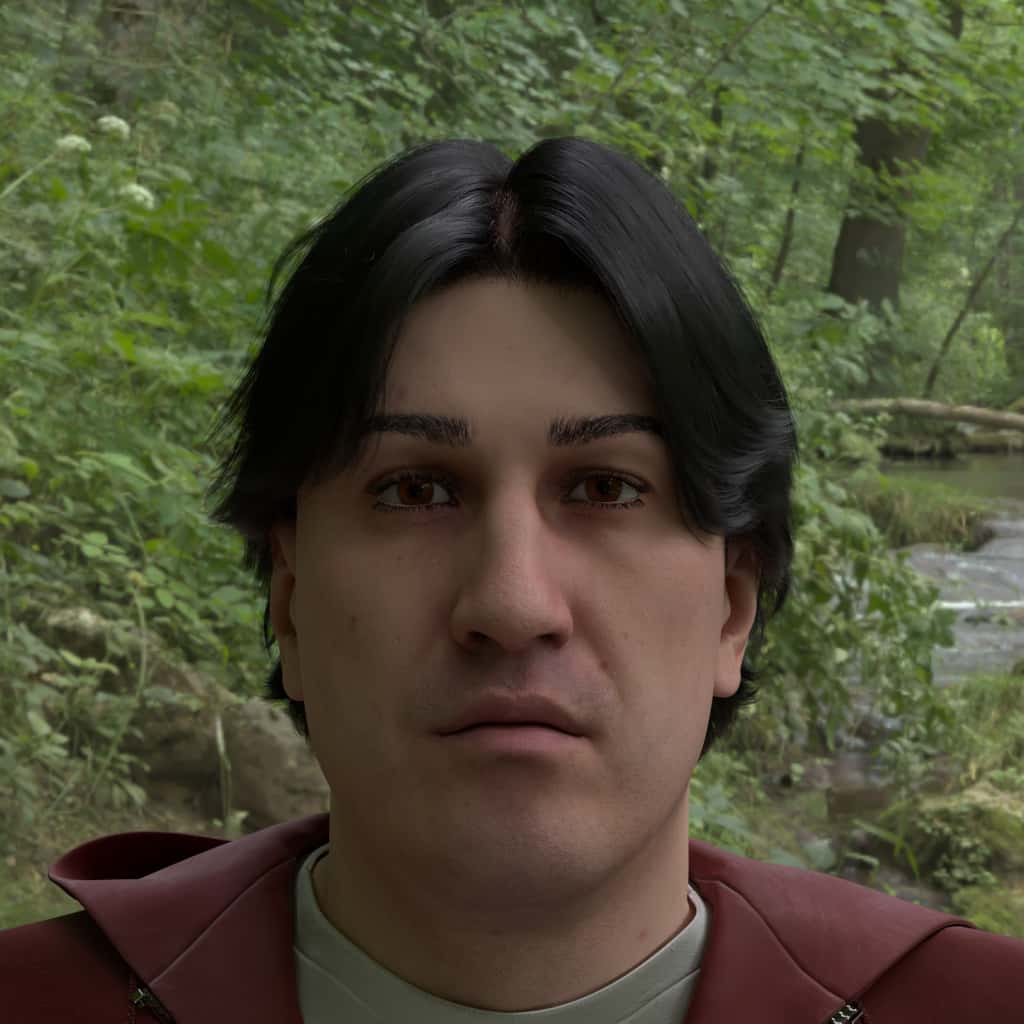

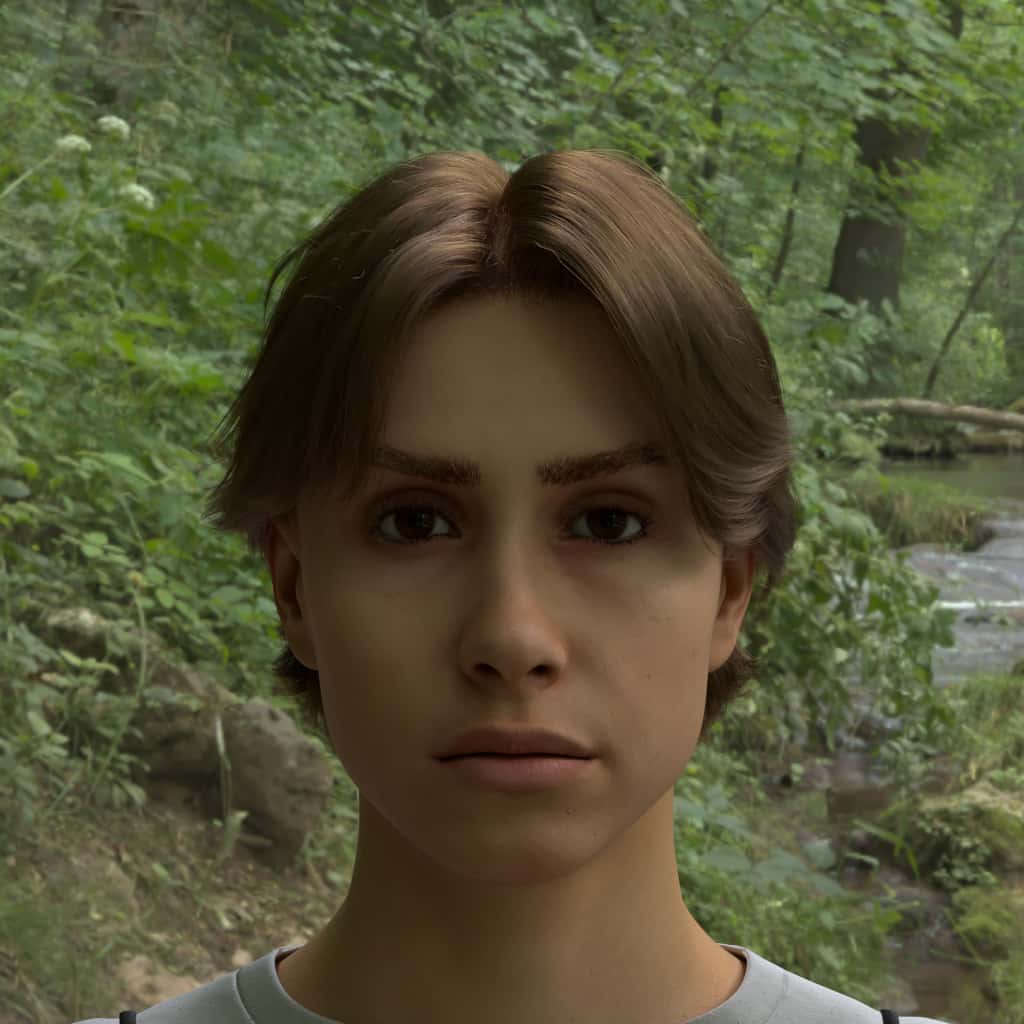

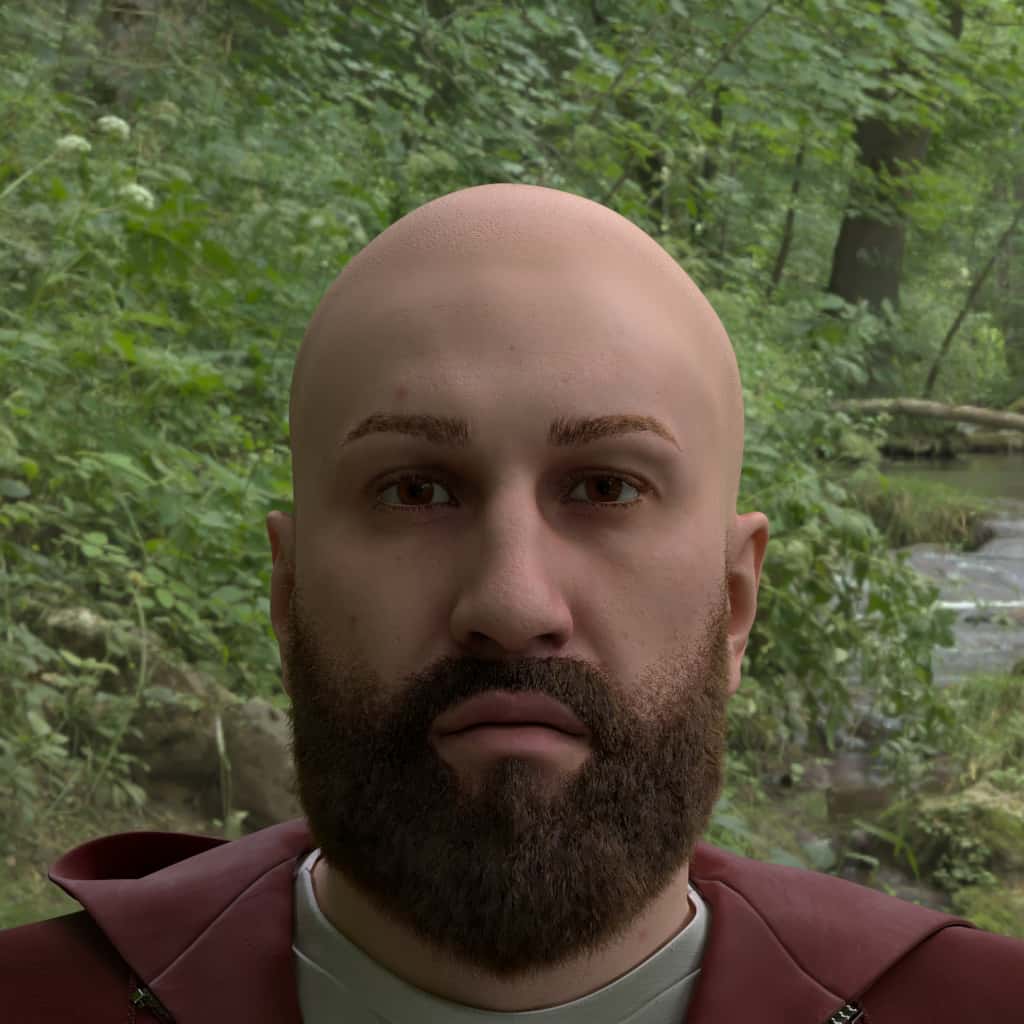

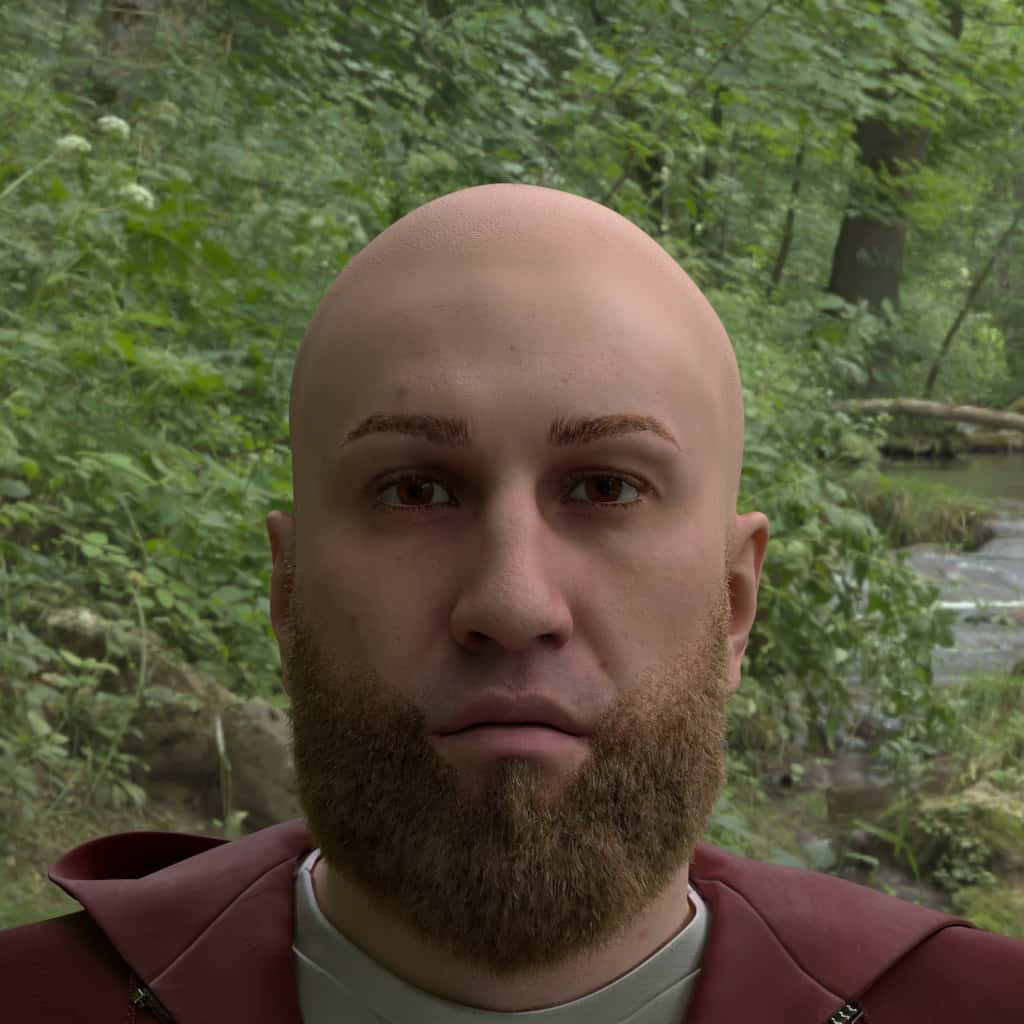

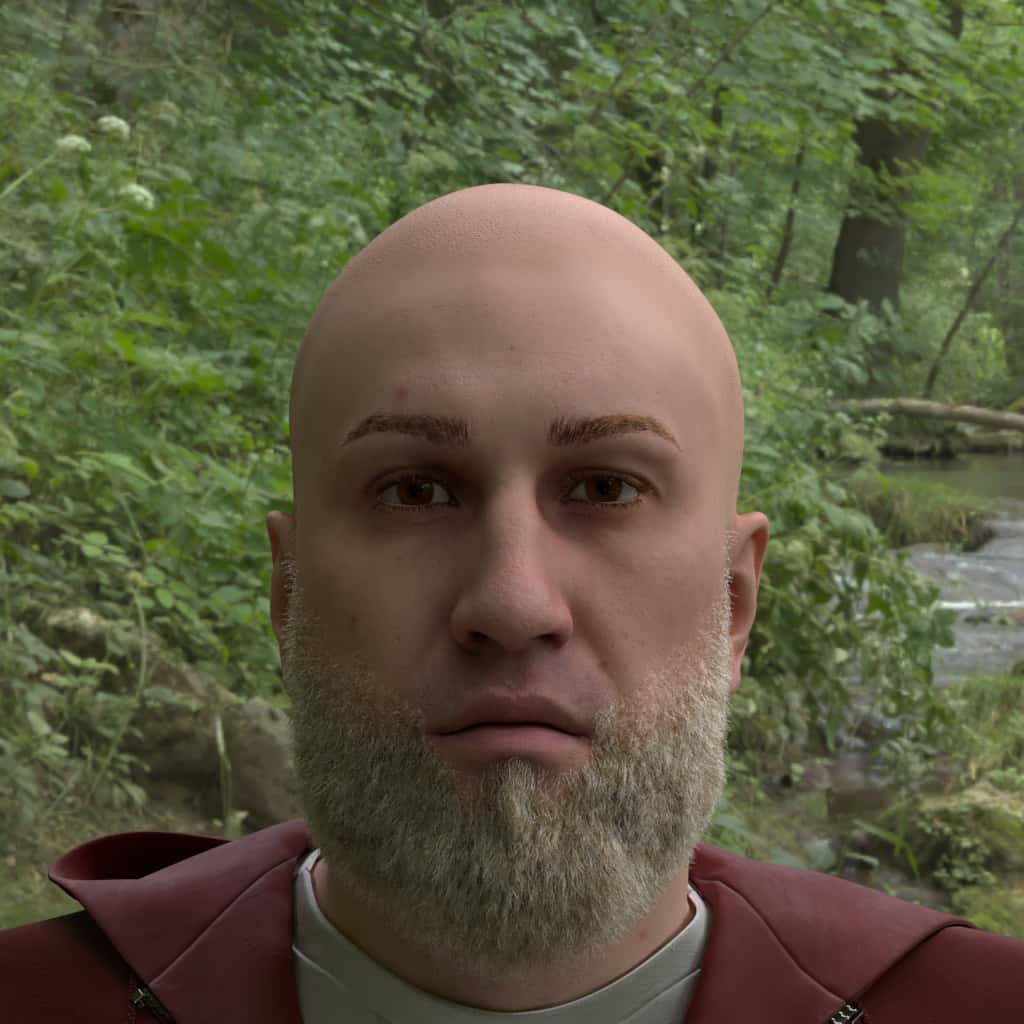

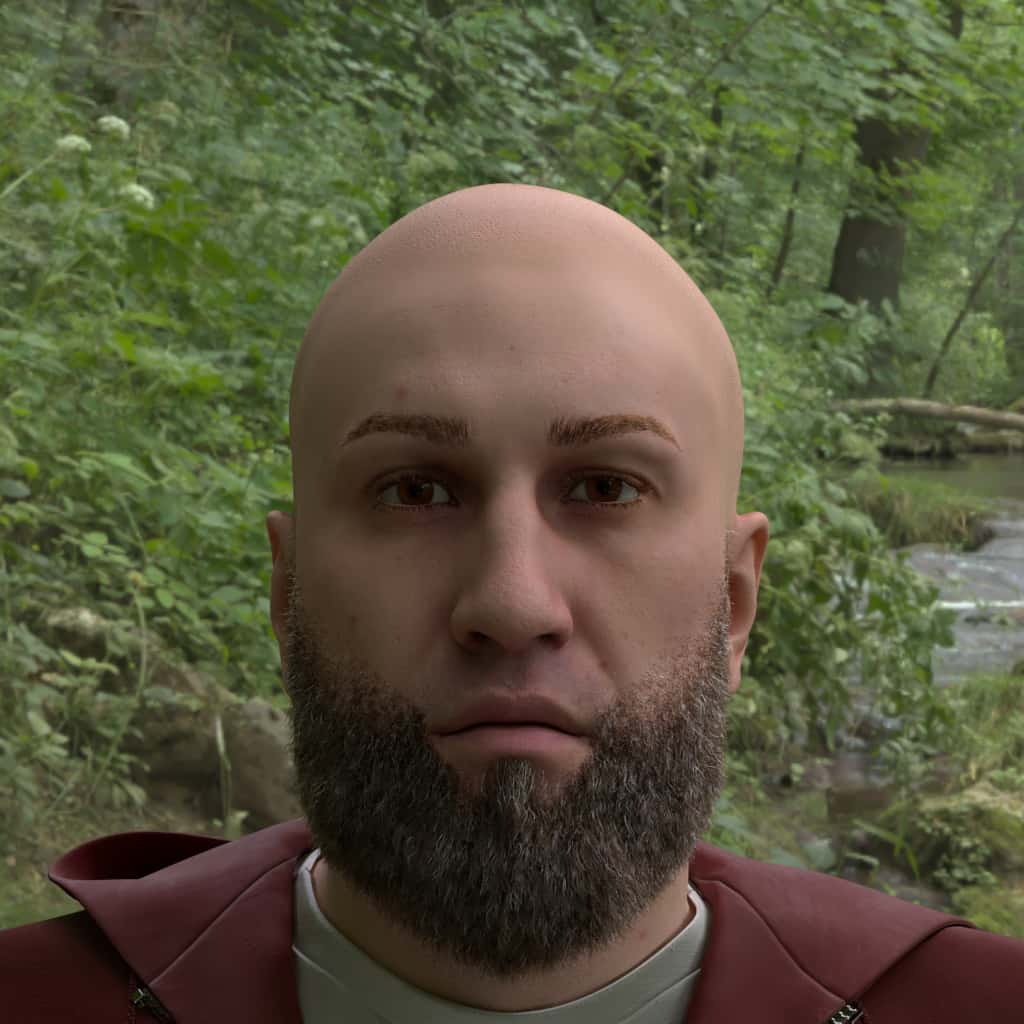

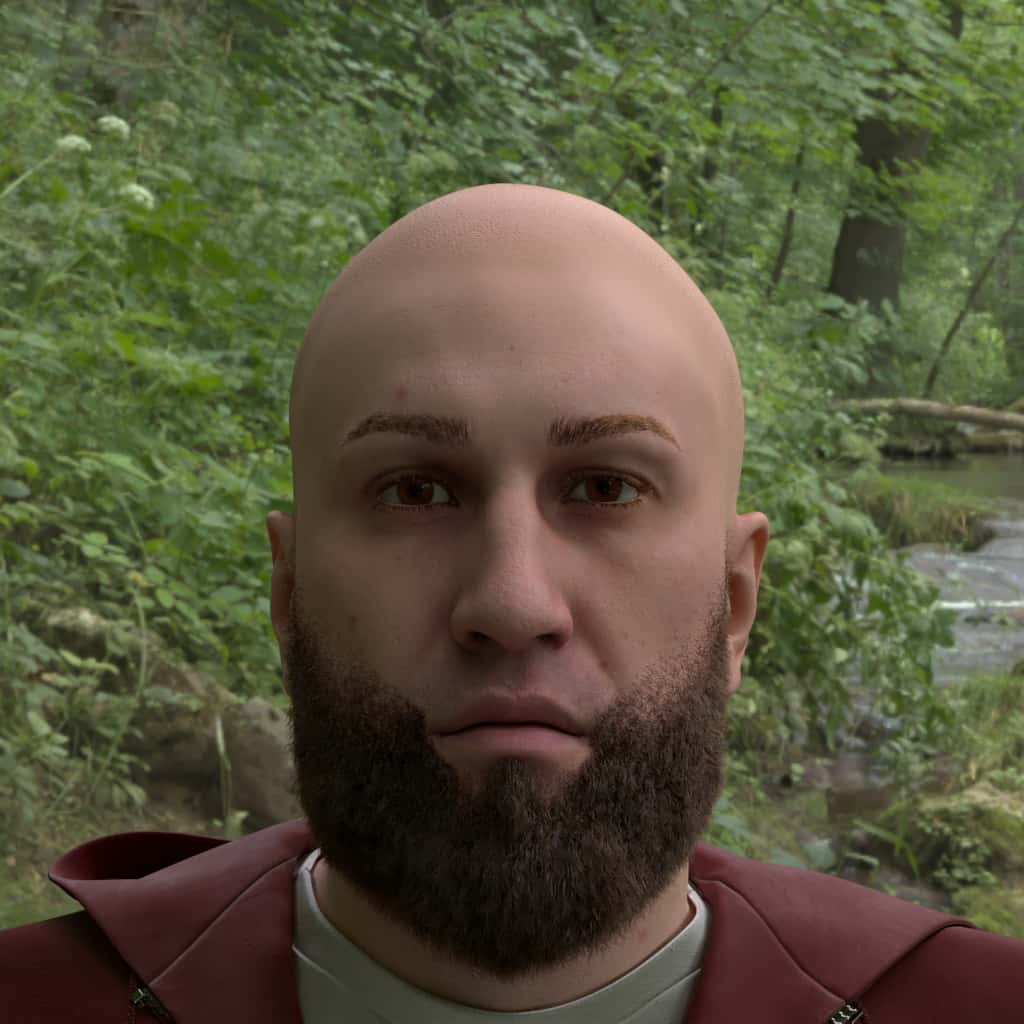

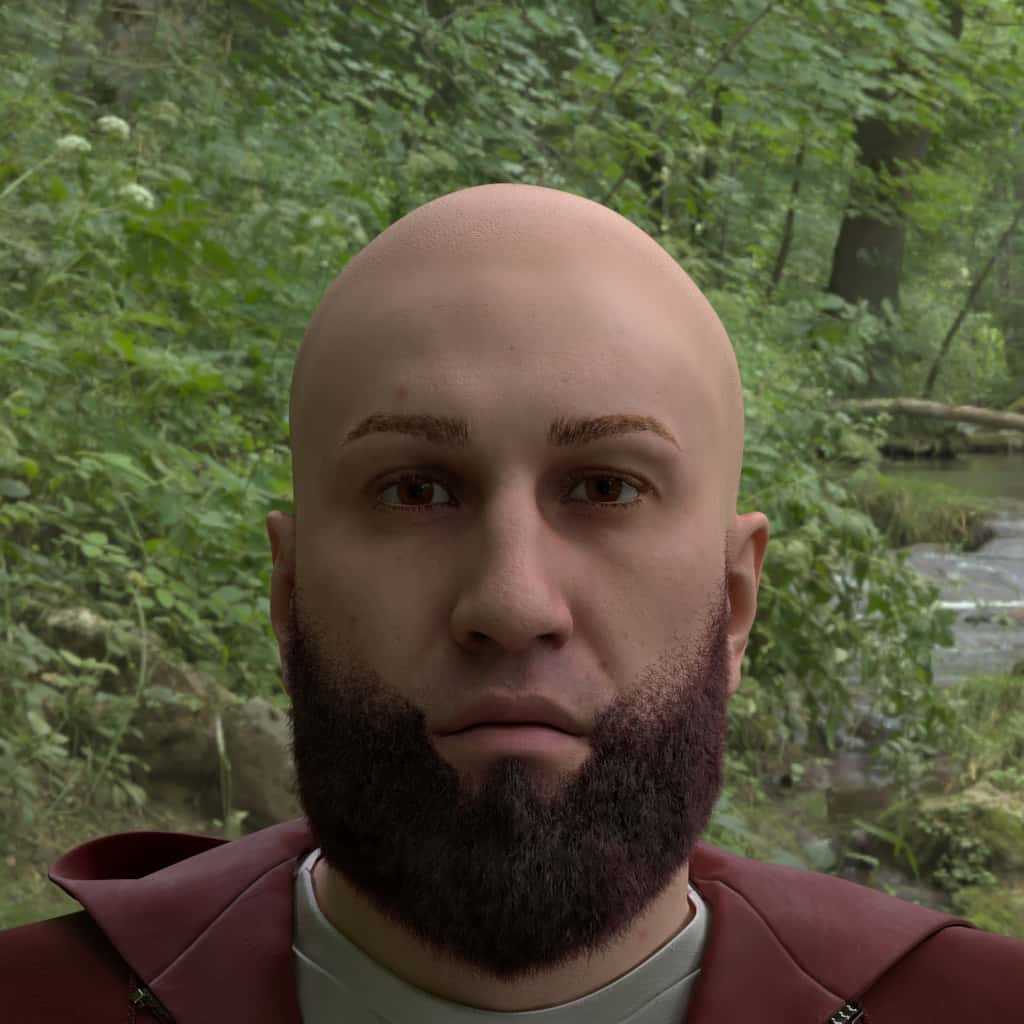

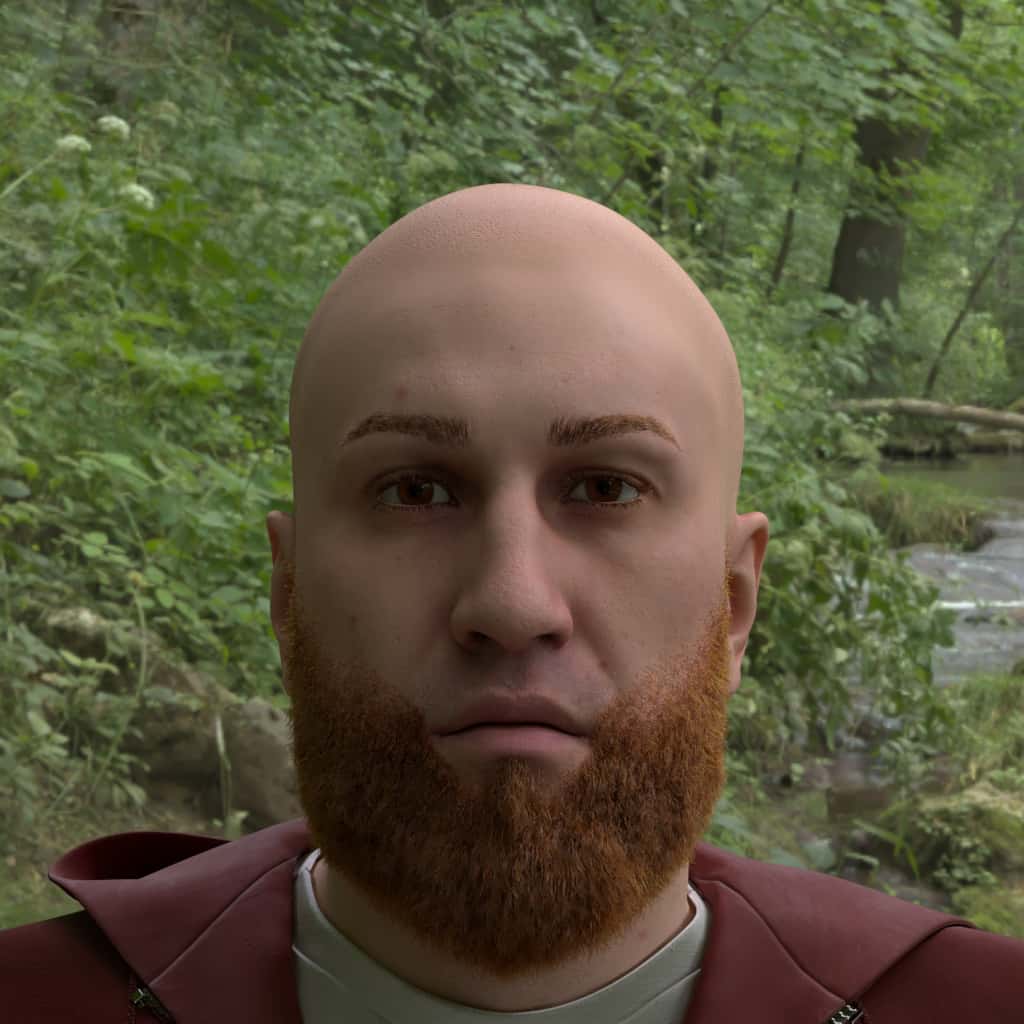

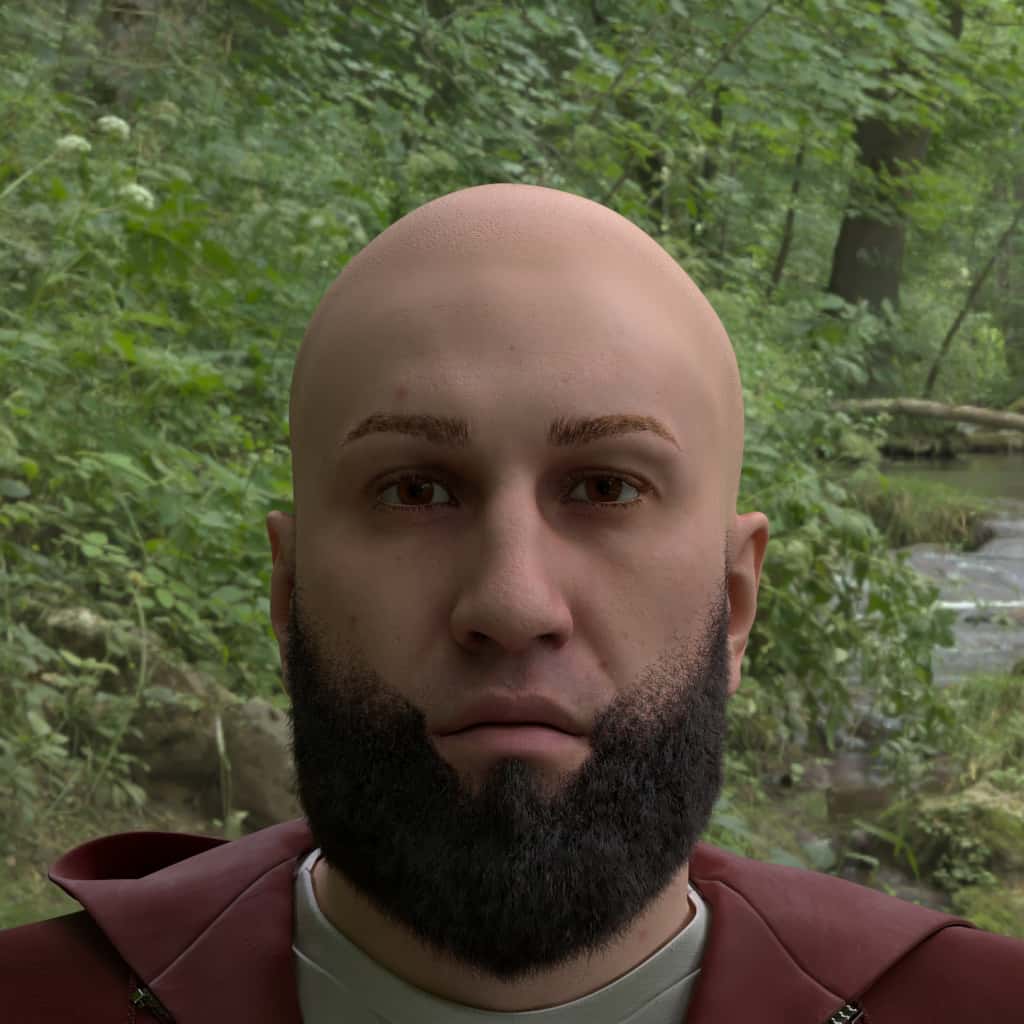

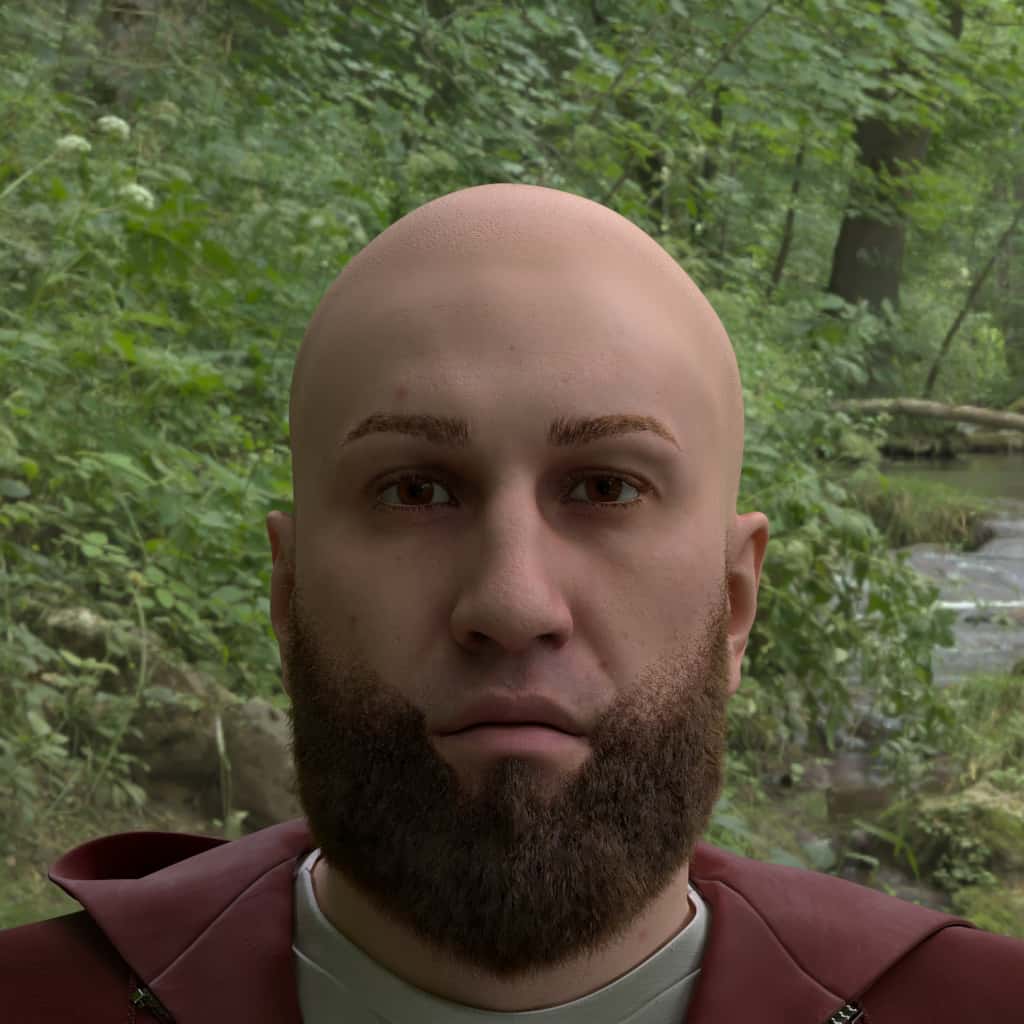

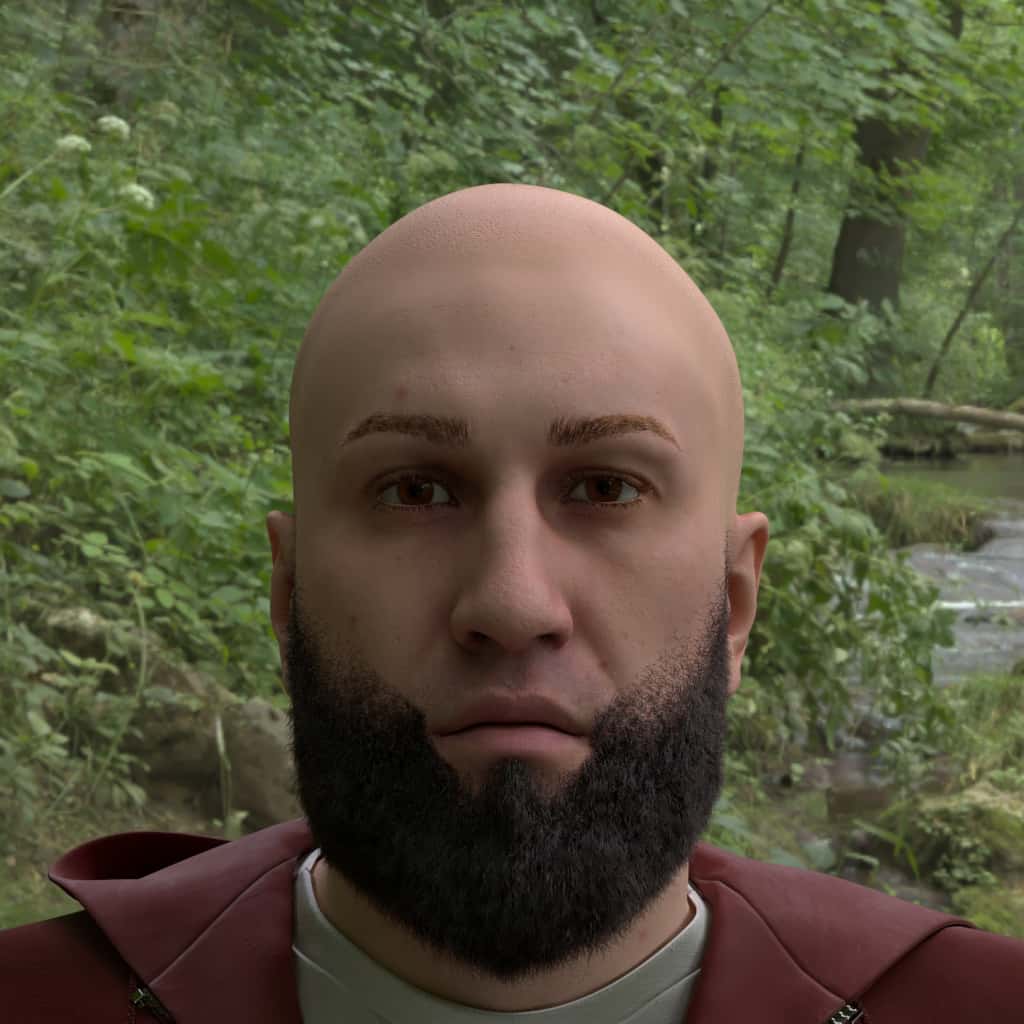

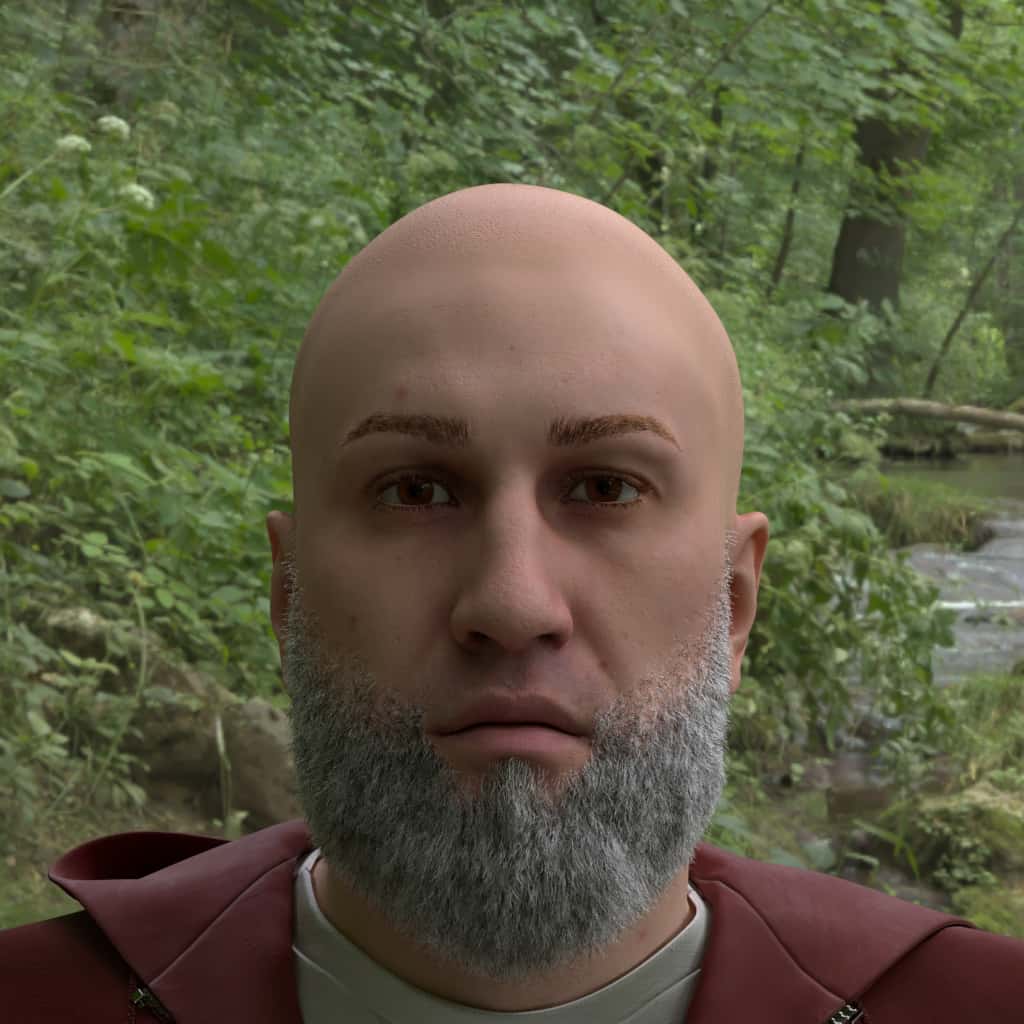

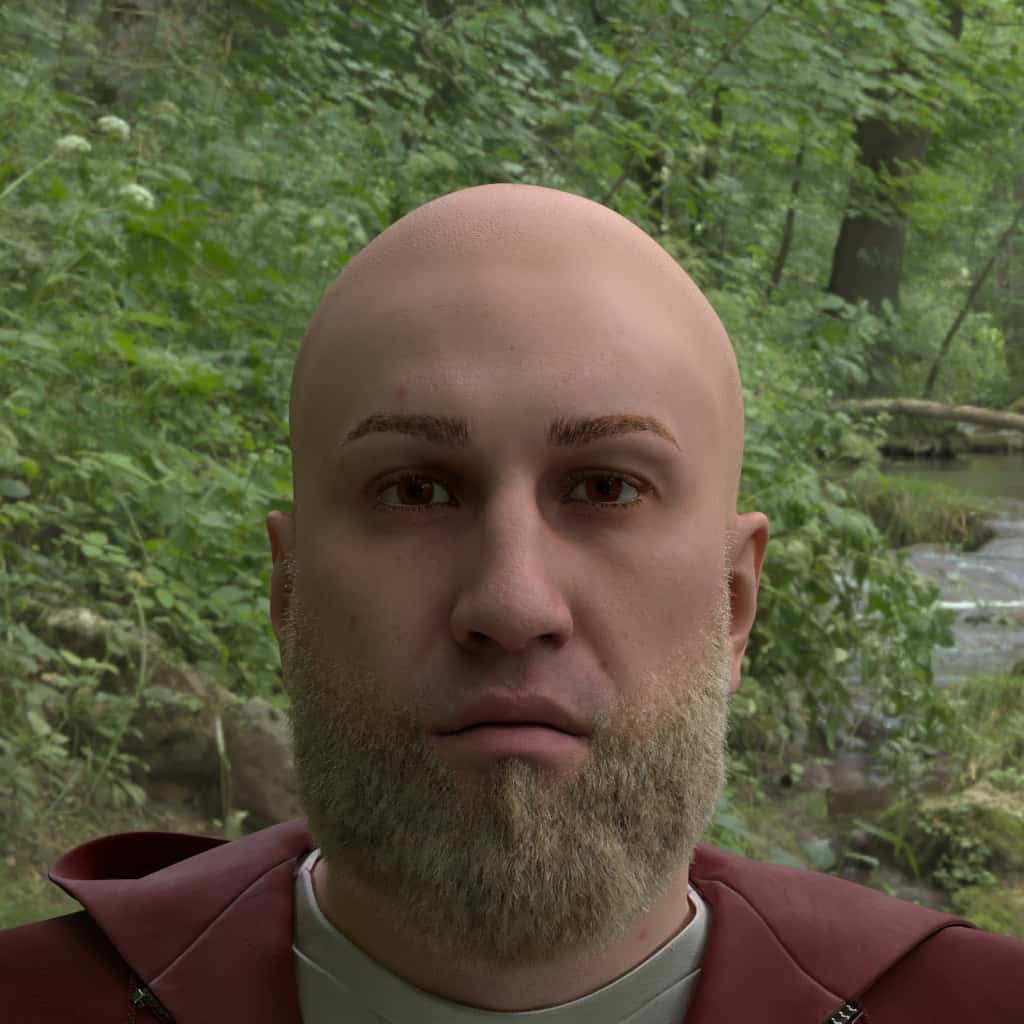

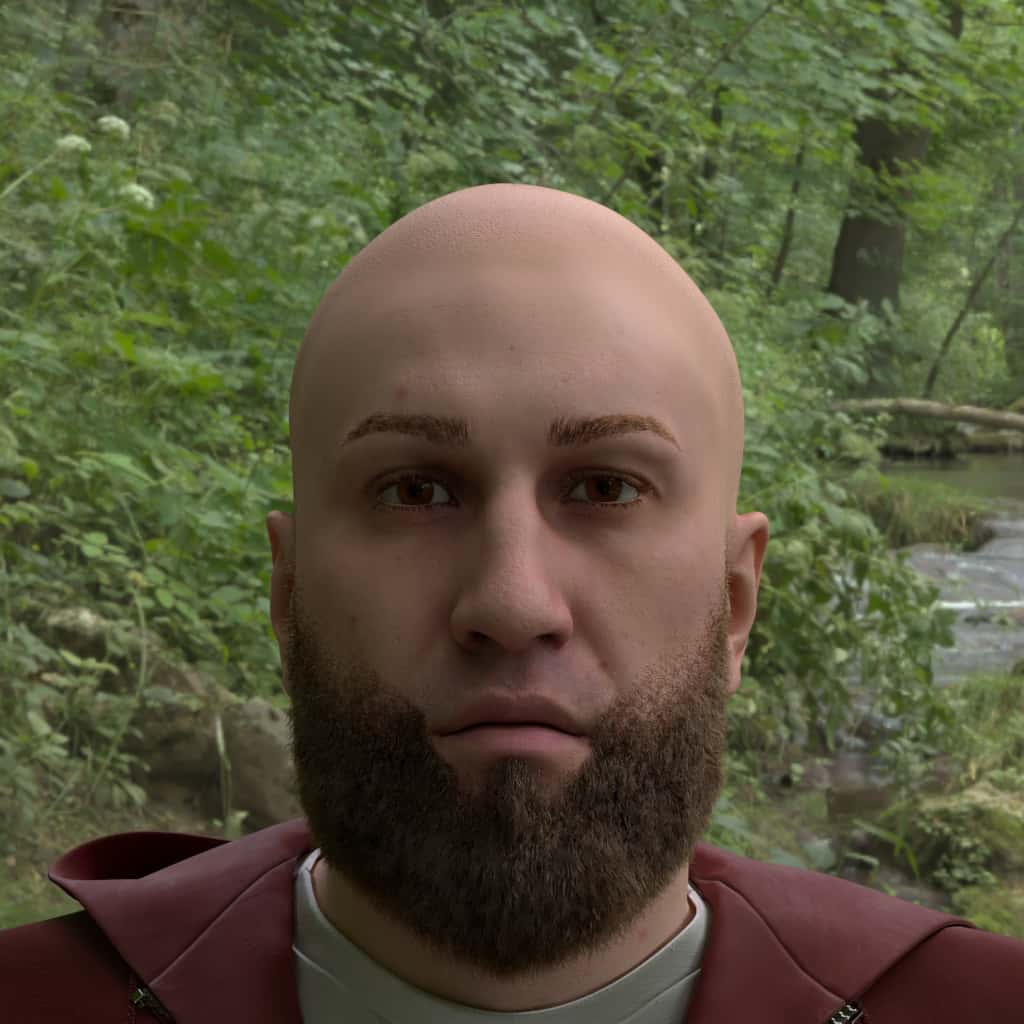

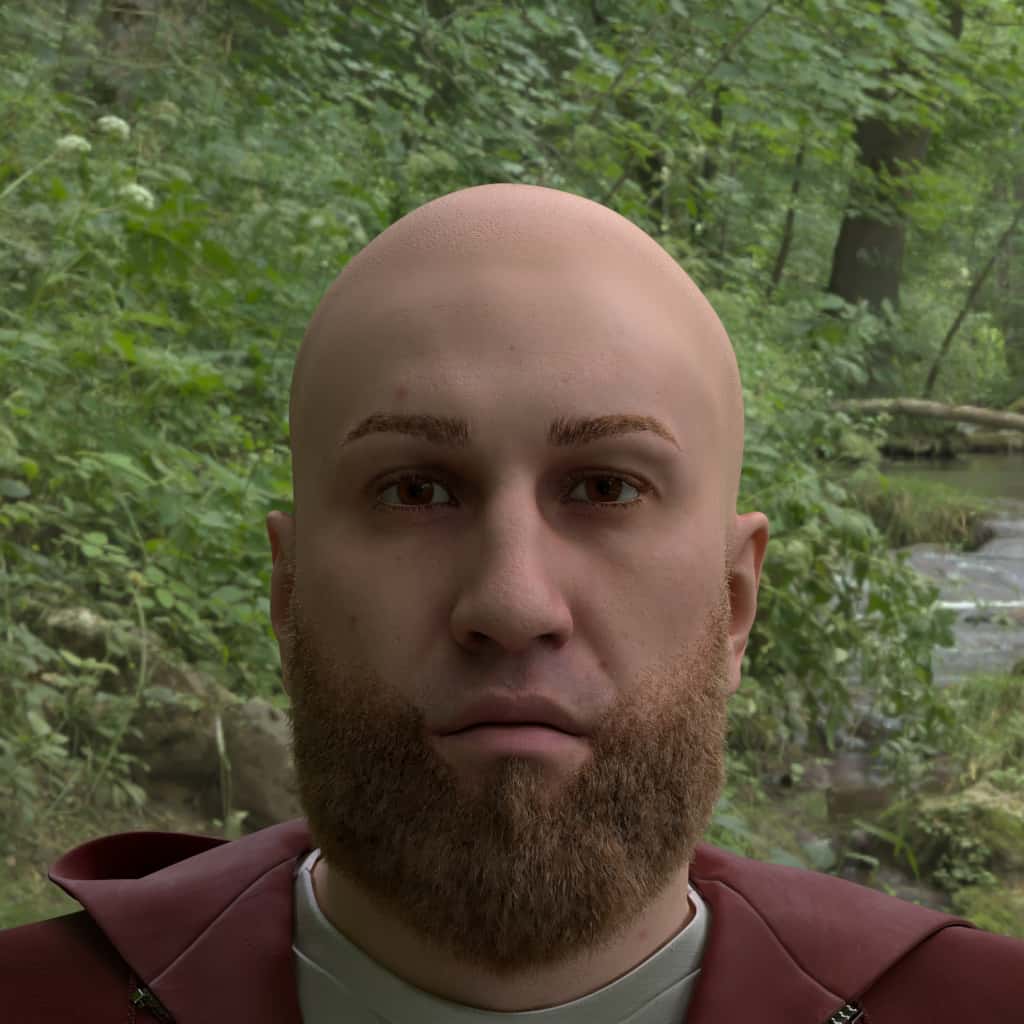

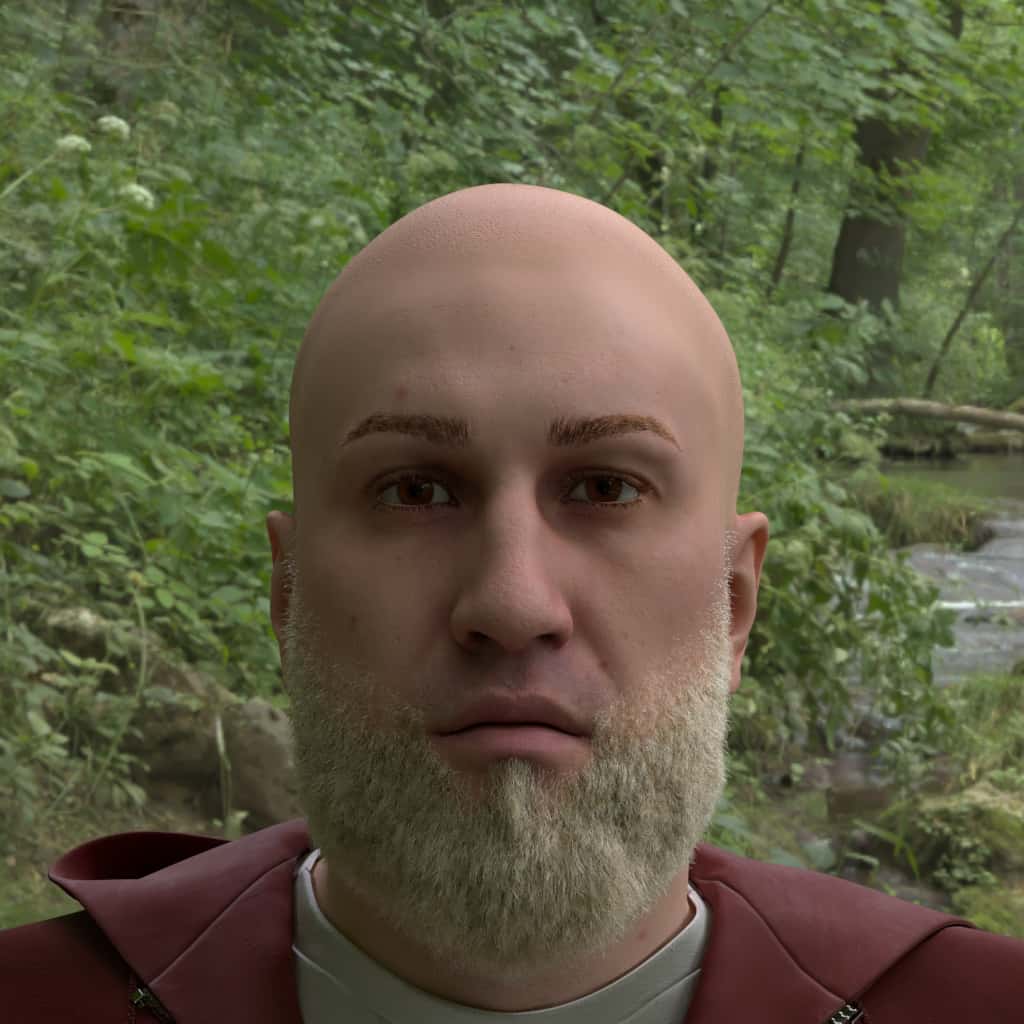

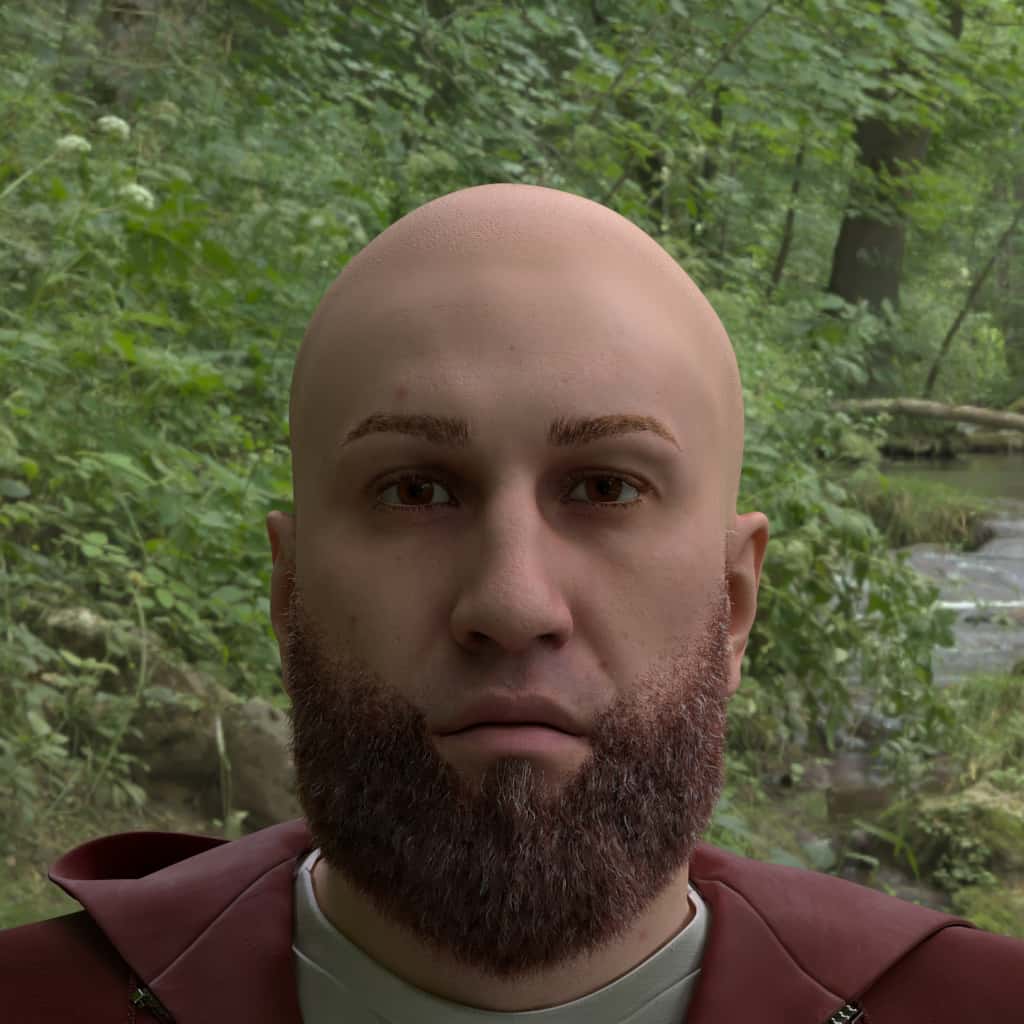

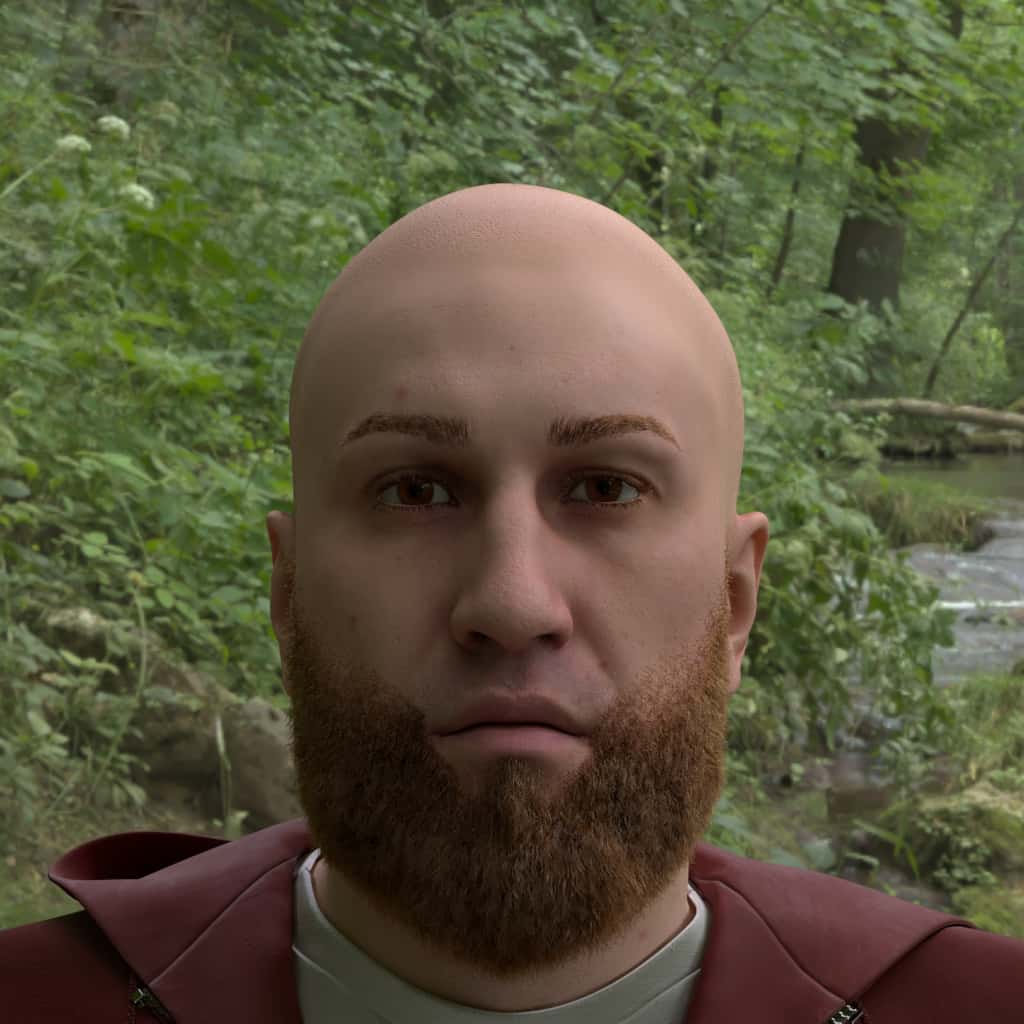

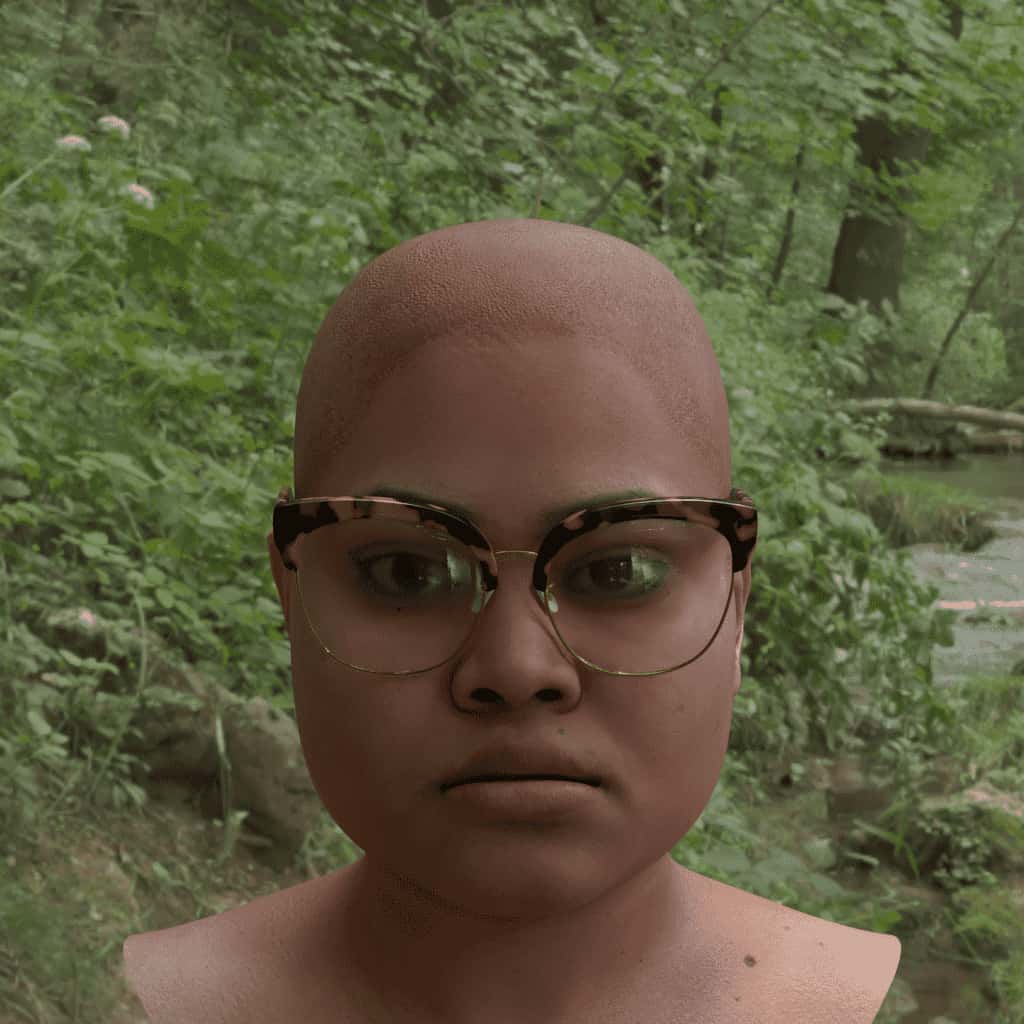

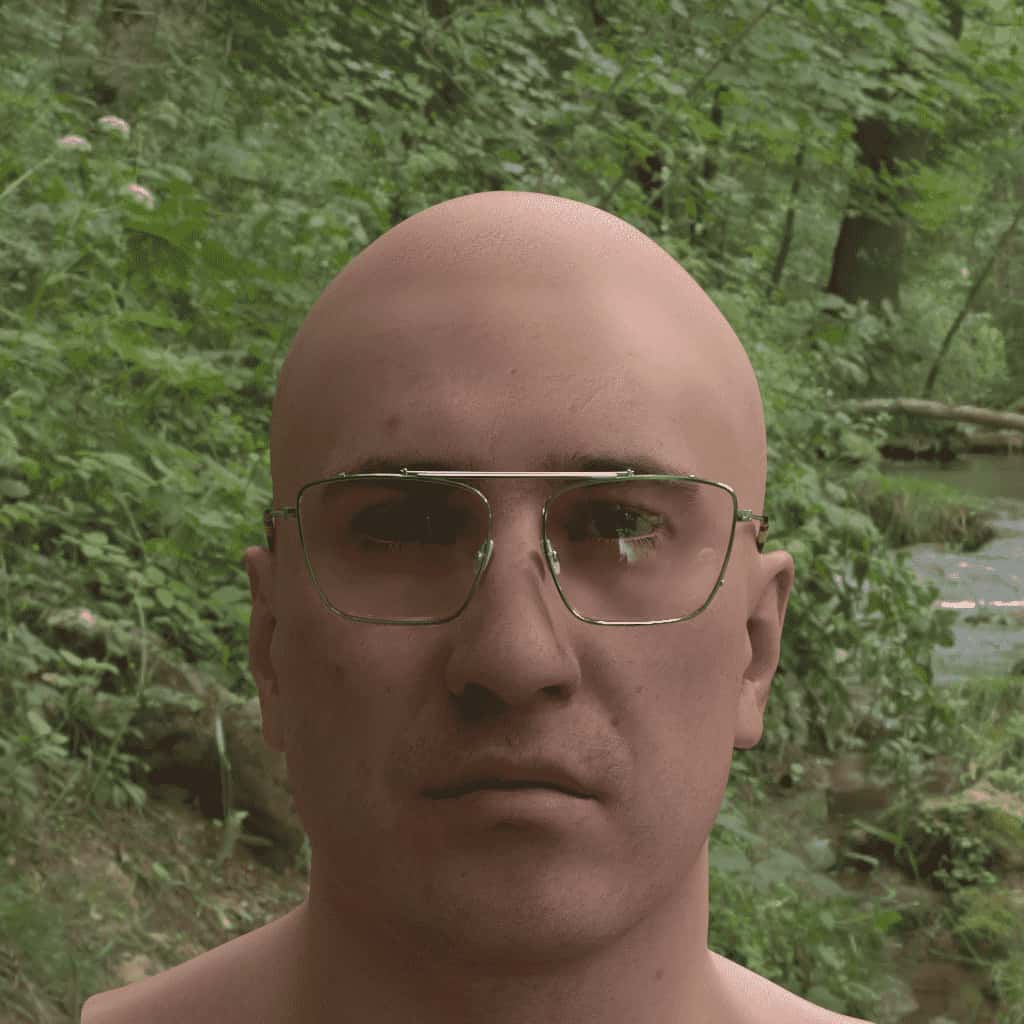

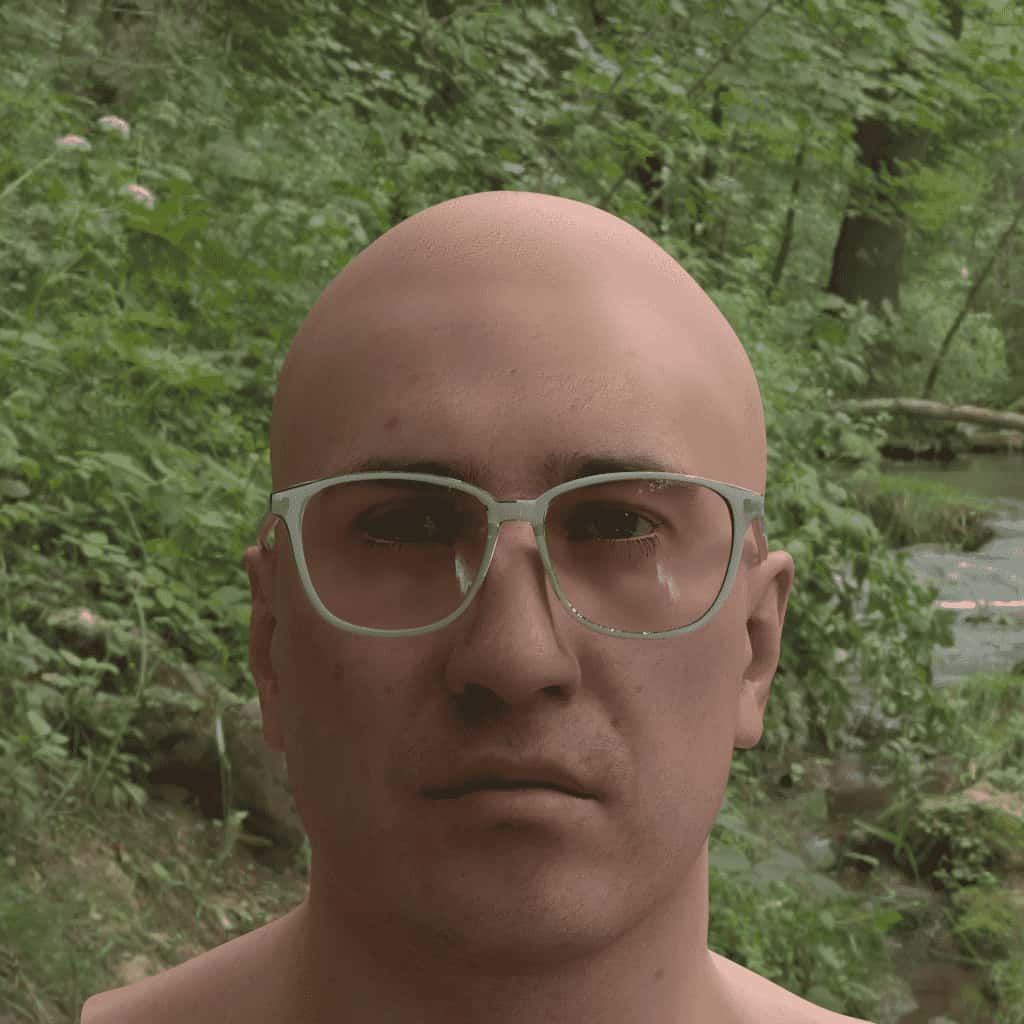

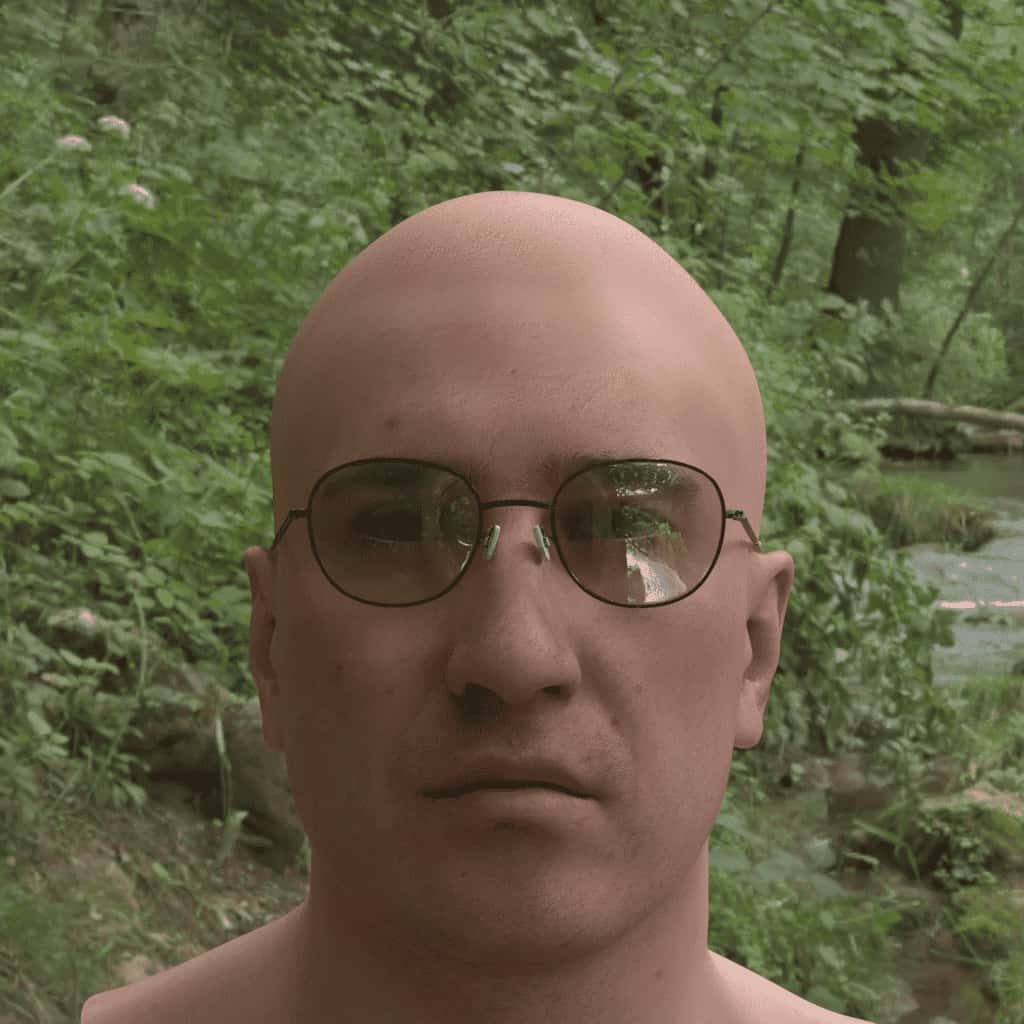

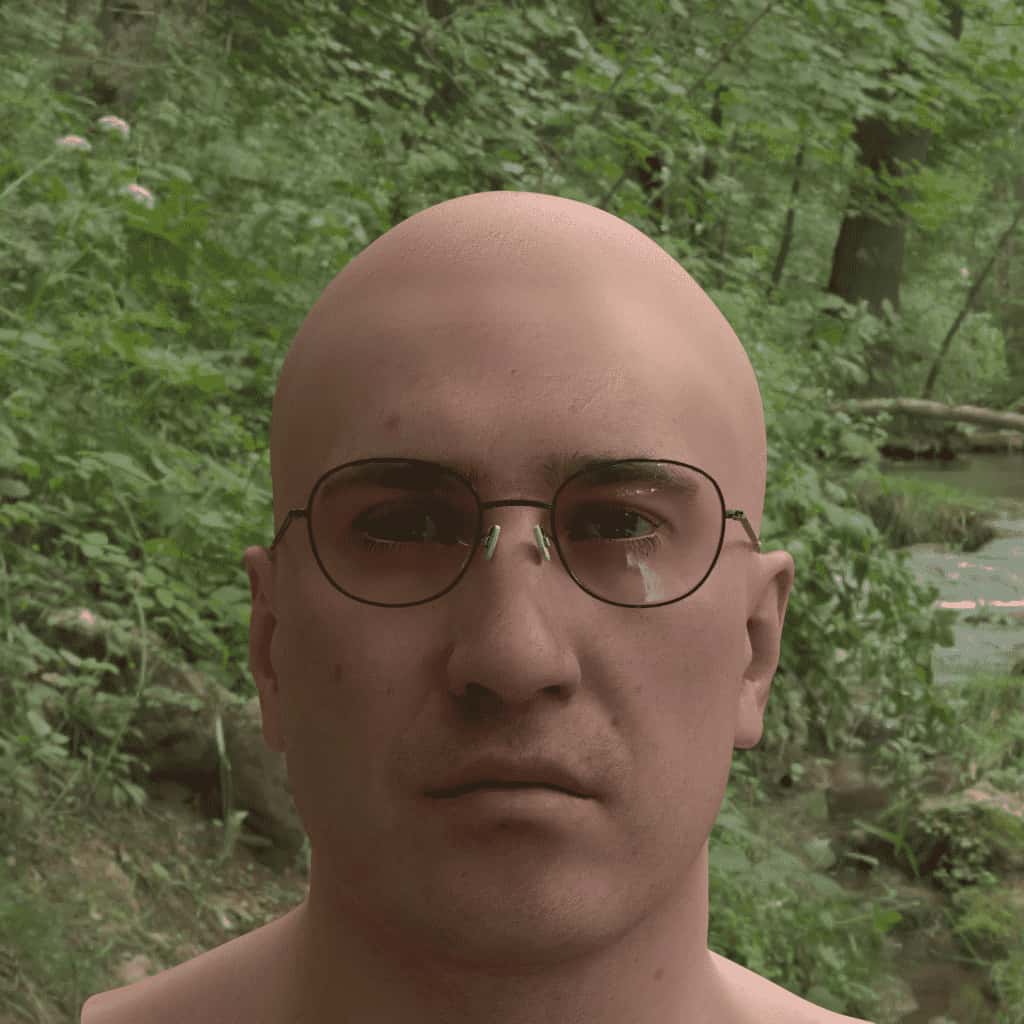

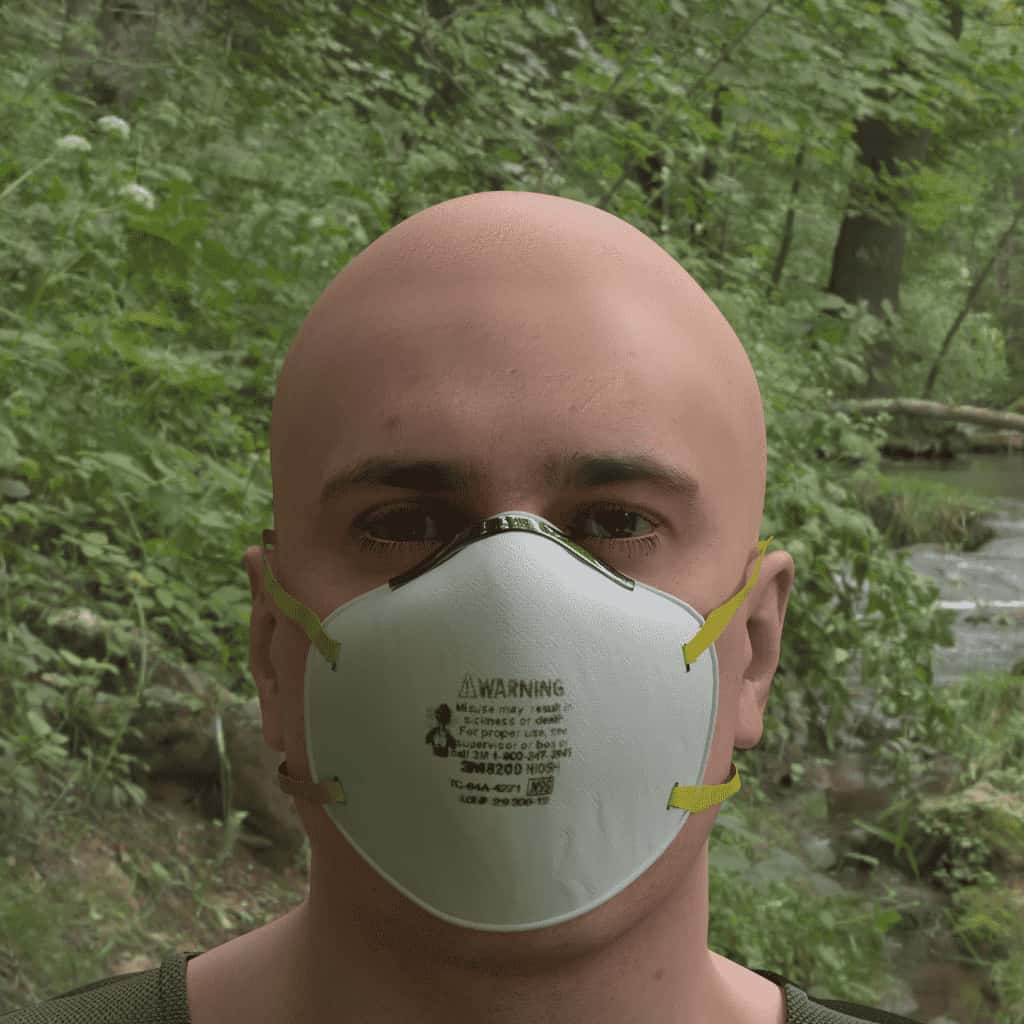

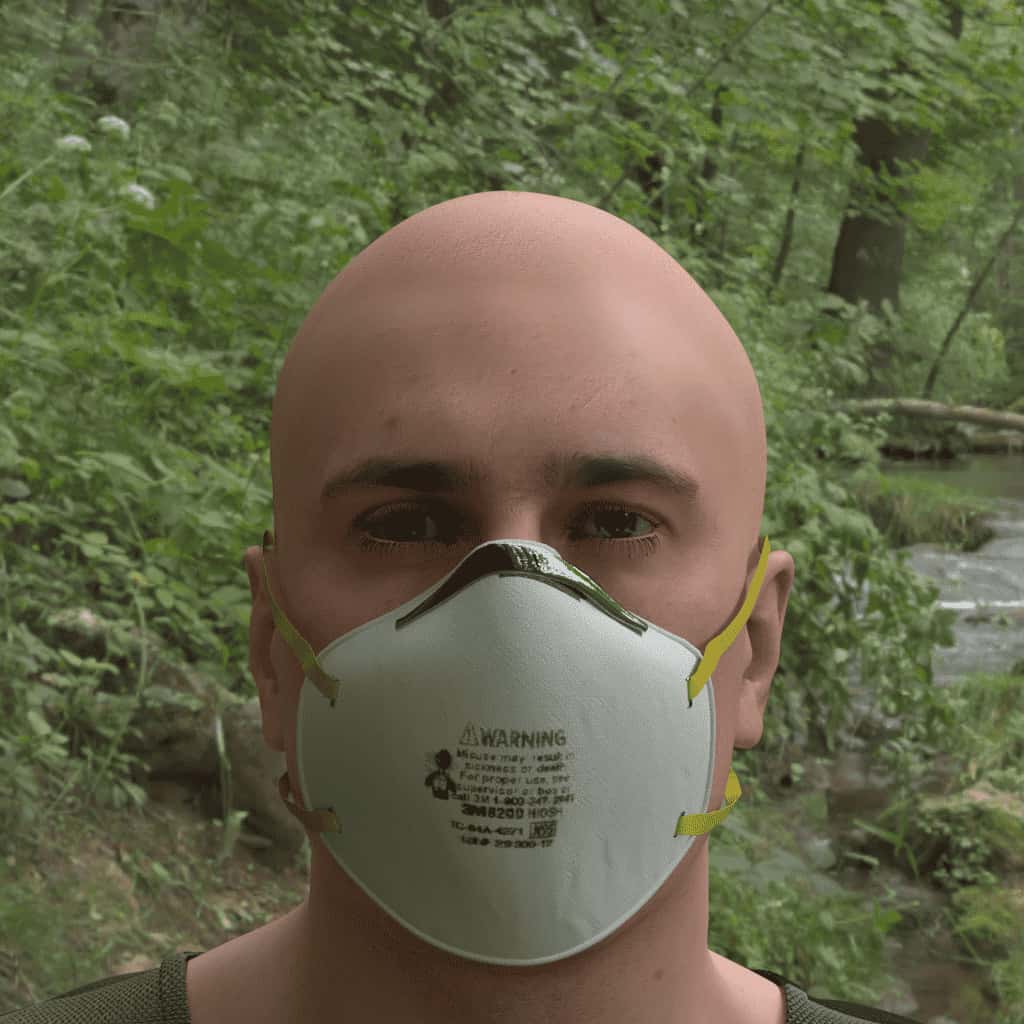

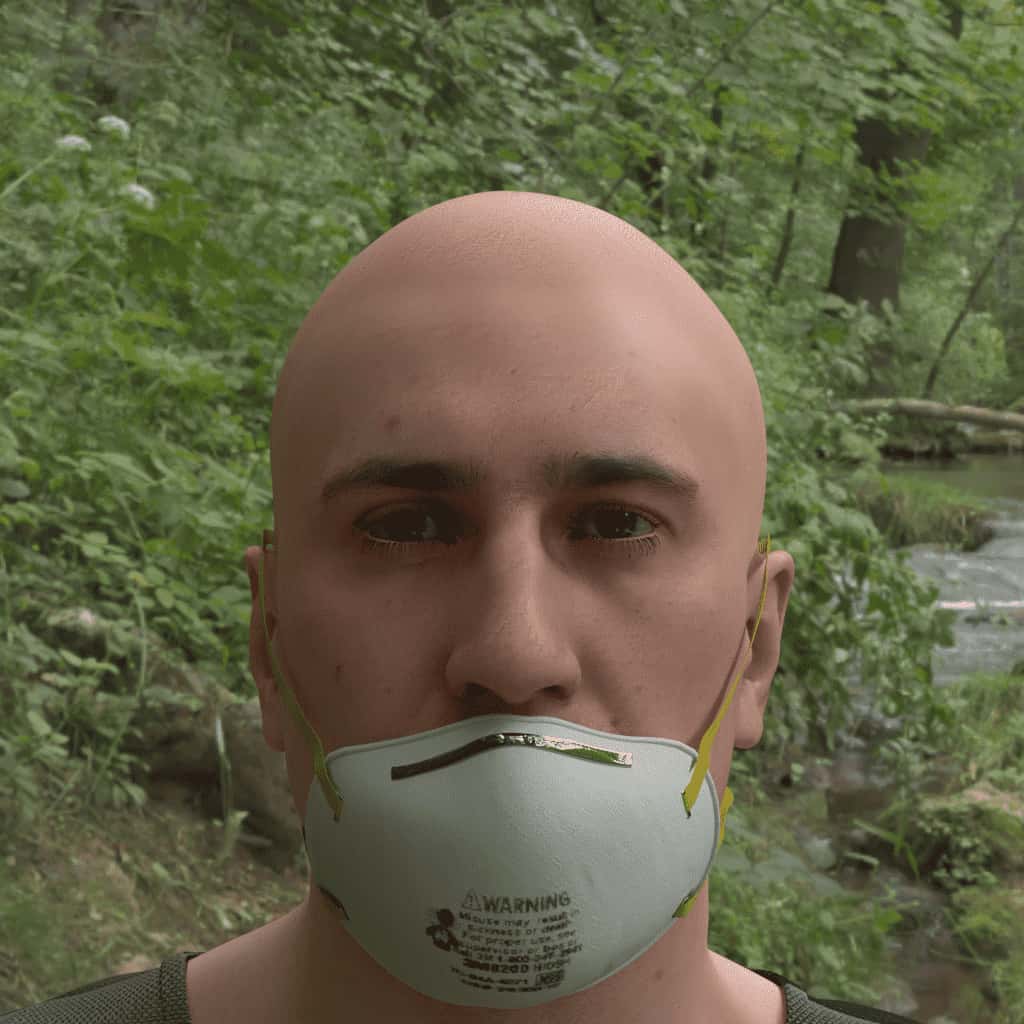

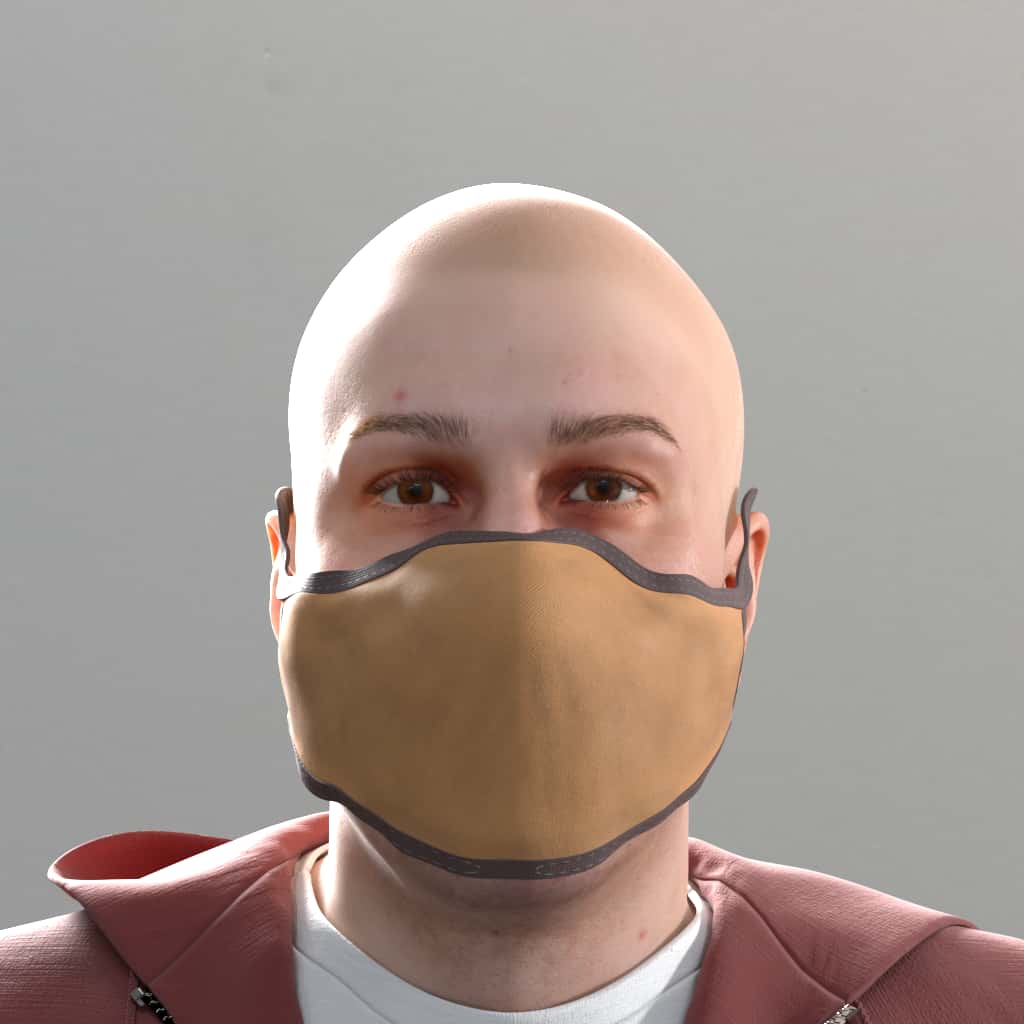

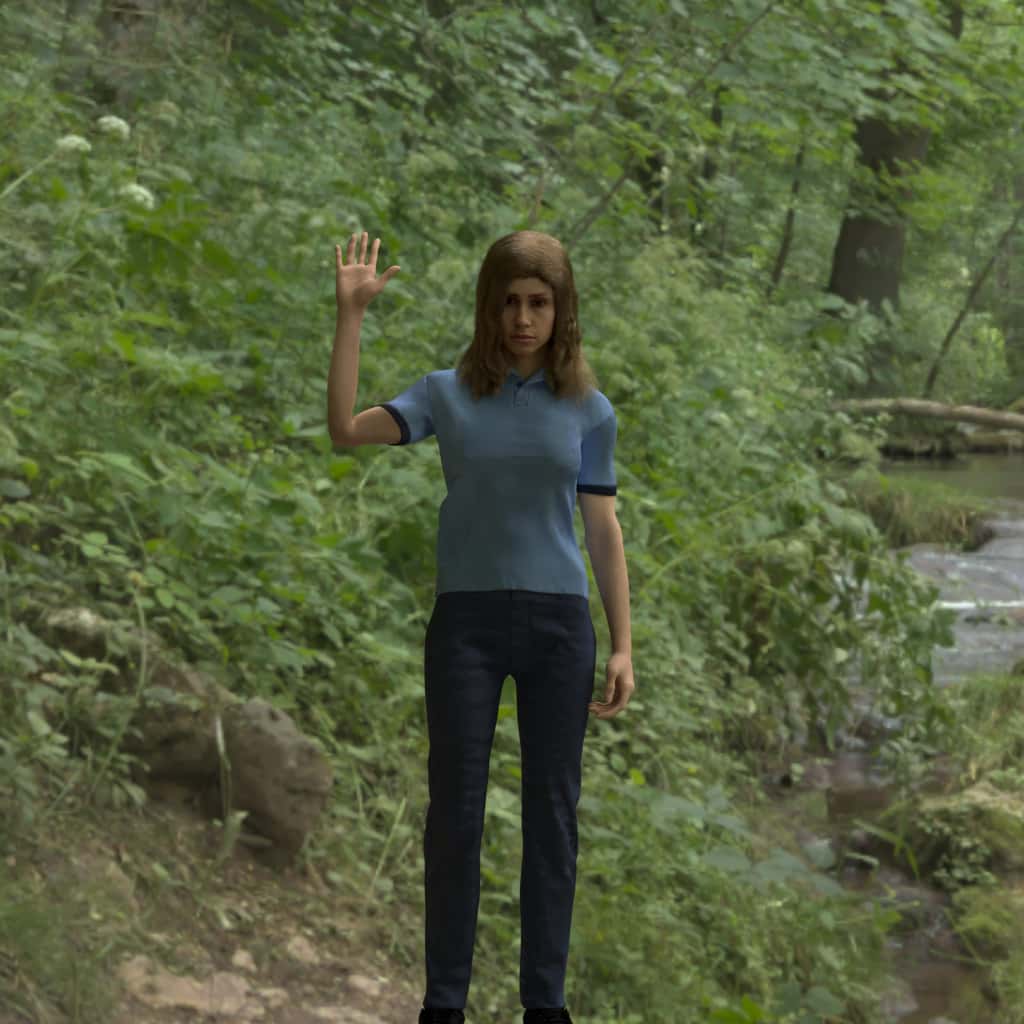

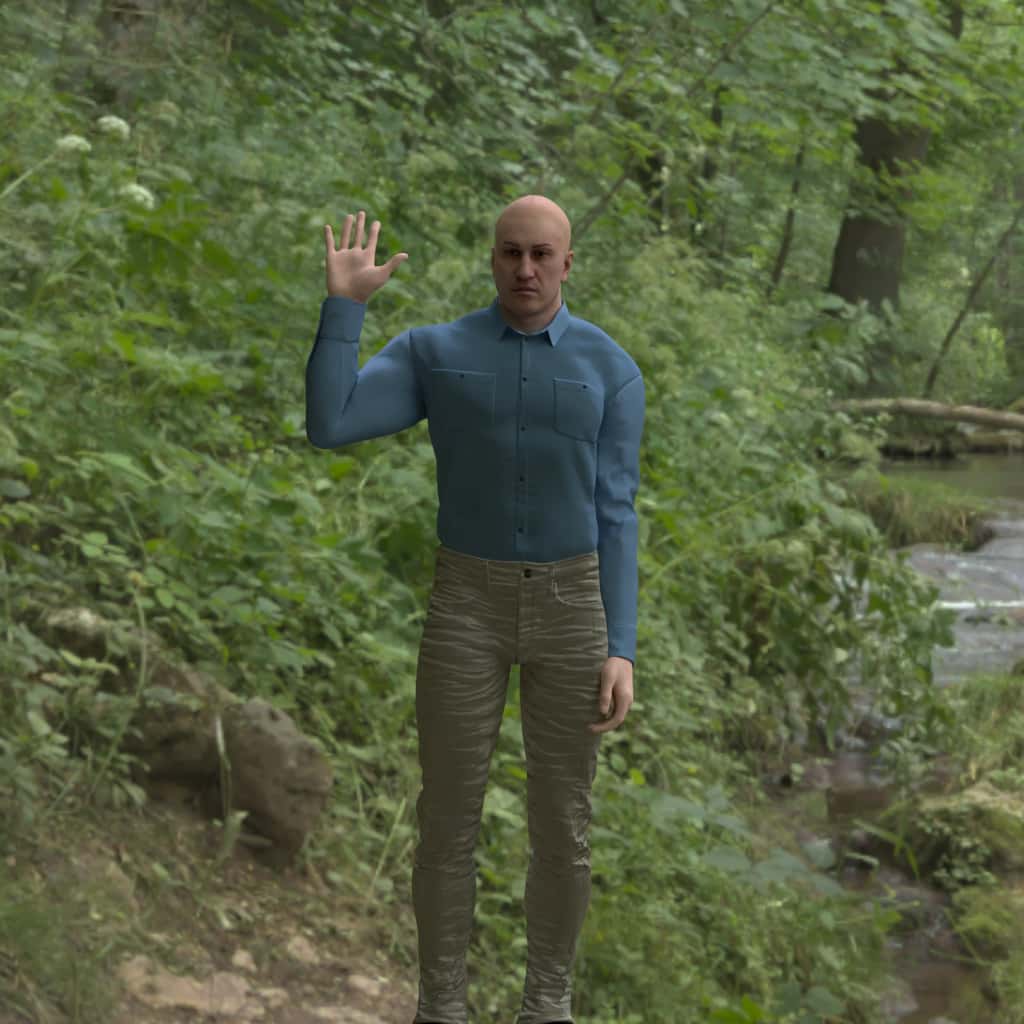

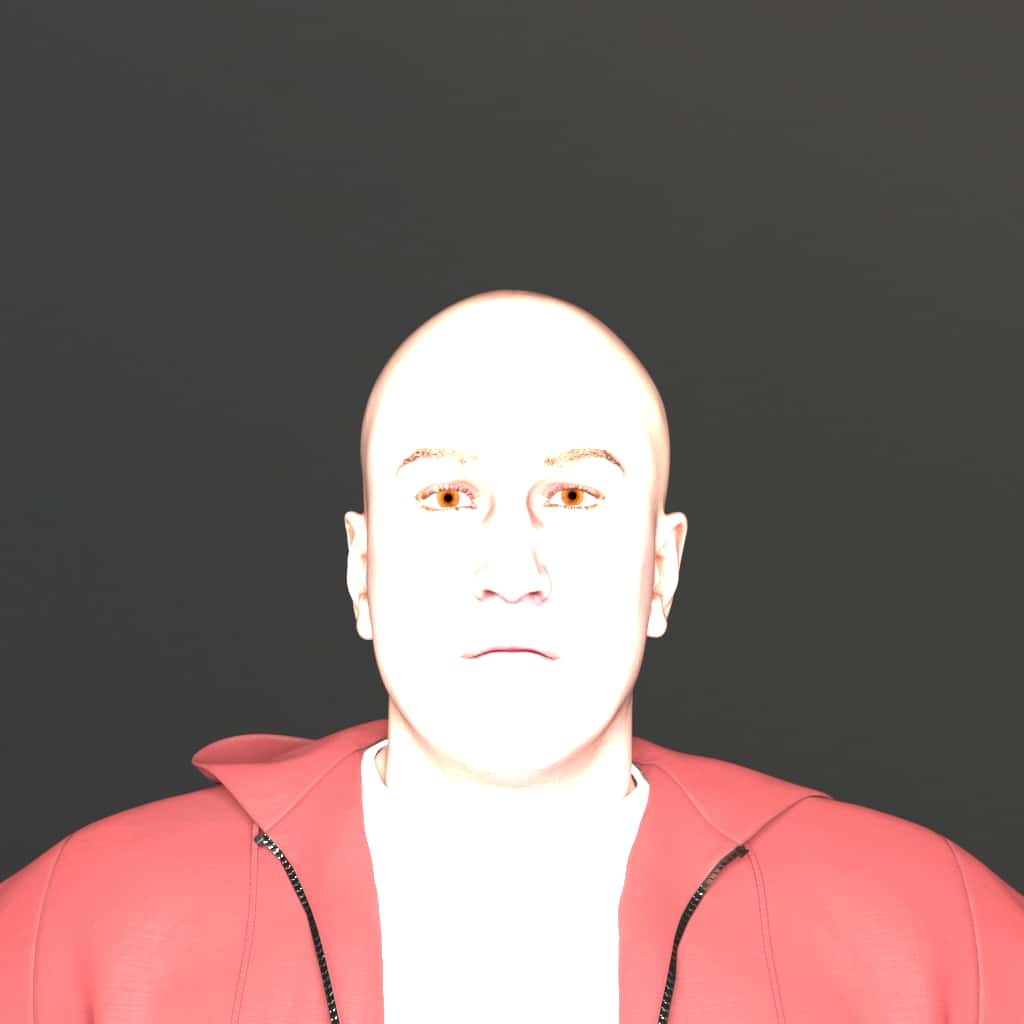

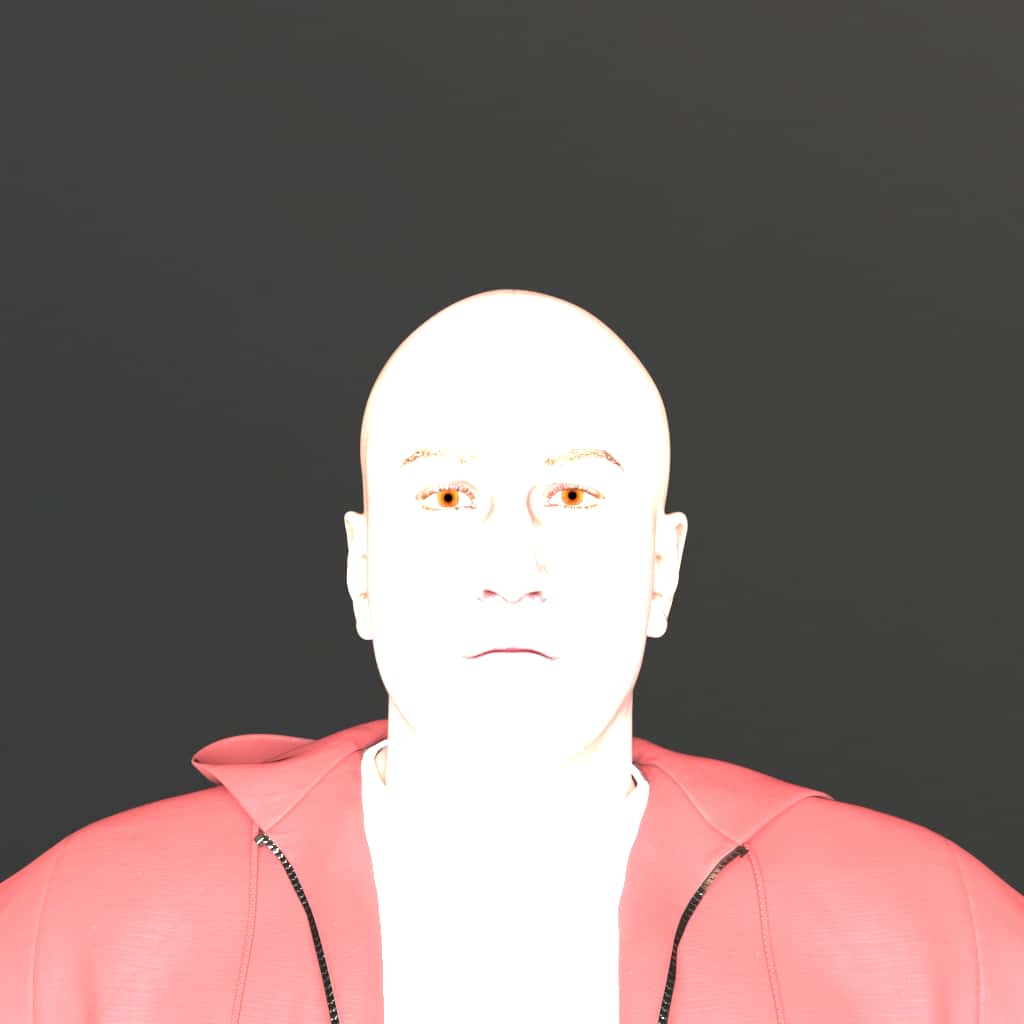

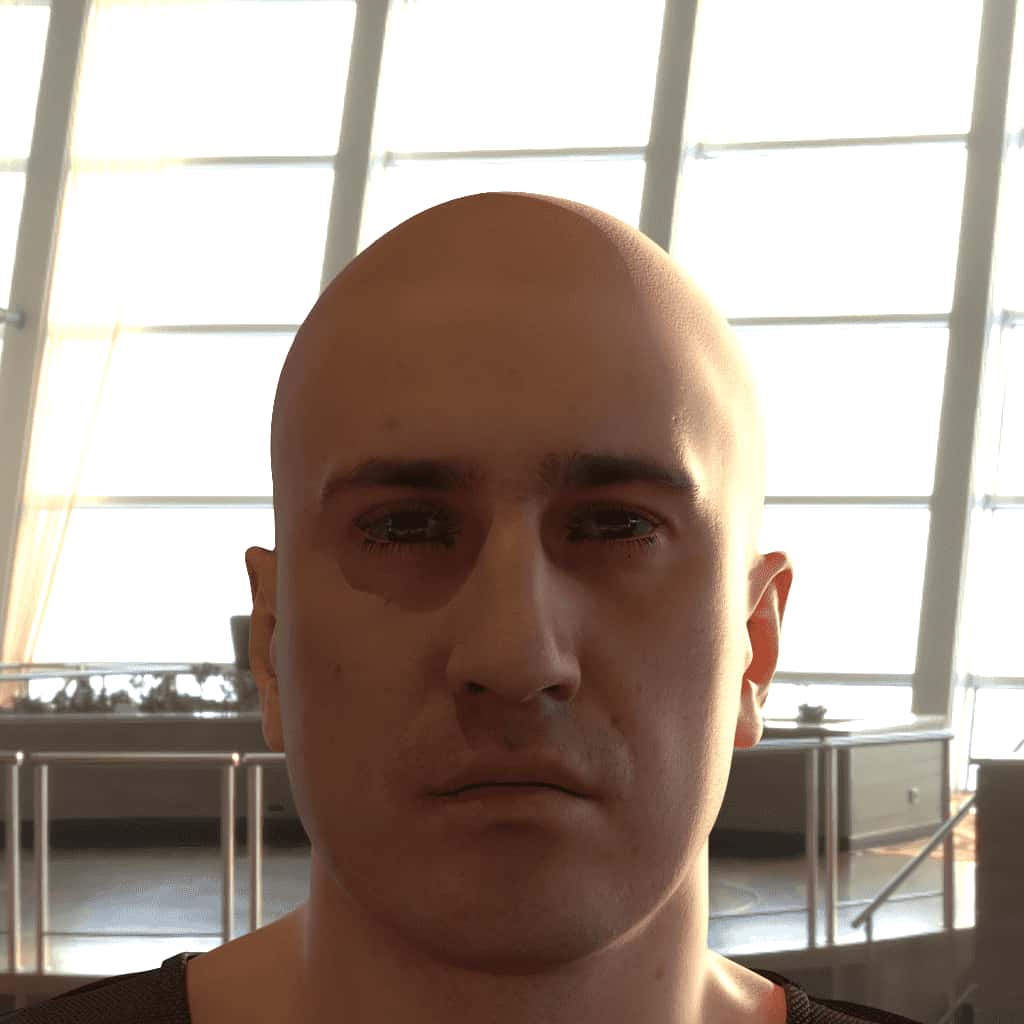

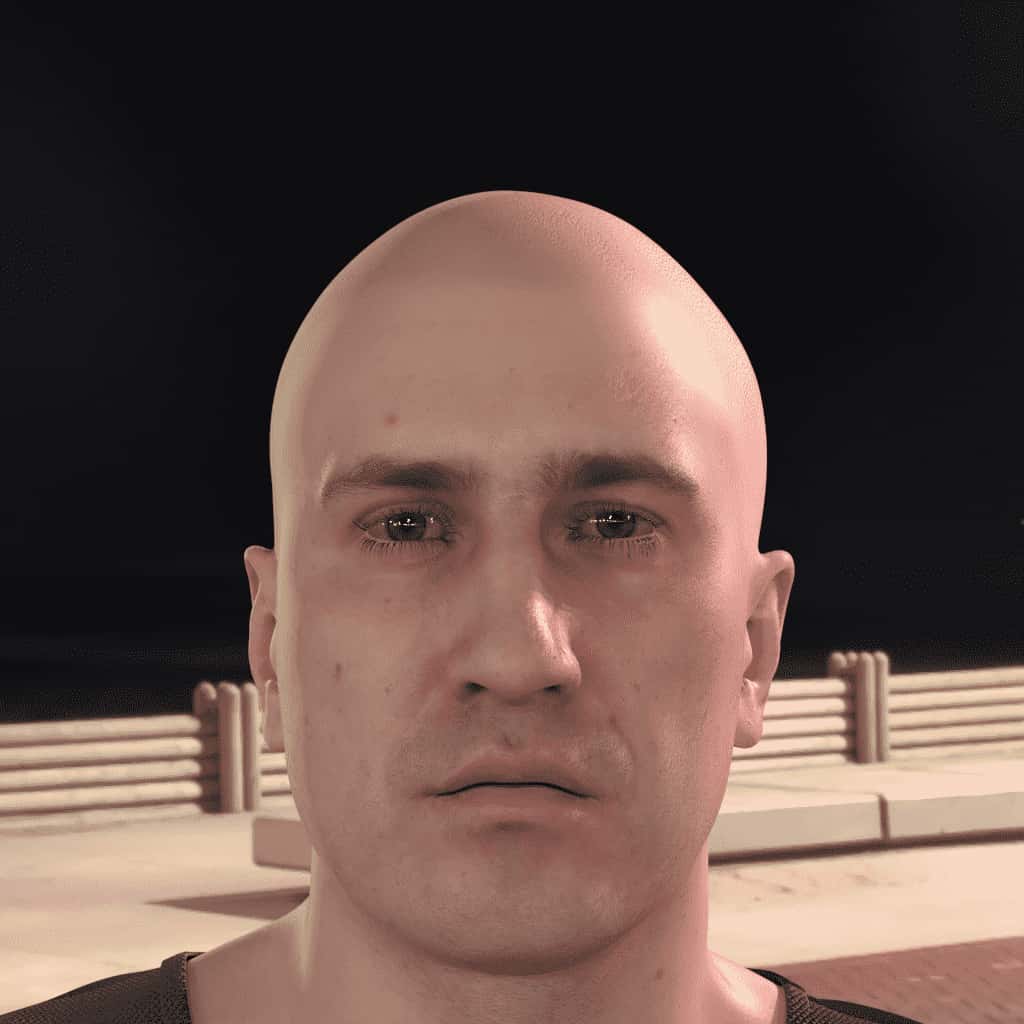

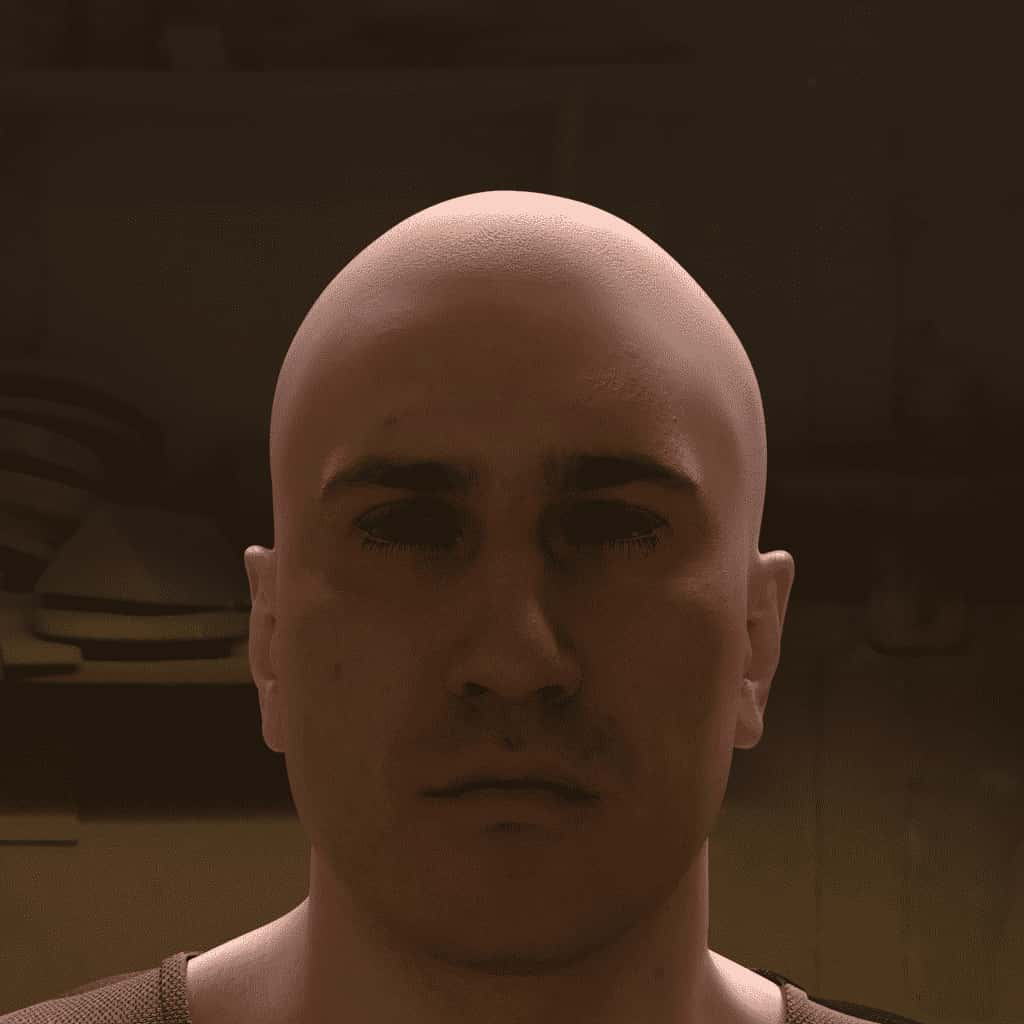

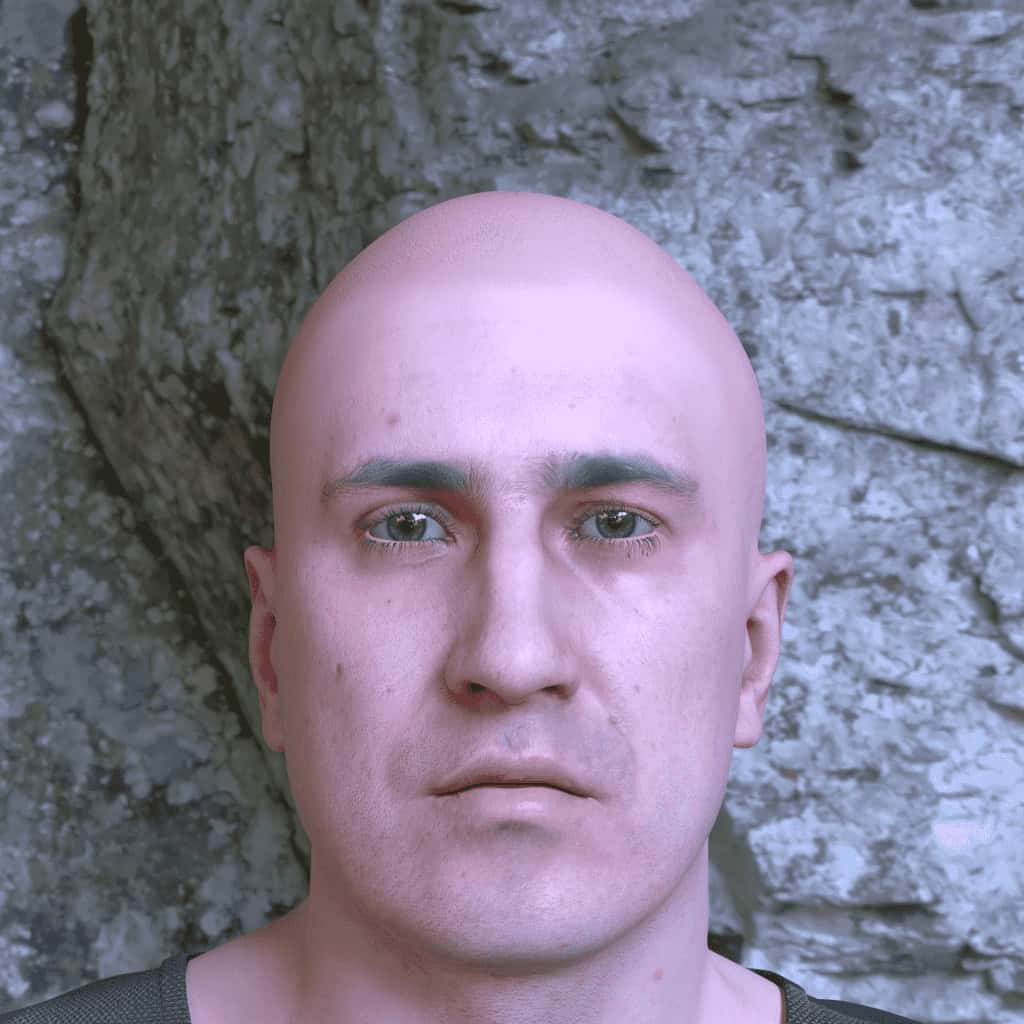

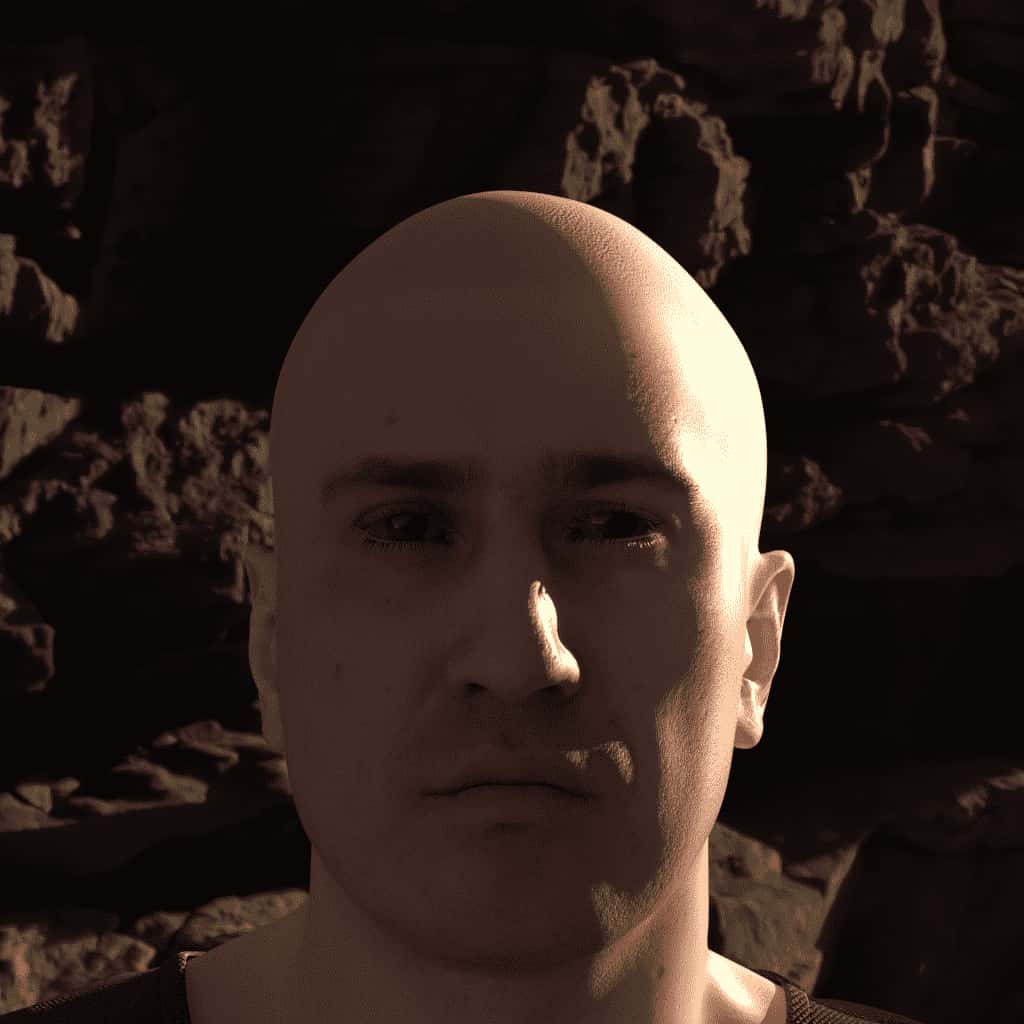

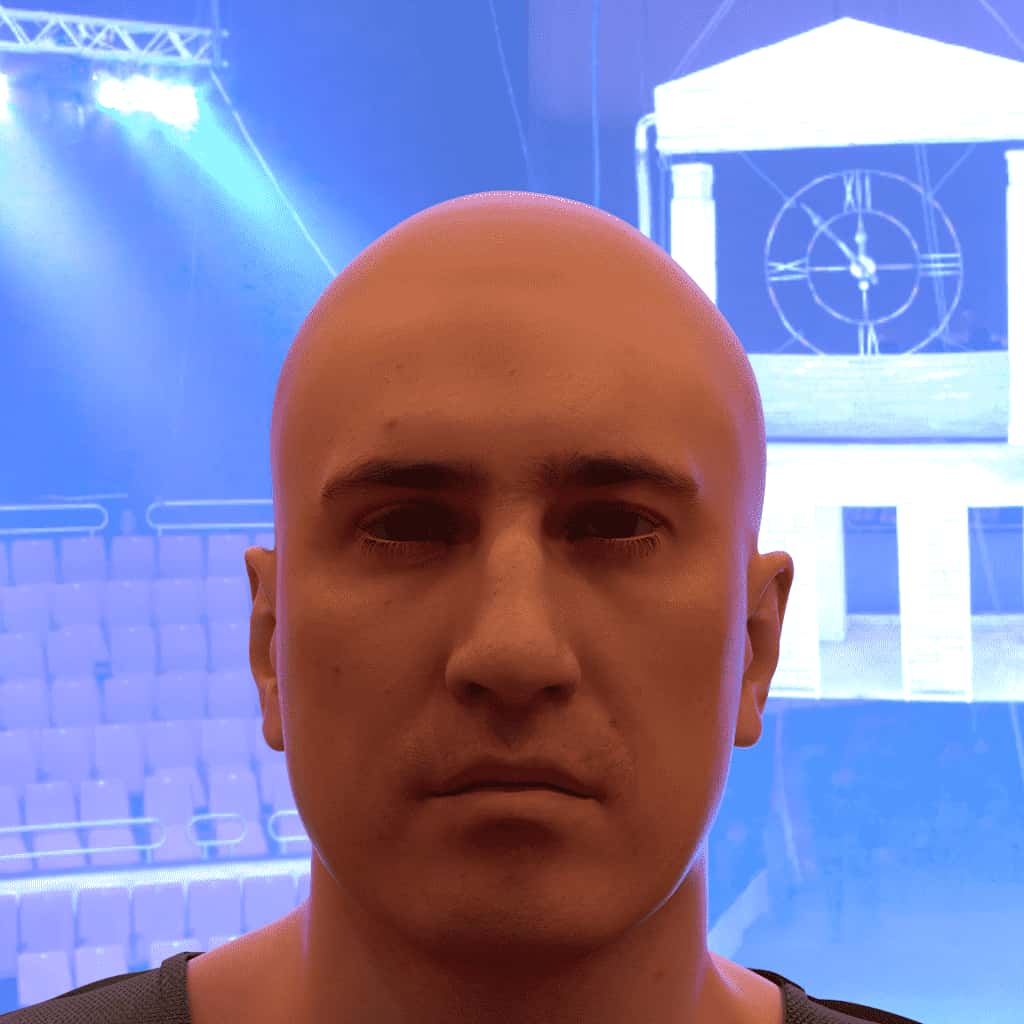

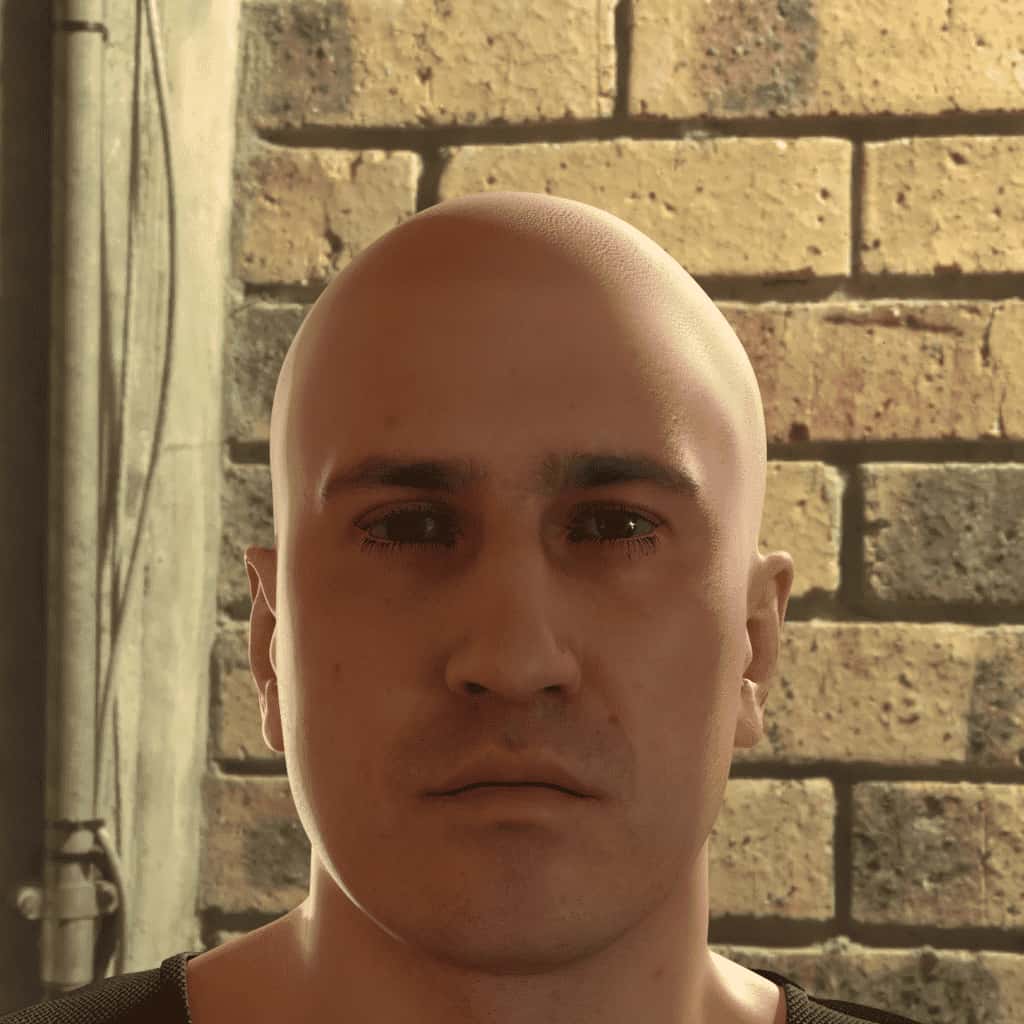

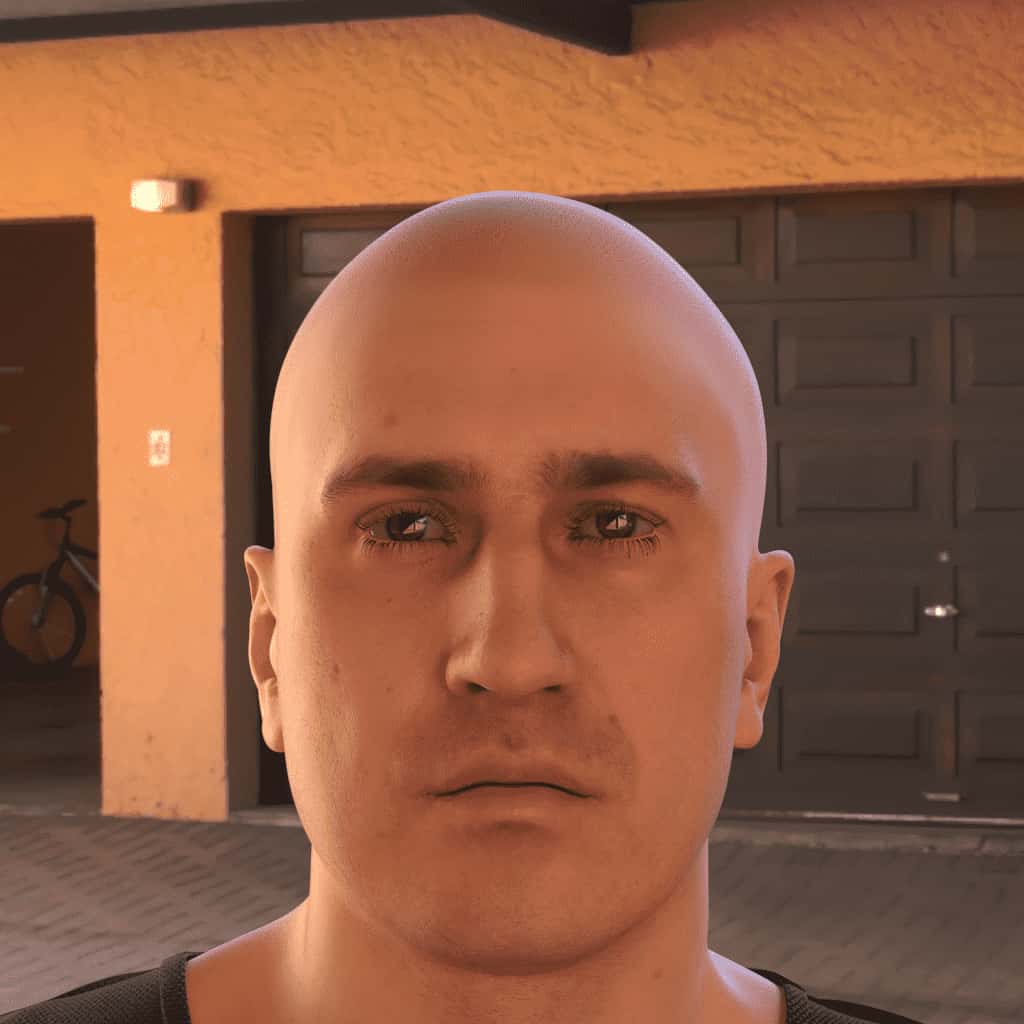

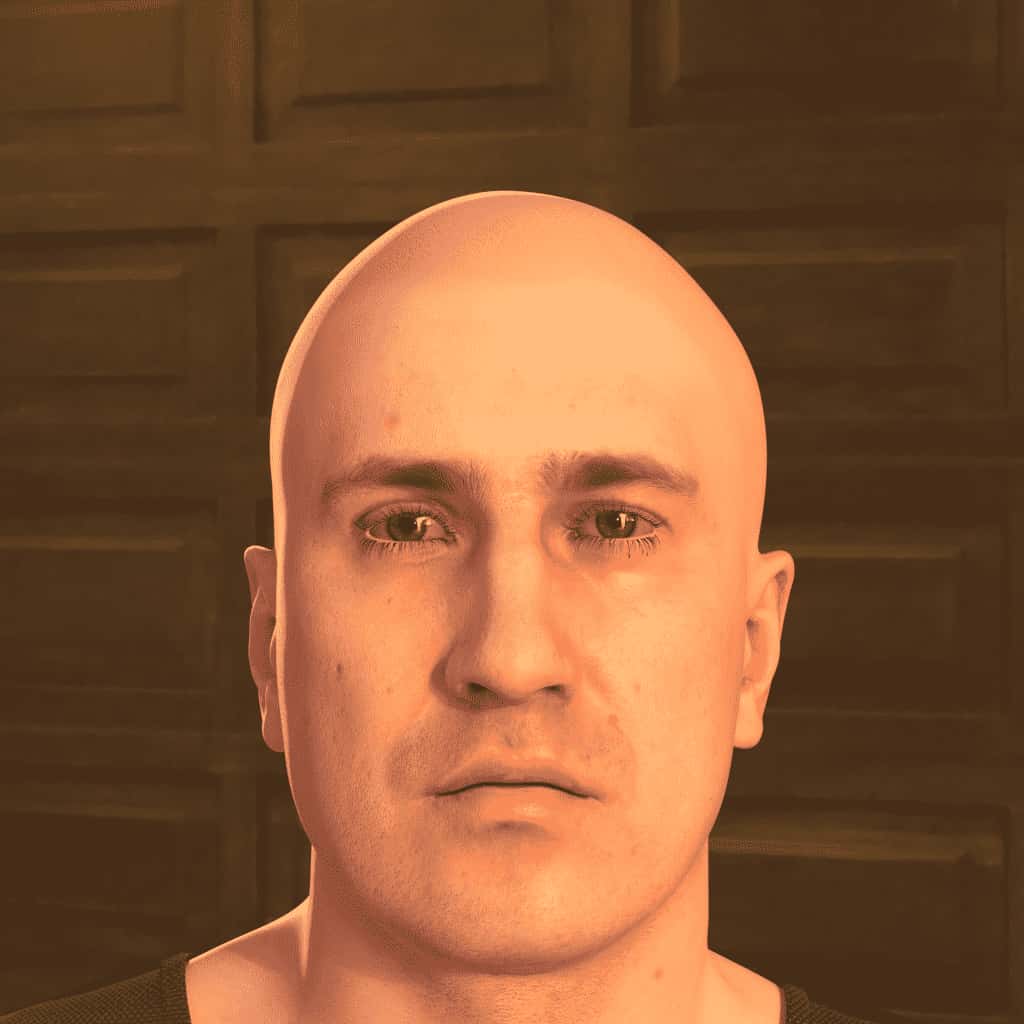

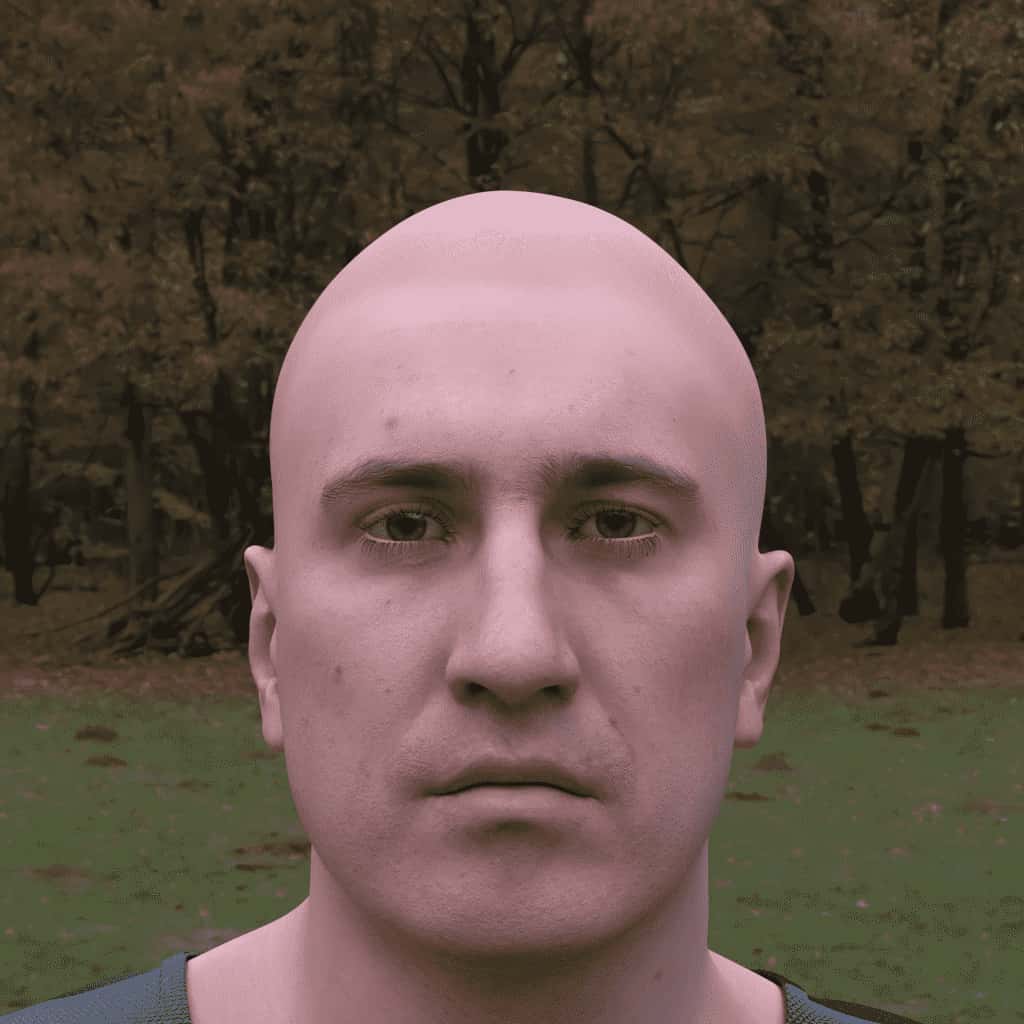

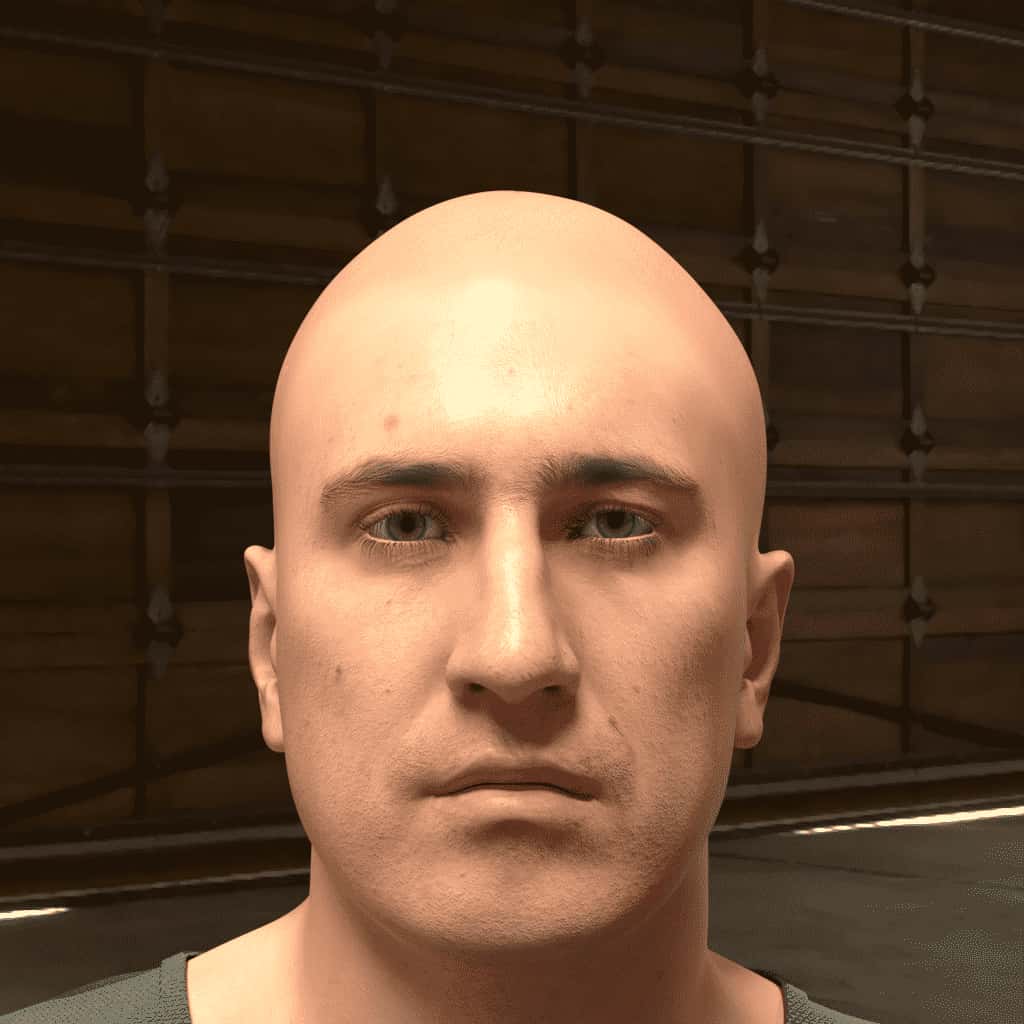

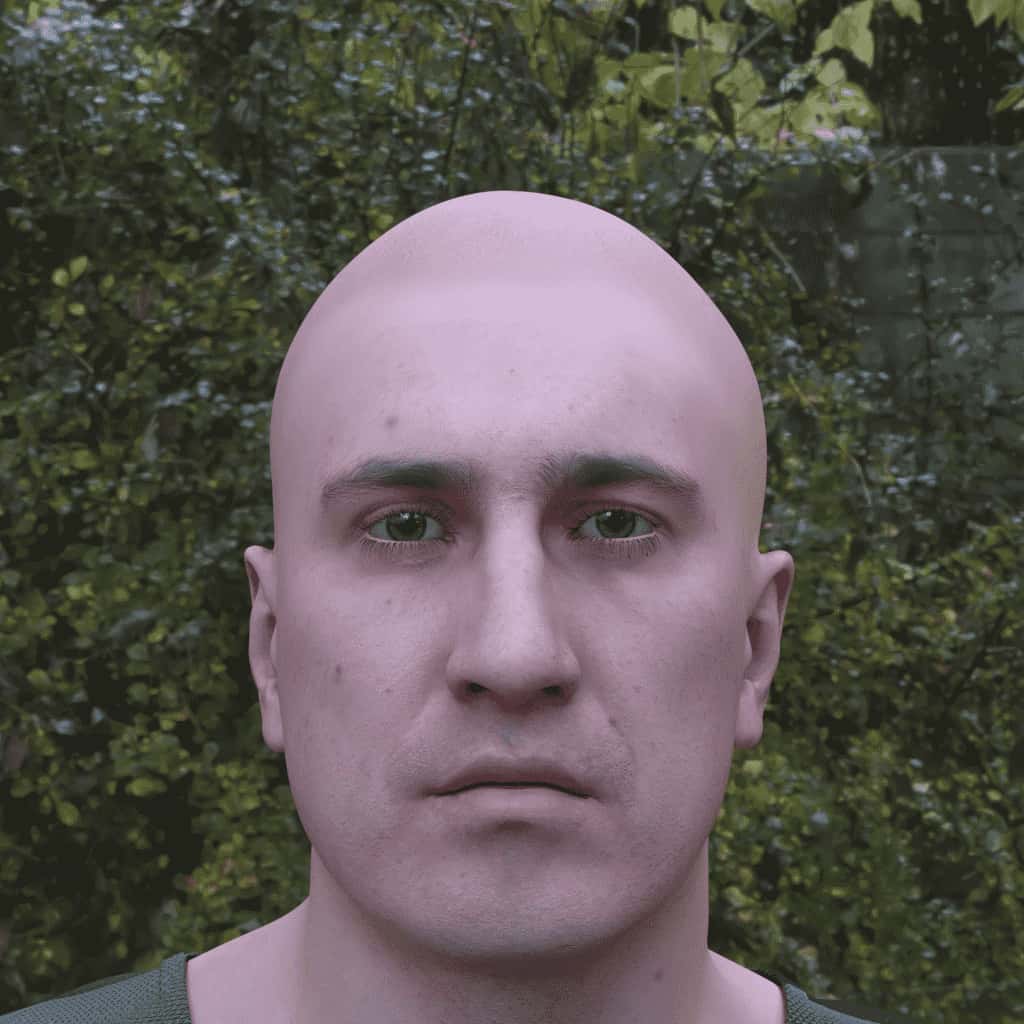

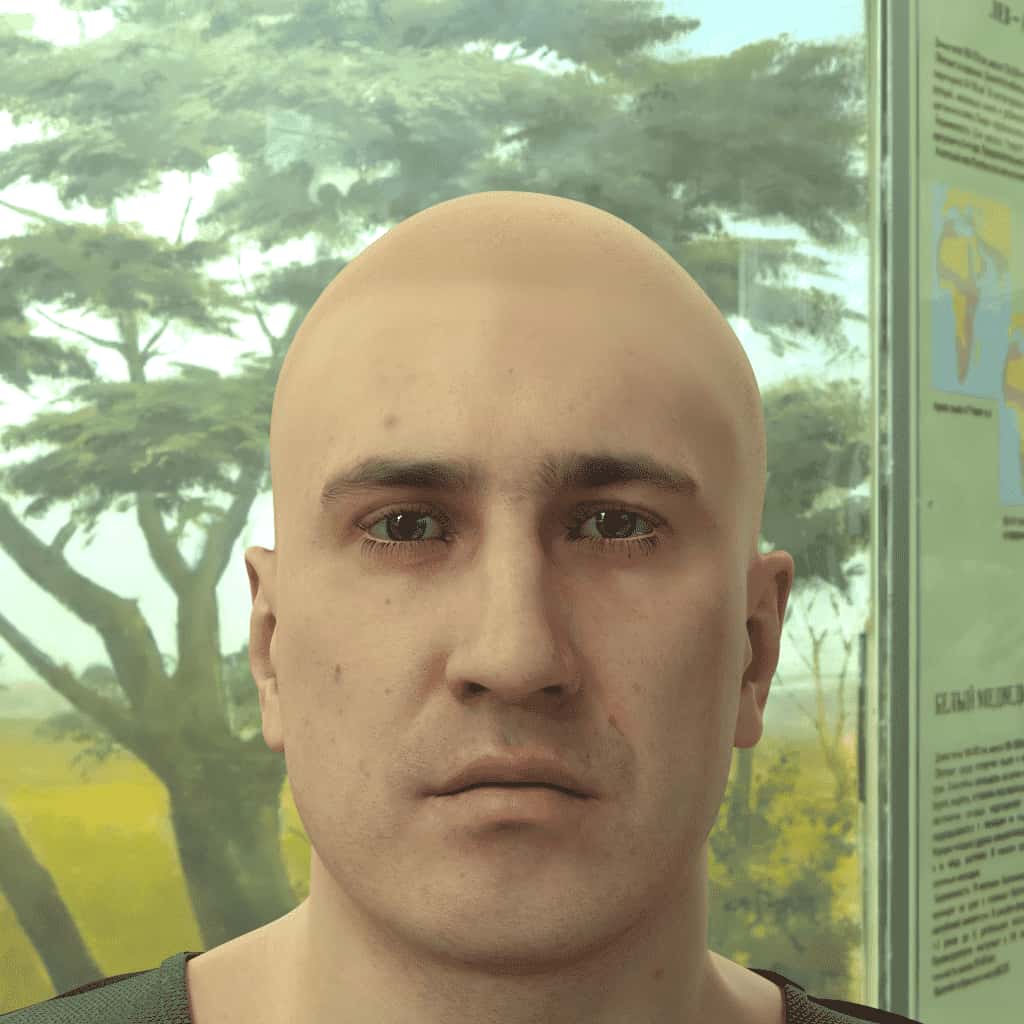

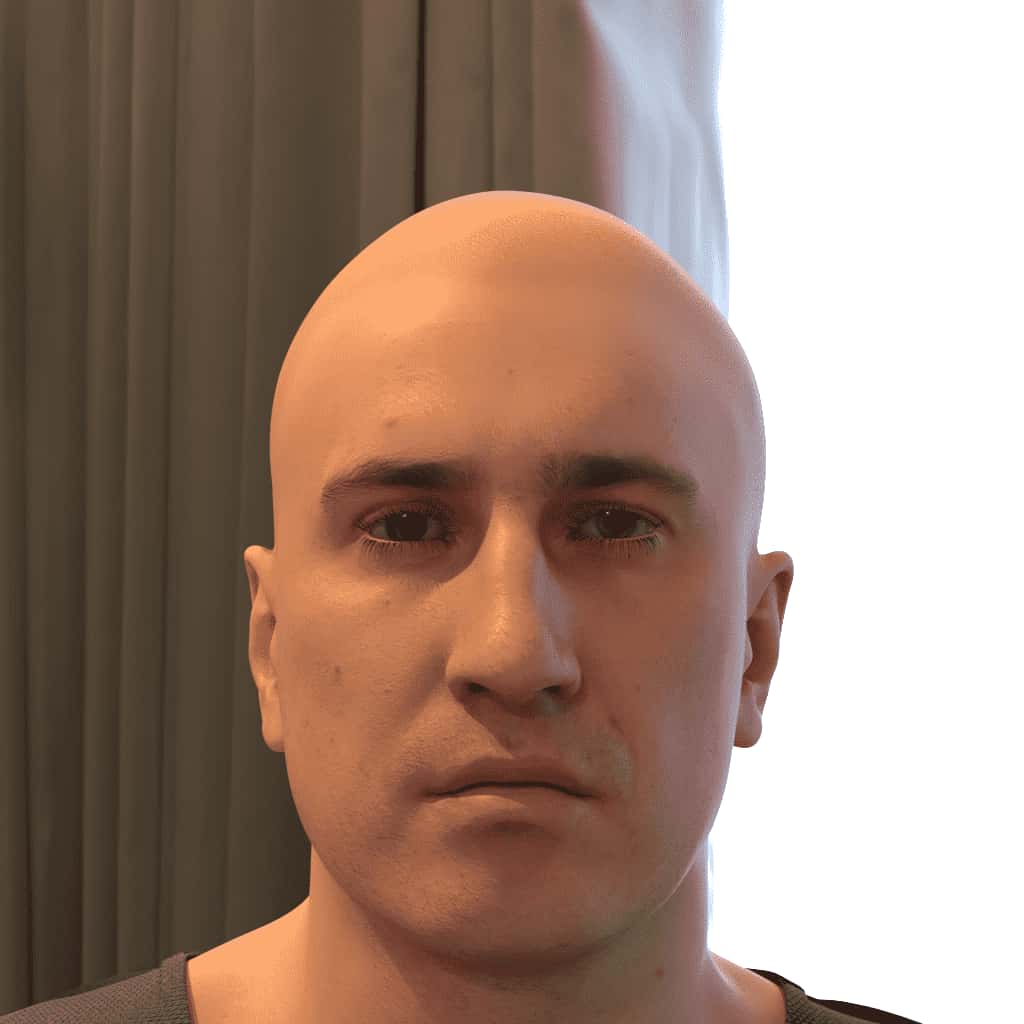

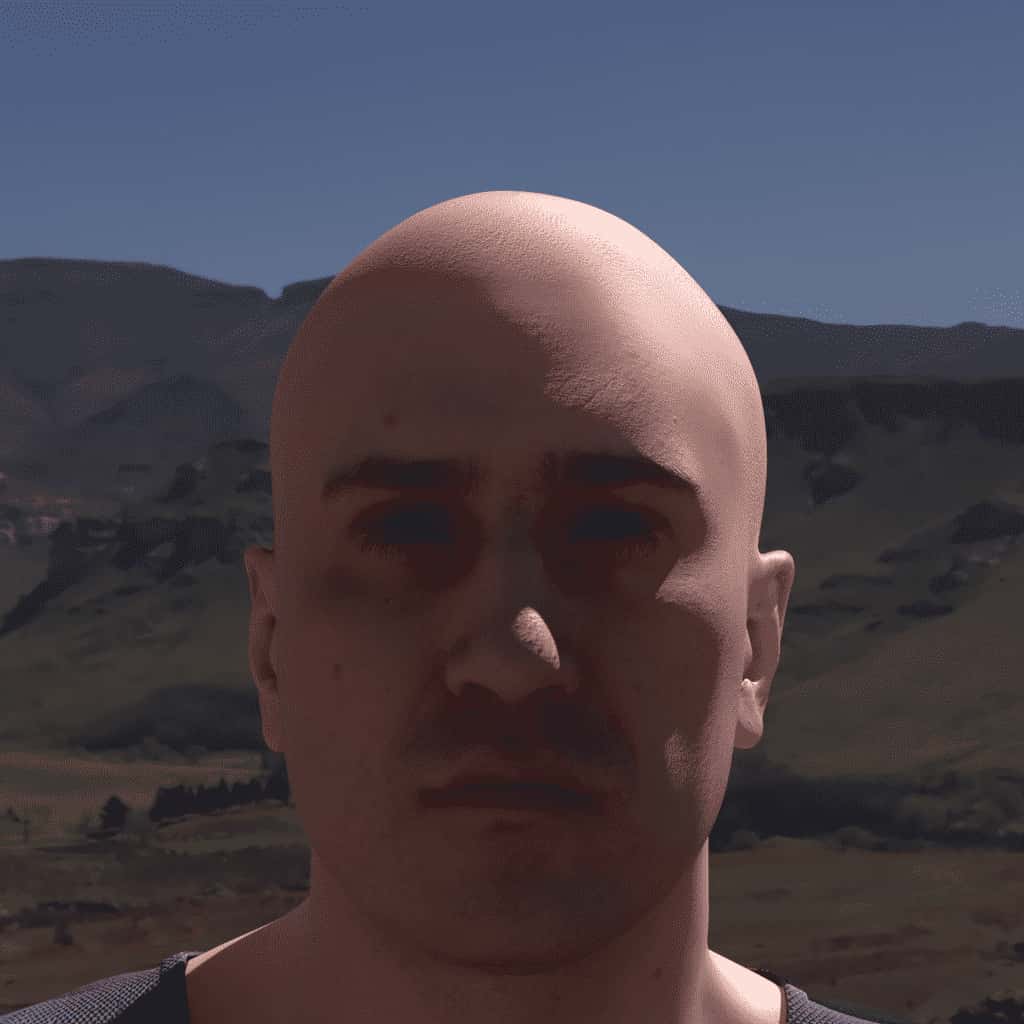

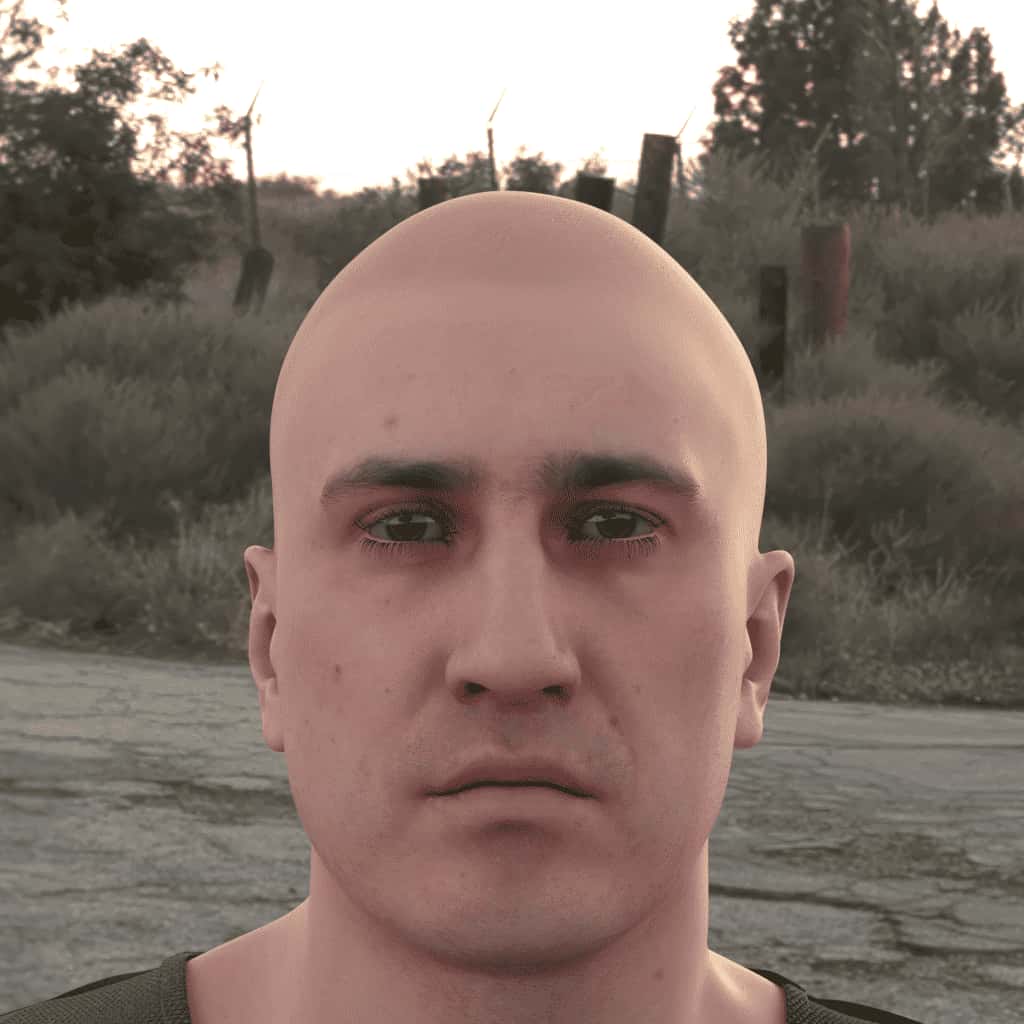

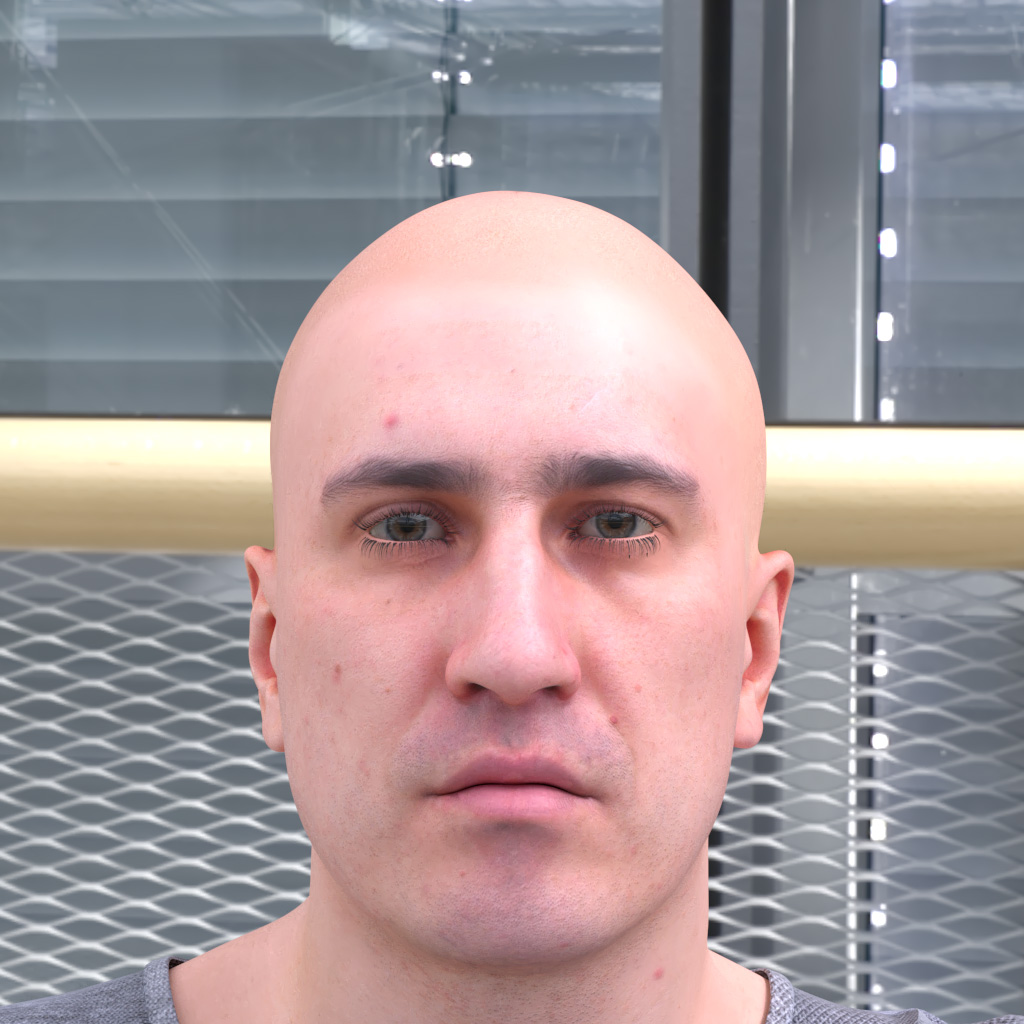

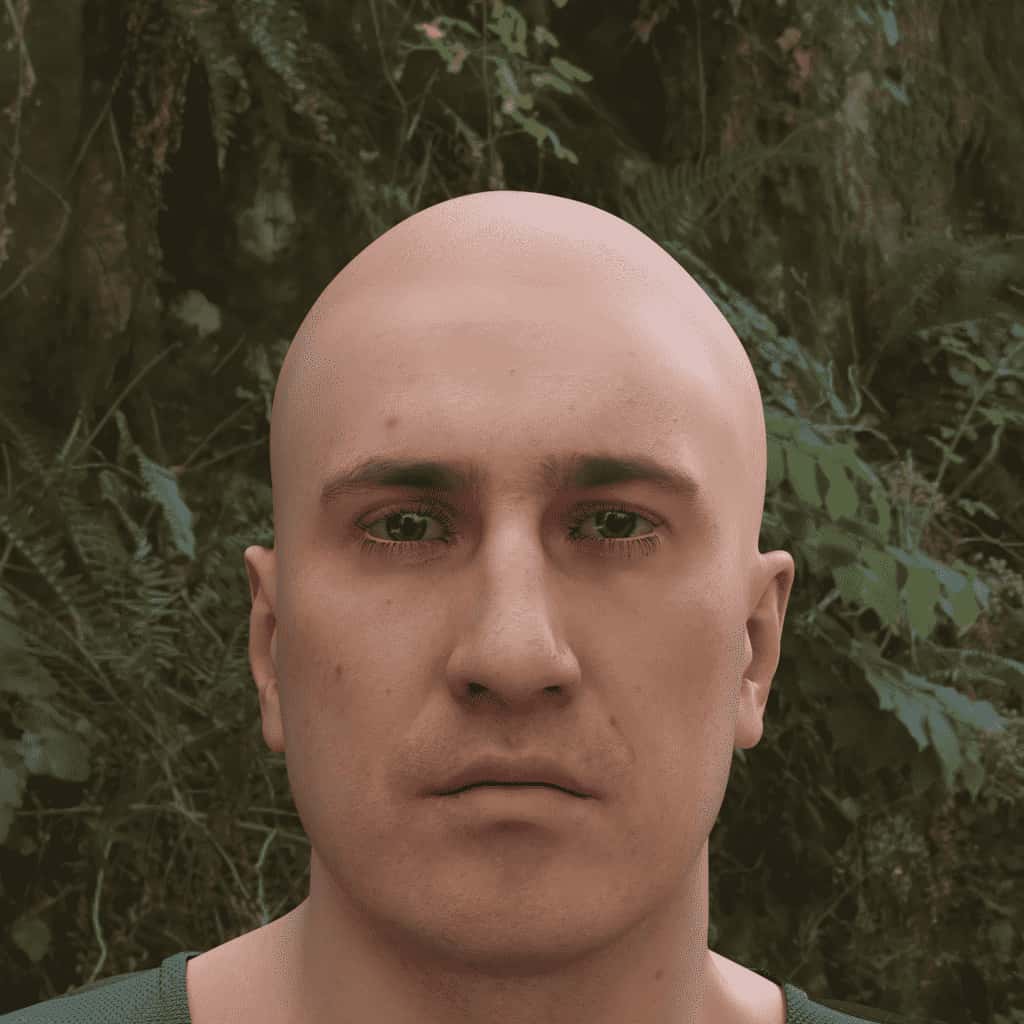

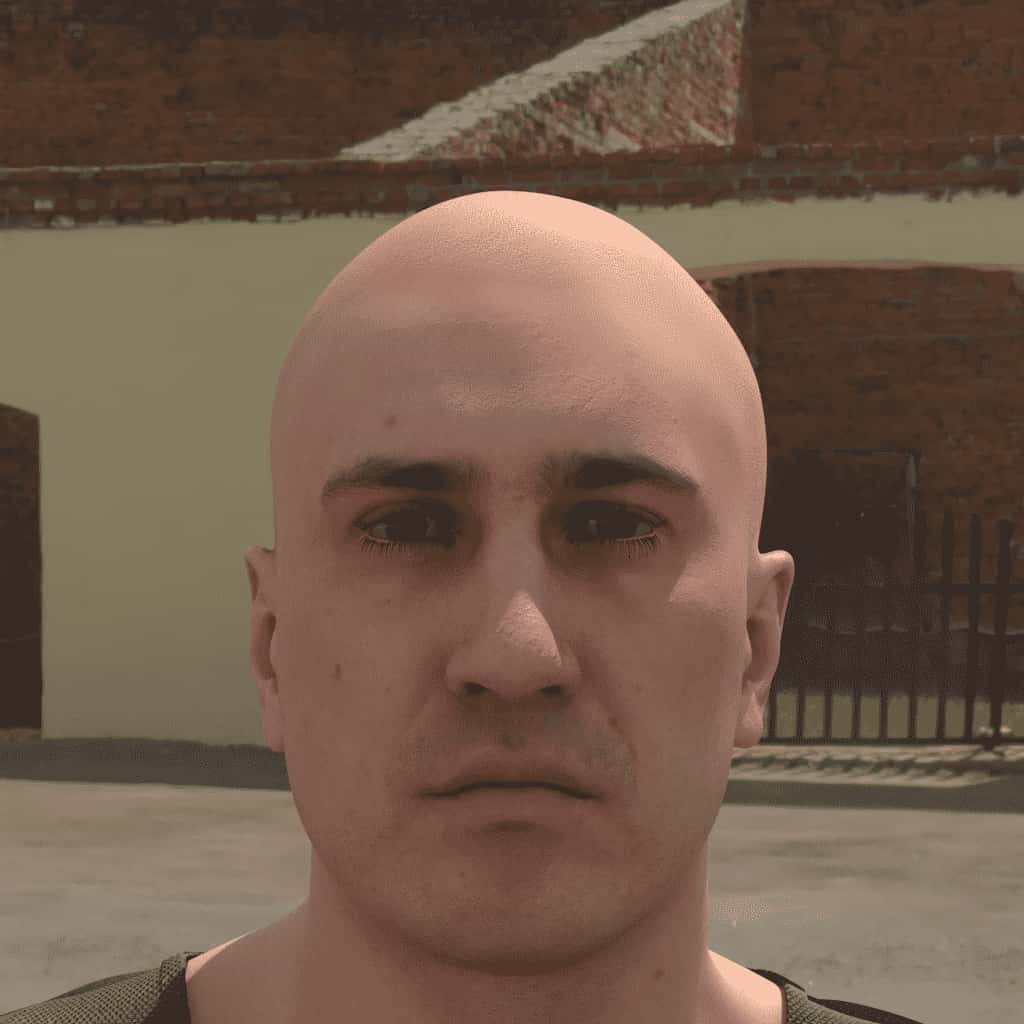

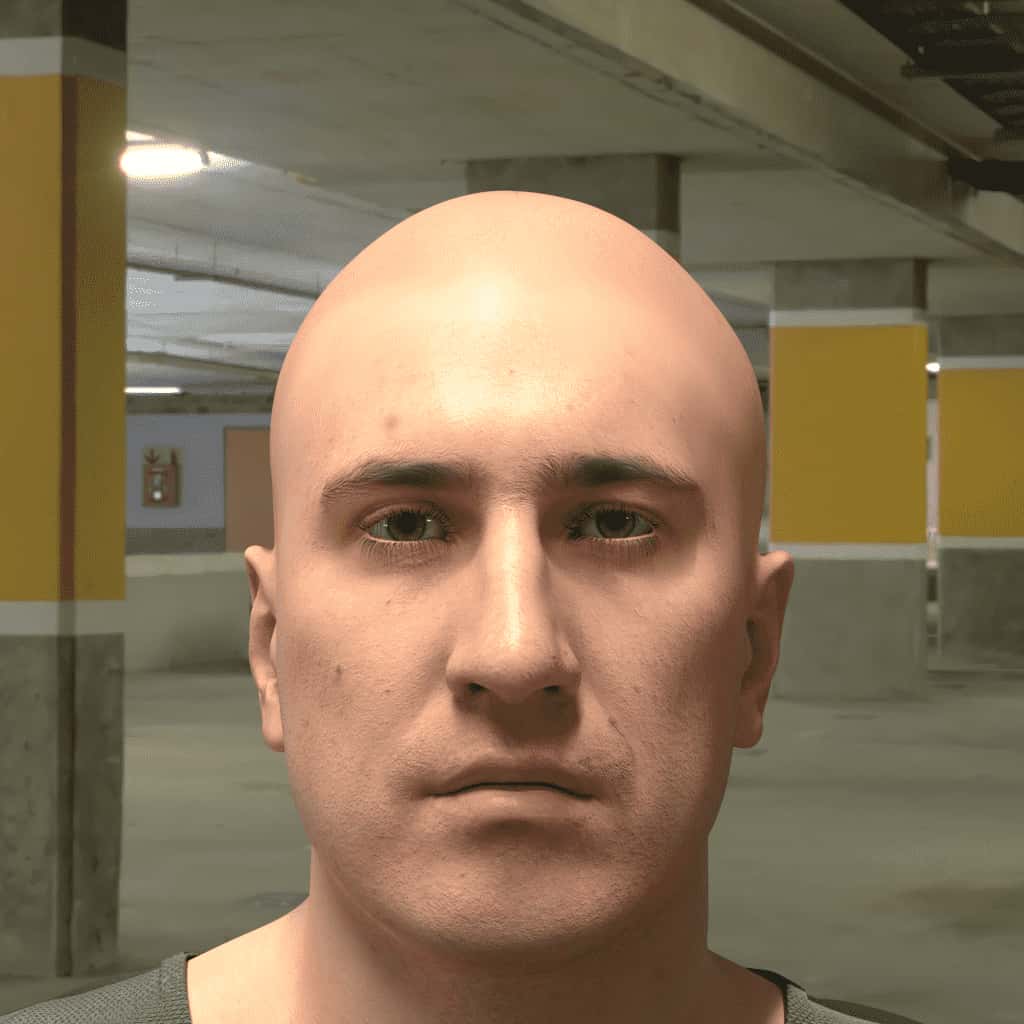

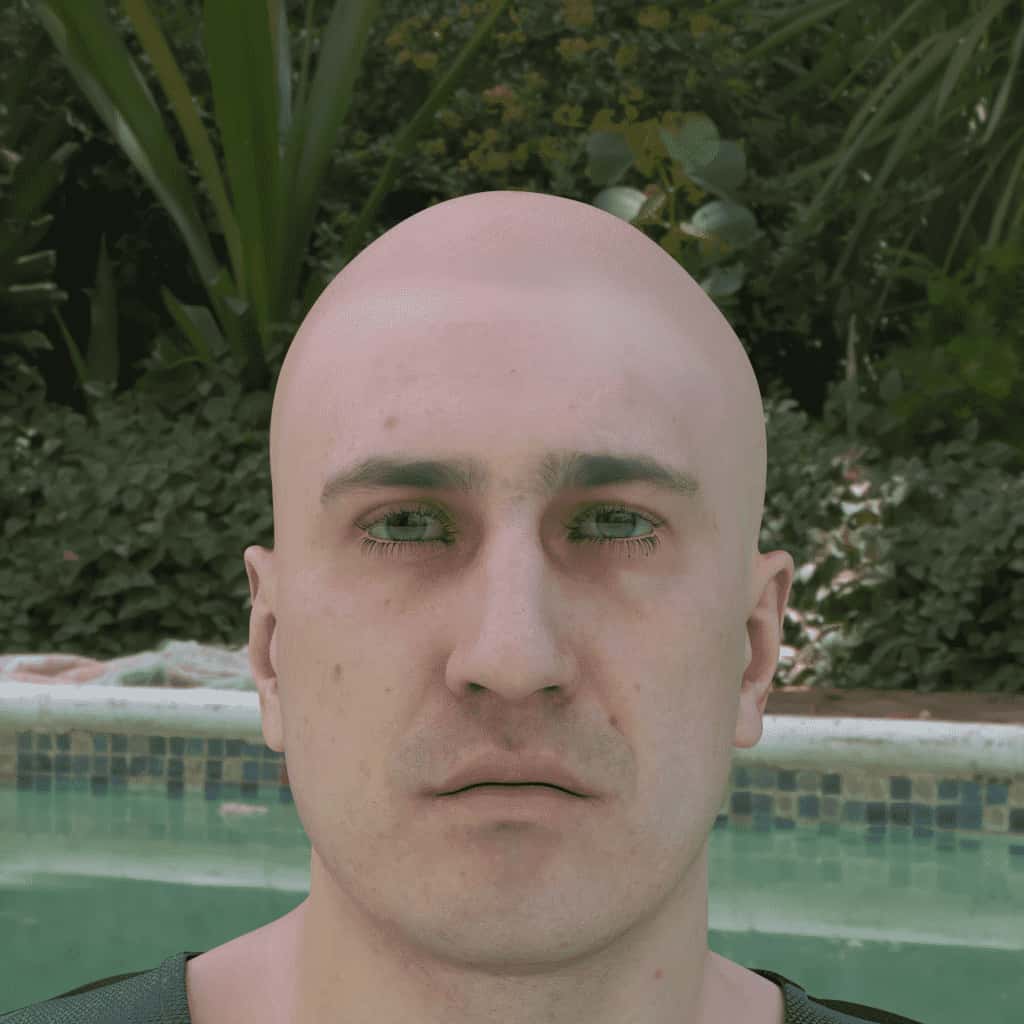

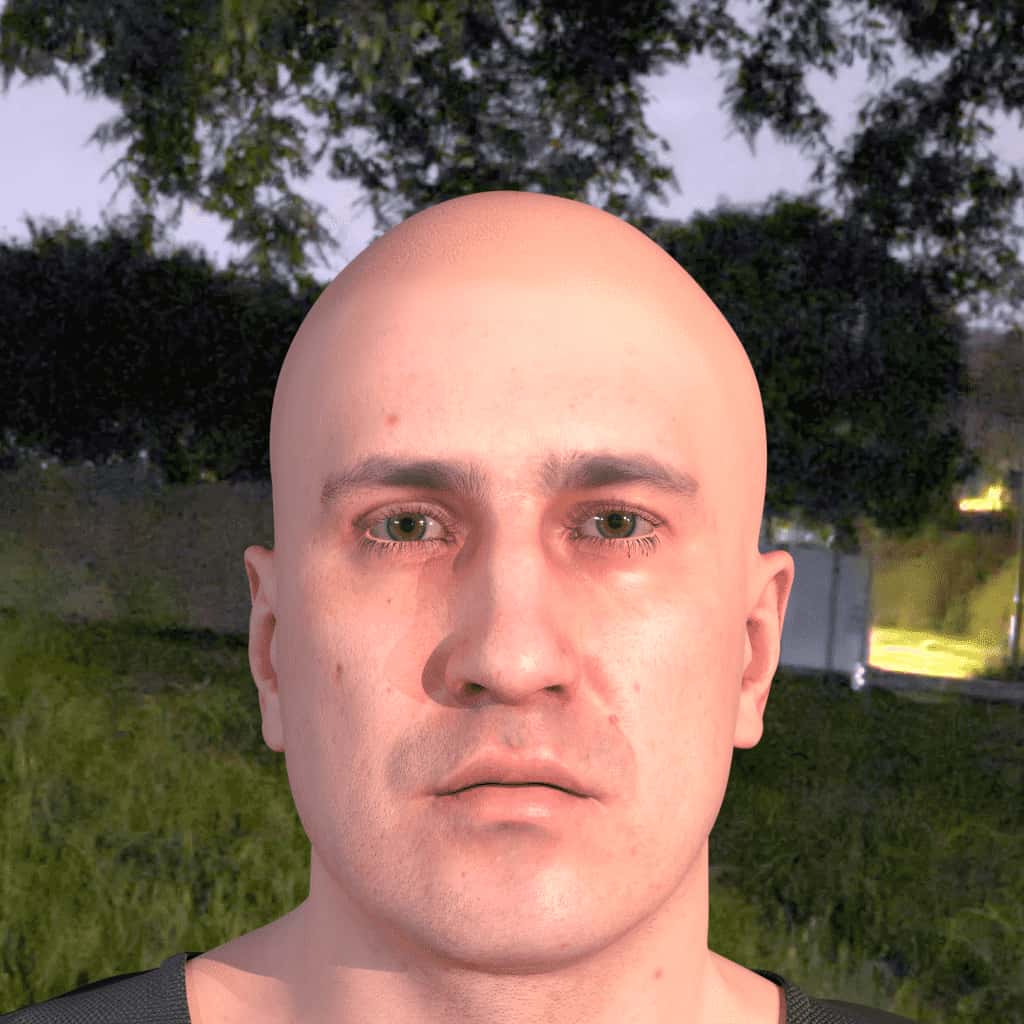

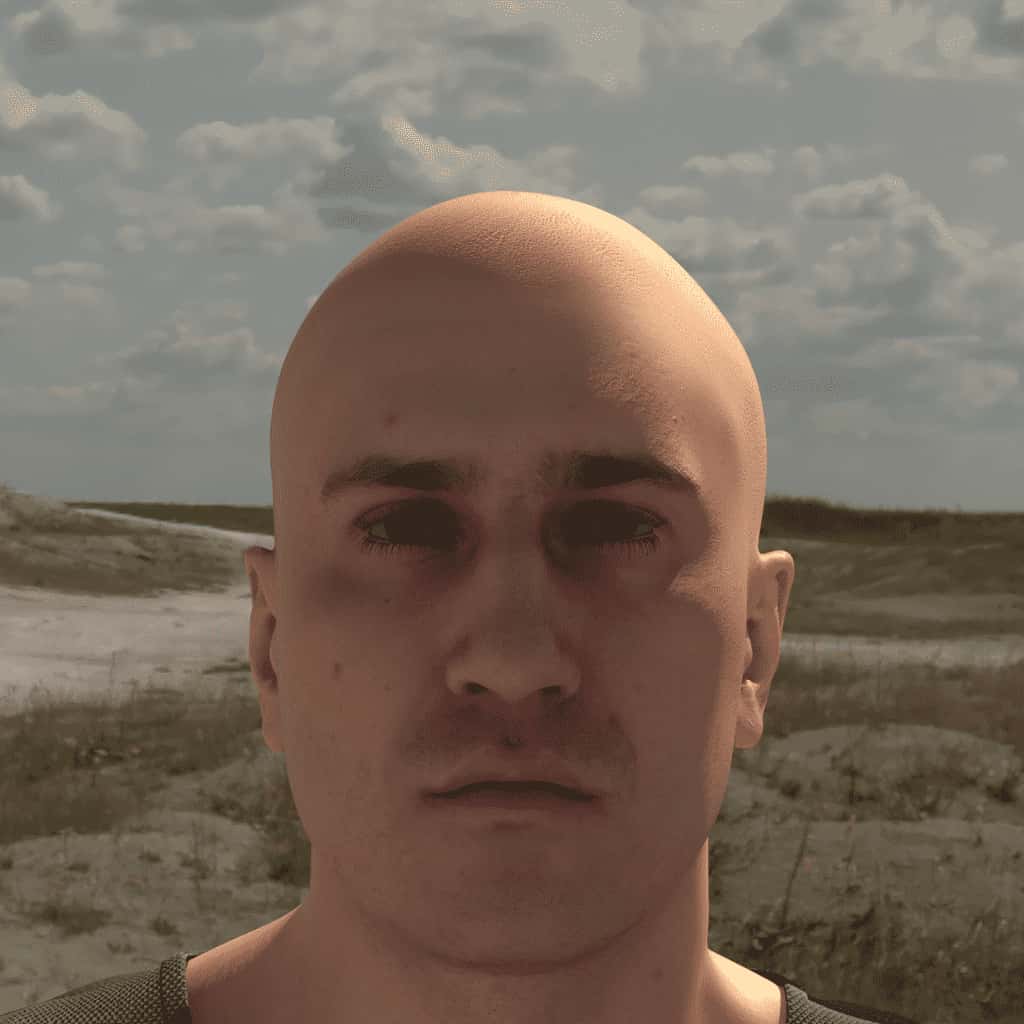

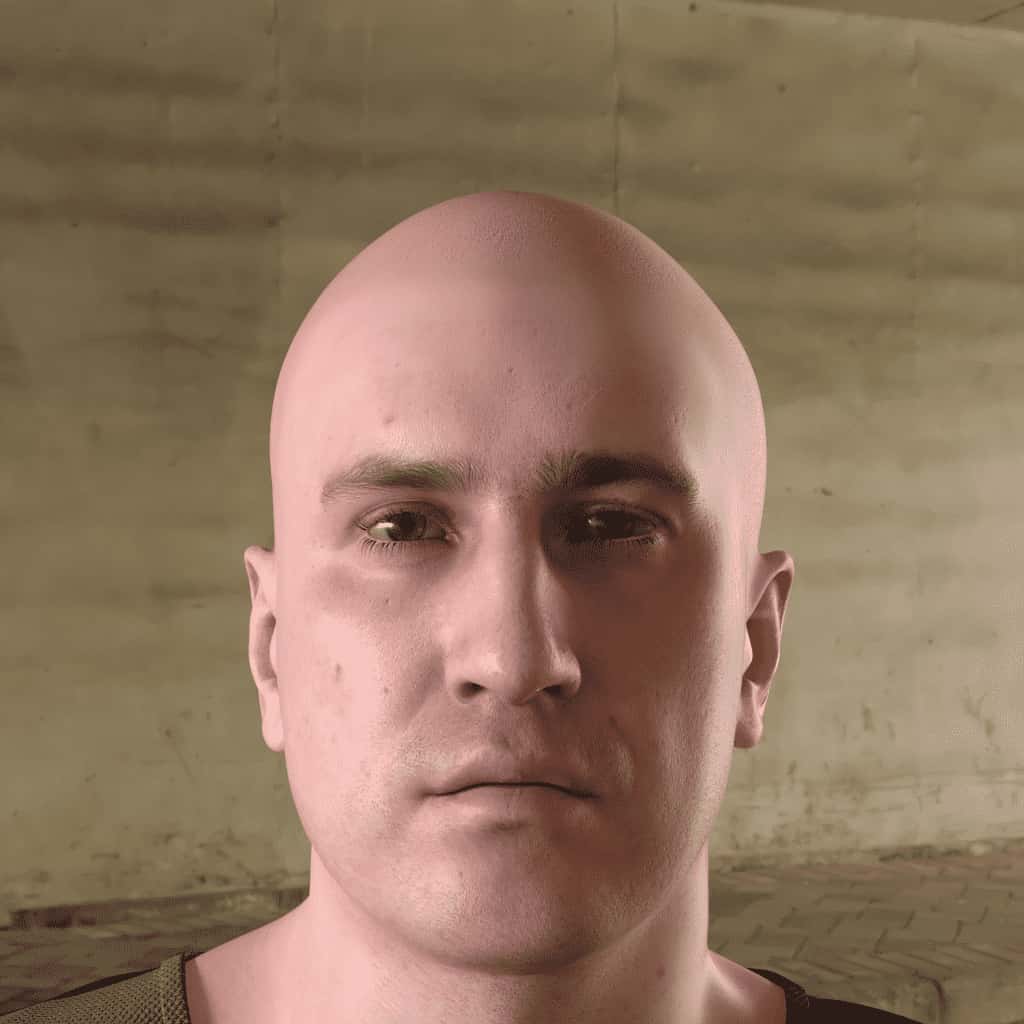

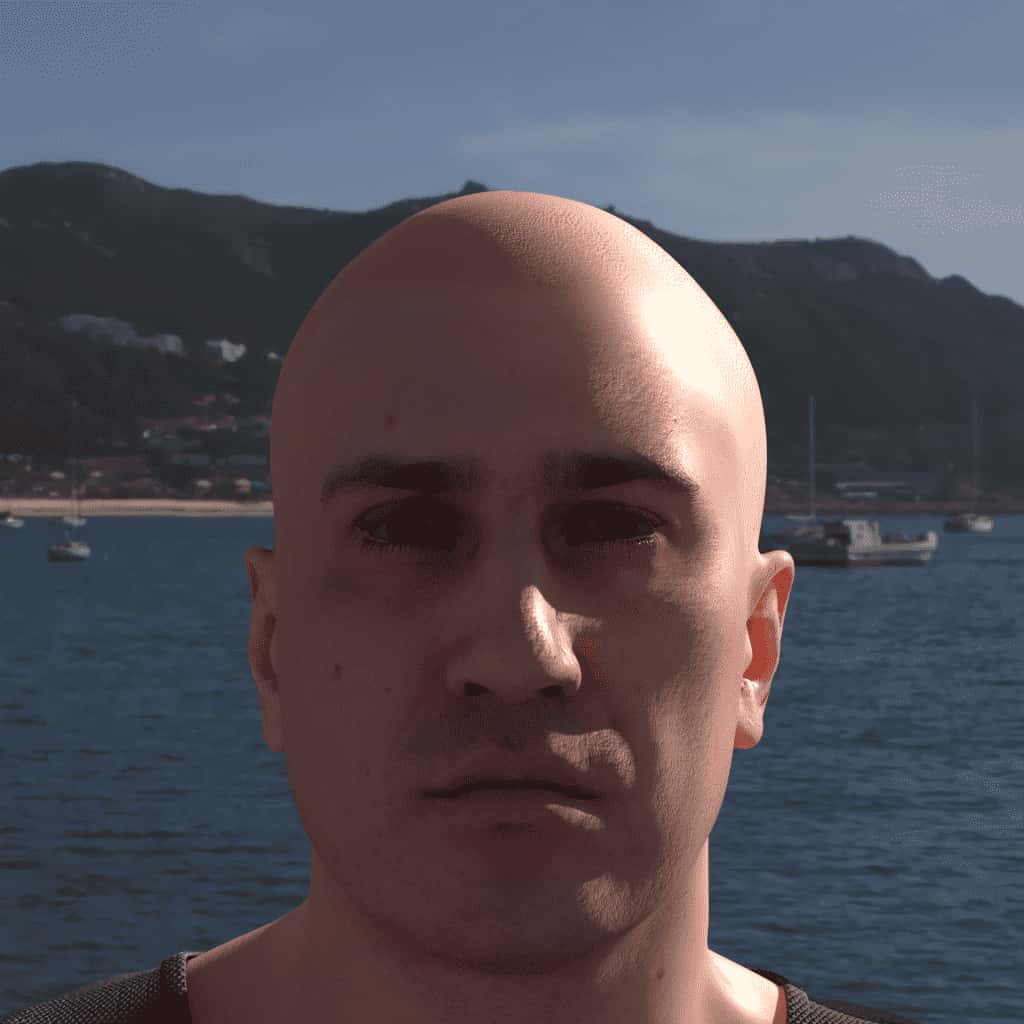

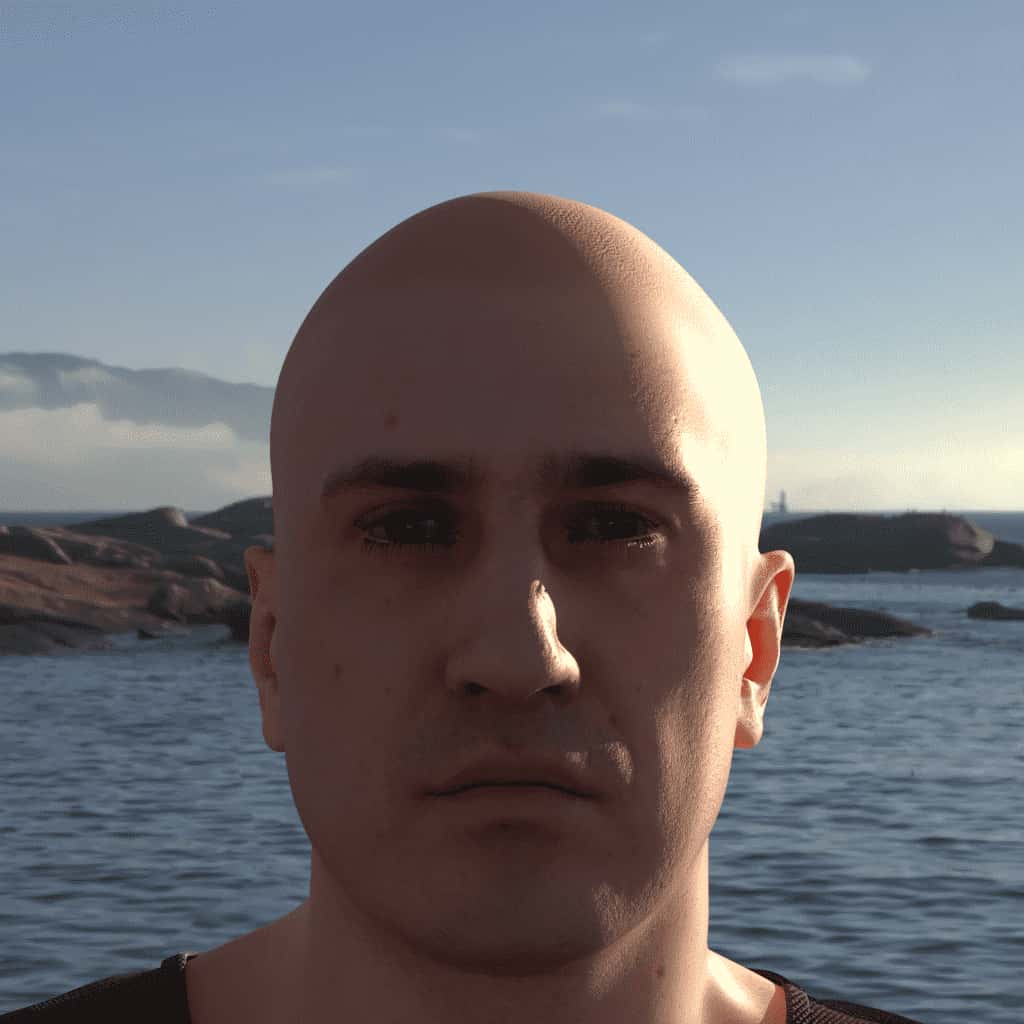

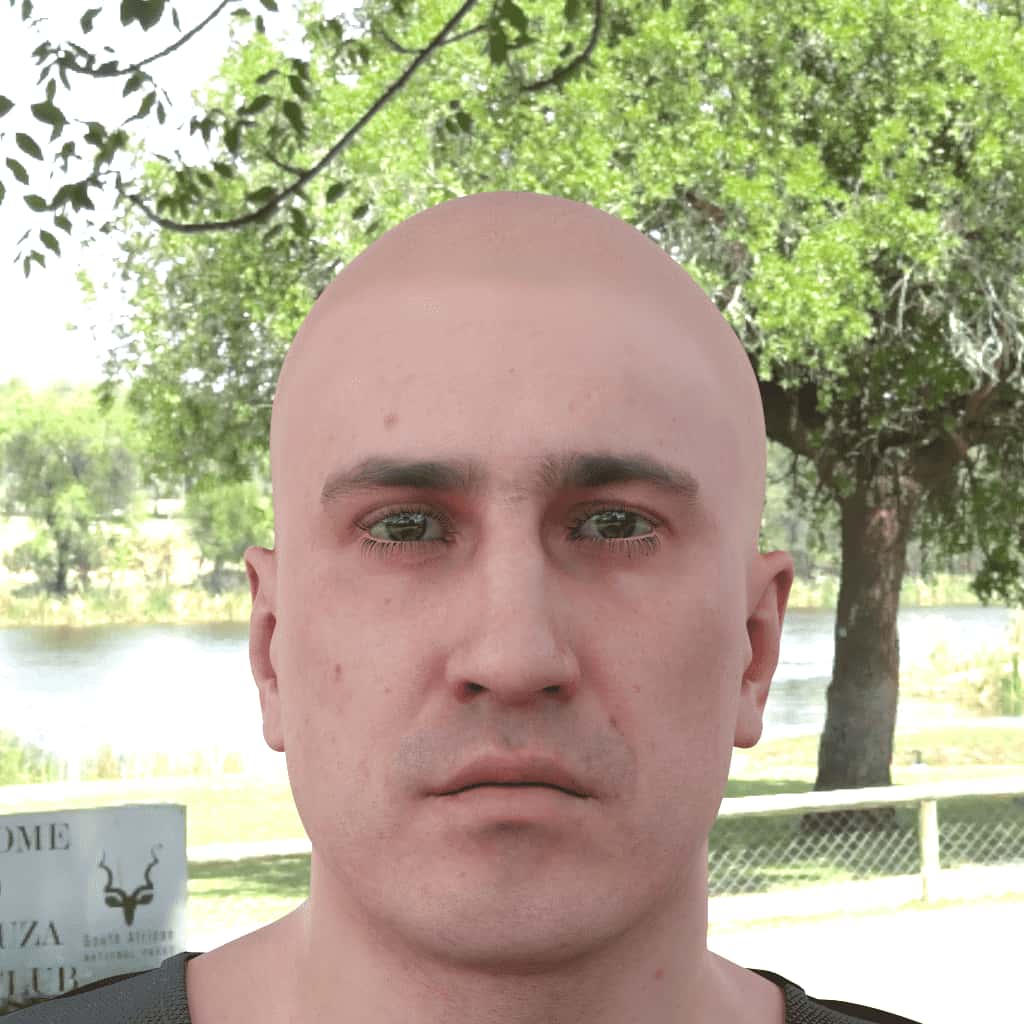

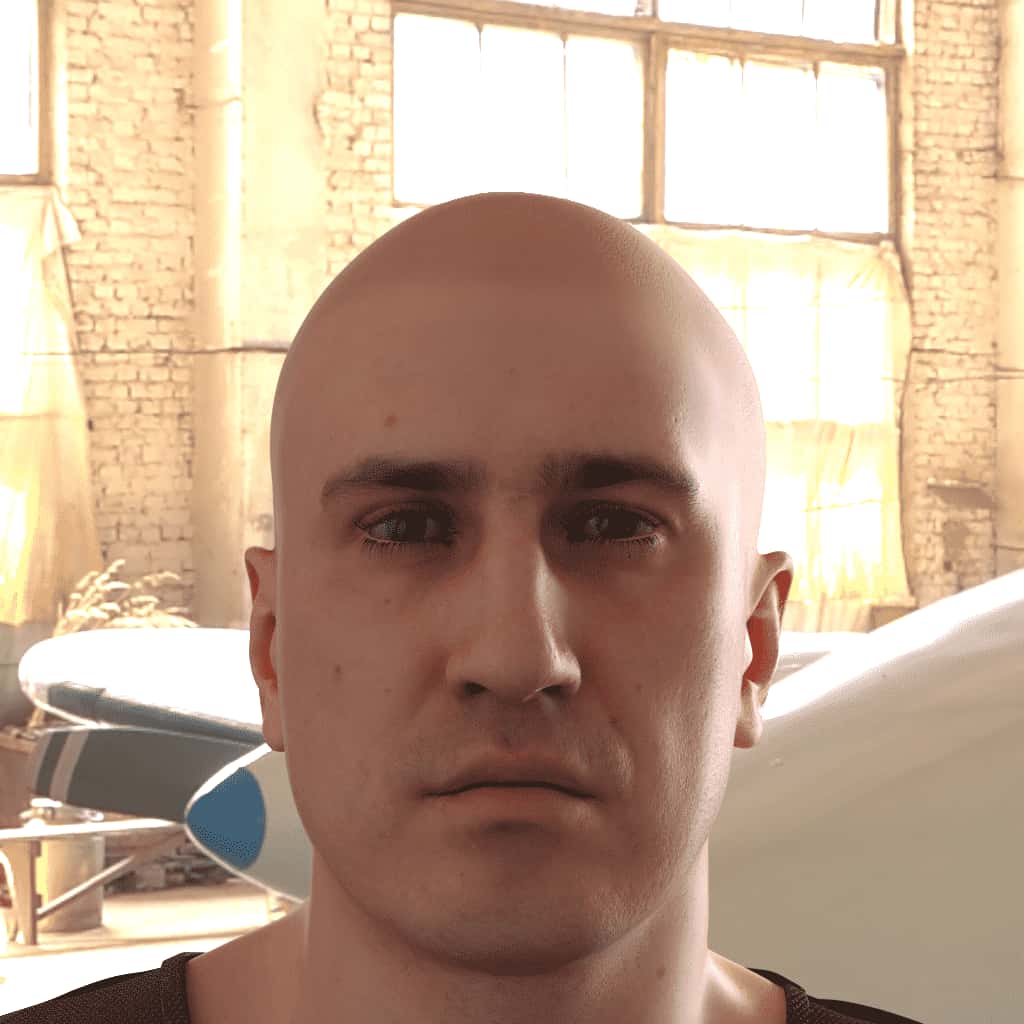

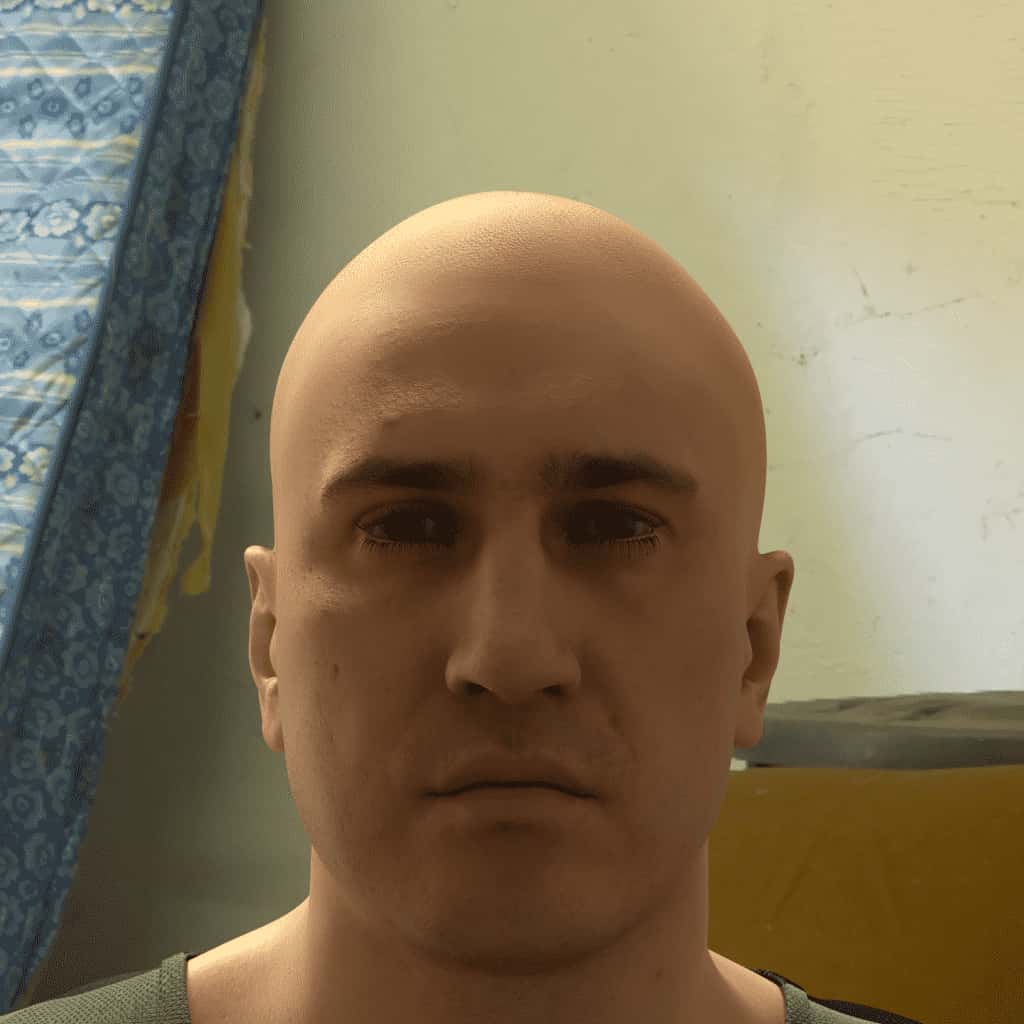

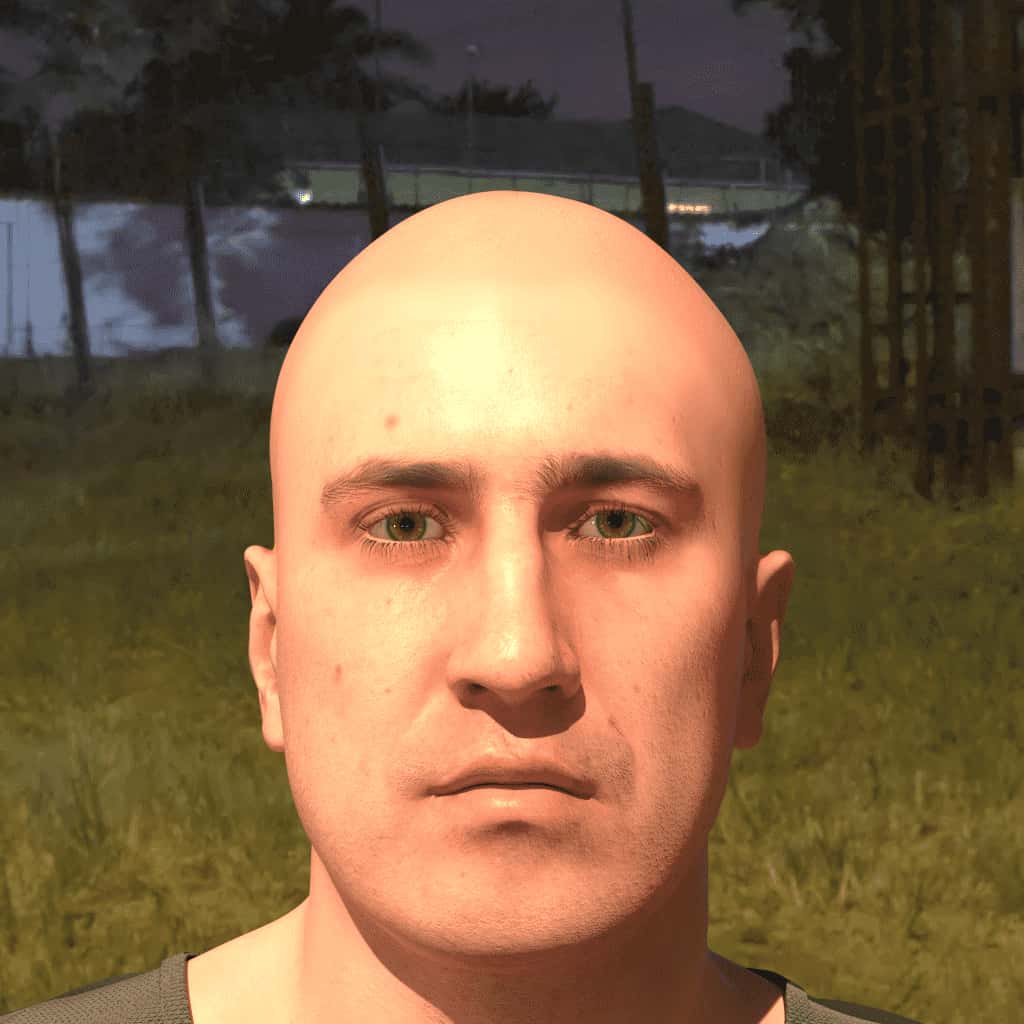

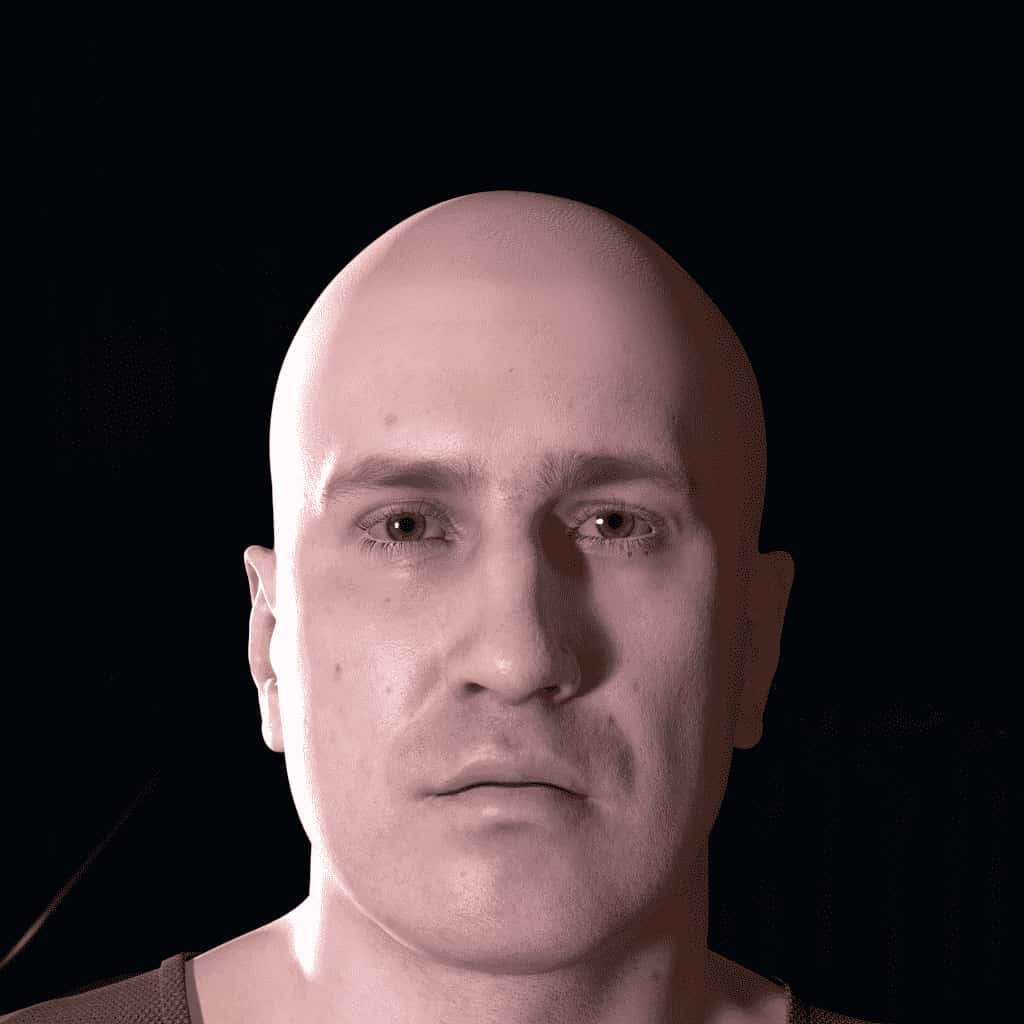

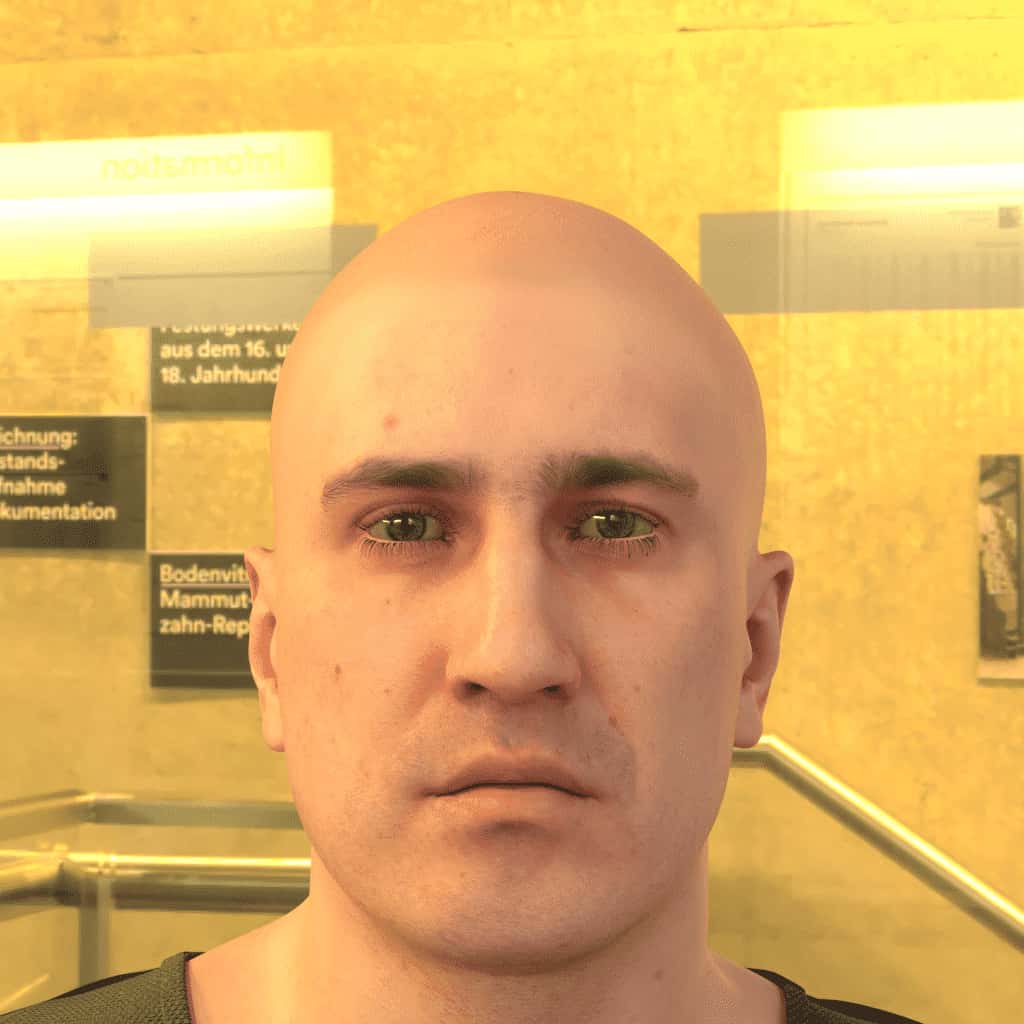

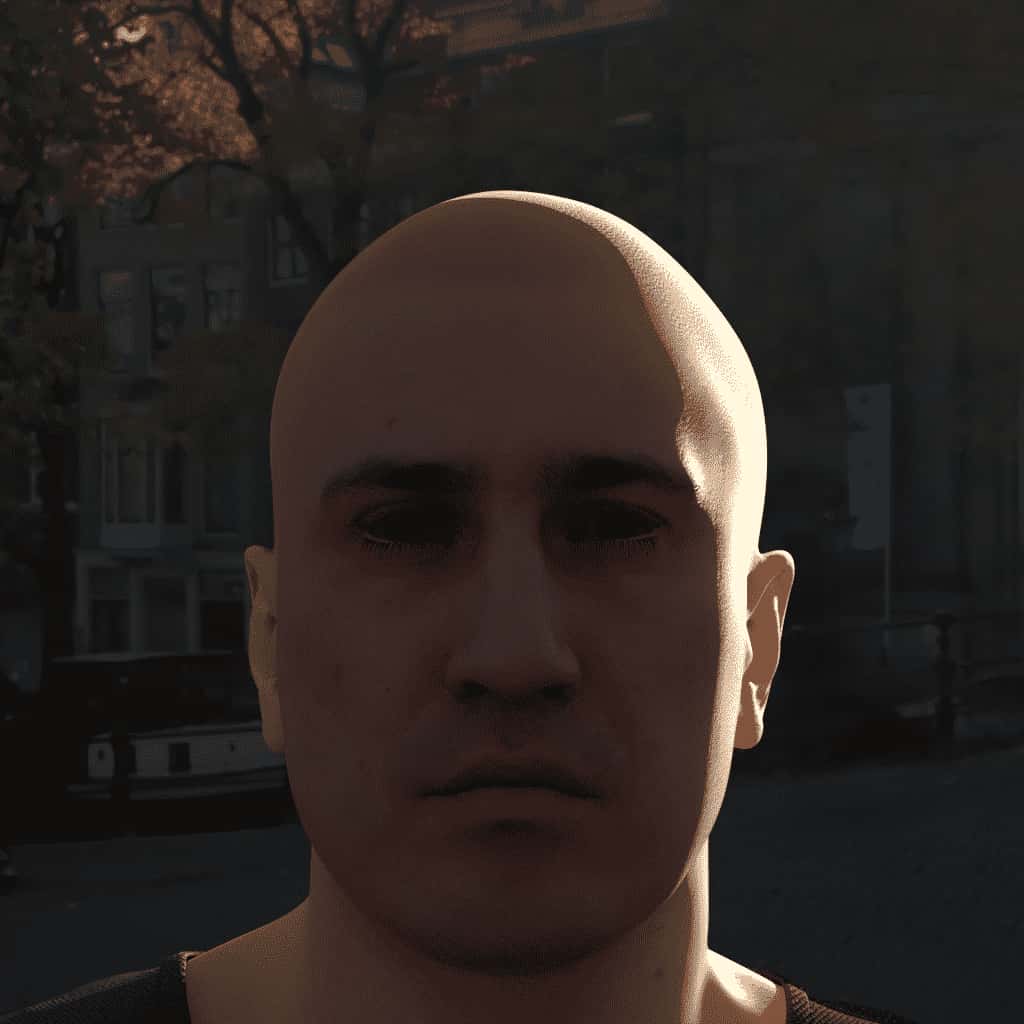

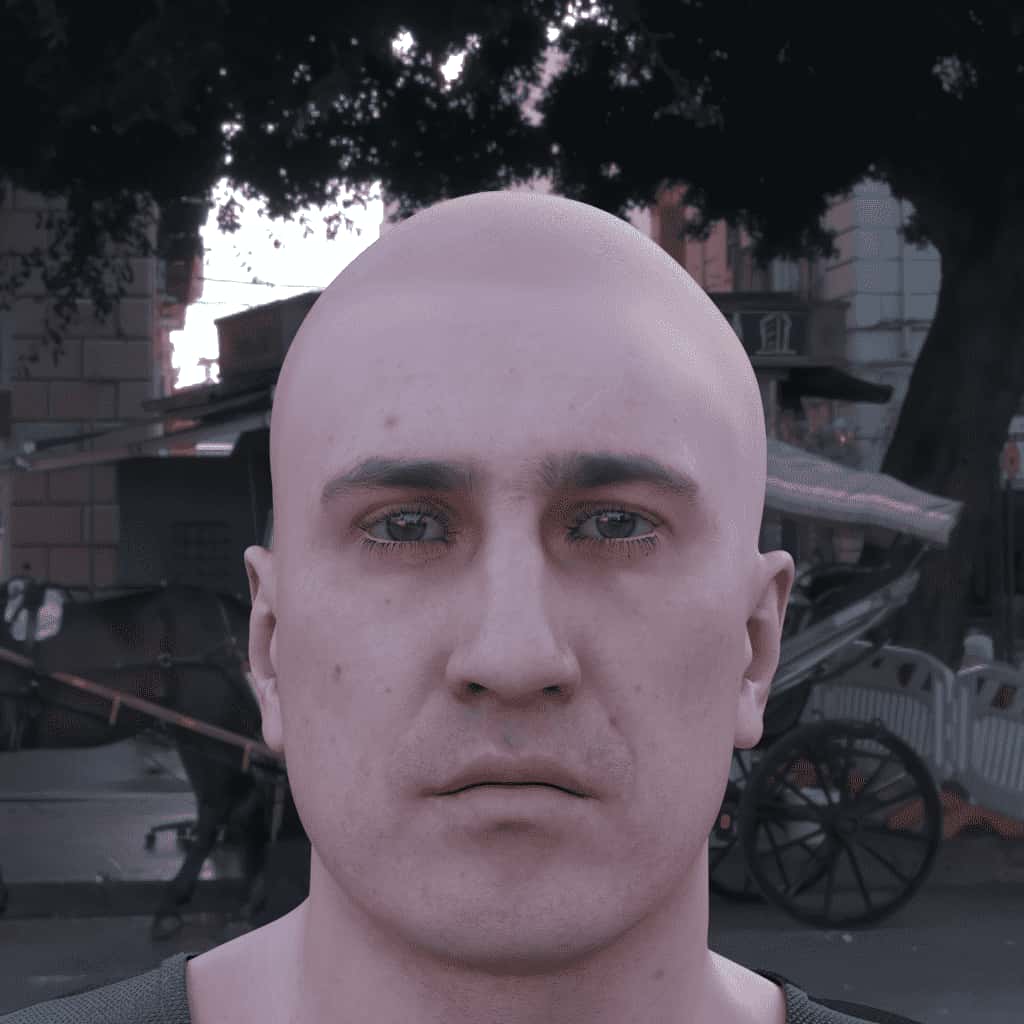

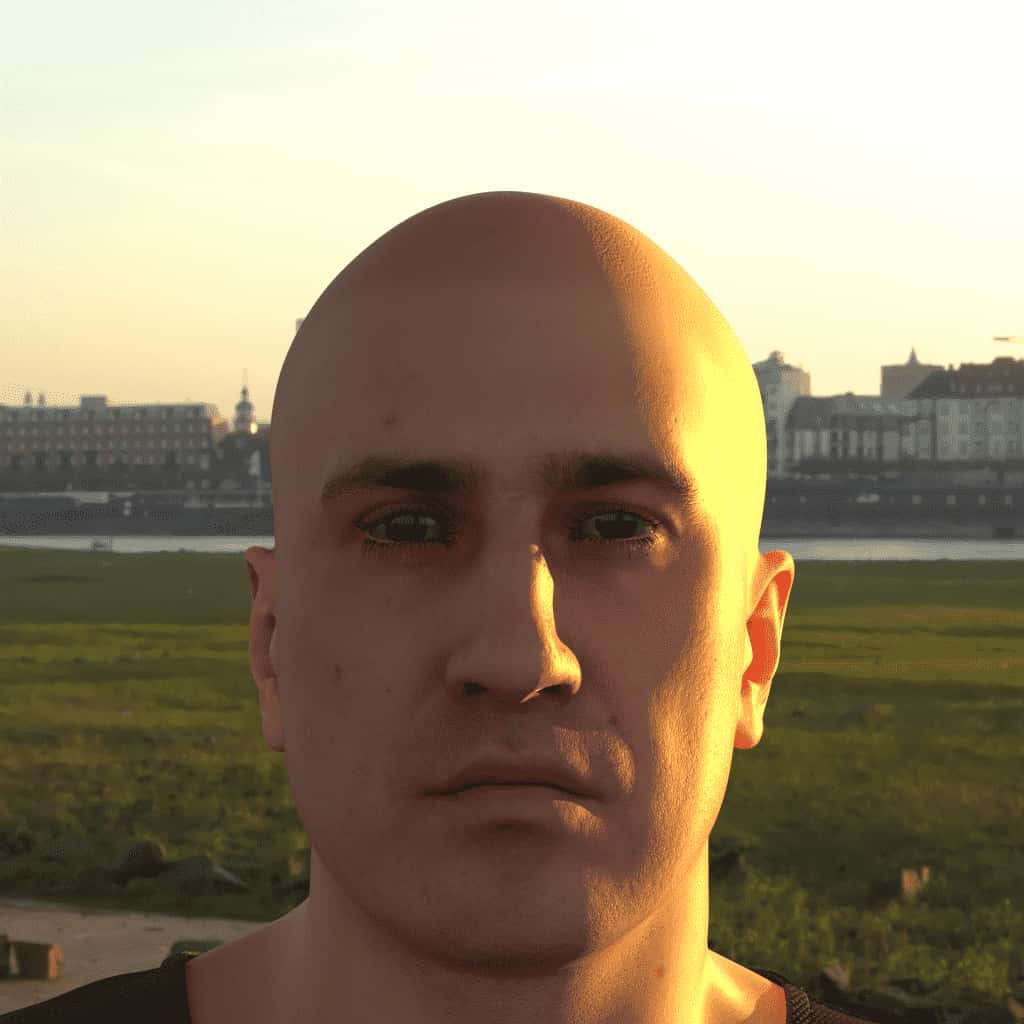

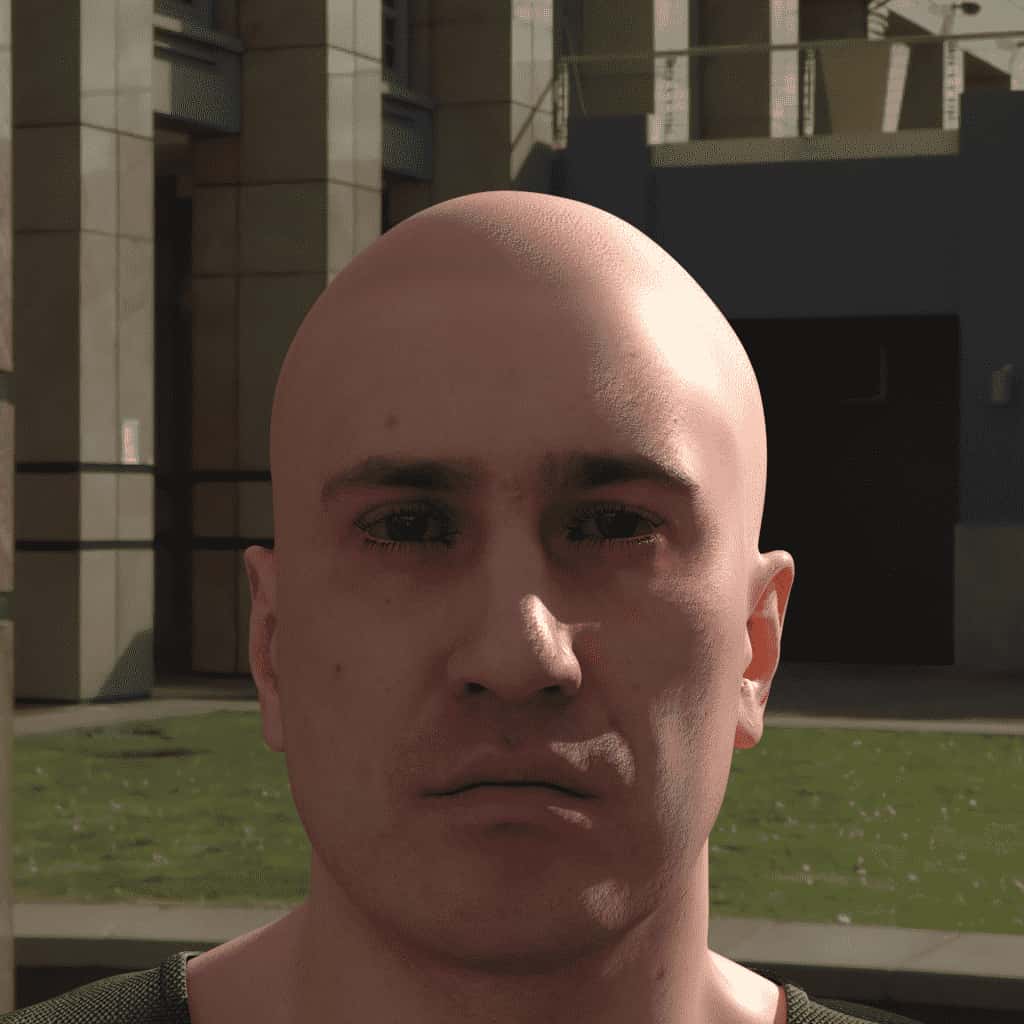

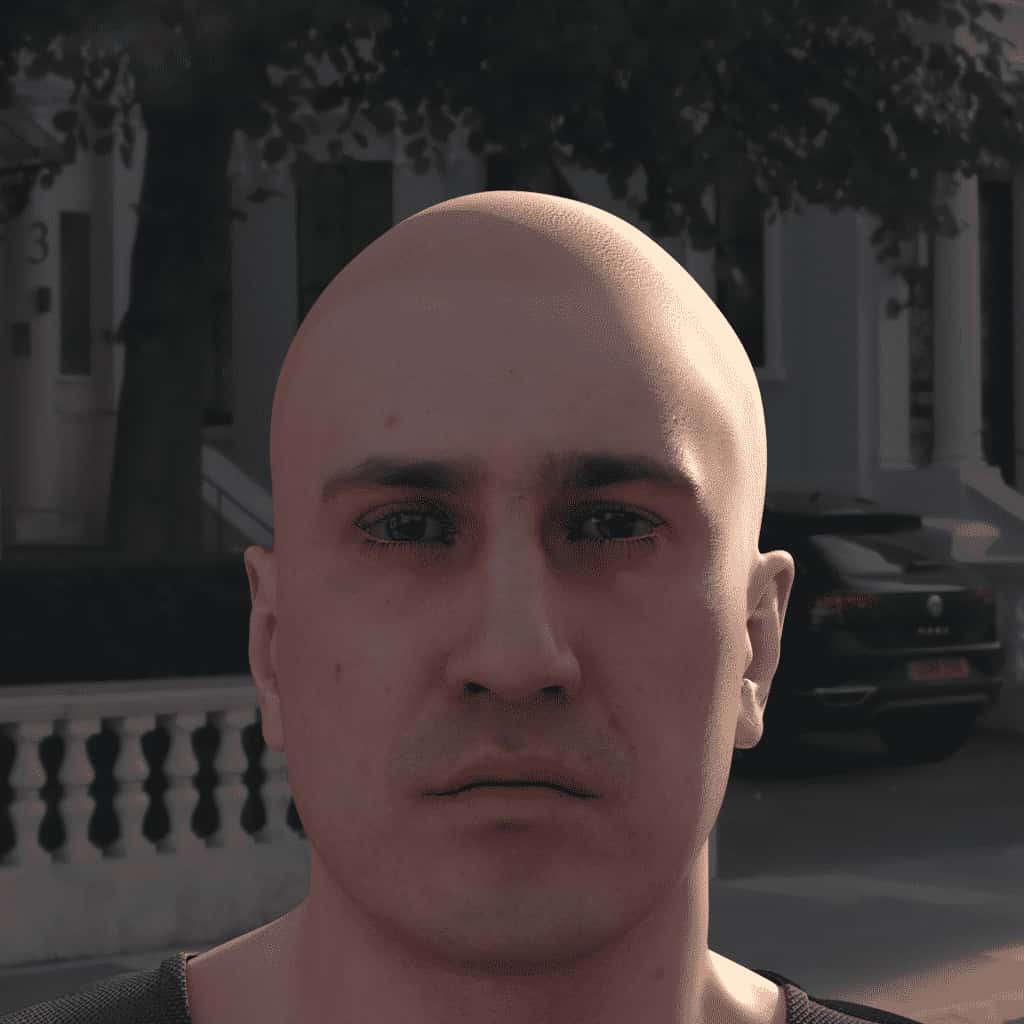

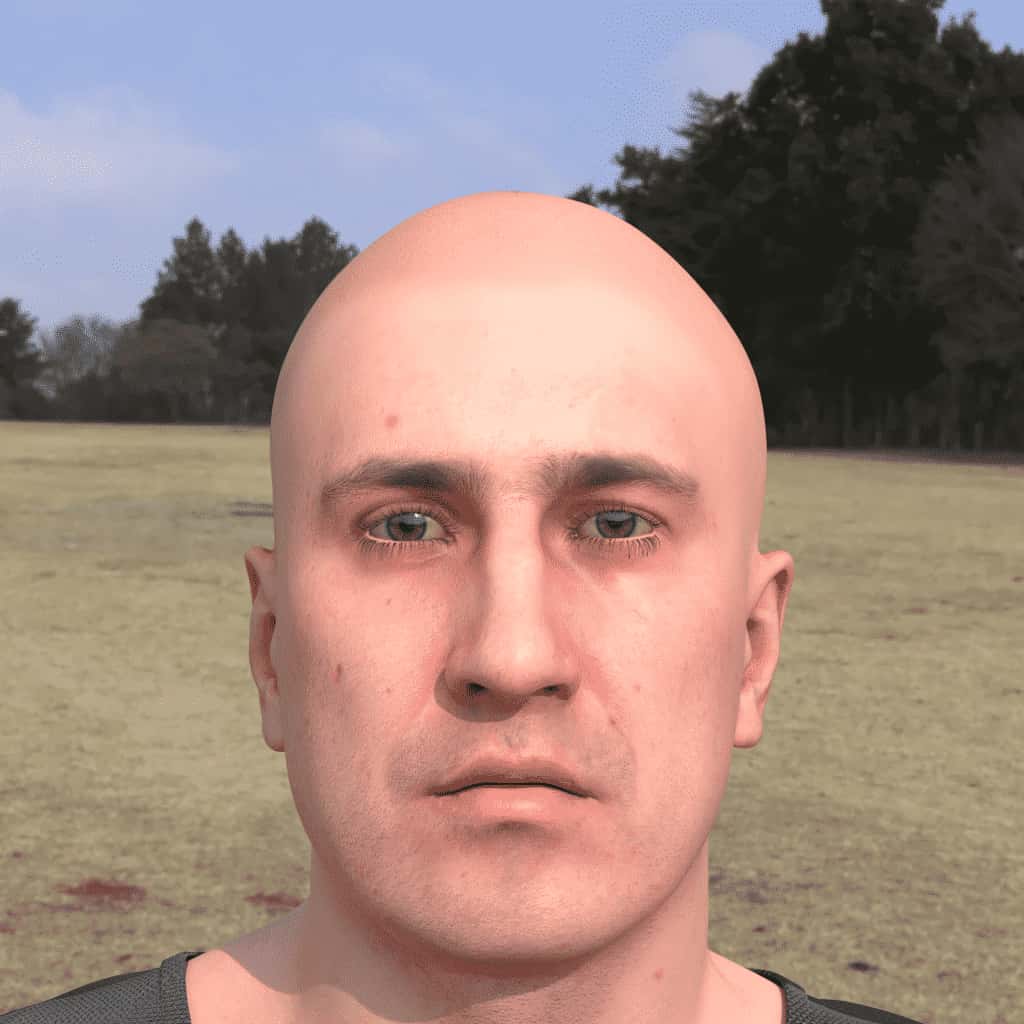

Visual examples of varying identities (see also: full input JSON to generate them):

3D Location

Overview

Once a human is created, they are placed both into a broader environmental context and into a local setting that represents a real-world context, called a 3d_location. Currently, there are three types of locations available: a human can be placed in the driver's seat of a vehicle, can stand in an interior room, or can be left in an open setting with just the environmental background. Some types of 3d_location have a set of properties for the details that can be changed within that setting. Details for each type can be found in the following sections.

NOTE: 3d_locations work best with specific gestures and rig types to simulate real world activities and image capture setups.

At the top level, 3d_location (written as "3d_locations" in the JSON examples below) is an array of objects that form a distribution group. Each object in the array should have a specifications object and a percent. Inside each specifications object, a type and properties are defined; if nothing is given, a set of defaults is used.

type:

- HDRI only: "open" (Default)

- Interior Locations: "interior"

- Vehicles: "vehicle"

Top Level Distribution Example

"3d_locations": [

{

"specifications": {

"type": "open",

"properties": {}

},

"percent": 50

},

{

"specifications": {

"type": "interior",

"properties": {}

},

"percent": 50

}

],

Open Type (Default)

Description: open locations are given an "open" coordinate system with no additional walls or structures created around the human. Open locations are useful when training on faces or when a quick render is needed to preview a human without all the surrounding details.

properties: the properties object is empty and can be omitted, shown here for completeness

Open Location Properties

"3d_locations": [{

"specifications": {

"type": "open",

"properties": {

}

},

"percent": 100

}],

Interior

Description: interior locations create a three dimensional room that the human is placed into. Each room id has a different type of furniture and layout.

id:

- Description: each ID is a different interior room layout

- Default: "0"

indoor_light_enabled:

- Description: Turns any indoor lighting elements on or off

- Default: false

head_position_seed:

- Description: Determines a random position of the human within the interior location

- Default: 0

- Min: 0

- Max: infinity

facing_direction:

- Description: Determines the rotation of the human to be looking at different locations in the room

- Default: 0

- Min: -180

- Max: 180

agent properties:

style:

- Description: Style determines which agent to choose

- Default: "00021_Yvonne004"

enabled:

- Description: Turns the agent on or off within the percentage group

- Default: false

position_seed:

- Description: Sets a random position of the agent within the interior location space

- Default: 0

- Min: 0

- Max: infinity

Example of Interior Location

"3d_locations": [{

"specifications": {

"type": "interior",

"properties": {

"id": ["0", "1", "2"],

"indoor_light_enabled": ["true"],

"head_position_seed": {

"type": "list",

"values": [0]

},

"facing_direction": {

"type": "list",

"values": [0]

},

"agent": {

"style": ["00062_Camille008"],

"enabled": ["true"],

"position_seed": { "type": "list", "values": [0]}

}

}

},

"percent": 100

}],

Vehicle

Description: vehicle locations place the human in the driver's seat of the vehicle. There is a list of vehicle compatible gestures for the human, and a rig type called head orbit in car to better assist rig placement in vehicles. The current vehicle model is a 2022 Ford Escape SE.

Examples of in vehicle images.

properties: the properties object is empty and can be omitted, shown here for completeness

Example of Vehicle Location

"3d_locations": [{

"specifications": {

"type": "vehicle",

"properties": {

}

},

"percent": 100

}],

Facial Attributes

The facial attributes section describes parts of the head anatomy. This includes:

- Expressions

- Eyebrows

- Eye Gaze

- Head Turn

- Head Hair

- Facial Hair

- Eyes

JSON example of all facial-attributes sections specified

{

"humans": [{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"expression": [{

"name": ["all"],

"intensity": { "type": "range", "values": {"min": 0.0, "max": 1.0}},

"percent": 100

}],

"eyebrows": [{

"style": ["all"],

"relative_length": {"type": "range","values": {"min": 1.0, "max": 1.0}},

"relative_density": {"type": "range","values": {"min": 1.0,"max": 1.0}},

"color": ["all"],

"color_seed": {"type": "list", "values": [0]},

"match_hair_color": true,

"sex_matched_only": true,

"percent": 100

}],

"gaze": [{

"horizontal_angle": {"type": "range", "values": {"min": -30.0, "max": 30.0}},

"vertical_angle": {"type": "range", "values": {"min": -30.0, "max": 30.0}},

"percent": 100

}],

"head_turn": [{

"pitch": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"yaw": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"roll": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"percent": 100

}],

"hair": [{

"style": ["all"],

"color": ["all"],

"sex_matched_only": true,

"ethnicity_matched_only": true,

"relative_length": {"type": "range", "values": { "min": 0.5, "max": 1.0}},

"relative_density": {"type": "range", "values": { "min": 0.5, "max": 1.0}},

"percent": 100

}],

"facial_hair": [{

"style": ["all"],

"color": ["all"],

"match_hair_color": true,

"relative_length": {"type": "list", "values": [1.0]},

"relative_density": {"type": "list", "values": [1.0]},

"percent": 100

}],

"eyes": [{

"iris_color": ["all"],

"redness": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"pupil_dilation": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"percent": 100

}]

}

}]

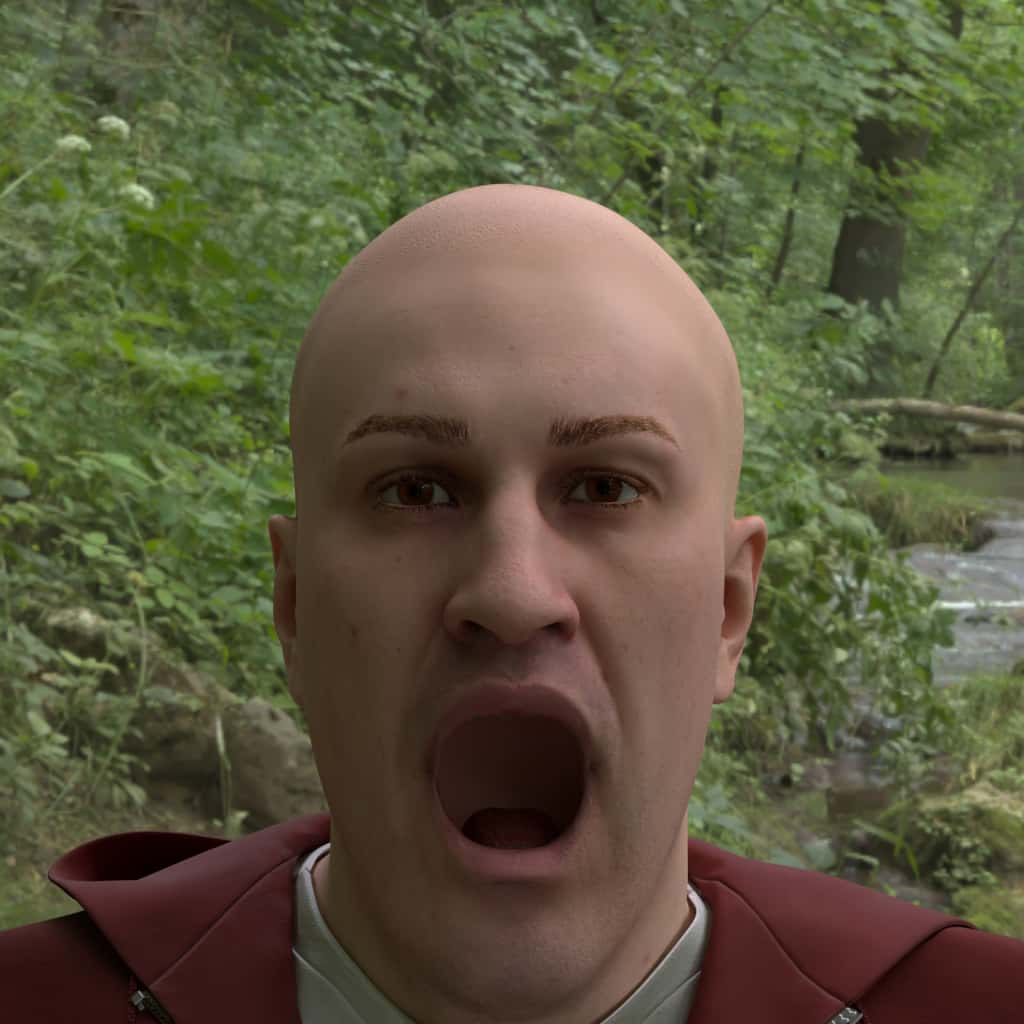

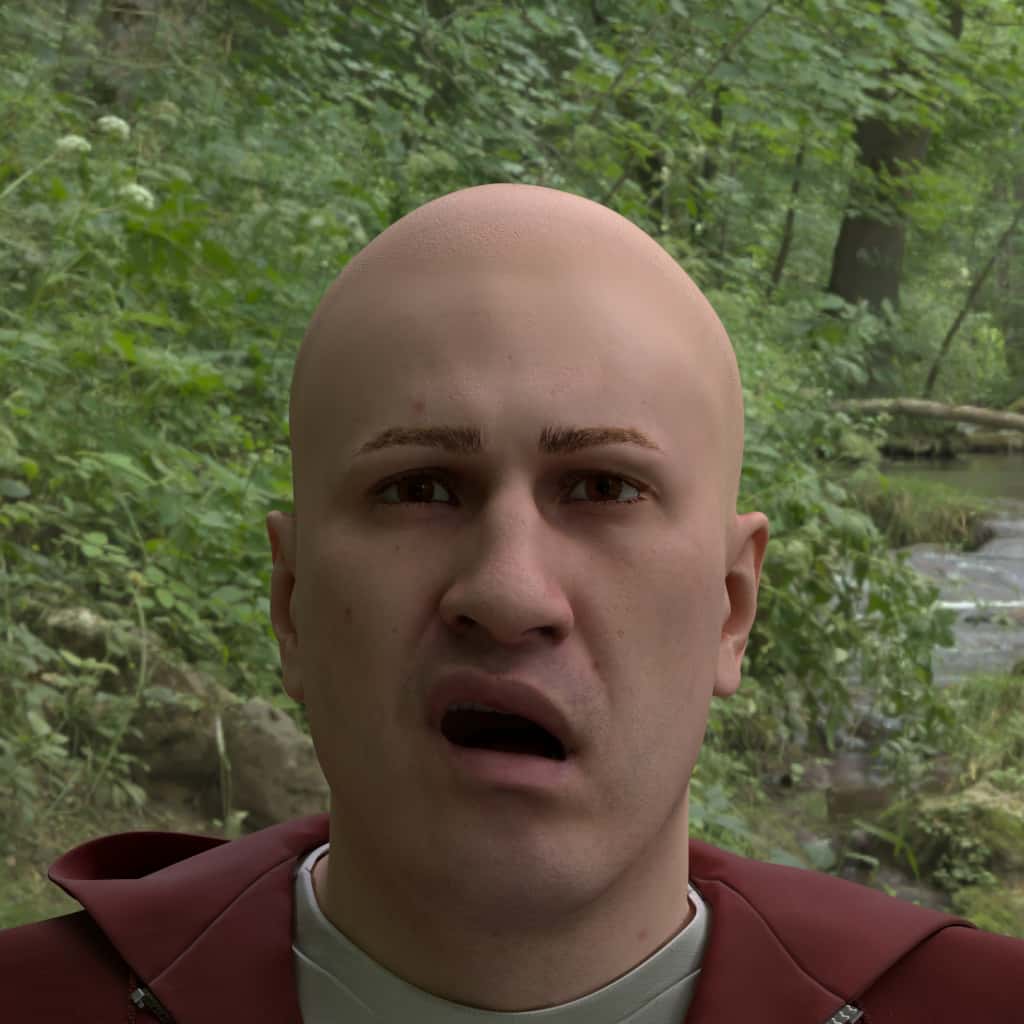

}Expression

Expression manipulates the shape of the eyes, mouth, cheeks, etc. according to the name of an expression, and an amount, called intensity.

Note: at the moment, only a single expression can be applied at a time.

There are over 150 available expressions, which can be found in our documentation appendix. The intensity is a fraction between 0 and 1 that denotes the relative deformation from neutral (0) to the full expression (1).

name:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

intensity:

- Default: 0.75

- Min: 0

- Max: 1

Two expressions with fully varying intensities

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 2},

"facial_attributes": {

"expression": [

{

"name": ["eyes_closed_max", "chew"],

"intensity": { "type": "range", "values": {"min": 0.0, "max": 1.0} },

"percent": 100

}

]

}

}

]

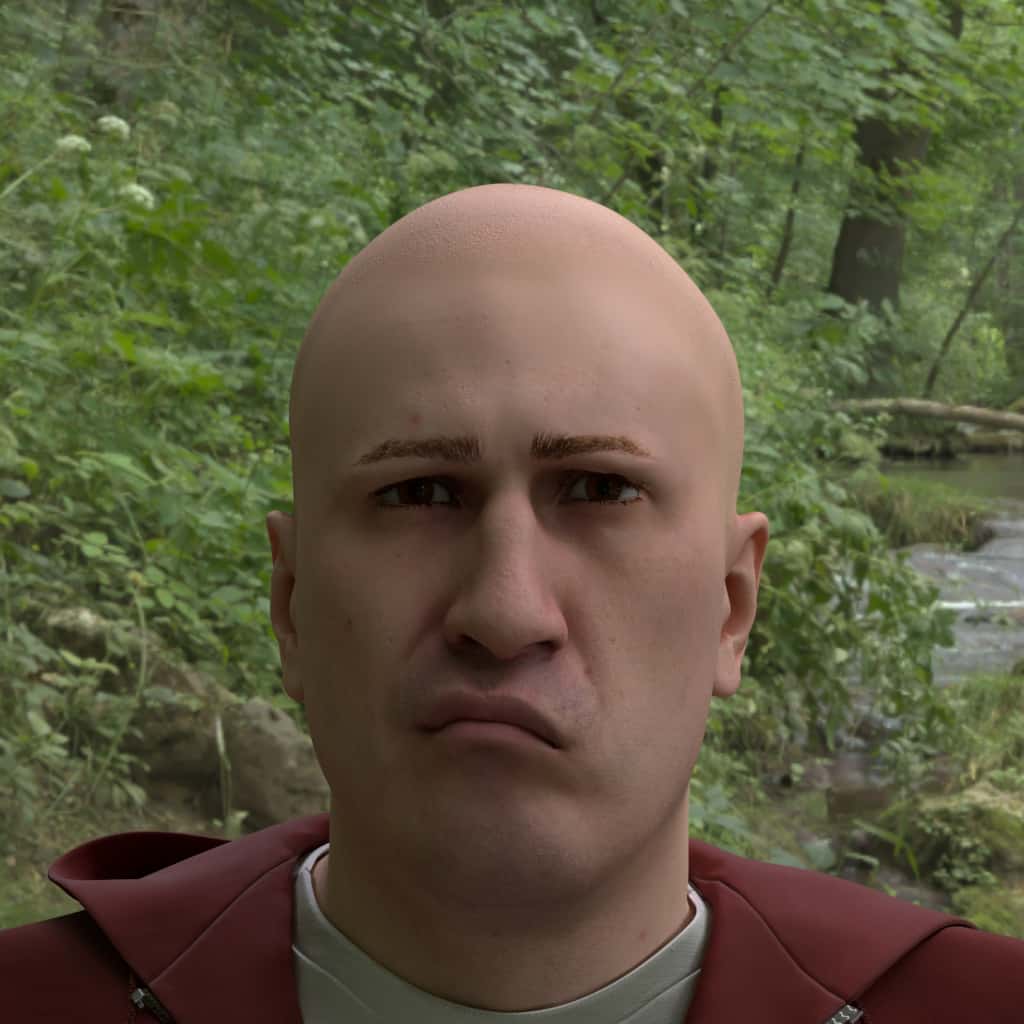

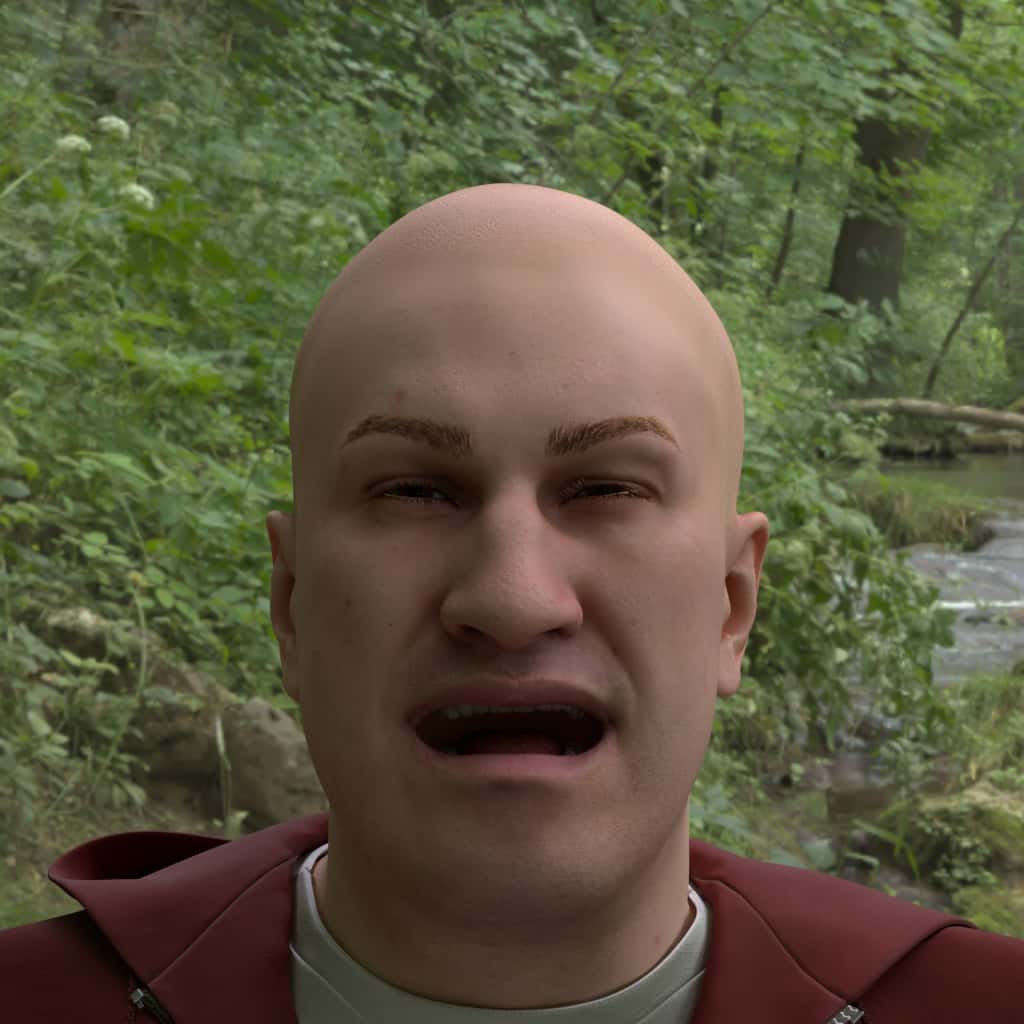

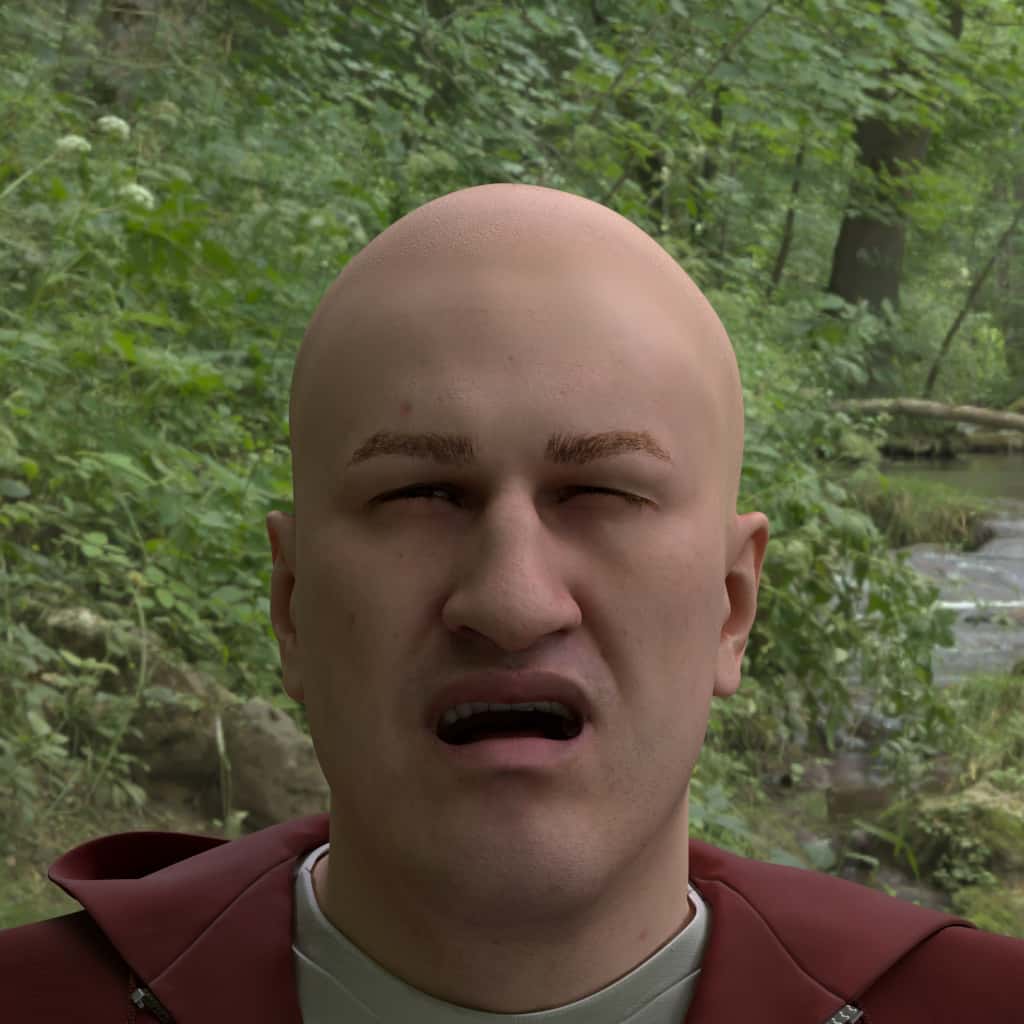

}Visual examples of our expressions (see also: full input JSON to generate them):

Eye Gaze

The direction the eyes look, or gaze, is described in degrees from -30 to 30, as an offset from looking straight ahead. An eye looking up and to the left, from the character’s point of view, would have a positive horizontal angle and a positive vertical angle.

The output also includes the gaze_vector, which is the vector from the centroid of the eye volume to the location of the pupil, in 3D world-space coordinates.

horizontal_angle:

- Default: 0

- Min: -30

- Max: 30

vertical_angle:

- Default: 0

- Min: -30

- Max: 30
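The Min and Max limits listed for a parameter can be checked before a job is submitted. The sketch below is a hypothetical helper, not part of any Synthesis AI tooling, and assumes the list/range spec format used throughout this document. It returns the values that fall outside the documented limits, shown here with the gaze limits of -30 and 30.

```python
def out_of_bounds(spec, lo, hi):
    """Return the values in a list/range spec that fall outside [lo, hi]."""
    if spec["type"] == "list":
        candidates = spec["values"]
    else:  # "range": only the two endpoints need checking
        candidates = [spec["values"]["min"], spec["values"]["max"]]
    return [value for value in candidates if not lo <= value <= hi]


gaze = {
    "horizontal_angle": {"type": "range", "values": {"min": -30.0, "max": 30.0}},
    "vertical_angle": {"type": "list", "values": [-45, 0, 15]},
    "percent": 100,
}
for key in ("horizontal_angle", "vertical_angle"):
    print(key, out_of_bounds(gaze[key], -30, 30))
# horizontal_angle []
# vertical_angle [-45]
```

The same function can be reused for any other numeric parameter by passing that parameter's documented Min and Max.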

Gaze section featuring widest gaze ranges

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"gaze": [{

"horizontal_angle": { "type": "range", "values": {"min": -30.0, "max": 30.0 }},

"vertical_angle": { "type": "range", "values": {"min": -30.0, "max": 30.0 }},

"percent": 100

}]

}

}

]

}

Visual examples of gazes (see also: full input JSON to generate them):

| vertical/horizontal | horizontal: -30 | horizontal: 0 | horizontal: 30 |
|---|---|---|---|
| vertical: 30 | | | |
| vertical: 0 | | | |
| vertical: -30 | | | |

Head Turn

Head turn describes the amount the head rotates about the neck/torso. It ranges from -45 to 45 degrees on each of the three axes, measured as an offset from a level pose facing straight ahead. A head pitched up and turned to the left, from the character’s point of view, would have a positive pitch angle and a positive yaw angle.

Note: Head turn rotation is applied in the following order: (1) yaw, (2) pitch, (3) roll. This order is used regardless of how the JSON keys are ordered in a job.

yaw:

- Default: 0

- Min: -45

- Max: 45

pitch:

- Default: 0

- Min: -45

- Max: 45

roll:

- Default: 0

- Min: -45

- Max: 45
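The fixed yaw, pitch, roll order noted above matters because 3D rotations do not commute. The sketch below illustrates this with plain rotation matrices. The axis assignment (yaw about Y, pitch about X, roll about Z), the sign conventions, and the column-vector convention are all assumptions made for illustration, since this document does not define the coordinate system.

```python
import math


def rot_x(deg):
    """Rotation about the X axis (assumed here to be pitch)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1, 0, 0], [0, c, -s], [0, s, c]]


def rot_y(deg):
    """Rotation about the Y axis (assumed here to be yaw)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]


def rot_z(deg):
    """Rotation about the Z axis (assumed here to be roll)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def matmul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def head_rotation(yaw, pitch, roll):
    """Compose yaw first, then pitch, then roll.

    With column vectors, the rotation applied first is the rightmost factor.
    """
    return matmul(rot_z(roll), matmul(rot_x(pitch), rot_y(yaw)))


def close(a, b, tol=1e-9):
    """True when two 3x3 matrices agree element by element within tol."""
    return all(abs(a[i][j] - b[i][j]) <= tol for i in range(3) for j in range(3))


documented = head_rotation(yaw=45, pitch=45, roll=0)
swapped = matmul(rot_y(45), rot_x(45))  # pitch applied before yaw instead
print(close(documented, swapped))  # False
```

The two matrices differ, so the same three angles describe a different head pose if the order of application changes.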

Head turn section featuring widest head-turn ranges

{

"humans": [{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"head_turn": [{

"pitch": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"yaw": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"roll": {"type": "range", "values": {"min": -45.0, "max": 45.0}},

"percent": 100

}]

}

}]

}

Visual examples of head turns (see also: full input JSON to generate them):

| | -45 | -15 | 0 | 15 | 45 |
|---|---|---|---|---|---|
| Pitch | | | | | |
| Yaw | | | | | |
| Roll | | | | | |

Hair

Hair is grown procedurally based on a few parameters: named style, color, length, and density. Style names and color names can be found in the documentation appendix.

Note: We currently recommend limiting relative_length to greater than 0.5 and relative_density to greater than 0.7.

Note 2: At the moment, hair should not be specified if any headwear is specified.

Note 3: hair does not maintain the same color between renders for the same identity in a single job.

Note 4: The all keyword does not contain the none keyword, meaning that if all is requested for styles, colors, etc., a none or empty value will not be included - which means using the all keyword for hair returns zero bald heads.
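Because all excludes none, one way to get some bald heads alongside the full range of hairstyles is to add a second percent group that requests the none style explicitly. The sketch below builds such a request in Python. The helper name hair_groups and the 10% split are illustrative assumptions, and it relies on ["none"] being accepted inside a percent group for hair in the same way the mask example in this document uses it.

```python
import json


def hair_groups(bald_percent):
    """Split hair into an "all styles" group and an explicit "none" (bald) group."""
    if not 0 <= bald_percent <= 100:
        raise ValueError("bald_percent must be between 0 and 100")
    groups = [
        {"style": ["all"], "color": ["all"], "percent": 100 - bald_percent},
        {"style": ["none"], "percent": bald_percent},
    ]
    # Drop empty groups so that no group carries a percent of 0.
    return [group for group in groups if group["percent"] > 0]


job = {
    "version": 1,
    "humans": [{
        "identities": {"ids": [80], "renders_per_identity": 10},
        "facial_attributes": {"hair": hair_groups(bald_percent=10)},
    }],
}
print(json.dumps(job, indent=2))
```

The two groups total 100 percent, so the request stays consistent with the percent group rule described under Important Concepts.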

Randomization: color_seed controls variance in color of hair (and facial hair).

- For consistent renders from one input to the next, always specify color_seed and set it to the same value

- For randomized renders, specify color_seed as a range

You can also force that the hair style be sex-appropriate for the identity (sex_matched_only), and ethnically appropriate for the identity (ethnicity_matched_only). While these options may “feel” more appropriate, we have found that for machine learning, full variance can generalize better (use-case dependent).

style:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

color:

- Default: ["dark_golden_brown"]

- Note: Use ["all"] as a shorthand for every option

relative_length:

- Default: 1.0

- Min: 0.5

- Max: 1.0

relative_density:

- Default: 1.0

- Min: 0.5

- Max: 1.0

color_seed:

- Default: 0.0

- Min: 0.0

- Max: 1.0

sex_matched_only:

- Default: true

ethnicity_matched_only:

- Default: false

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"hair": [{

"style": ["all"],

"color": ["all"],

"relative_length": {"type": "range", "values": {"min": 0.85, "max": 1}},

"relative_density": {"type": "range", "values": {"min": 0.85, "max": 1}},

"percent": 100

}]

}

}

]

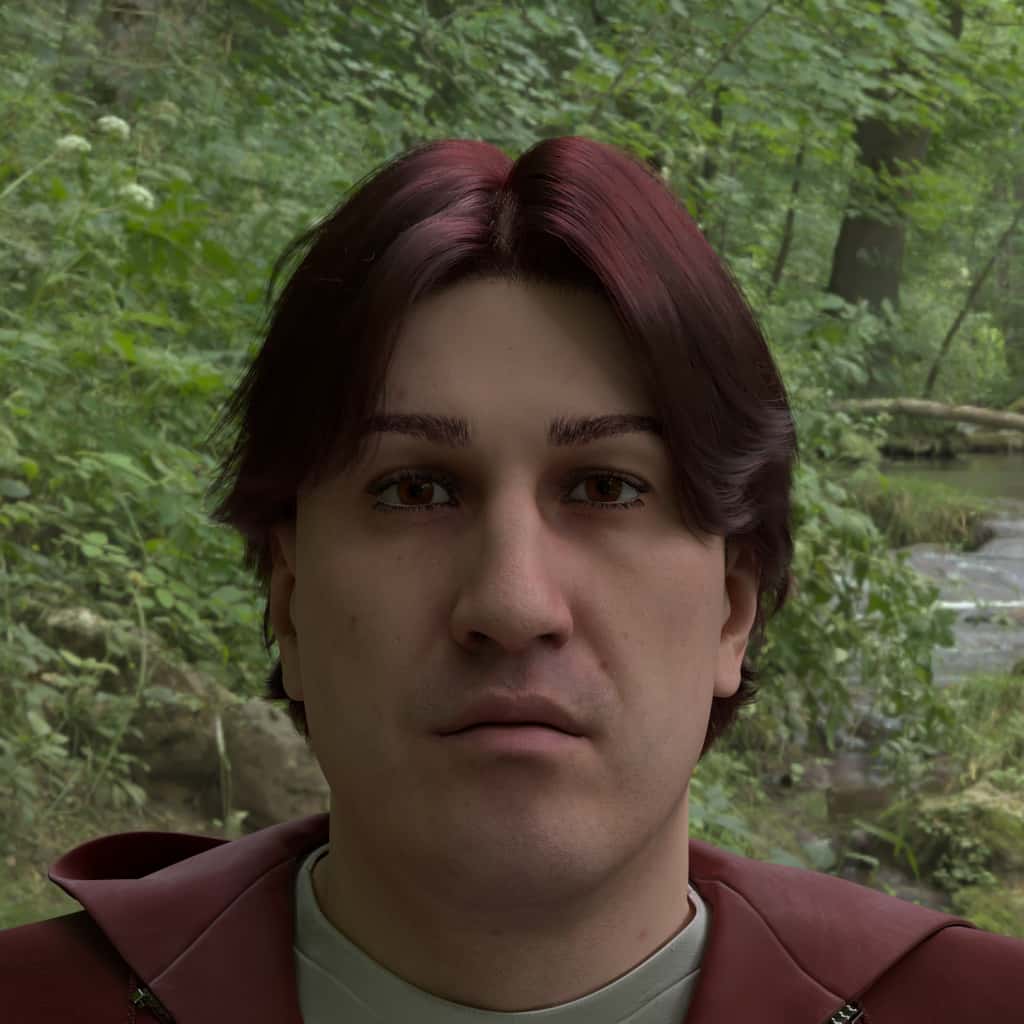

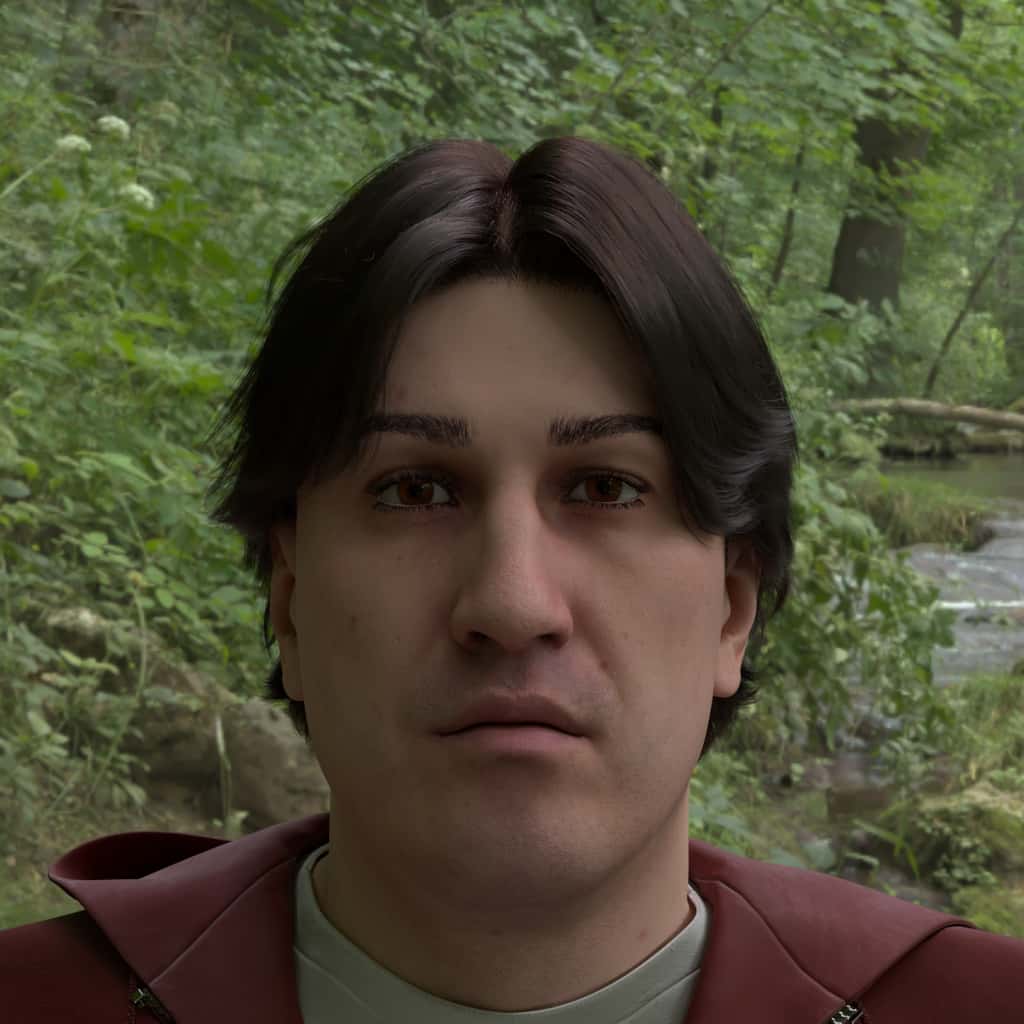

}Visual examples of hairstyles (see also: full input JSON to generate them):

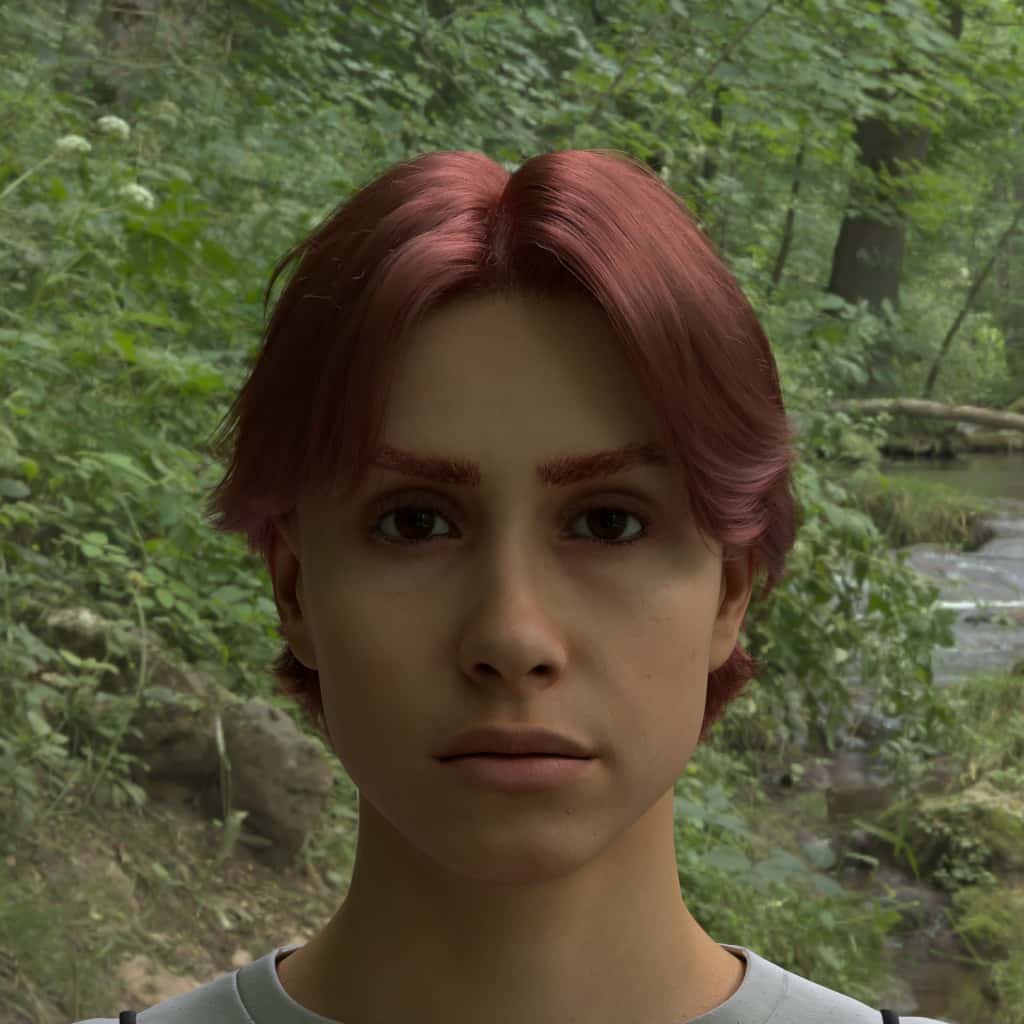

Visual examples of hairstyle colors (see also: full input JSON to generate them):

Facial Hair

Facial hair is also grown procedurally based on the same parameter types as hair. There are mixes of just mustaches, just beards, and full mustache and beards. Check the documentation appendix for style and color names.

Note: At the moment, facial hair should not be specified if any mask is specified.

Note 2: The all keyword does not contain the none keyword, meaning that if all is requested for styles, colors, etc., a none or empty value will not be included.

Randomization: color_seed controls variance in color of facial hair (and head hair).

- For consistent renders from one input to the next, always specify color_seed and set it to the same value

- For randomized renders, specify color_seed as a range

You can also force the facial hair color to be the same as the head hair color with the match_hair_color flag, for that particular render.

style:

- Default: Some IDs may have a default value of ["none"] while others may have a specific style

- Note: Use ["all"] as a shorthand for every option

color:

- Default: ["dark_golden_brown"]

- Note: Use ["all"] as a shorthand for every option

relative_length:

- Default: 1.0

- Min: 0.5

- Max: 1.0

relative_density:

- Default: 1.0

- Min: 0.5

- Max: 1.0

color_seed:

- Default: 0.0

- Min: 0.0

- Max: 1.0

match_hair_color:

- Default: true

{

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 1},

"facial_attributes": {

"facial_hair": [{

"style": ["all"],

"color": ["all"],

"match_hair_color": true,

"relative_length": {"type": "list", "values": [1.0]},

"relative_density": {"type": "list", "values": [1.0]},

"color_seed": { "type": "range", "values": { "min": 0, "max": 1 }},

"percent": 100

}]

}

}

]

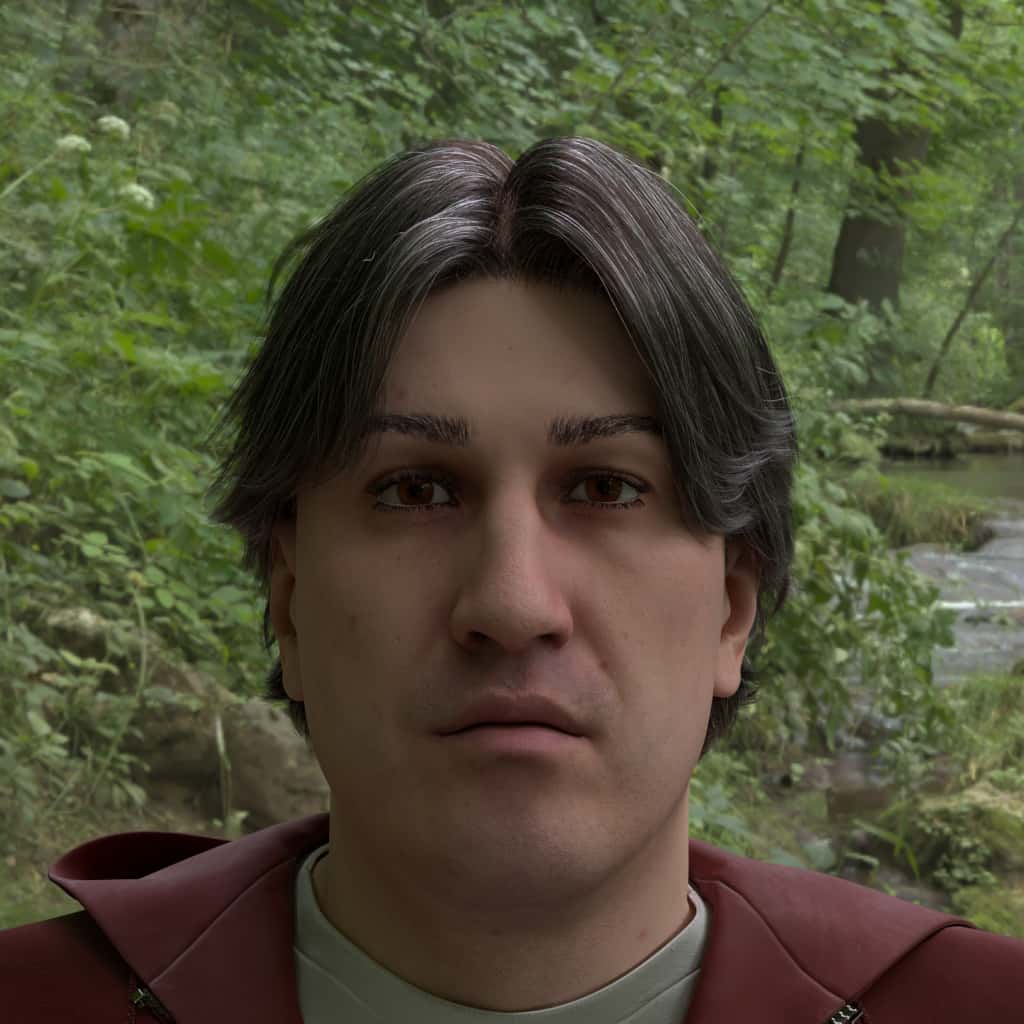

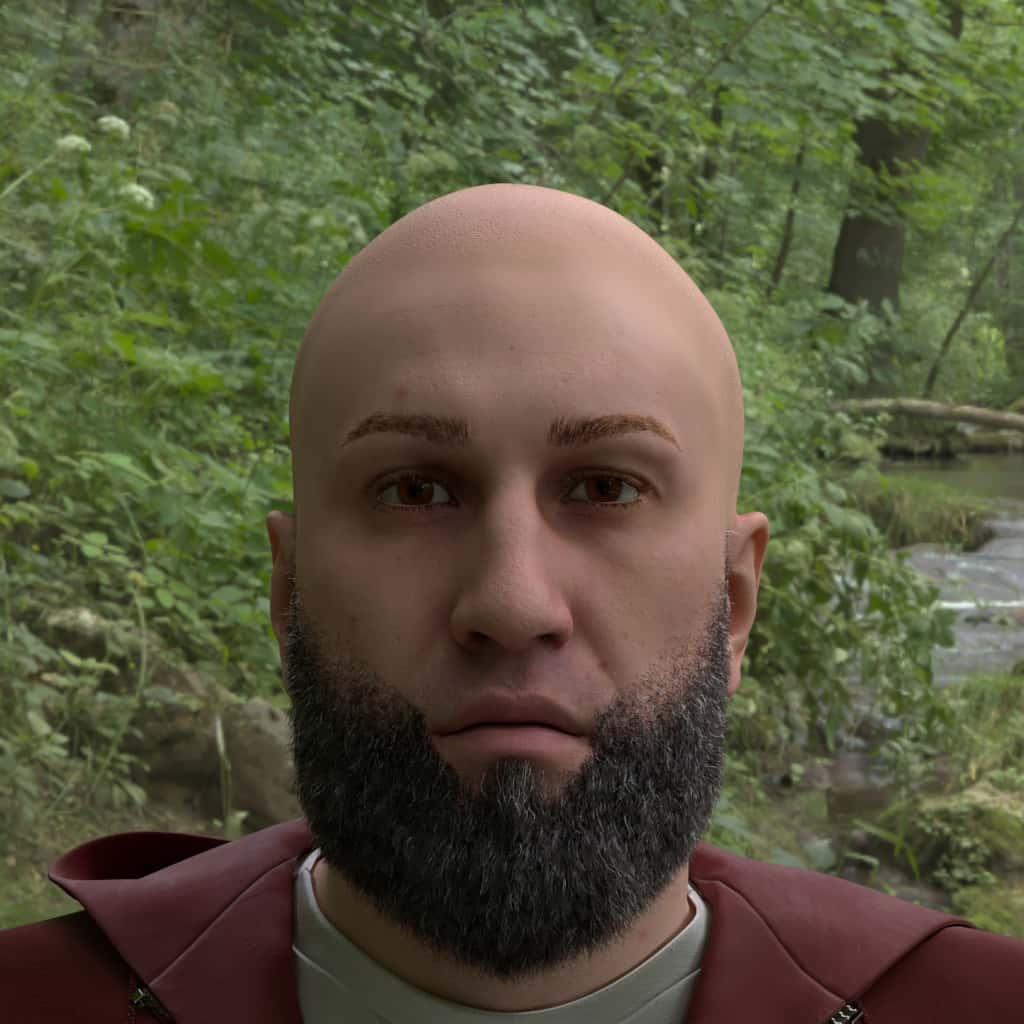

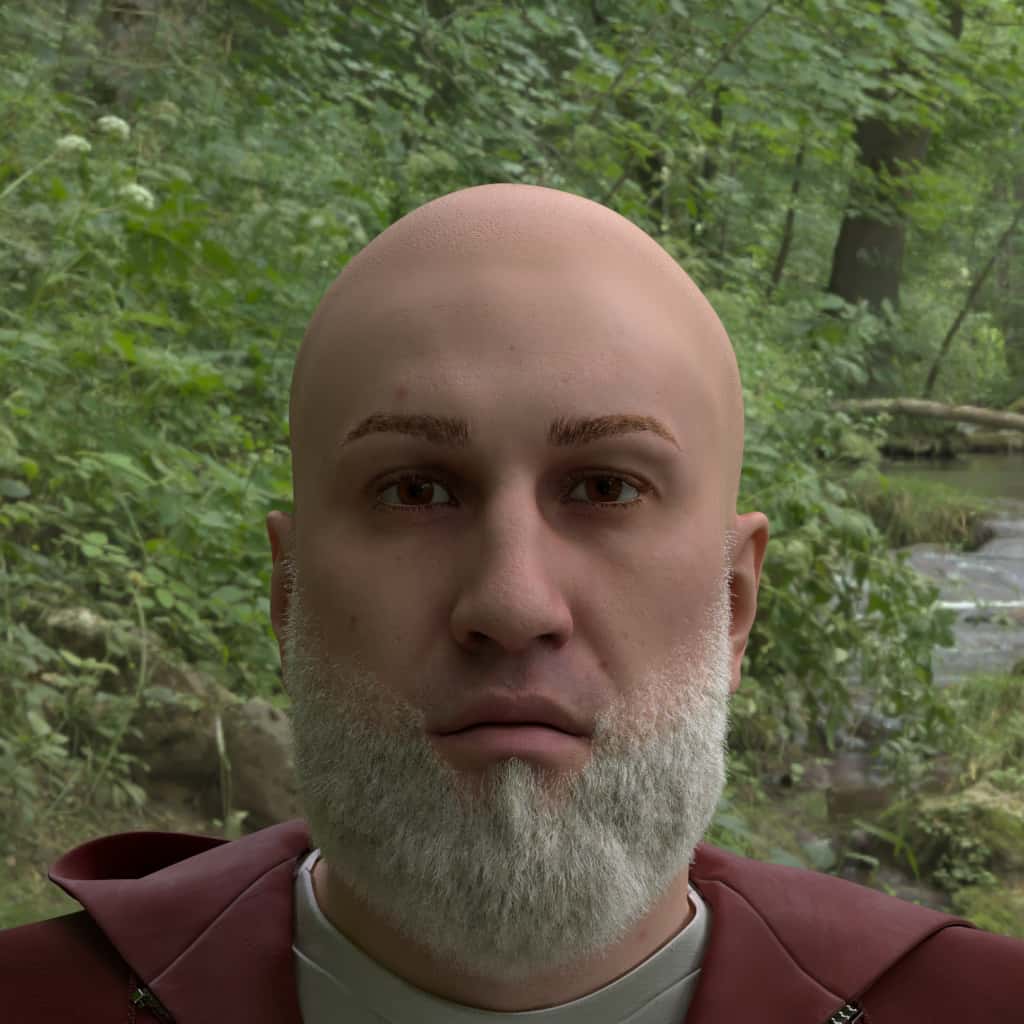

}Visual examples of facial hair styles (see also: full input JSON to generate them):

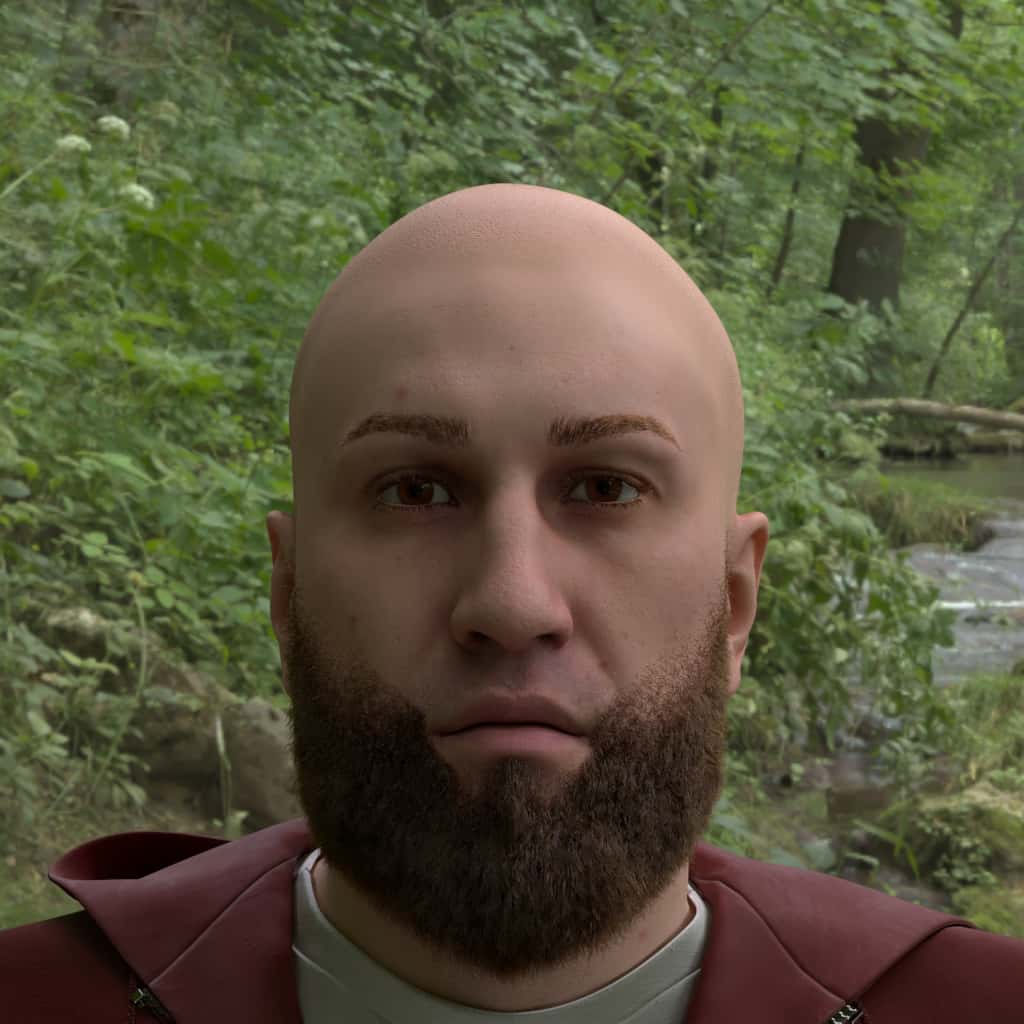

Visual examples of facial hair colors (see also: full input JSON to generate them):

Eyebrows

Each eyebrow associated with an ID has internally assigned and curated values for style, relative_length, and relative_density. The eyebrow color remains natural by defaulting to match the hair color specified in the request — if no hair color is specified, eyebrows revert to the default hair color which is currently dark_golden_brown.

In order to provide an even wider variation of eyebrow shapes and sizes on the models, each of these can be overridden within the distribution by adding any or all of the three properties style, relative_length, and relative_density. Additionally, setting sex_matched_only to false allows the style list to accept both male and female styles when creating the distribution.

match_hair_color defaults to true, matching eyebrow color to hair color. Setting match_hair_color to false allows the color and color_seed values to be overridden by a distribution.
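A small check can flag eyebrow groups whose color settings are unlikely to take effect. The sketch below is hypothetical and rests on an assumption that the text implies but does not state outright: that color and color_seed are superseded by the hair color whenever match_hair_color is true, including when the flag is left at its default.

```python
def ignored_eyebrow_keys(group):
    """List color settings that hair-color matching would take precedence over."""
    if not group.get("match_hair_color", True):  # documented default is true
        return []
    return [key for key in ("color", "color_seed") if key in group]


matched = {"style": ["all"], "color": ["all"], "percent": 100}
free = {"style": ["all"], "color": ["all"], "match_hair_color": False, "percent": 100}

print(ignored_eyebrow_keys(matched))  # ['color']
print(ignored_eyebrow_keys(free))     # []
```

A non-empty result suggests adding "match_hair_color": false to the group if an independent eyebrow color distribution is intended.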

There are 20 male and 32 female eyebrow styles, which are listed in the eyebrow documentation appendix.

style:

- Default: varies by ID

- Note: Use ["all"] as a shorthand for every option

relative_length:

- Default: varies by ID

- Min: 0.7

- Max: 1.0

relative_density:

- Default: varies by ID

- Min: 0.7

- Max: 1.0

color:

- Default: Matches hair color, hair color default is ["dark_golden_brown"]

- Allow Distro: set match_hair_color=false

- Note: Use ["all"] as a shorthand for every option

color_seed:

- Default: 0.0

- Min: 0.0

- Max: any positive number

match_hair_color:

- Default: true, set to false to override

sex_matched_only:

- Default: true, set to false to override

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"eyebrows": [{

"style": ["all"],

"color": ["all"],

"relative_length": {"type": "range", "values": {"min": 0.7, "max": 1.0}},

"relative_density": {"type": "range", "values": {"min": 0.7, "max": 1.0}},

"color_seed": {"type": "list", "values": [0]},

"match_hair_color": false,

"sex_matched_only": true,

"percent": 100

}]

}

}

]

}

A few different examples of IDs with their default eyebrows applied, and a random hair color (see also: full input JSON to generate them):

Visual examples of eyebrow lengths applied to a single style (see also: full input JSON to generate them):

Visual examples of eyebrow densities applied to a single style (see also: full input JSON to generate them):

Eyebrows can also be "style": ["none"]

Eyes

Eyes come in 90 variations of iris_color, and each ID has an iris color assigned by default. All eyes have a default redness of 0.0, which can scale up to a full redness of 1.0. Similarly, pupil_dilation is set to 0.5 for all eyes but can be overridden within a range from 0.0 (very small) to 1.0 (full dilation). A reference list of iris_color IDs, with their base color and lightness, can be found here.

To override any of these, add any or all of the following params into a distribution group.

iris_color:

- Default: Per ID

- Note: Use ["all"] as a shorthand for every option

pupil_dilation:

- Default: 0.5

- Min: 0.0

- Max: 1.0

redness:

- Default: 0.0

- Min: 0.0

- Max: 1.0

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"facial_attributes": {

"eyes": [{

"iris_color": ["all"],

"redness": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"pupil_dilation": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"percent": 100

}]

}

}

]

}

Visual examples of iris color:

Accessories

The accessories section describes adornments that are additions beyond just the head anatomy.

This includes:

- Glasses

- Headwear/Hats

- Face Masks

- Headphones

An example of all accessories filled out:

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"accessories": {

"glasses": [{

"style": ["all"],

"lens_color": ["default"],

"transparency": {"type":"range", "values": {"min":0.0,"max":1.0}},

"metalness": {"type":"range", "values": {"min":0.0,"max":1.0}},

"sex_matched_only": true,

"percent": 100

}],

"headwear": [{

"style": ["all"],

"sex_matched_only": true,

"percent": 100

}],

"masks": [{

"style": ["all"],

"percent": 100

}],

"headphones": [{

"style": ["all"],

"percent": 100

}]

}

}

]

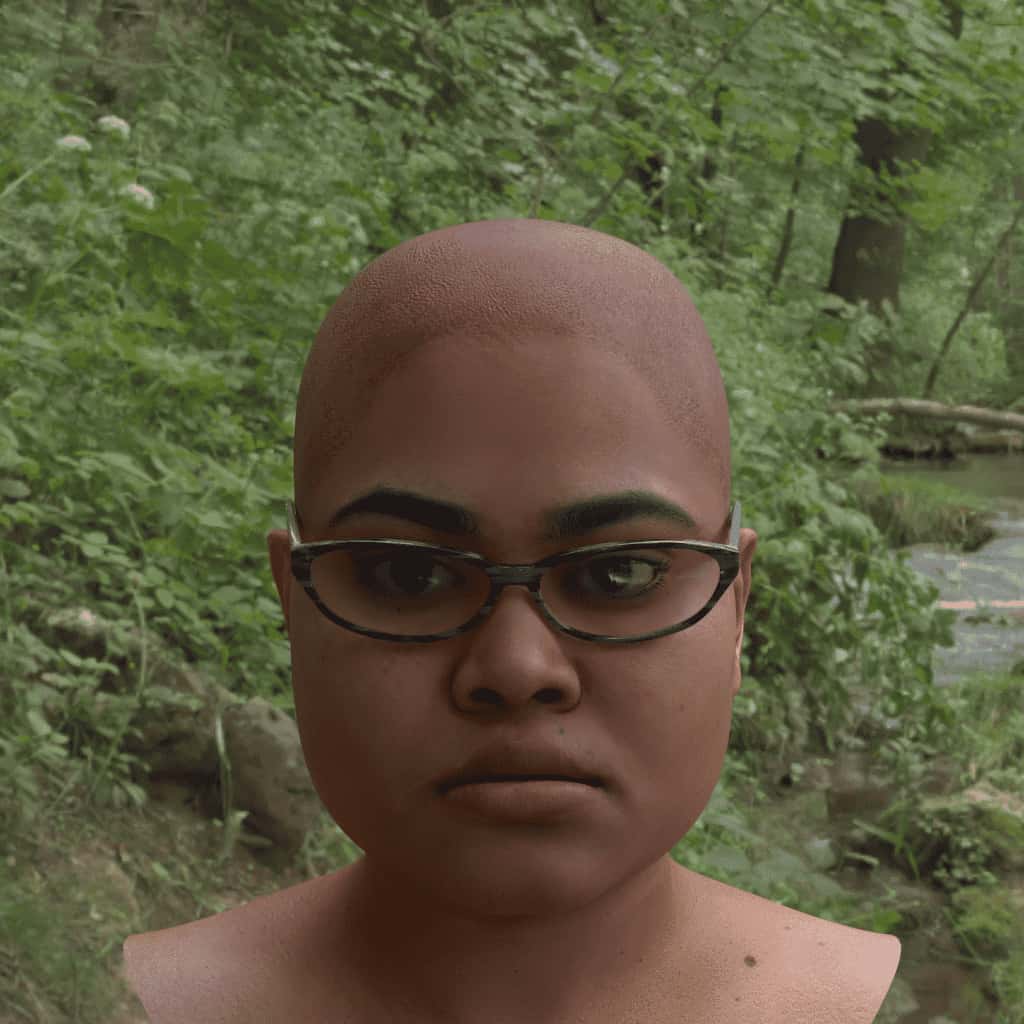

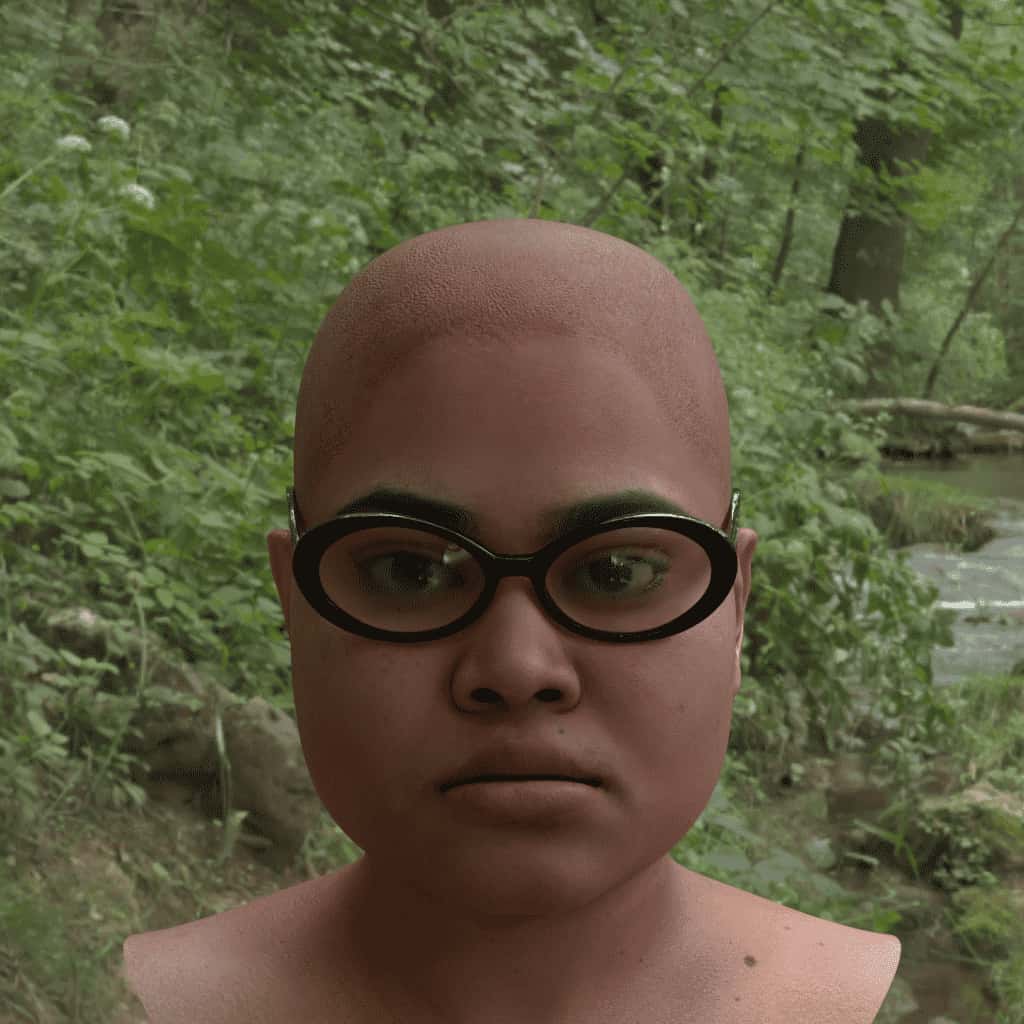

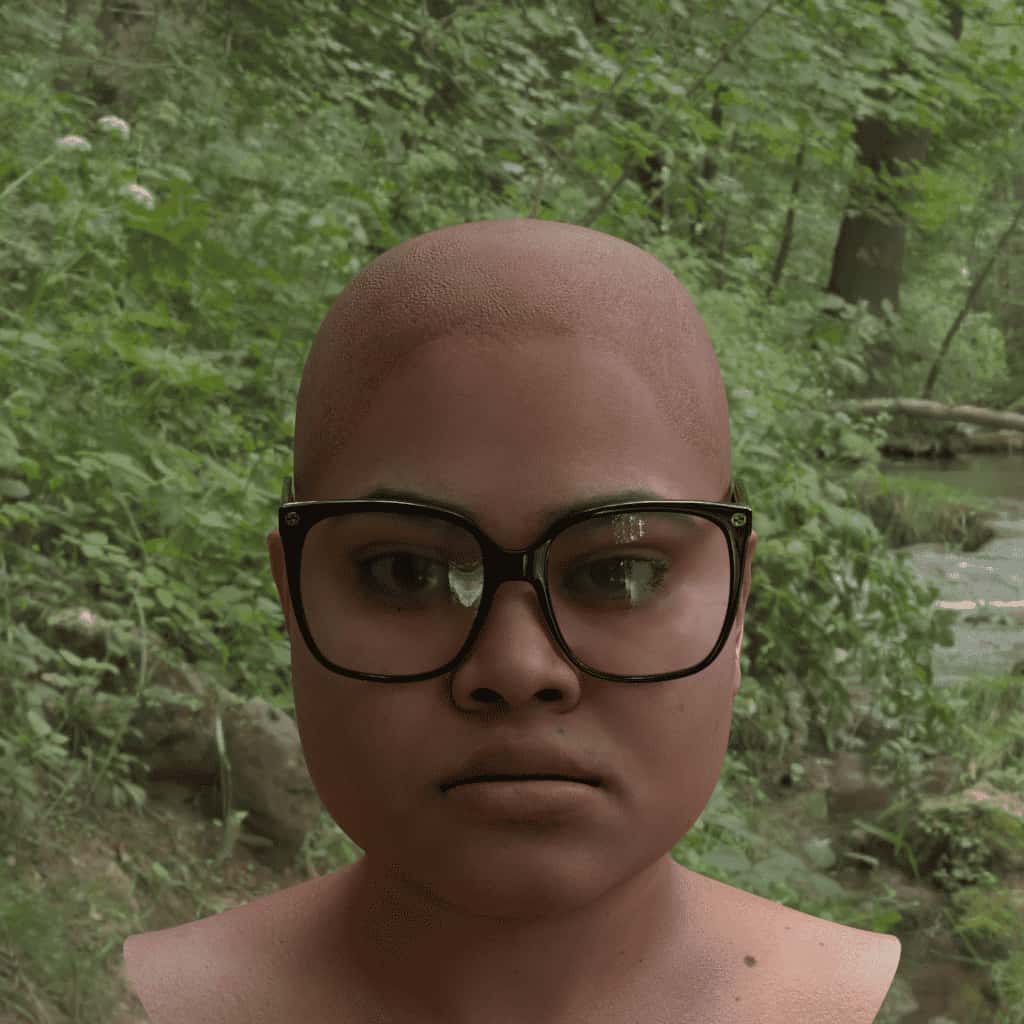

}Glasses

Glasses have style names and several values for color, specified in the documentation appendix, as well as transparency and "mirrored-ness", referred to as metalness. For clear glasses, use a transparency value of 1. For fully mirrored glasses, use a metalness value of 1.

Note: The all keyword does not contain the none keyword, meaning that if all is requested for styles, lens colors, etc., a none or empty value will not be included.

style:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

lens_color:

- Default: ["default"]

- Note: Use ["all"] as a shorthand for every option

transparency:

- Default: 1

- Min: 0.0

- Max: 1.0 (fully clear/transparent)

metalness:

- Default: 1

- Min: 0.0

- Max: 1.0 (fully mirrored)

sex_matched_only:

- Default: true

An example of clear, regular glasses json:

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"accessories": {

"glasses": [{

"style": ["all"],

"lens_color": ["default"],

"metalness": {"type":"list", "values":[0]},

"transparency": {"type":"list", "values":[1]},

"sex_matched_only": true,

"percent": 100

}]

}

}

]

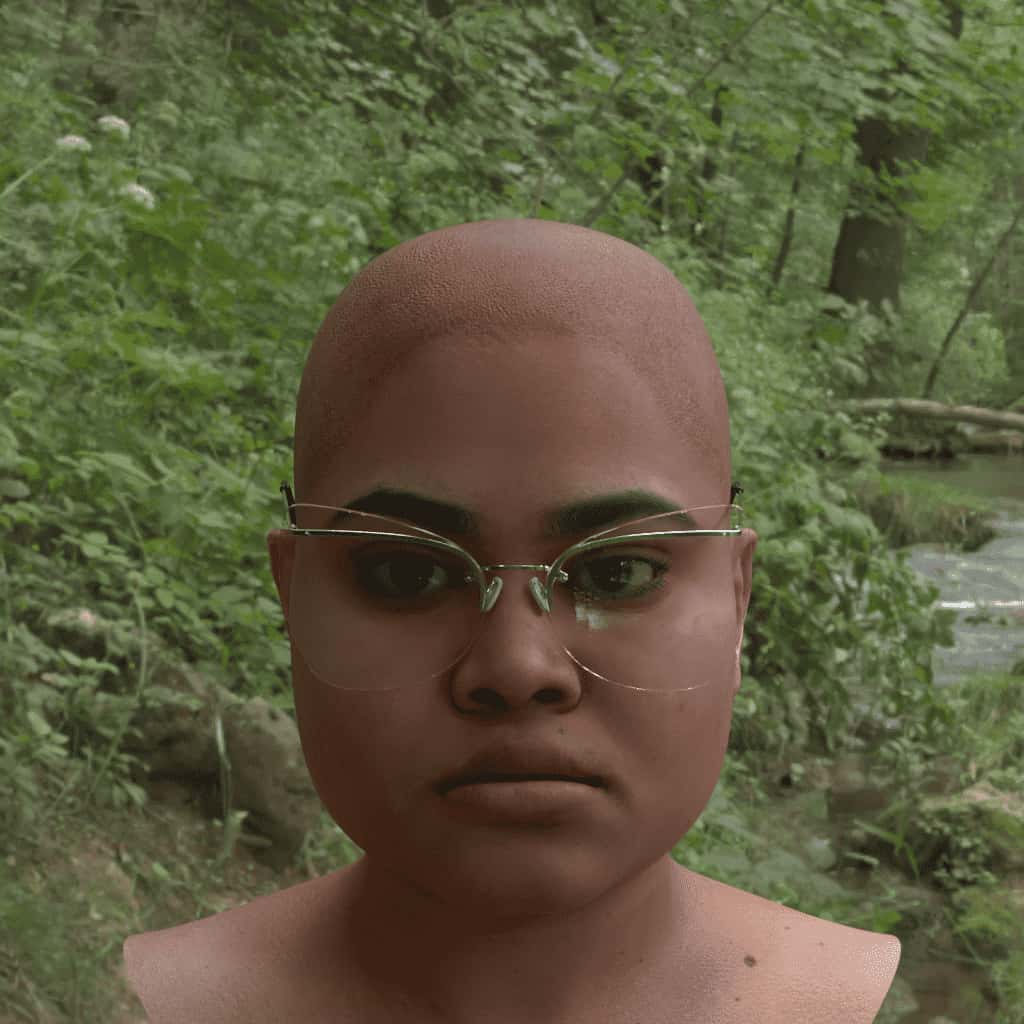

}Visual examples of glasses styles (see also: full input JSON to generate them):

Visual examples of glasses colors (see also: full input JSON to generate them):

Visual examples of glasses metalness (see also: full input JSON to generate them):

Visual examples of glasses transparency (see also: full input JSON to generate them):

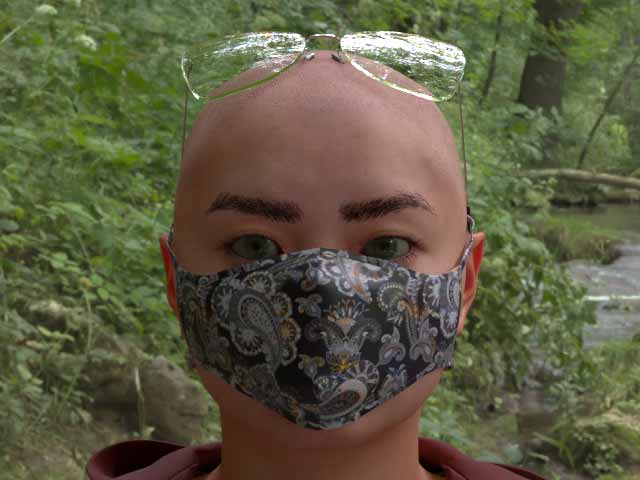

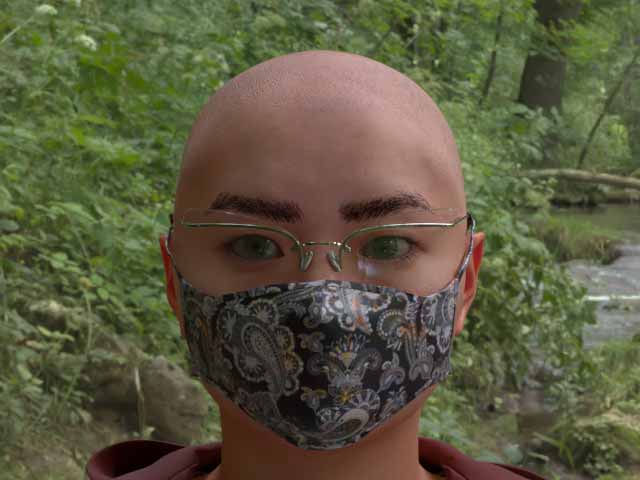

Glasses move to the top of the head when masks are in position 0 or 1 — in all other mask positions, glasses remain over the eyes. A demonstration of the difference:

Headwear

There are several styles of hats scaled to the size of the head. Simply a style name or list of them is specified. Style names can be found in the documentation appendix.

Note: At the moment, hair should not be specified if any headwear is specified.

Note 2: The all keyword does not contain the none keyword, meaning that if all is requested for styles, etc., a none or empty value will not be included.

You can also force the headwear to be sex-appropriate for the identity (sex_matched_only). Like most sex-appropriate values, while this option may “feel” more appropriate, we have found that for machine learning, full variance can generalize better (use-case dependent).

style:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

sex_matched_only:

- Default: true

All headwear style selection:

{

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 1},

"accessories": {

"headwear": [{

"style": ["all"],

"sex_matched_only": true,

"percent": 100

}]

}

}

]

}

Visual examples of headwear styles (see also: full input JSON to generate them):

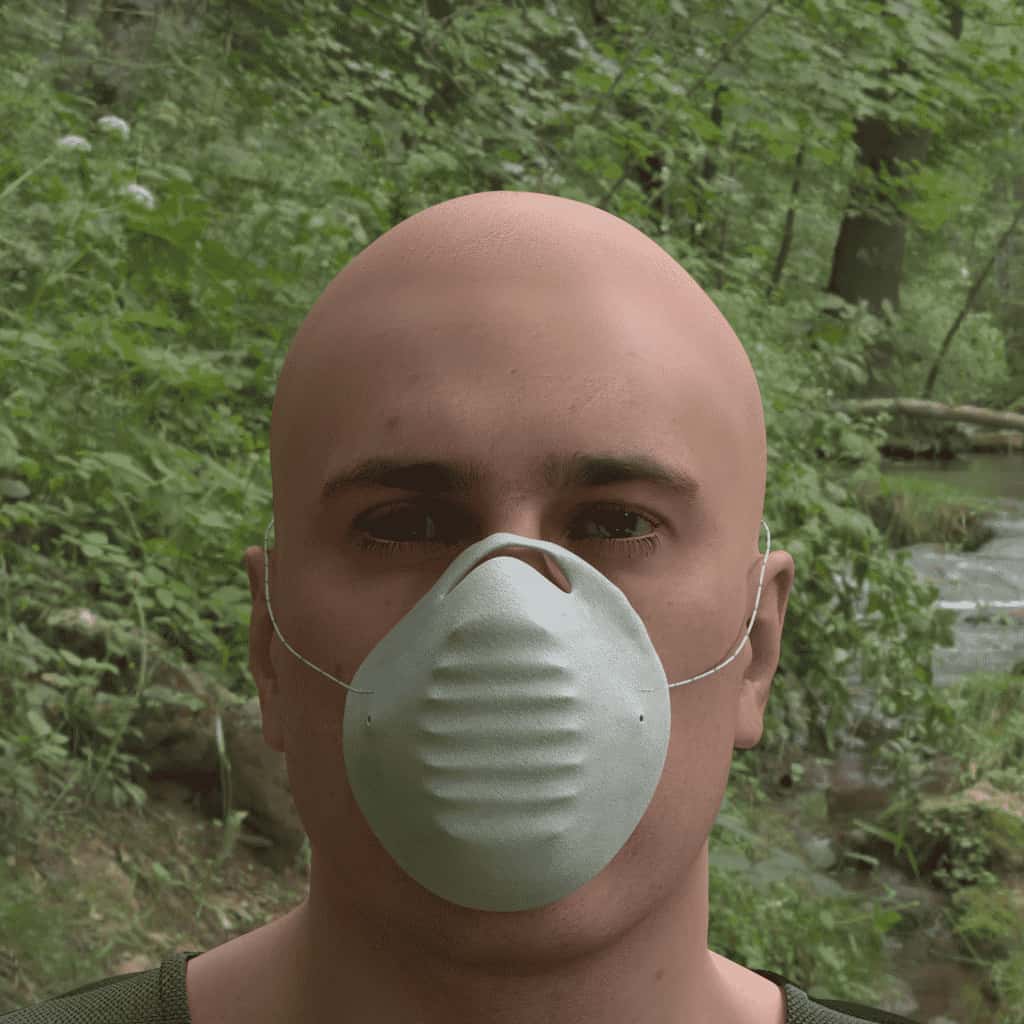

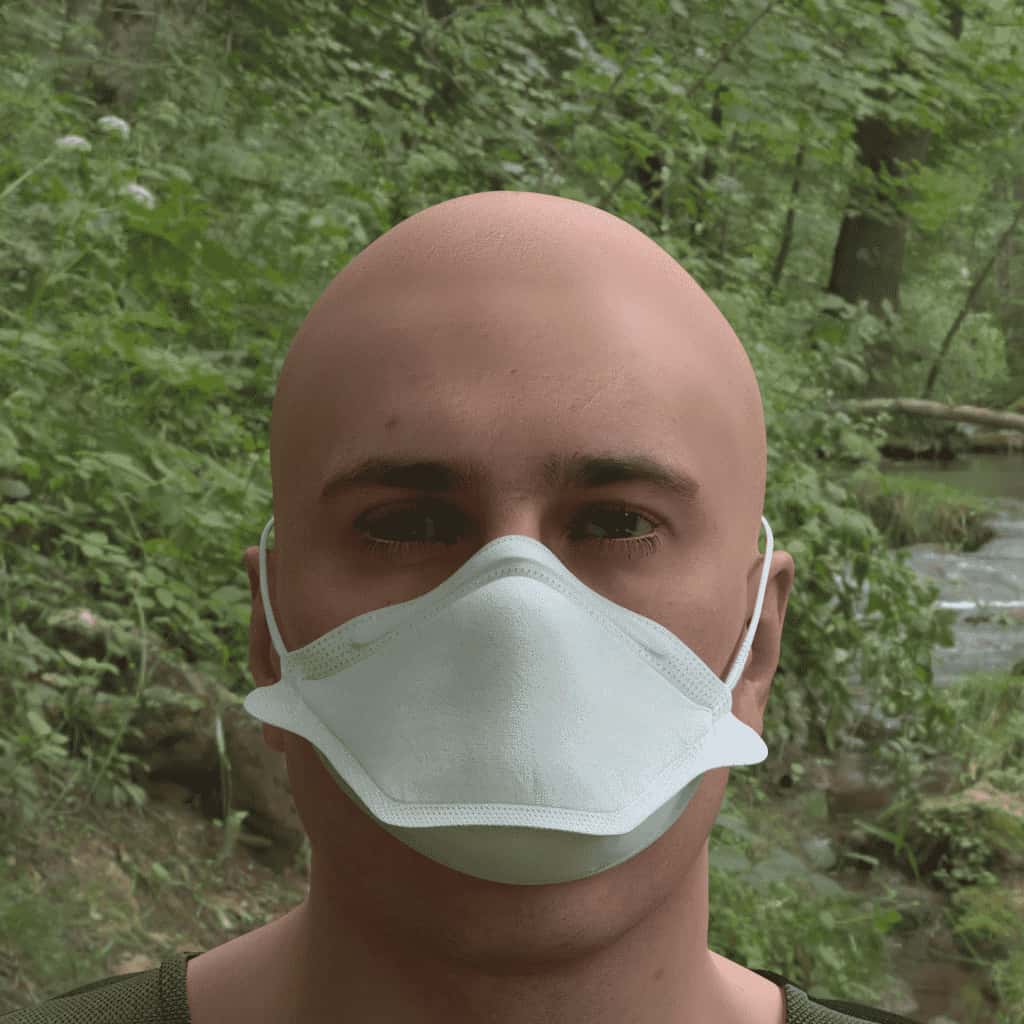

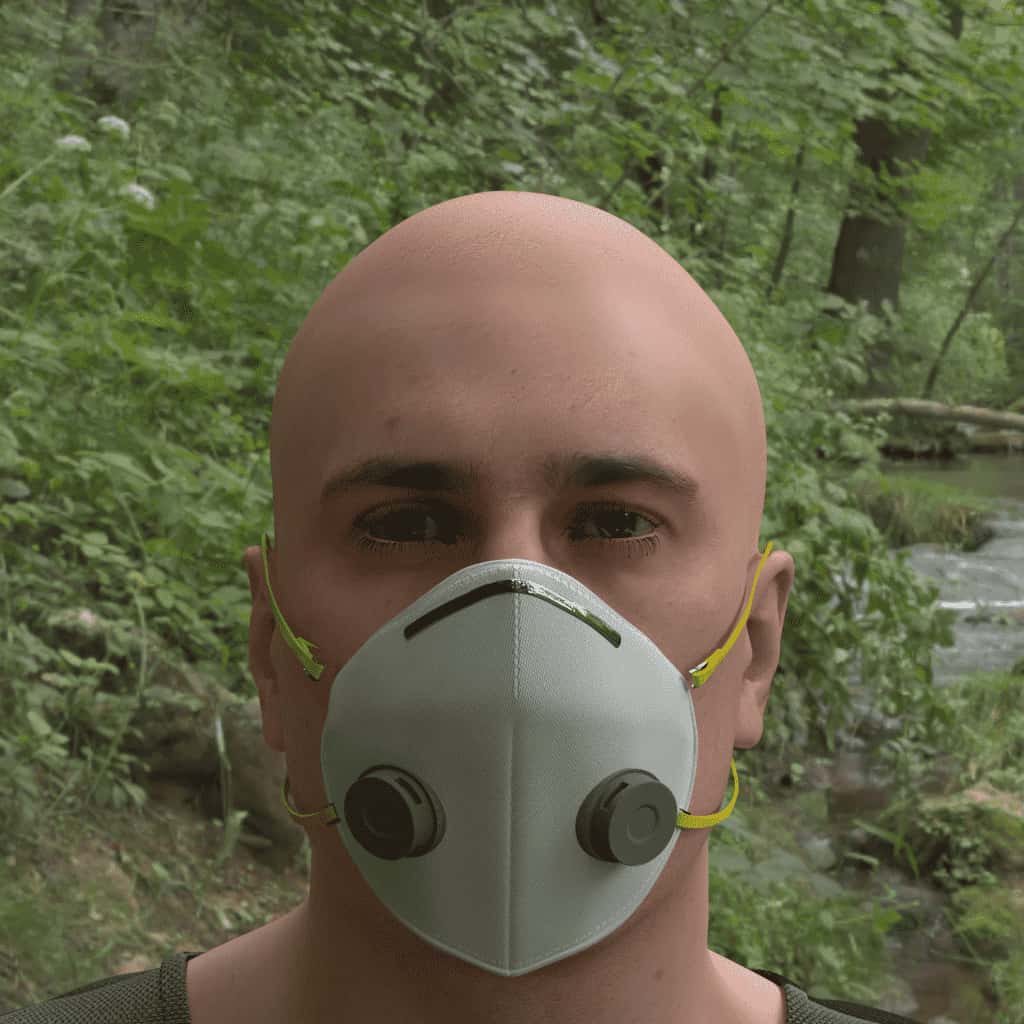

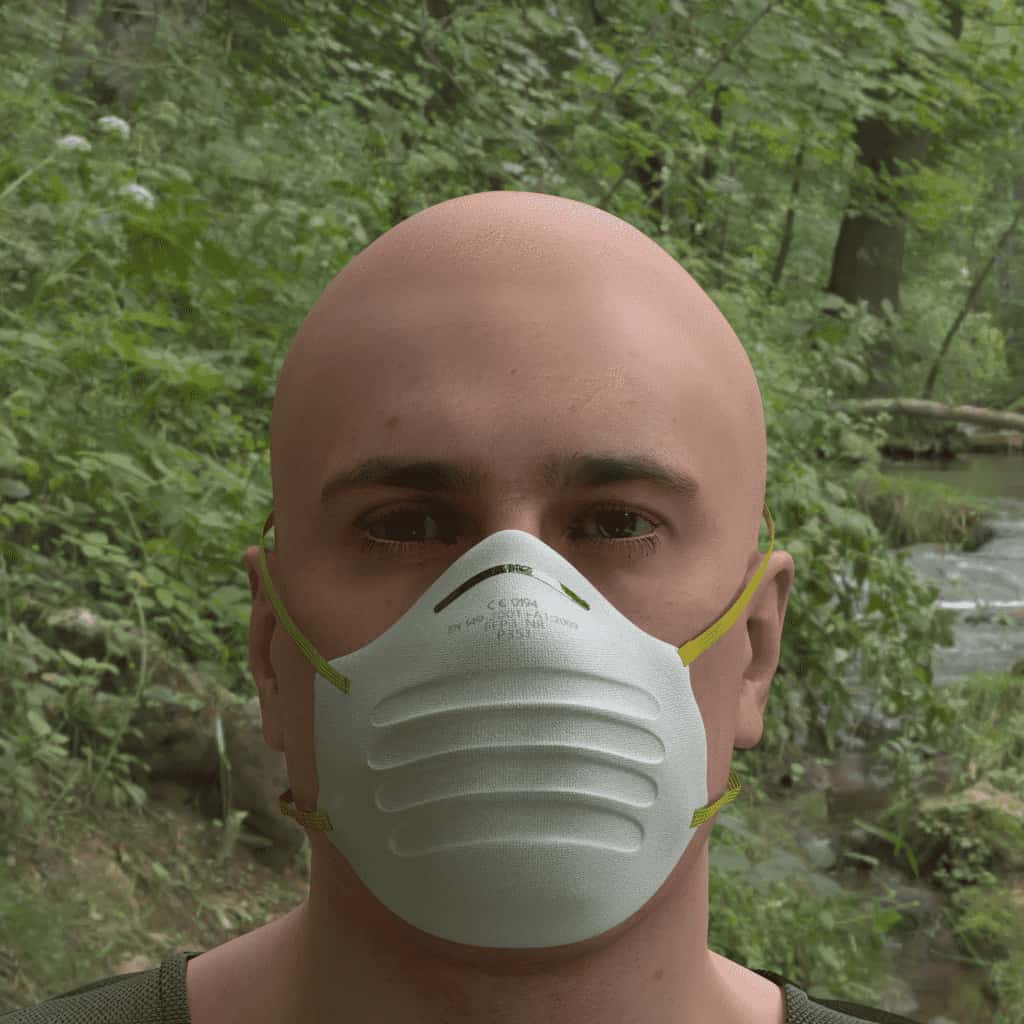

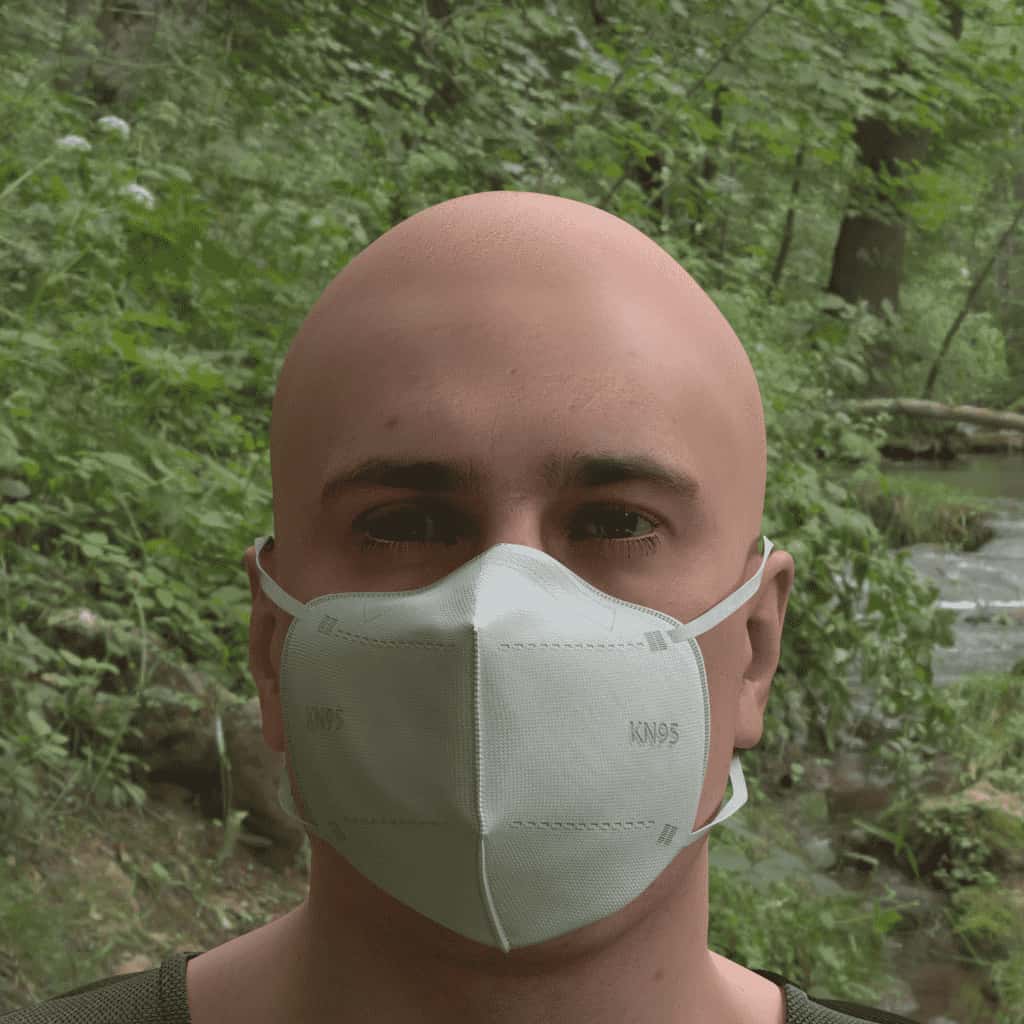

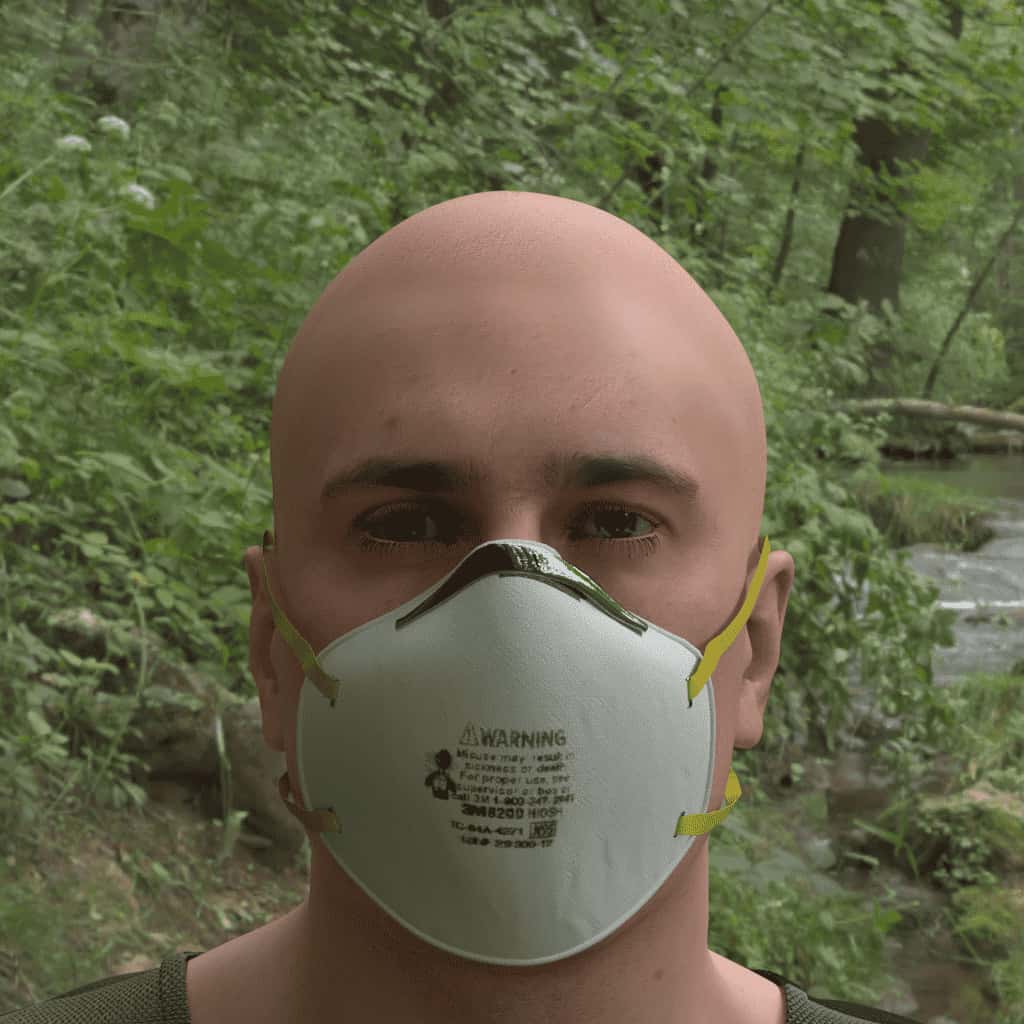

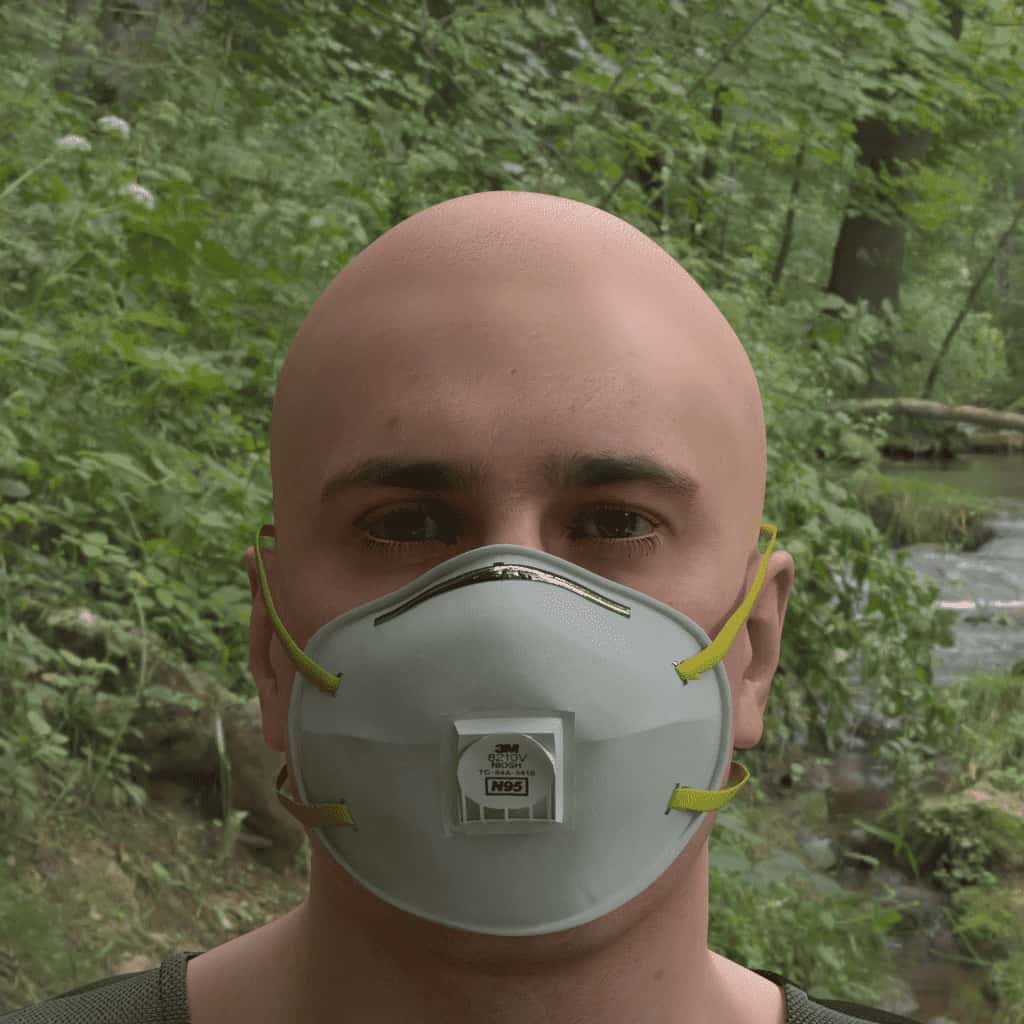

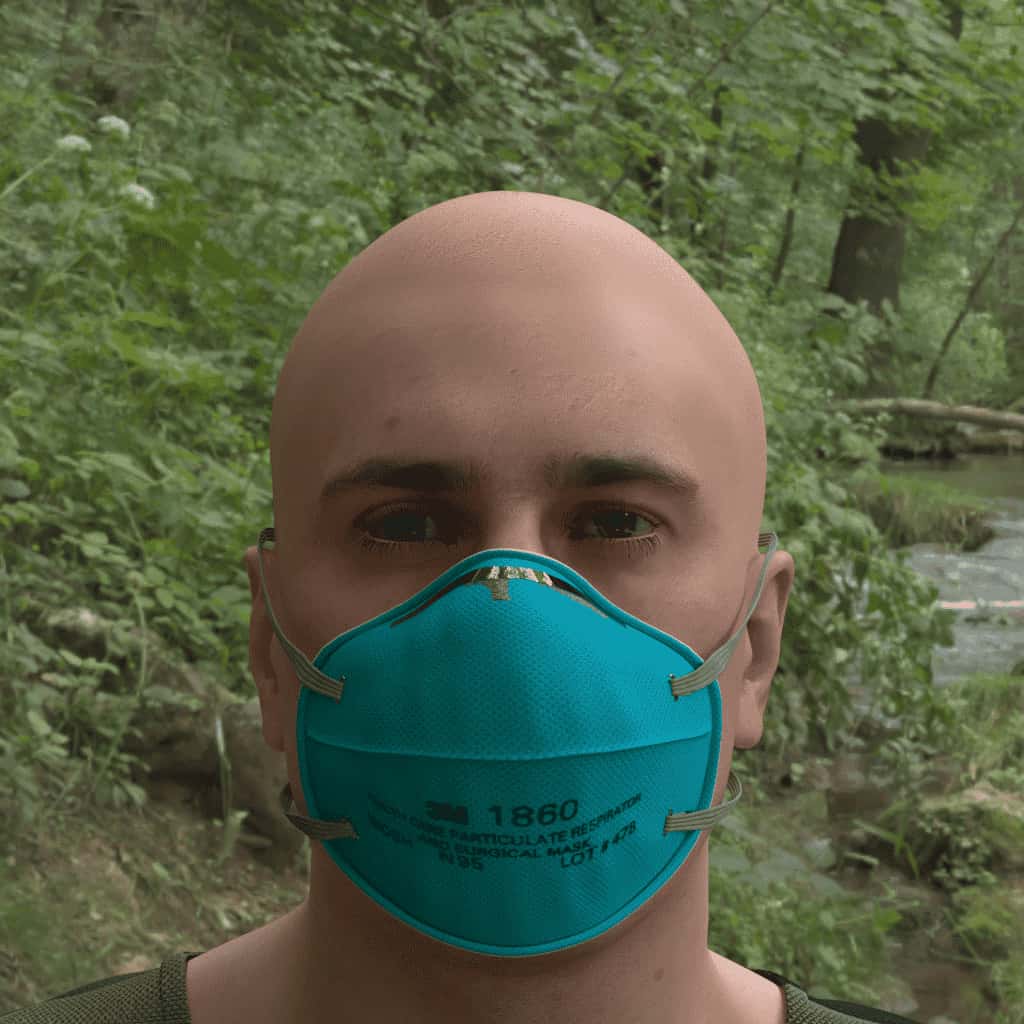

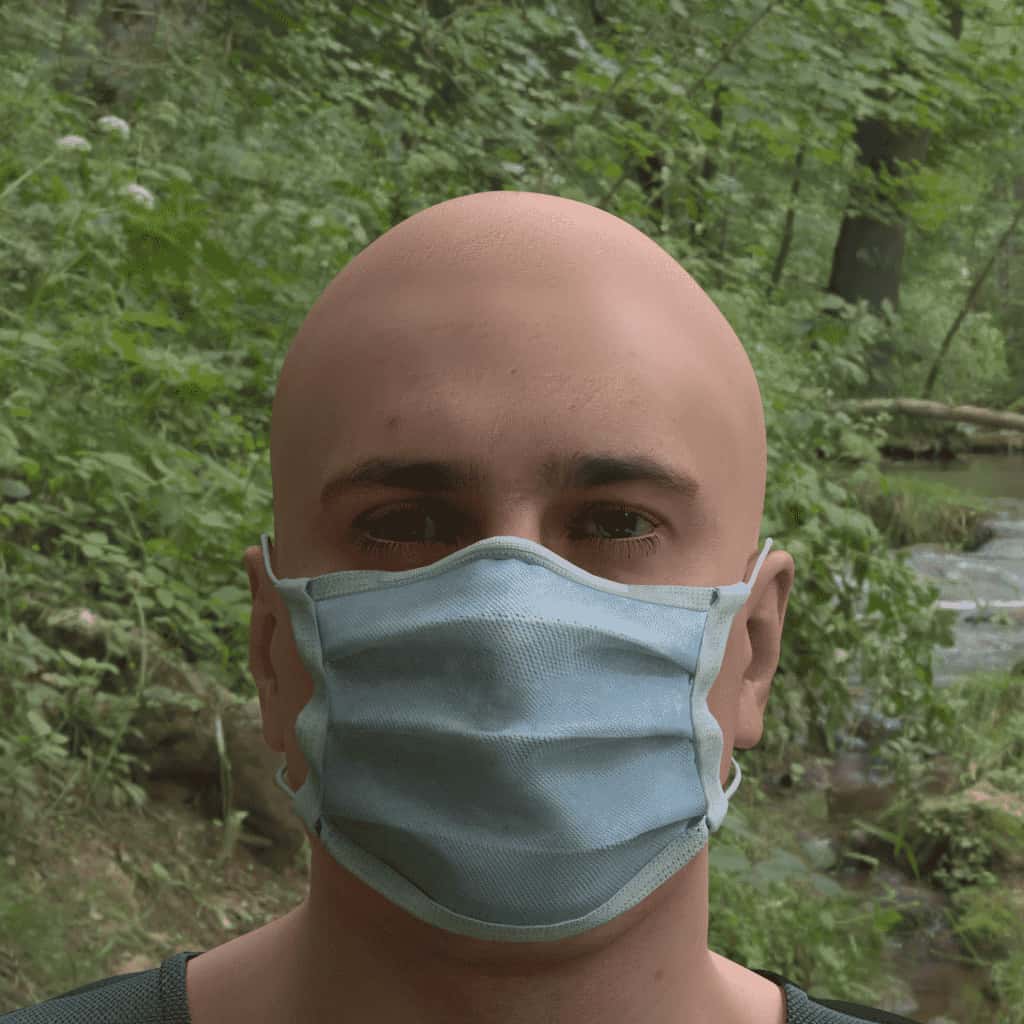

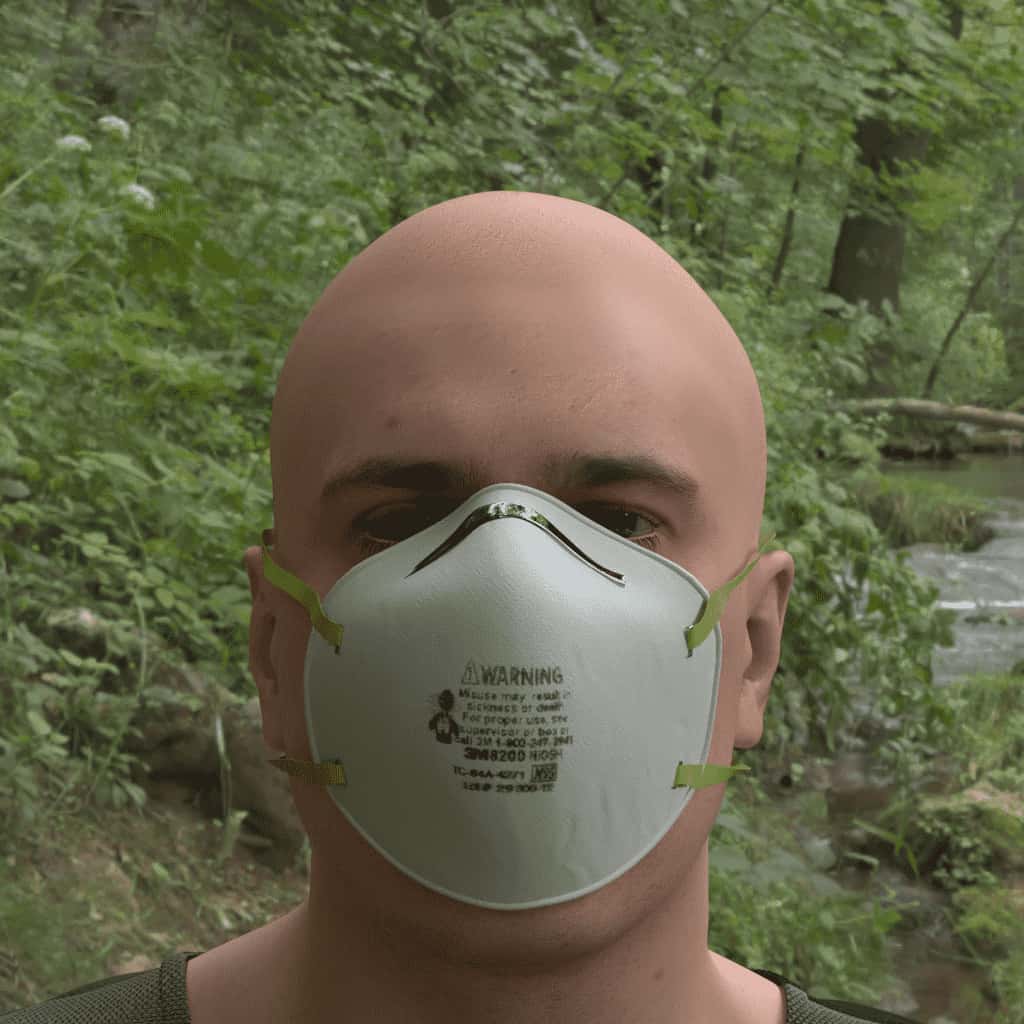

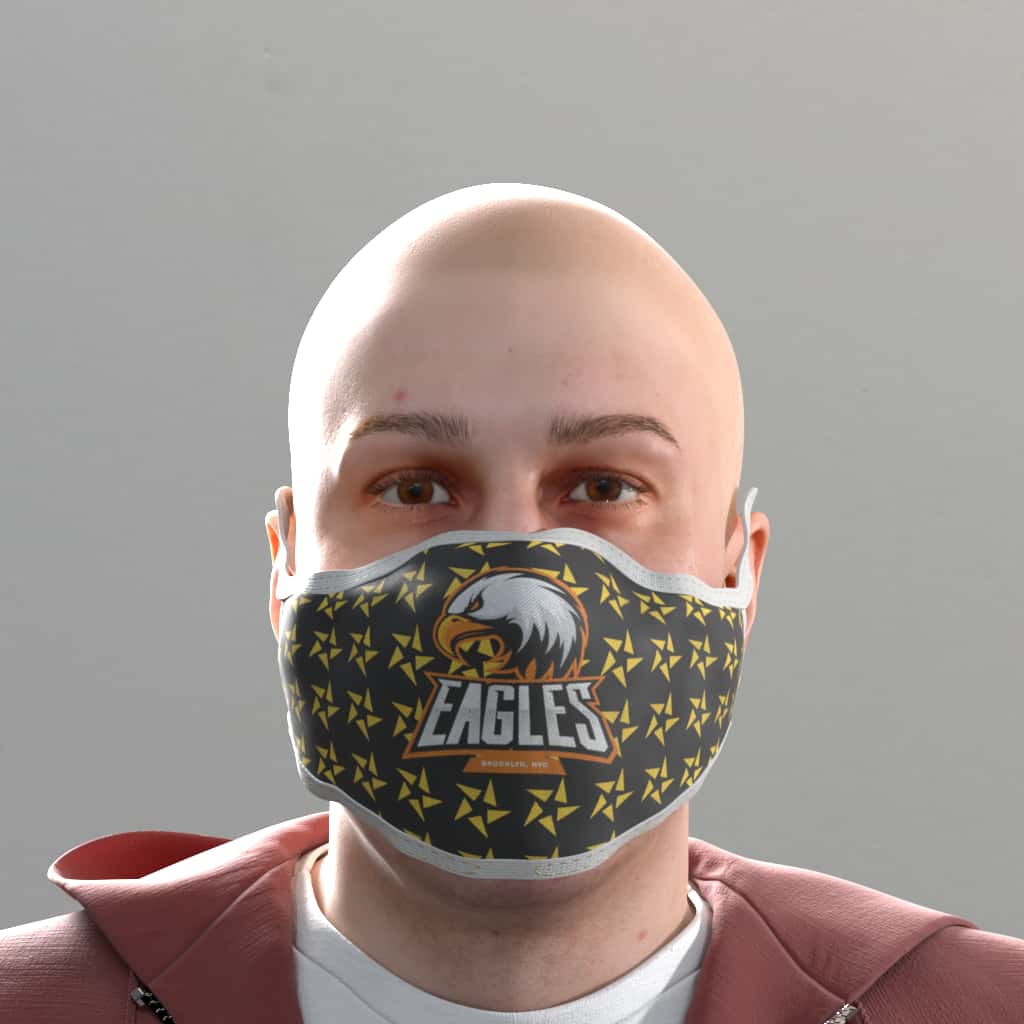

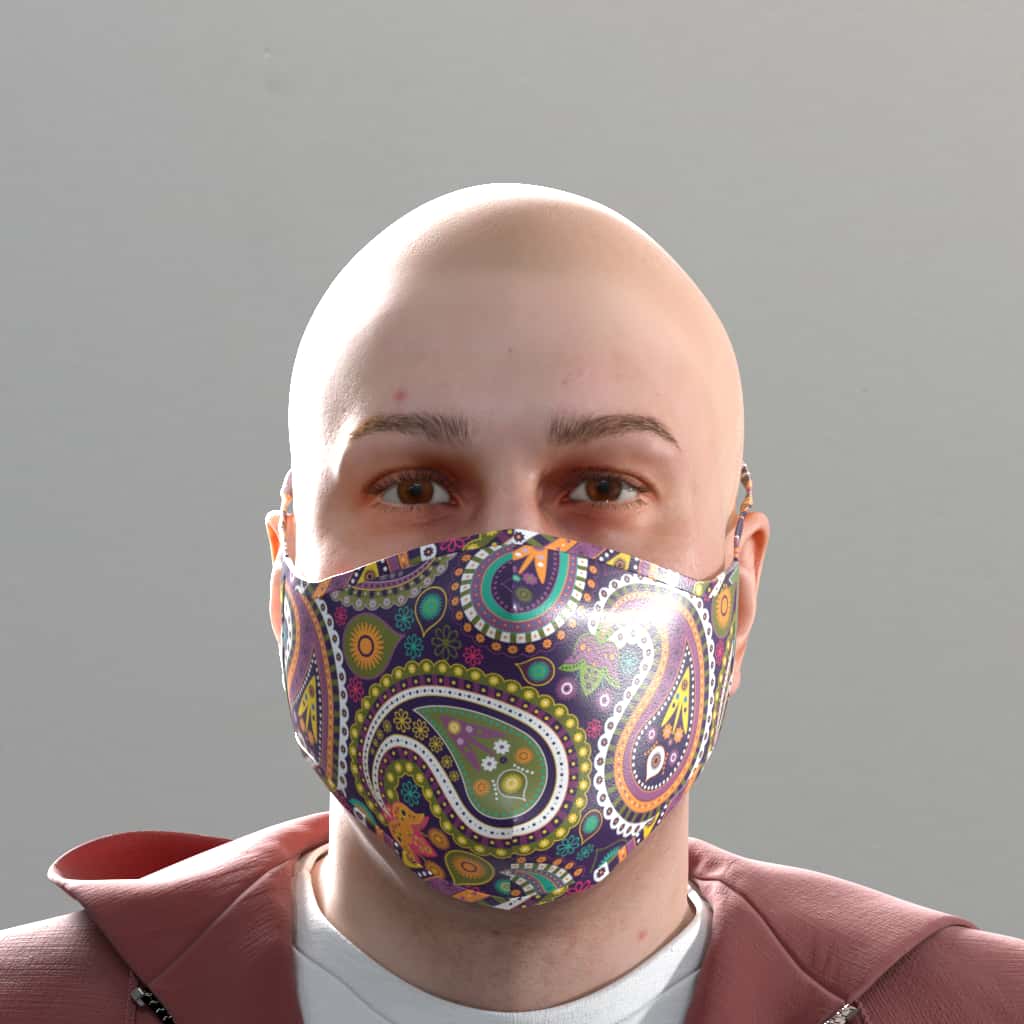

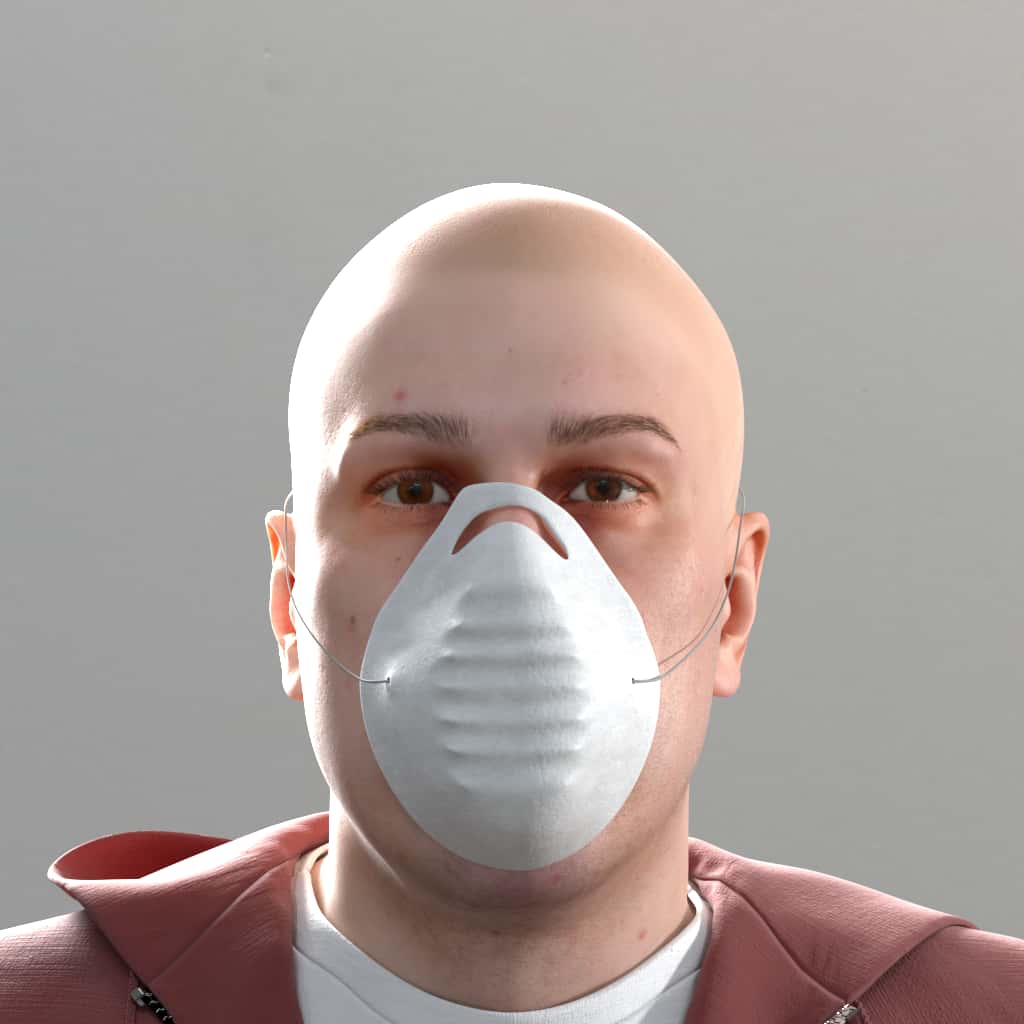

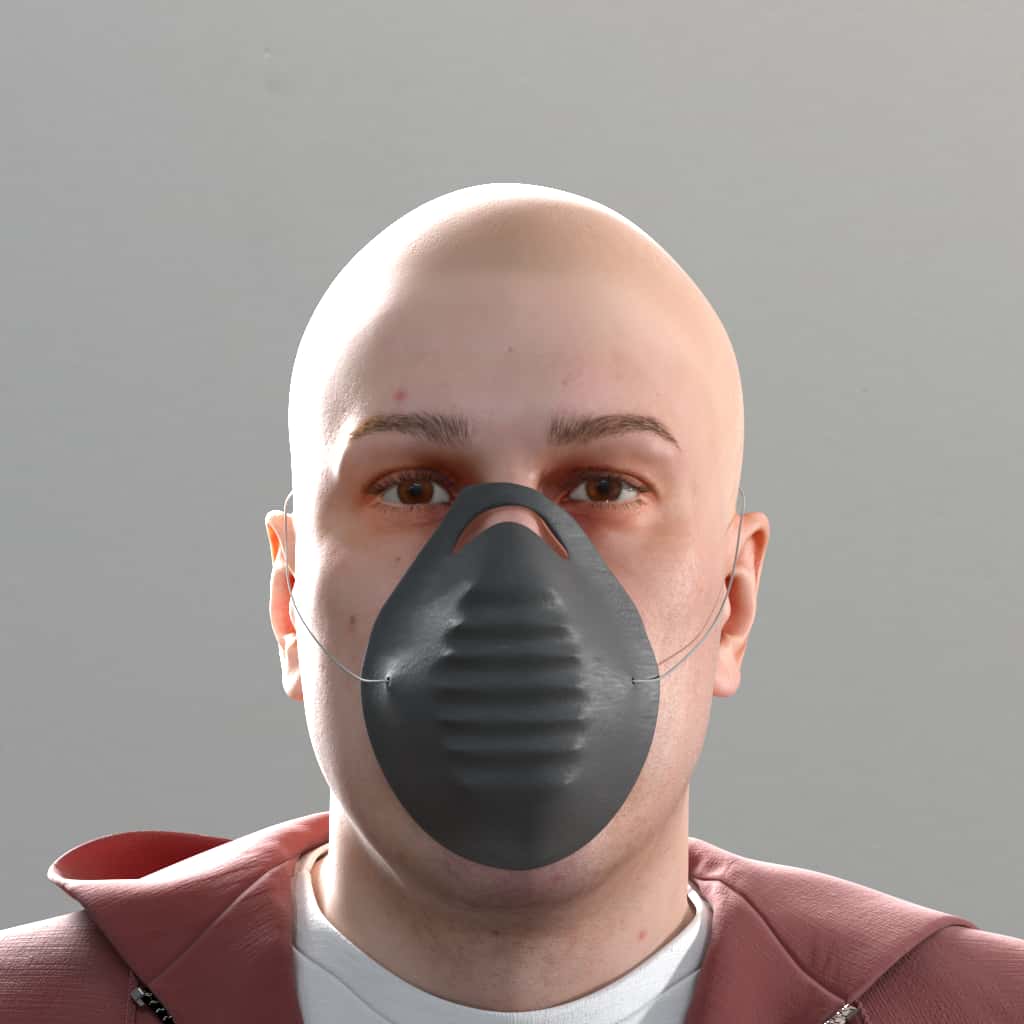

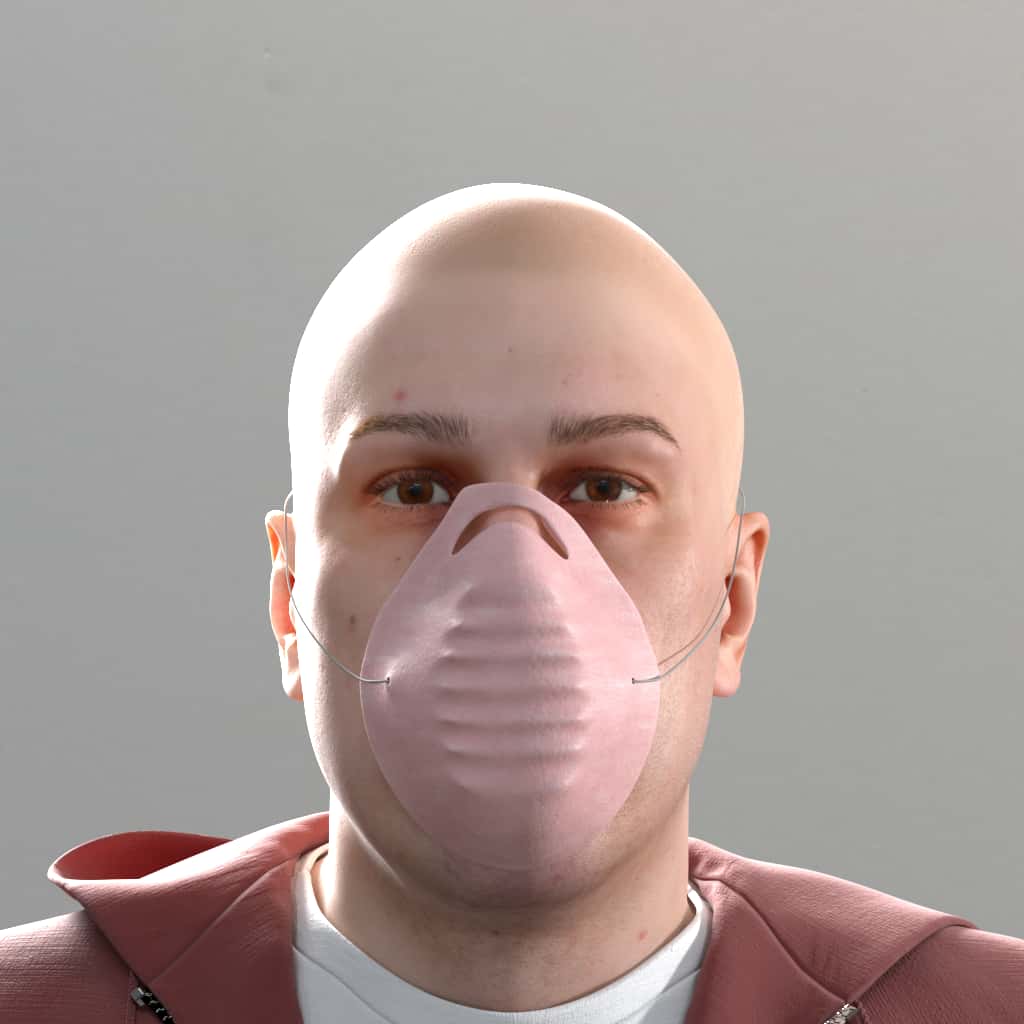

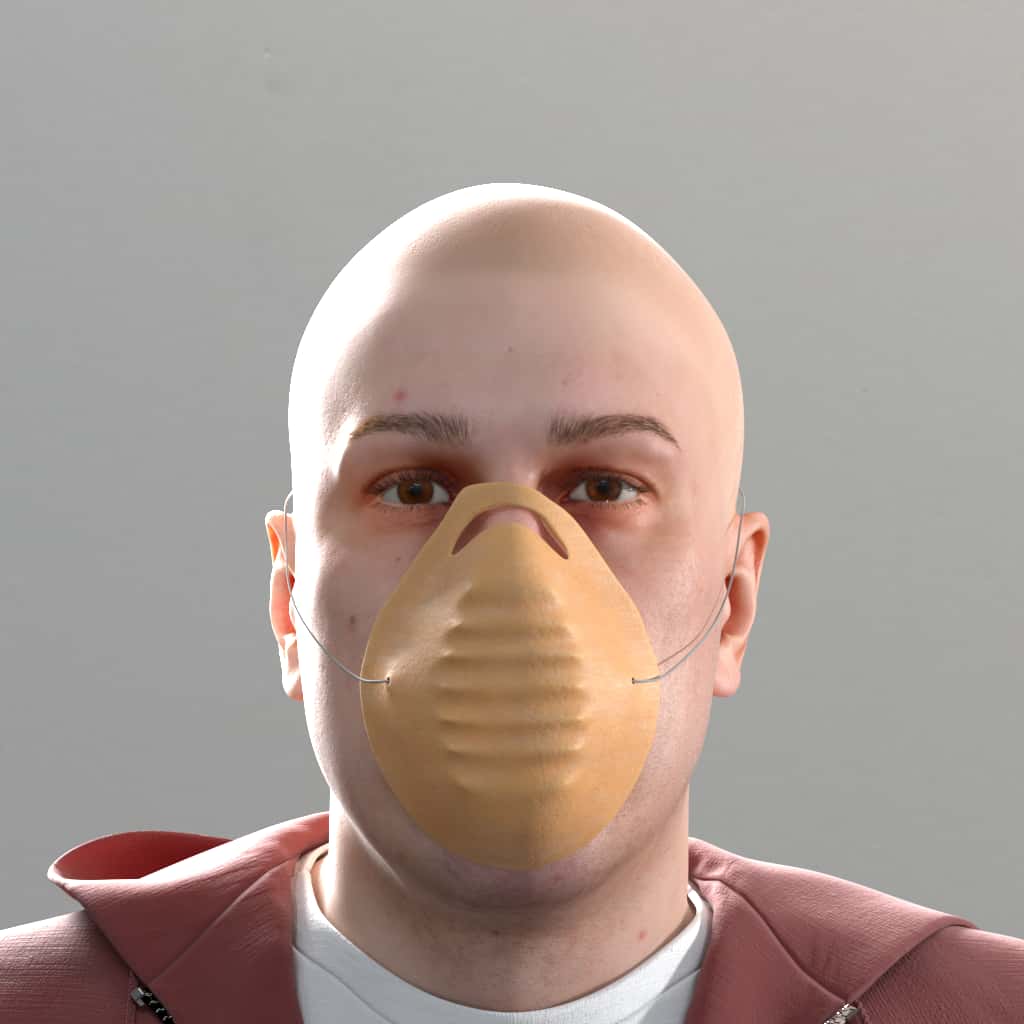

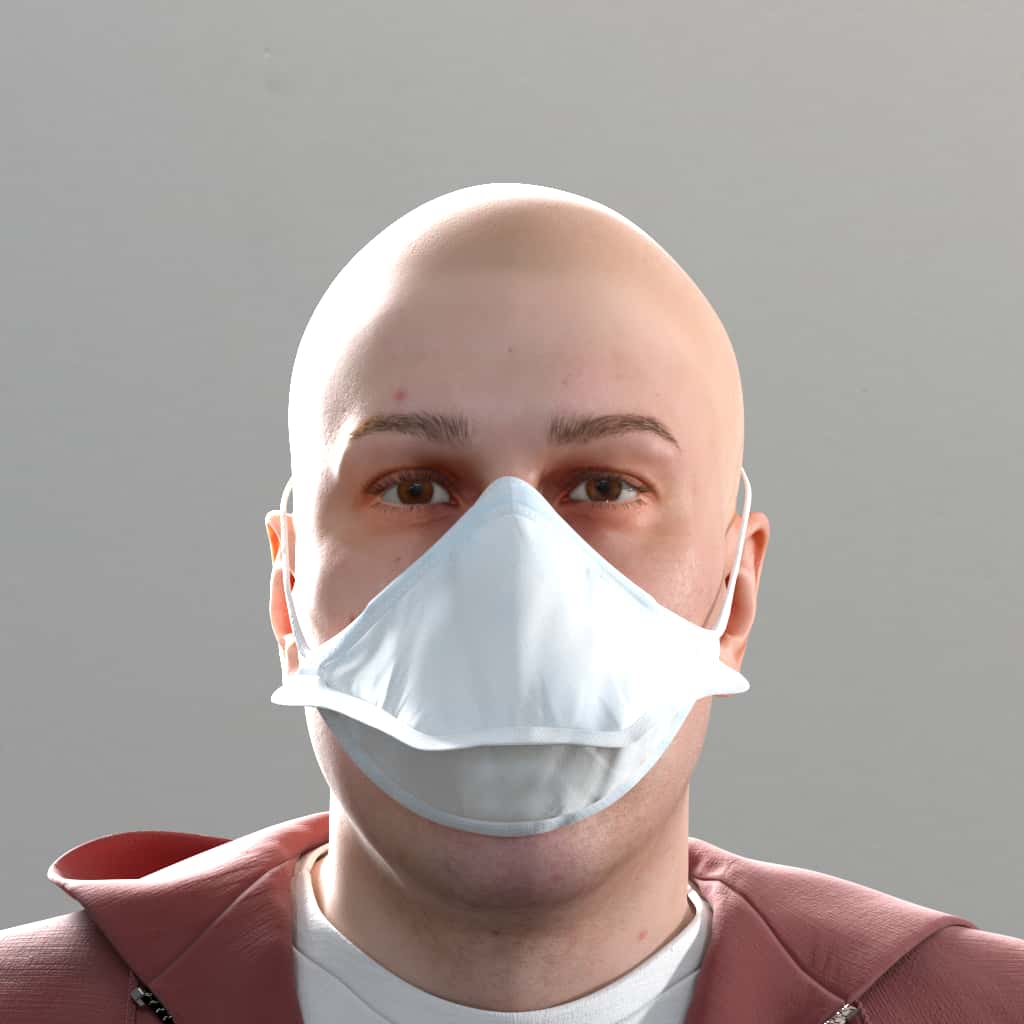

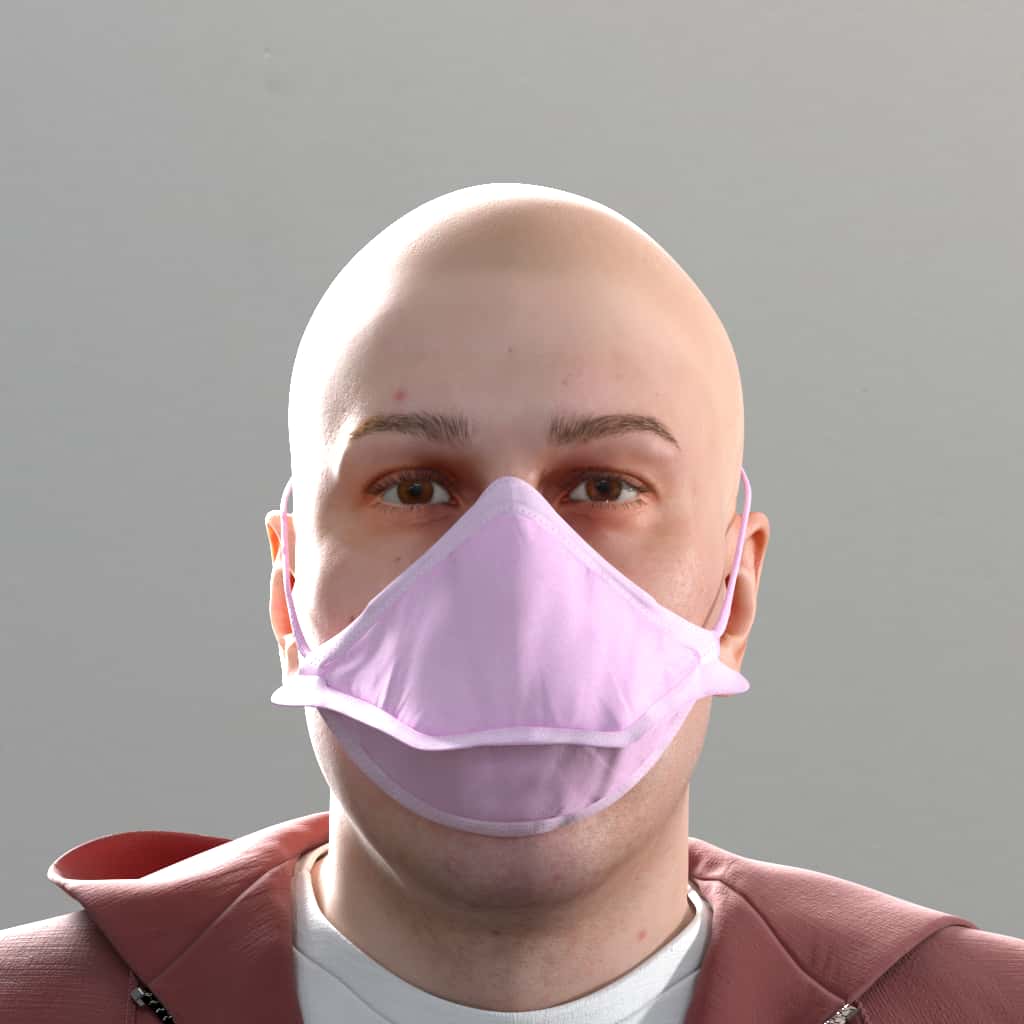

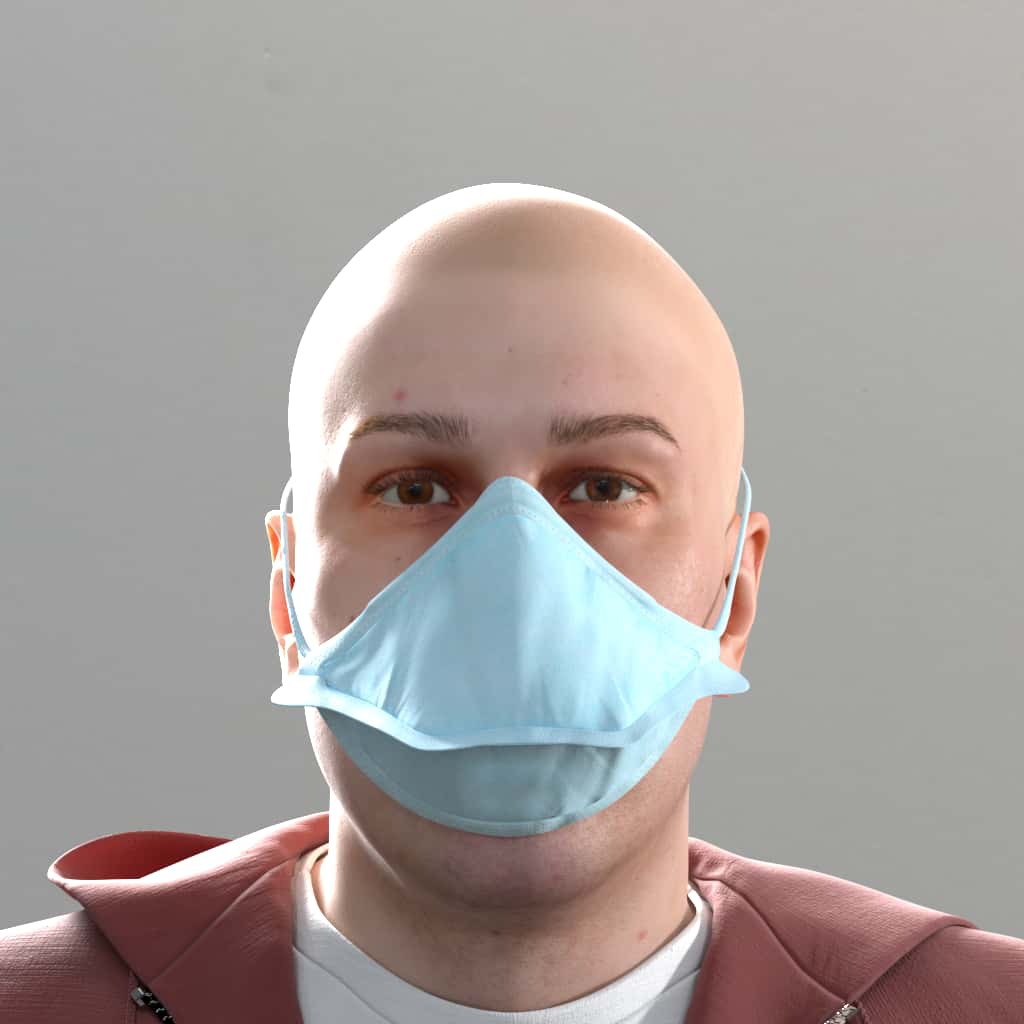

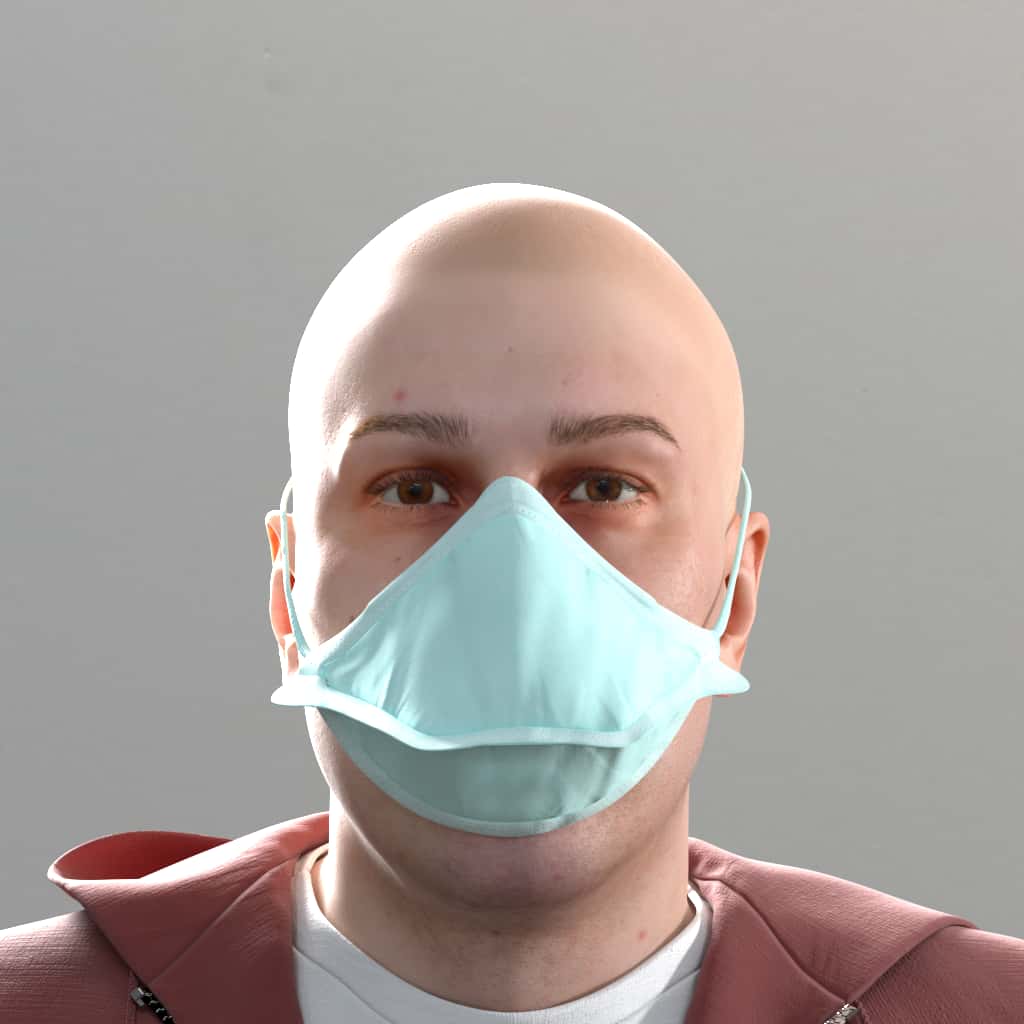

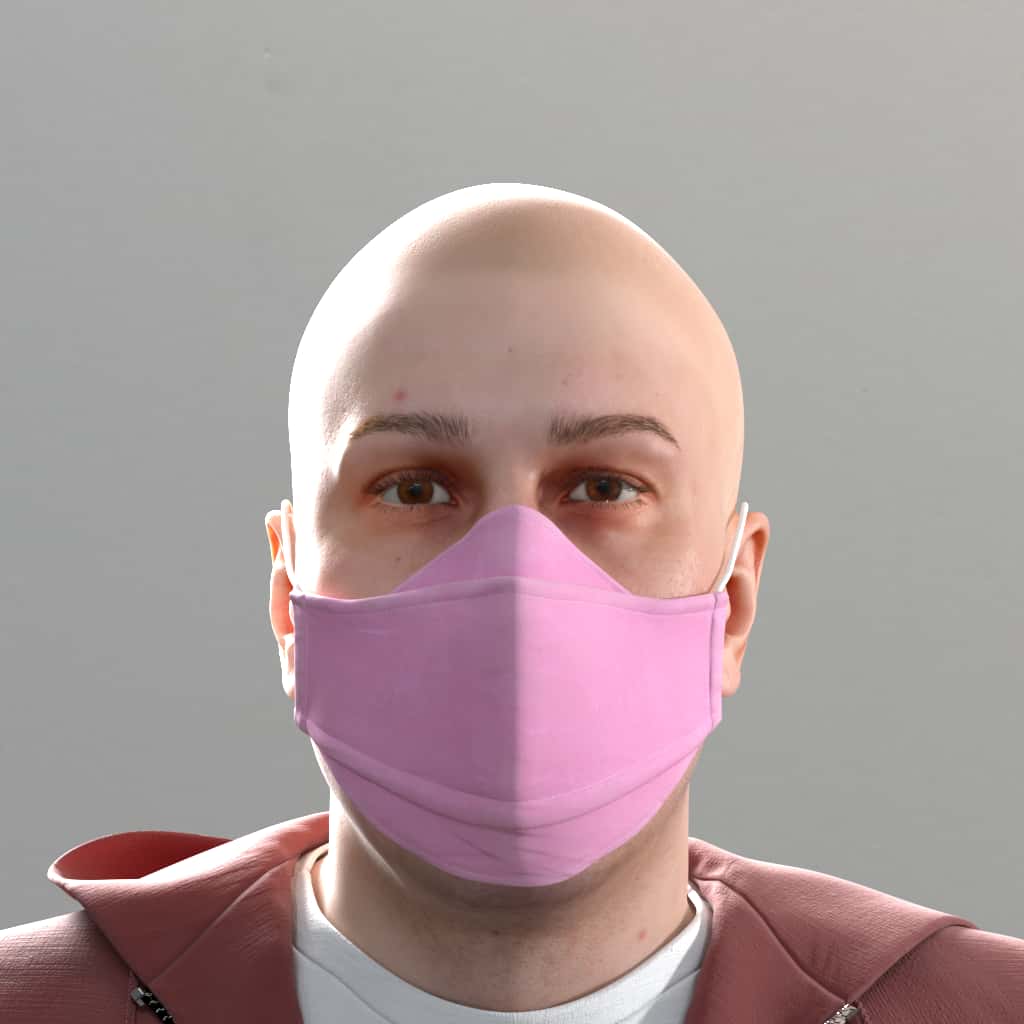

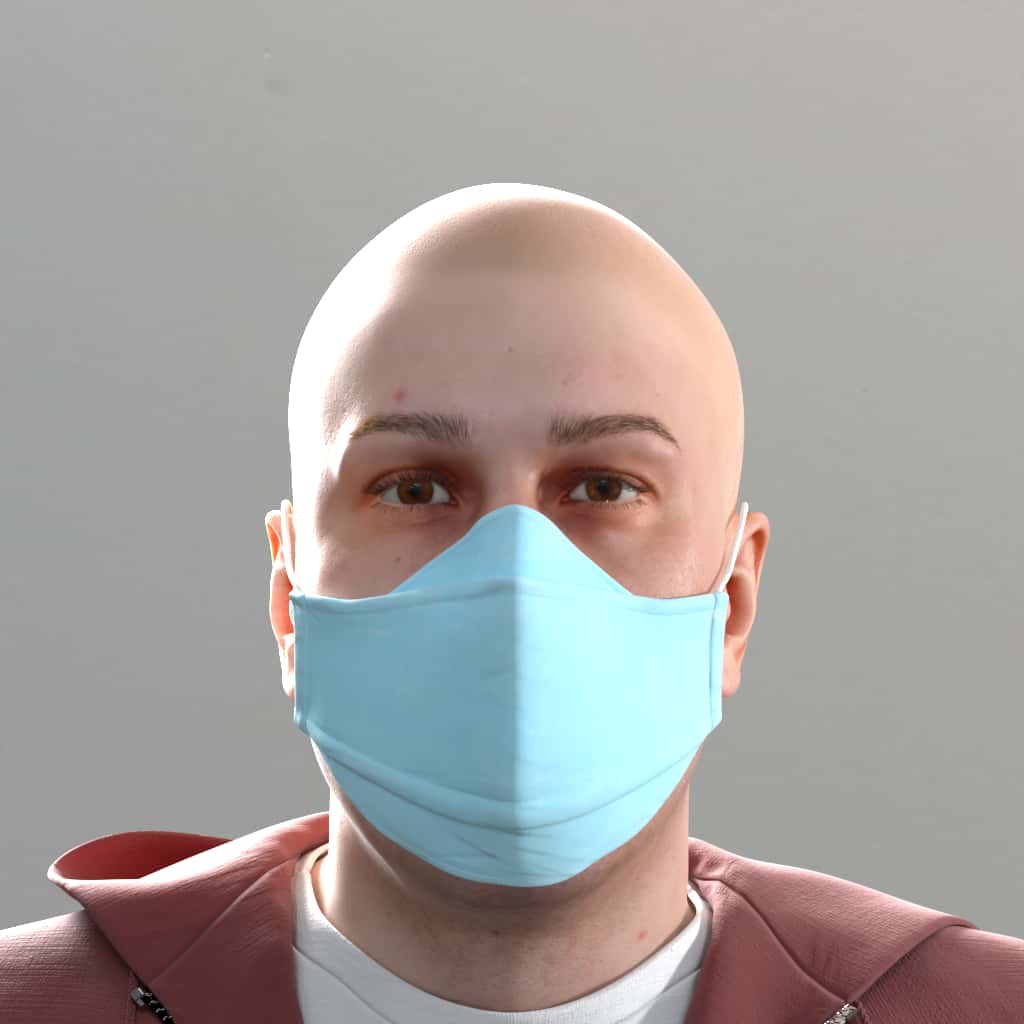

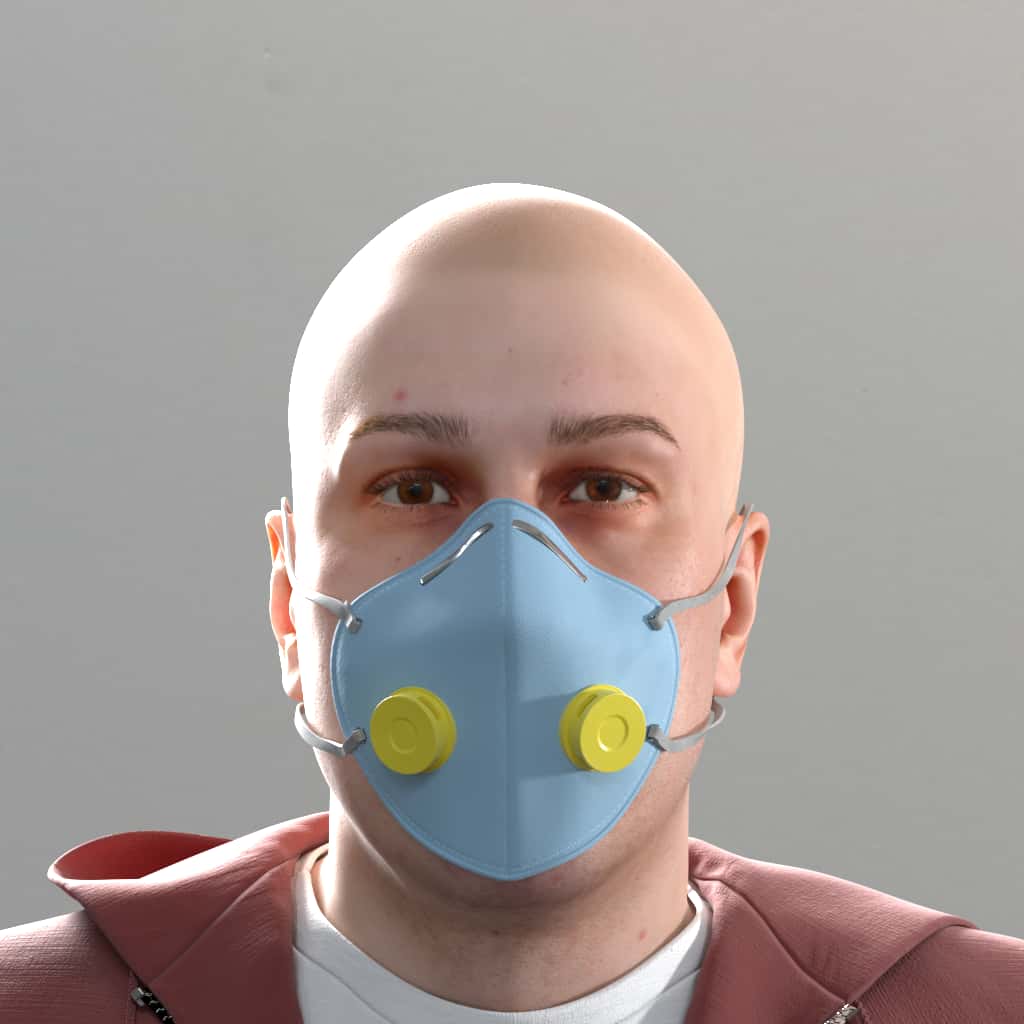

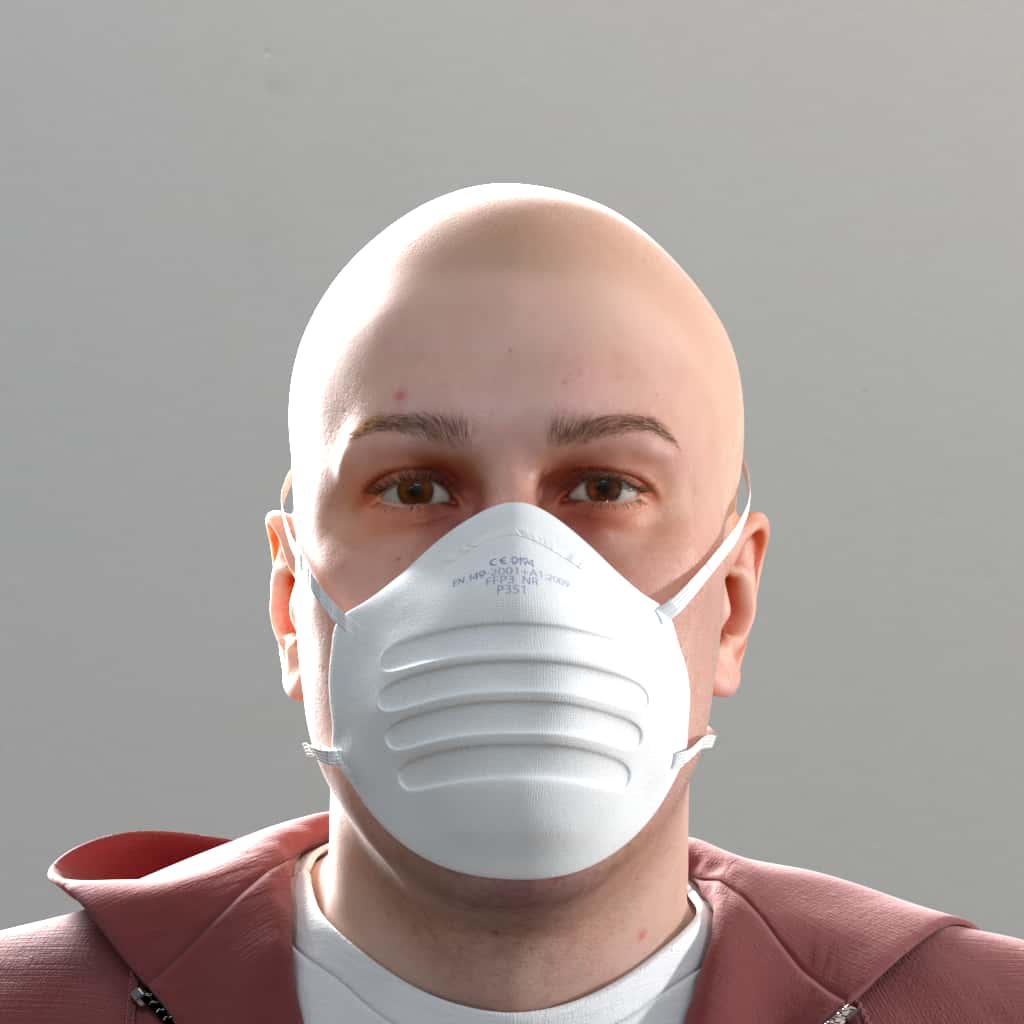

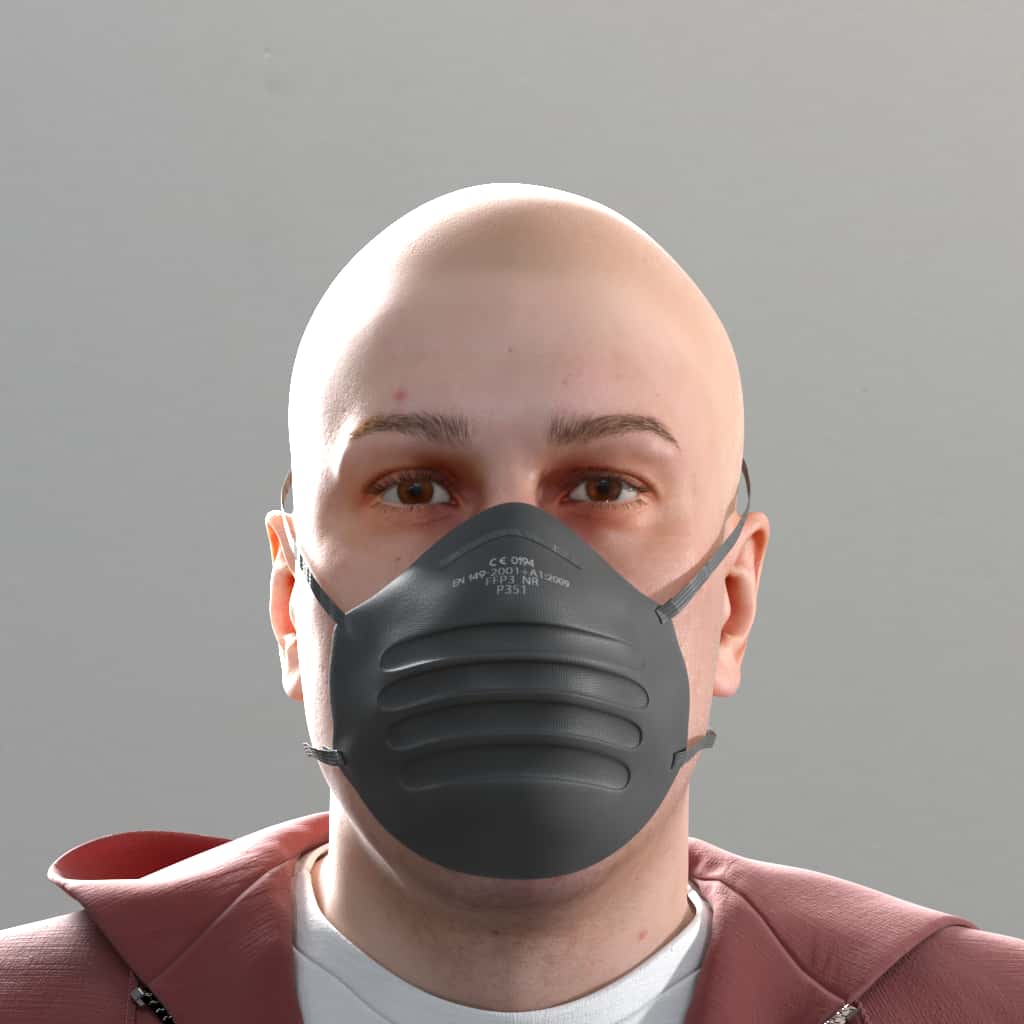

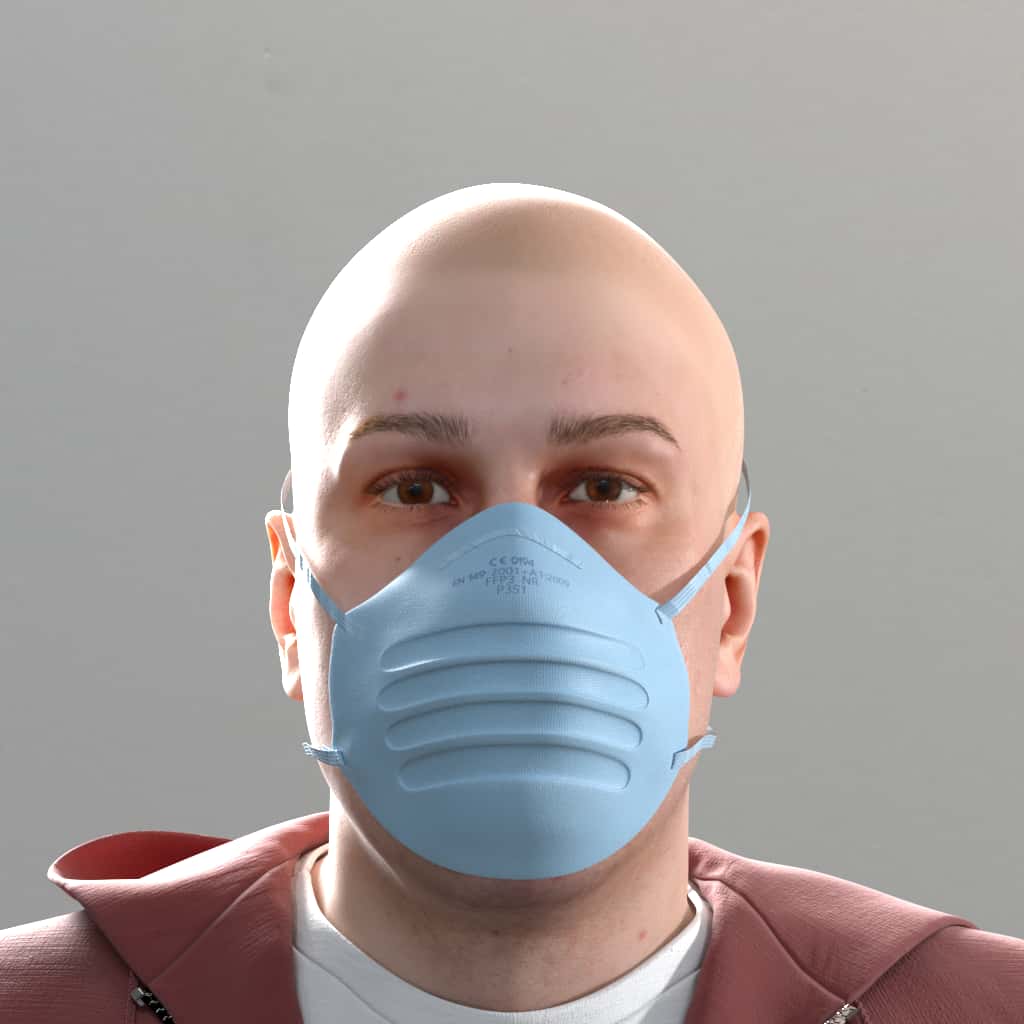

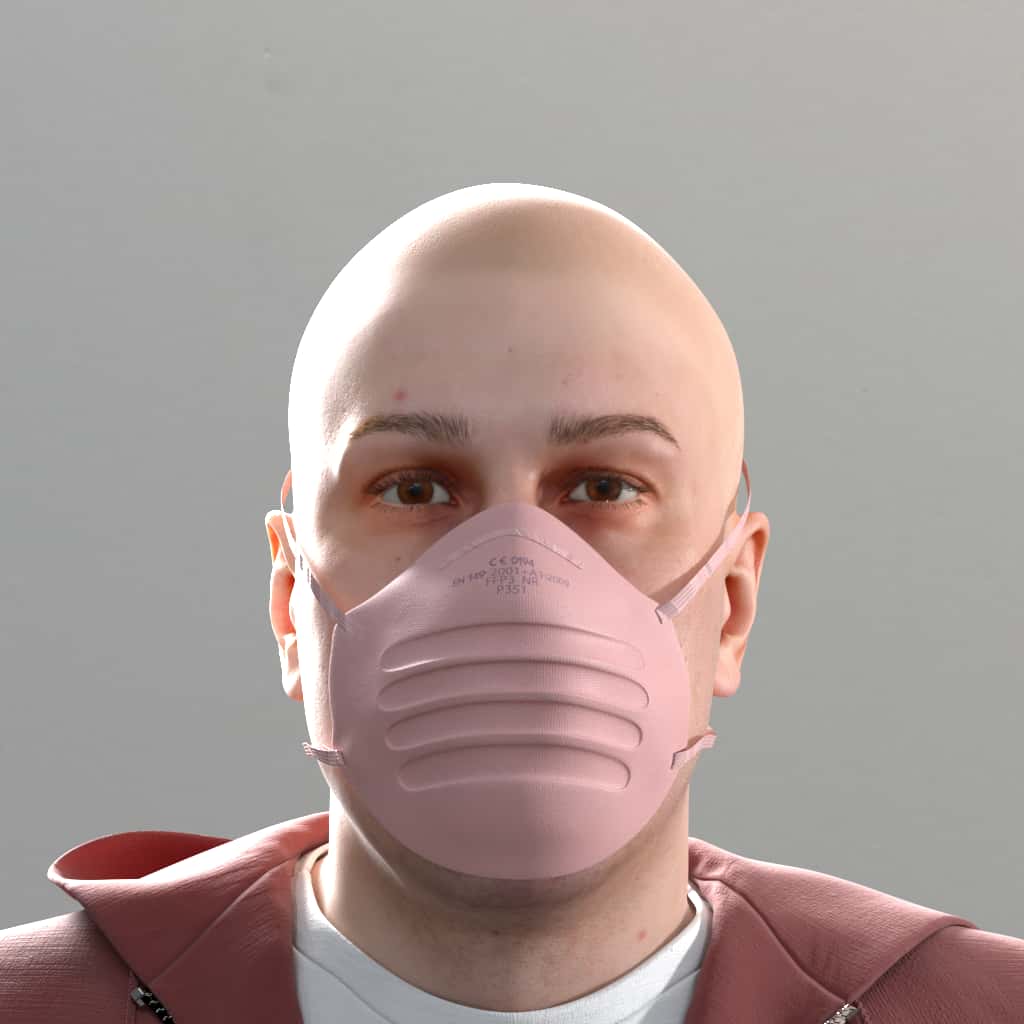

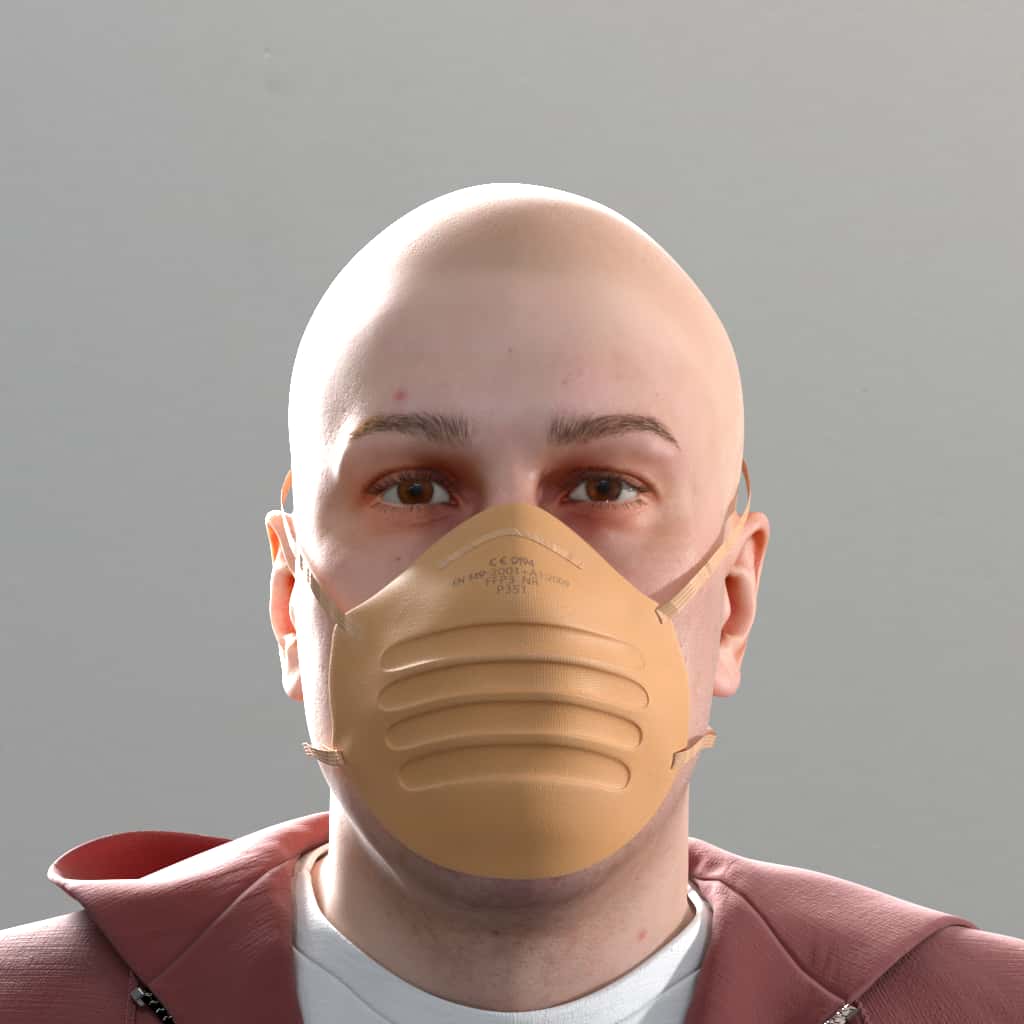

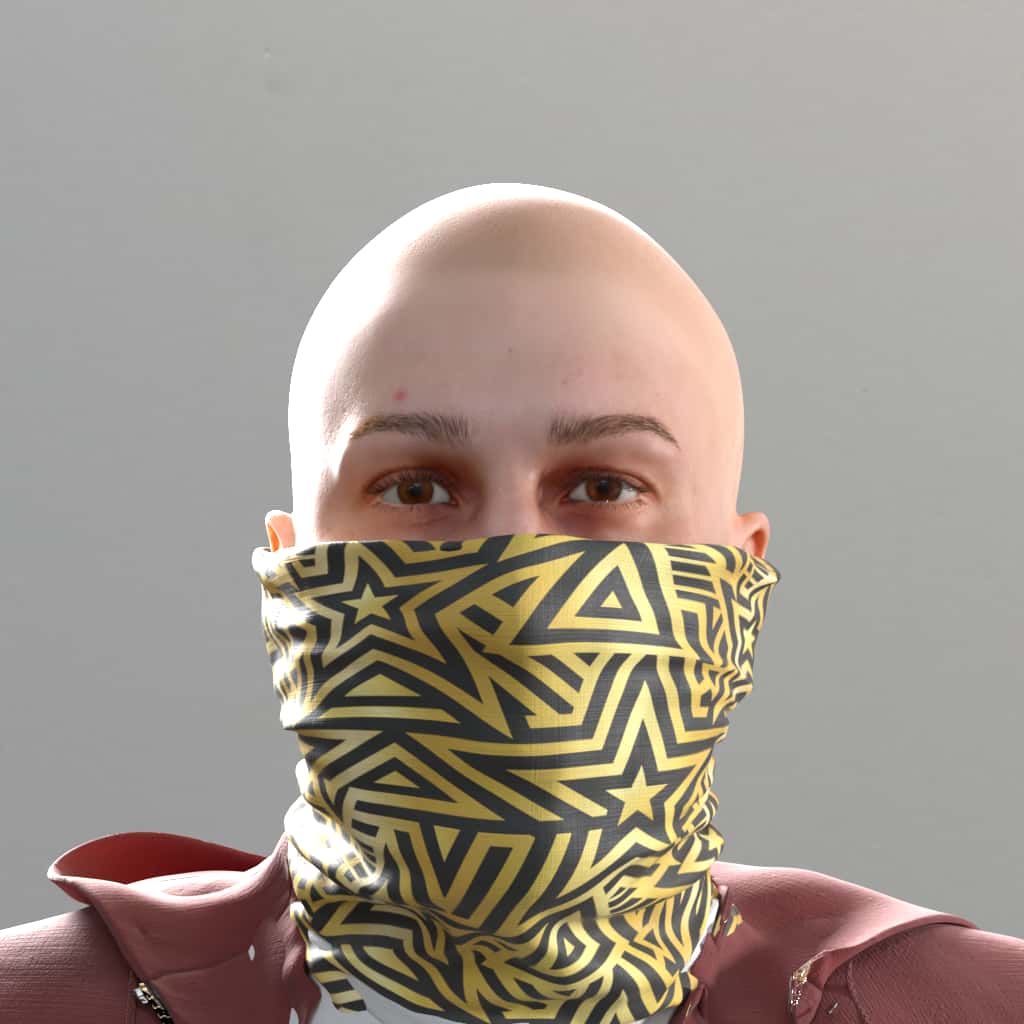

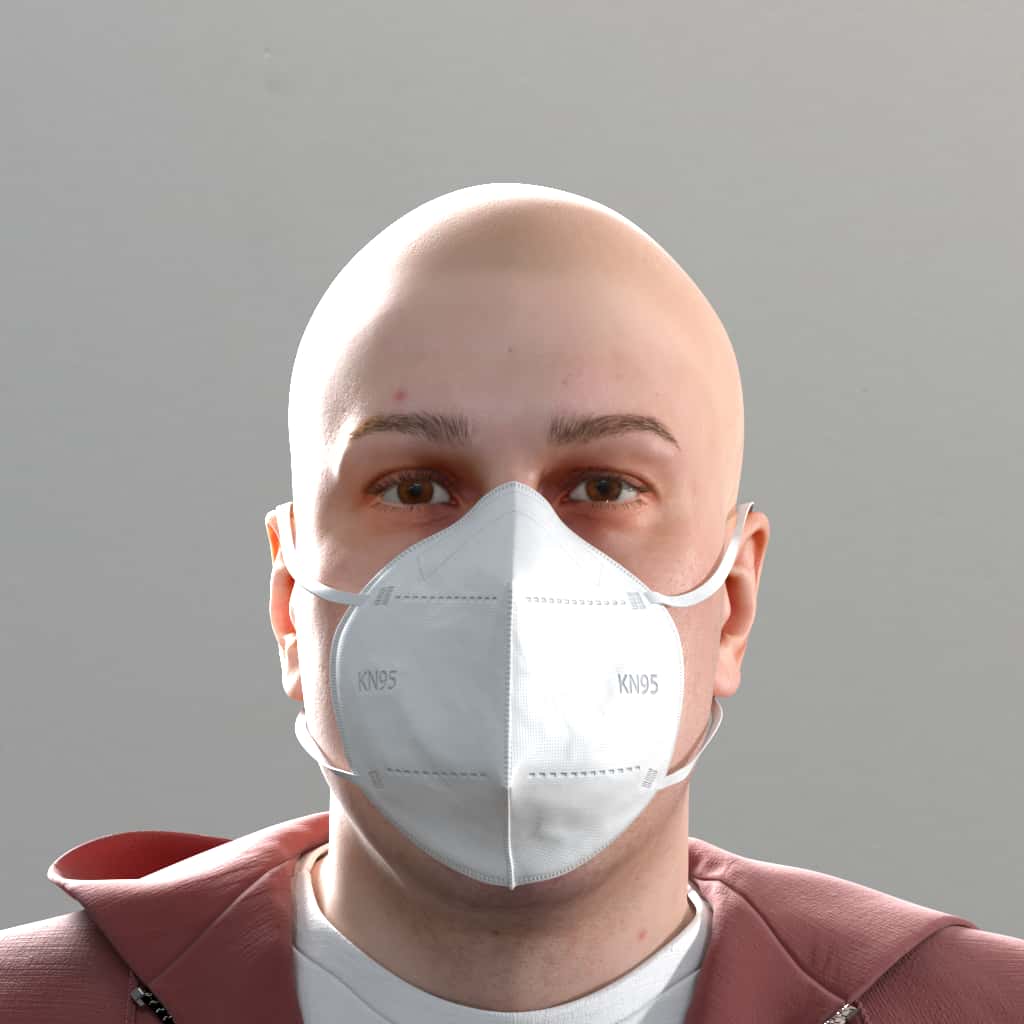

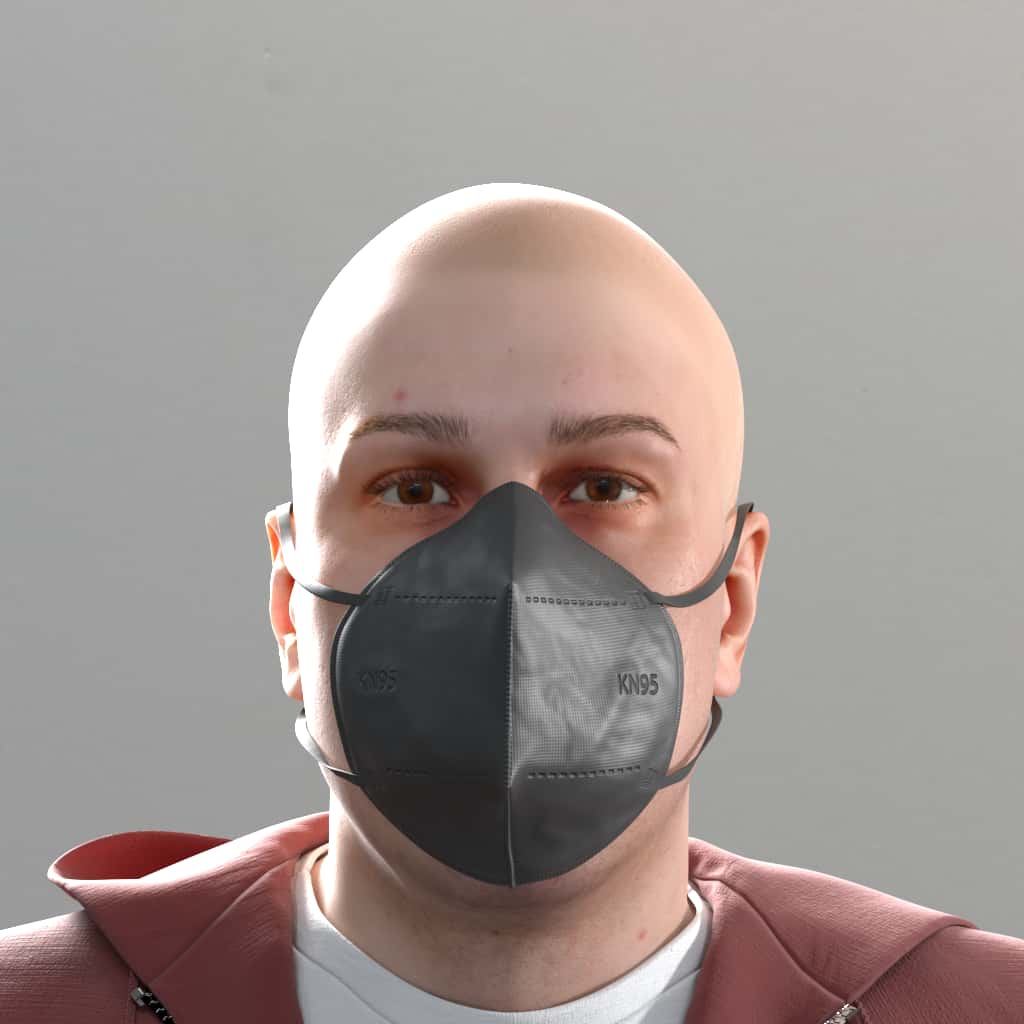

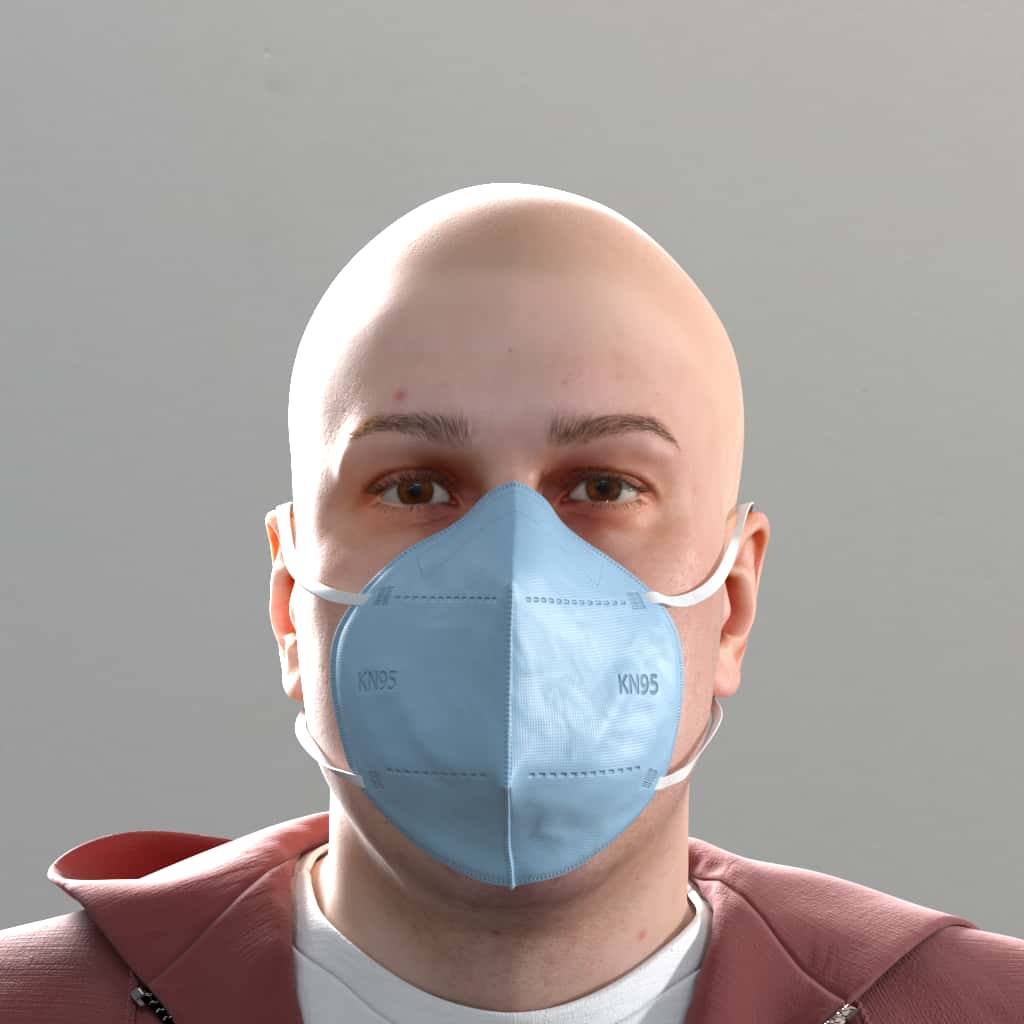

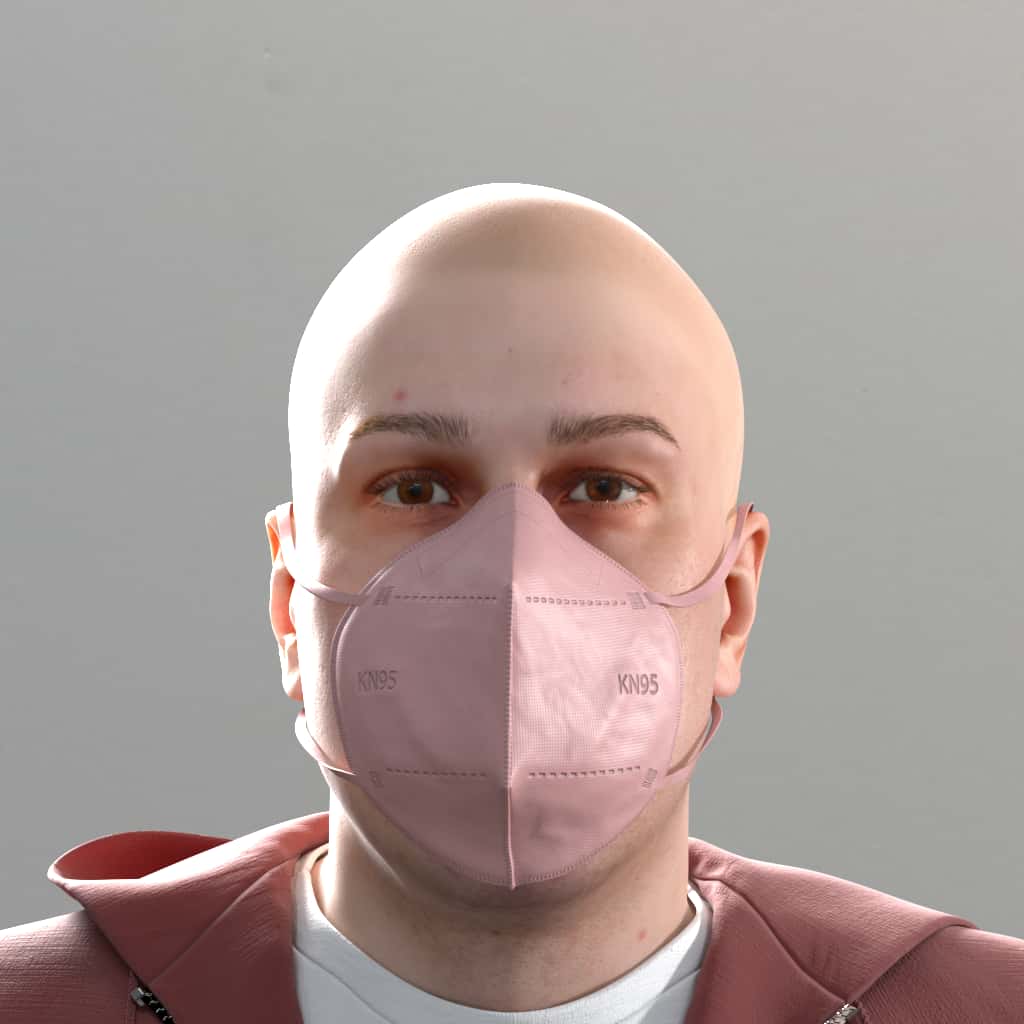

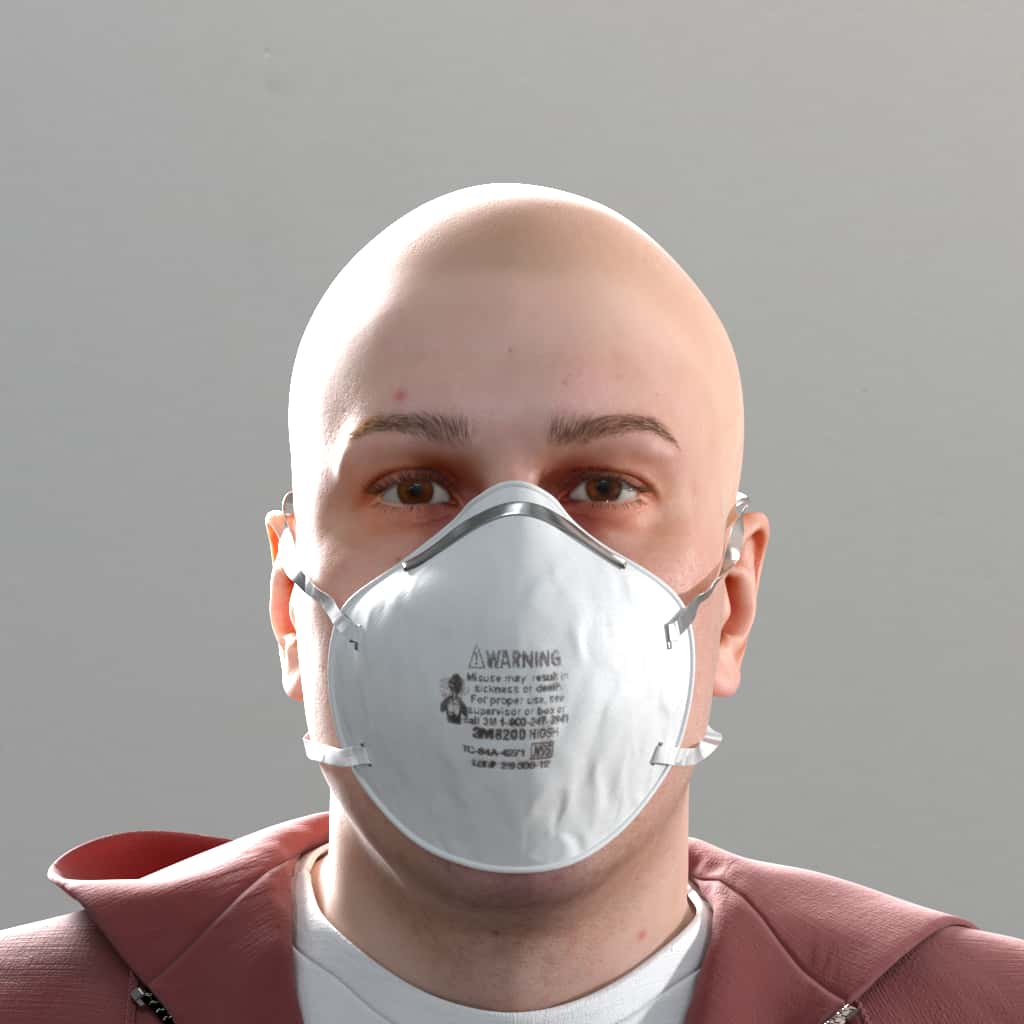

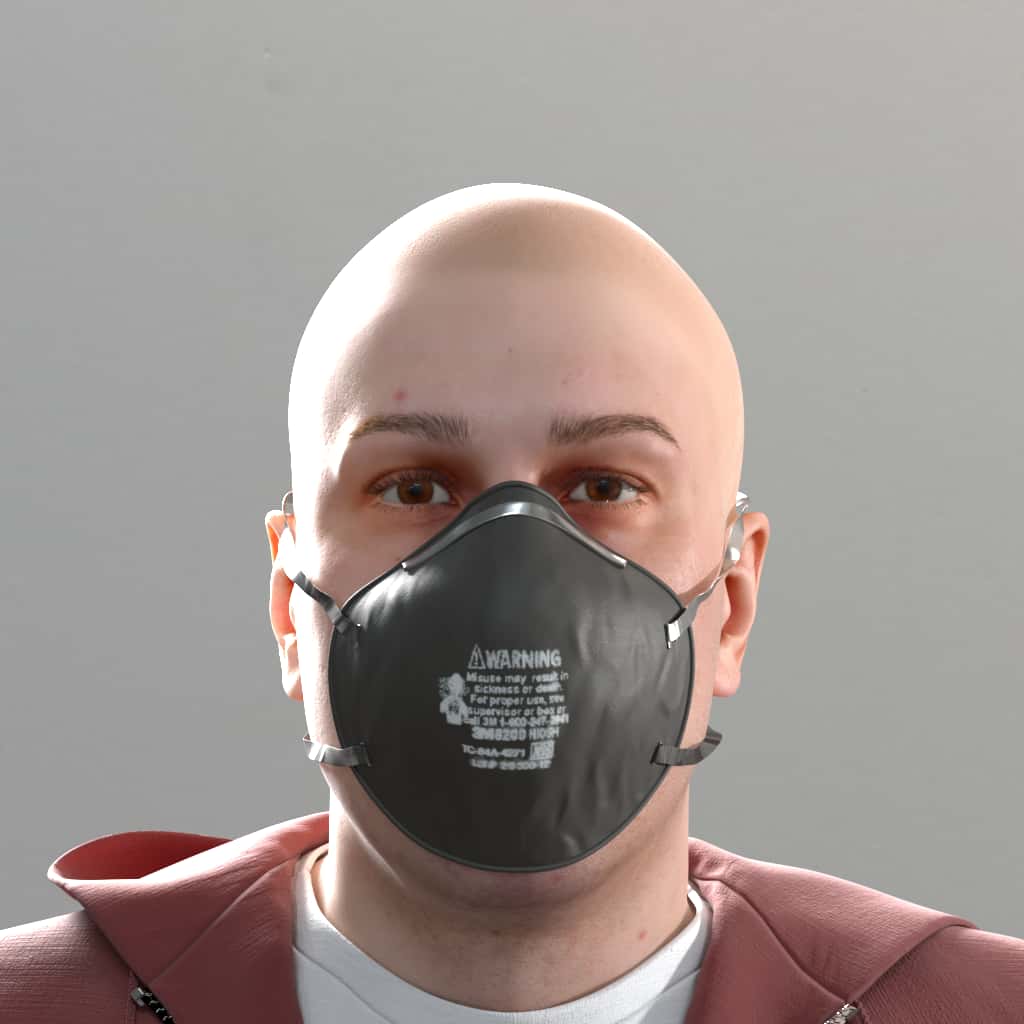

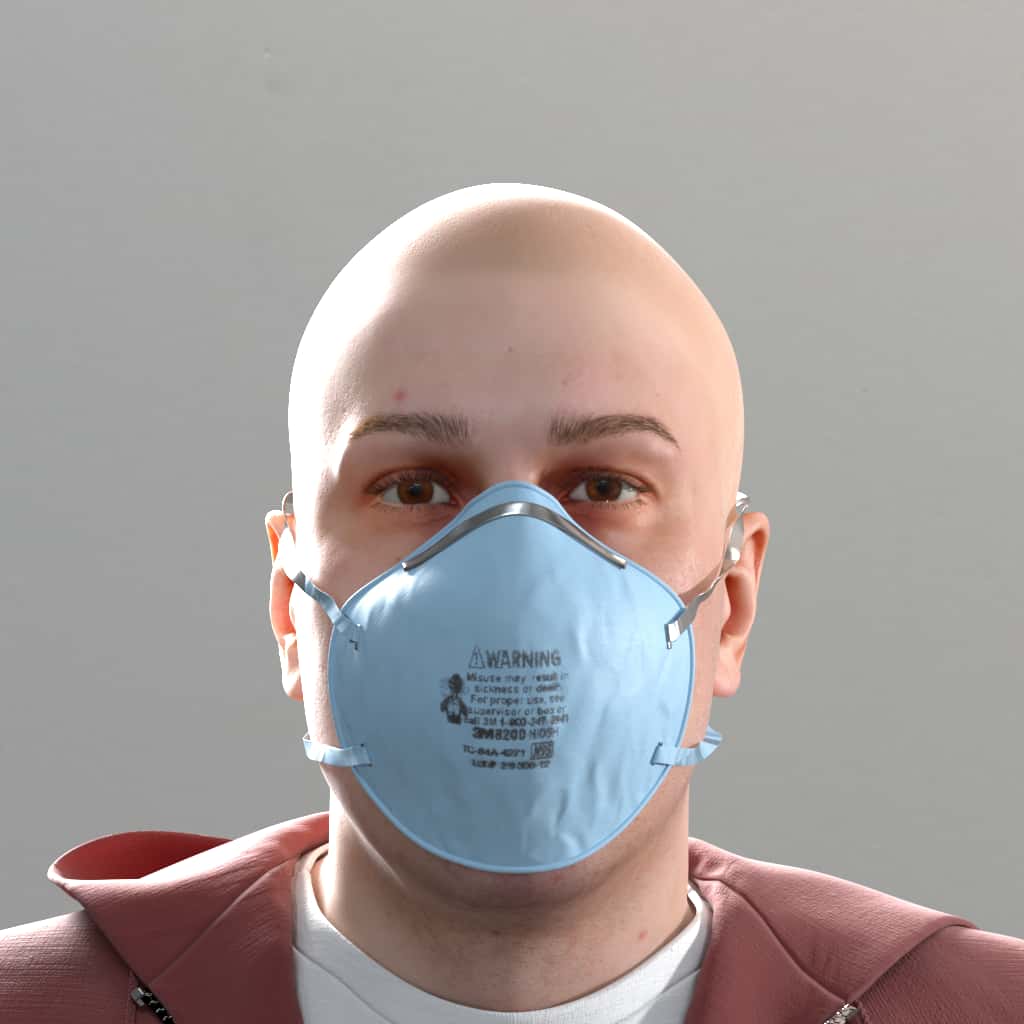

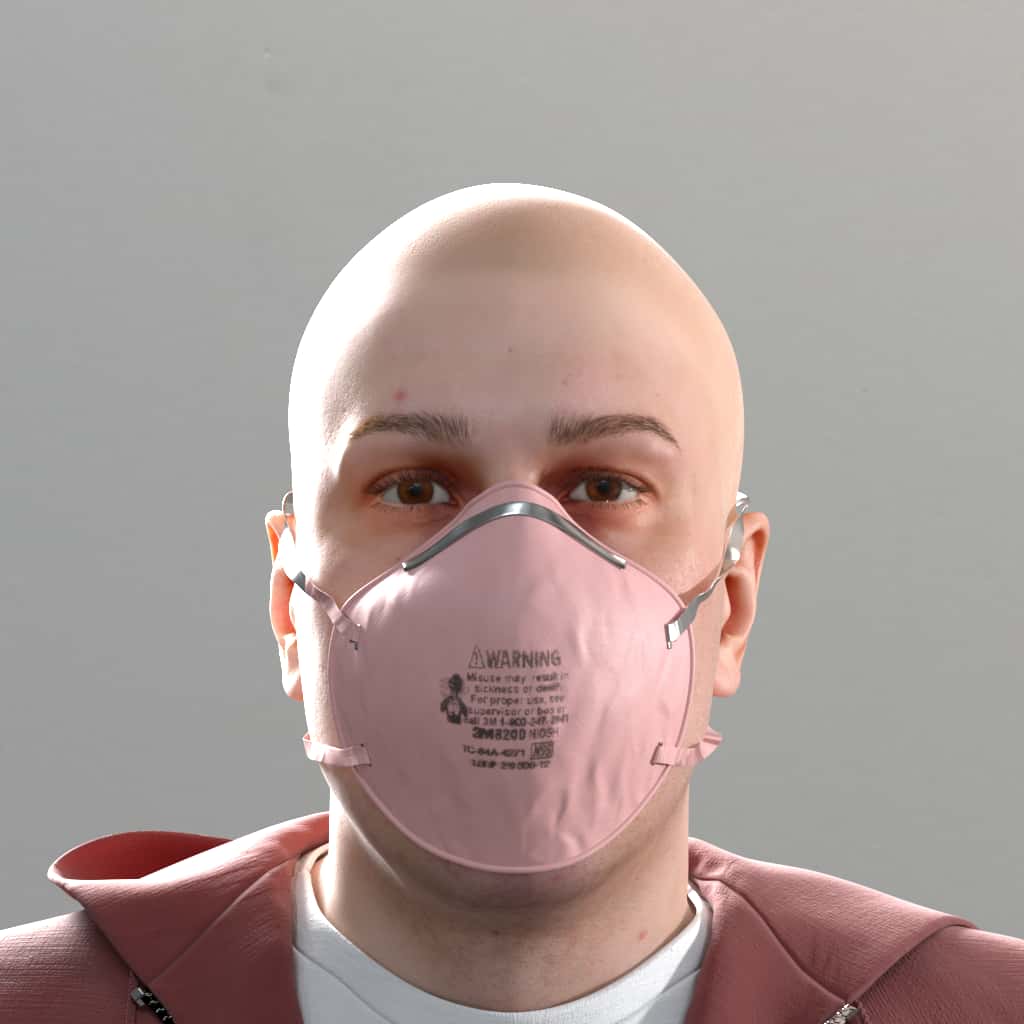

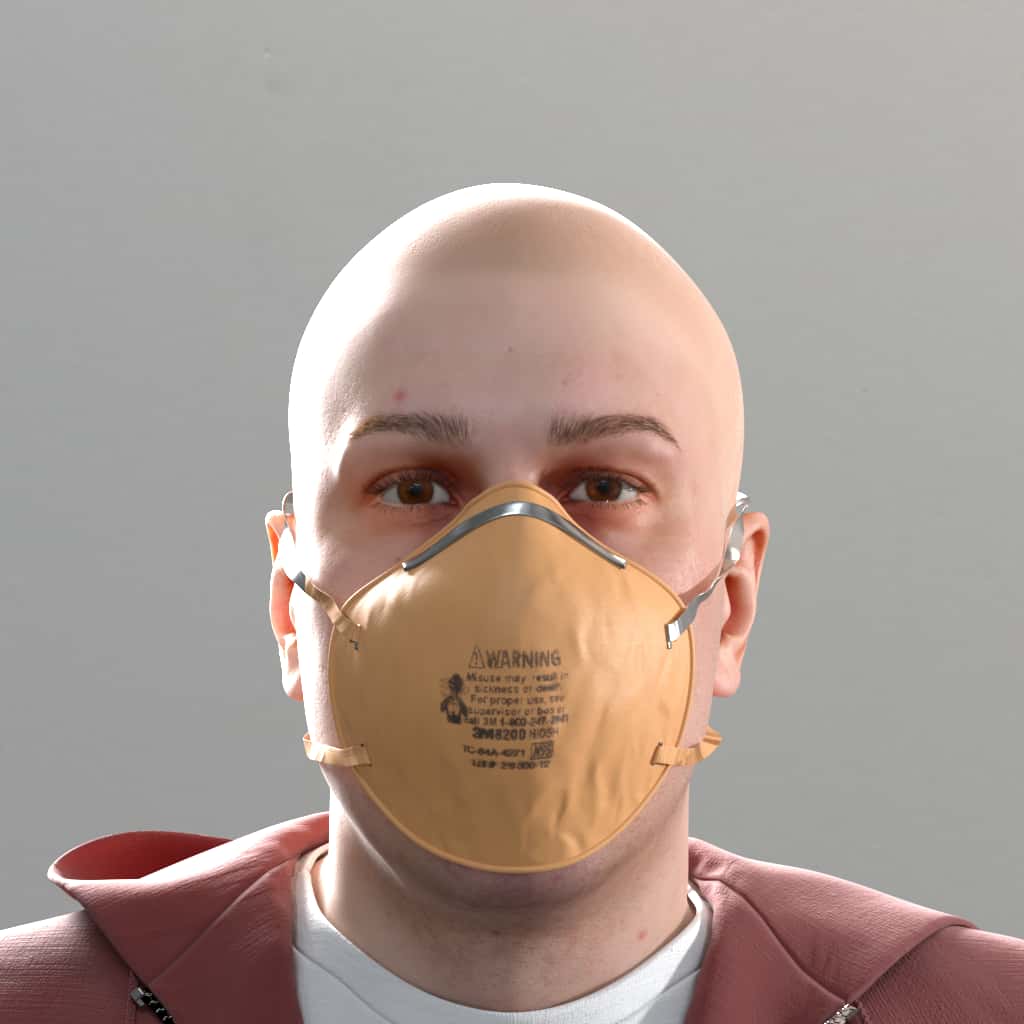

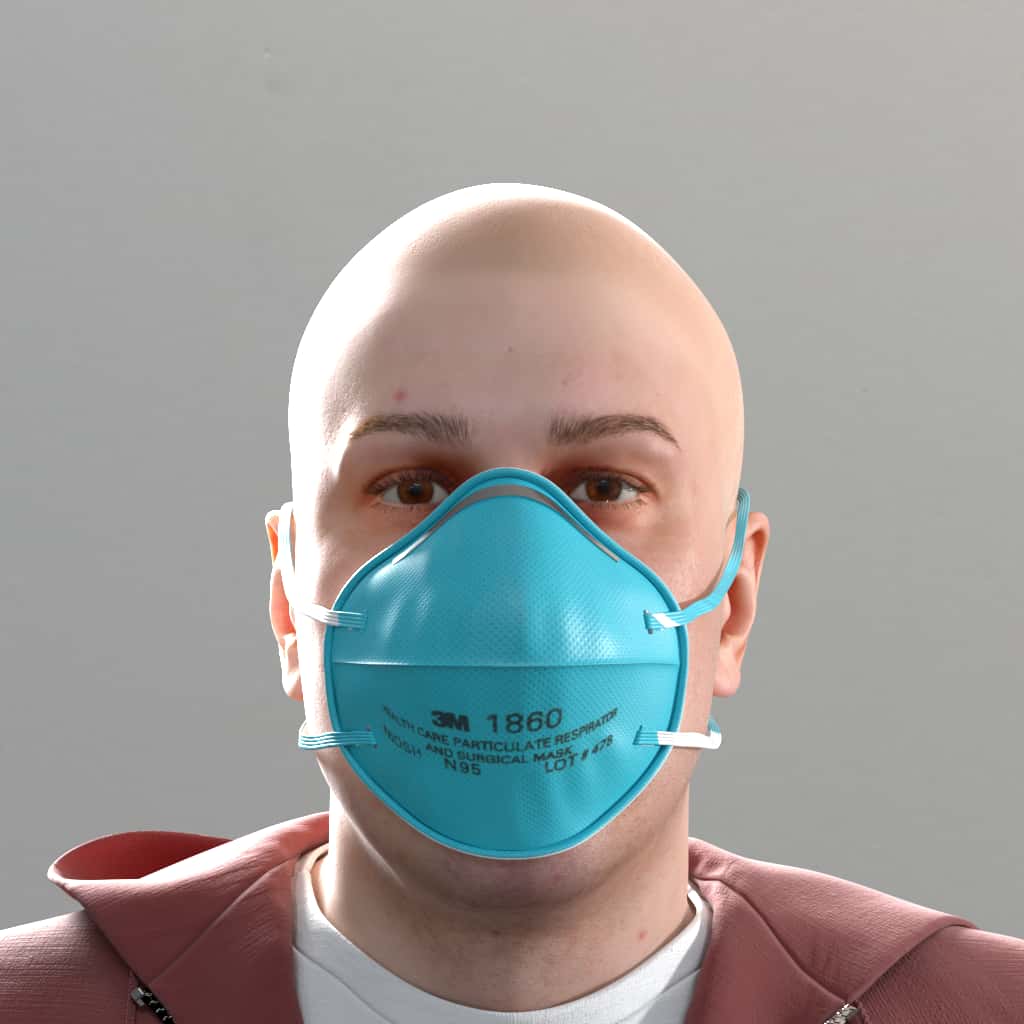

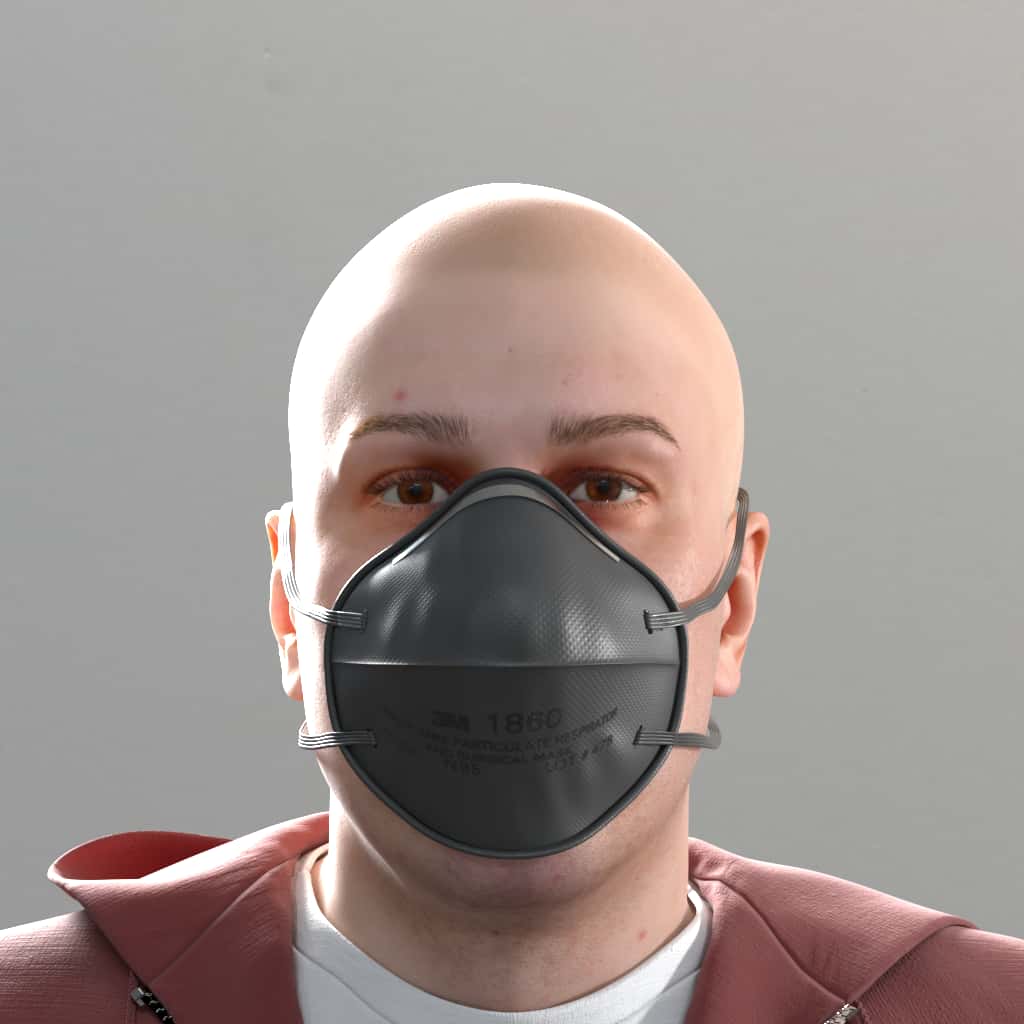

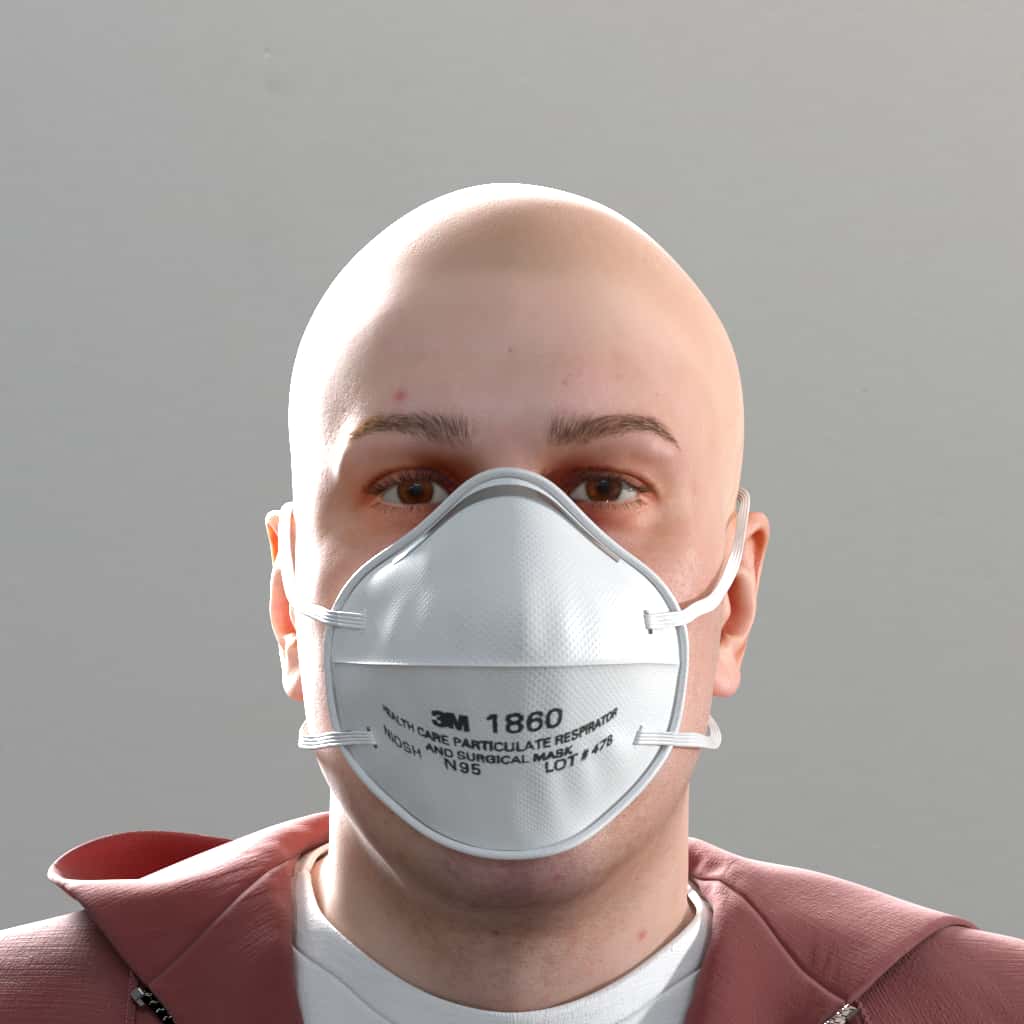

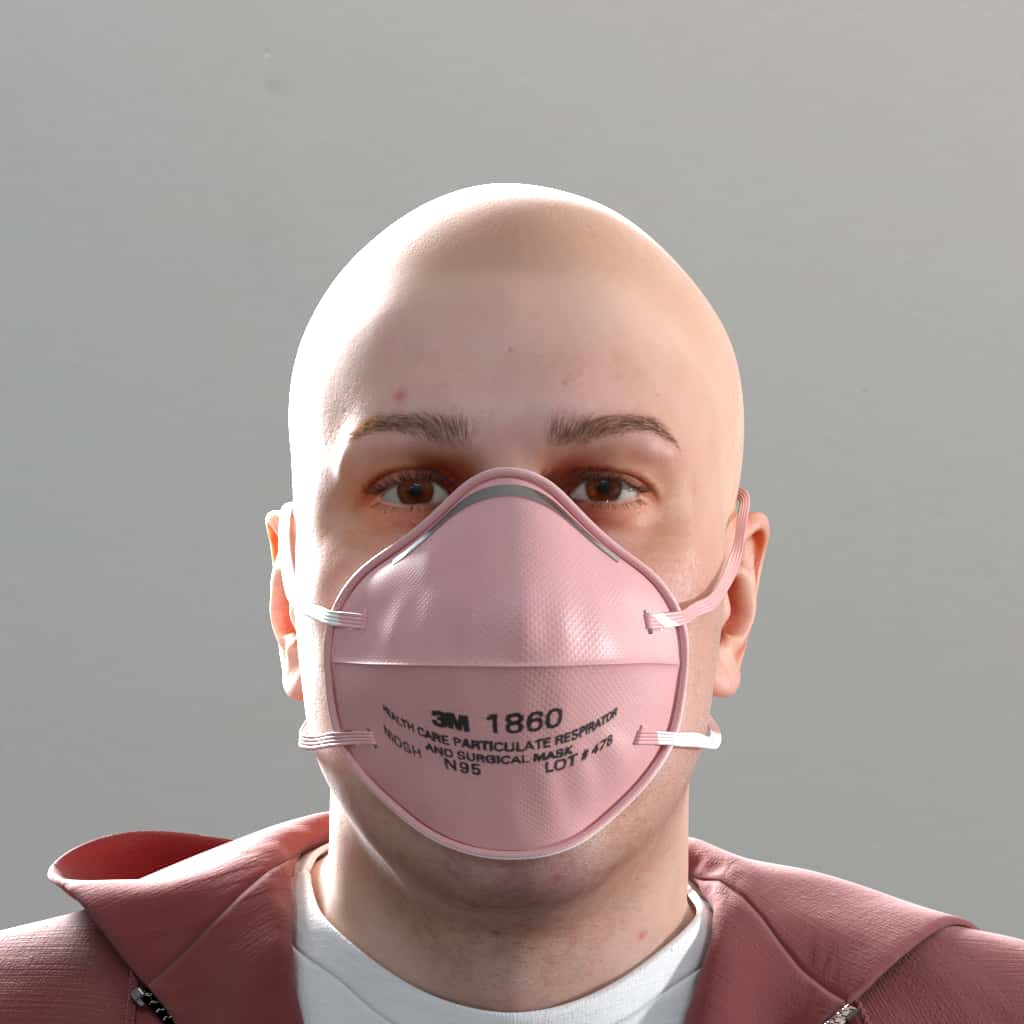

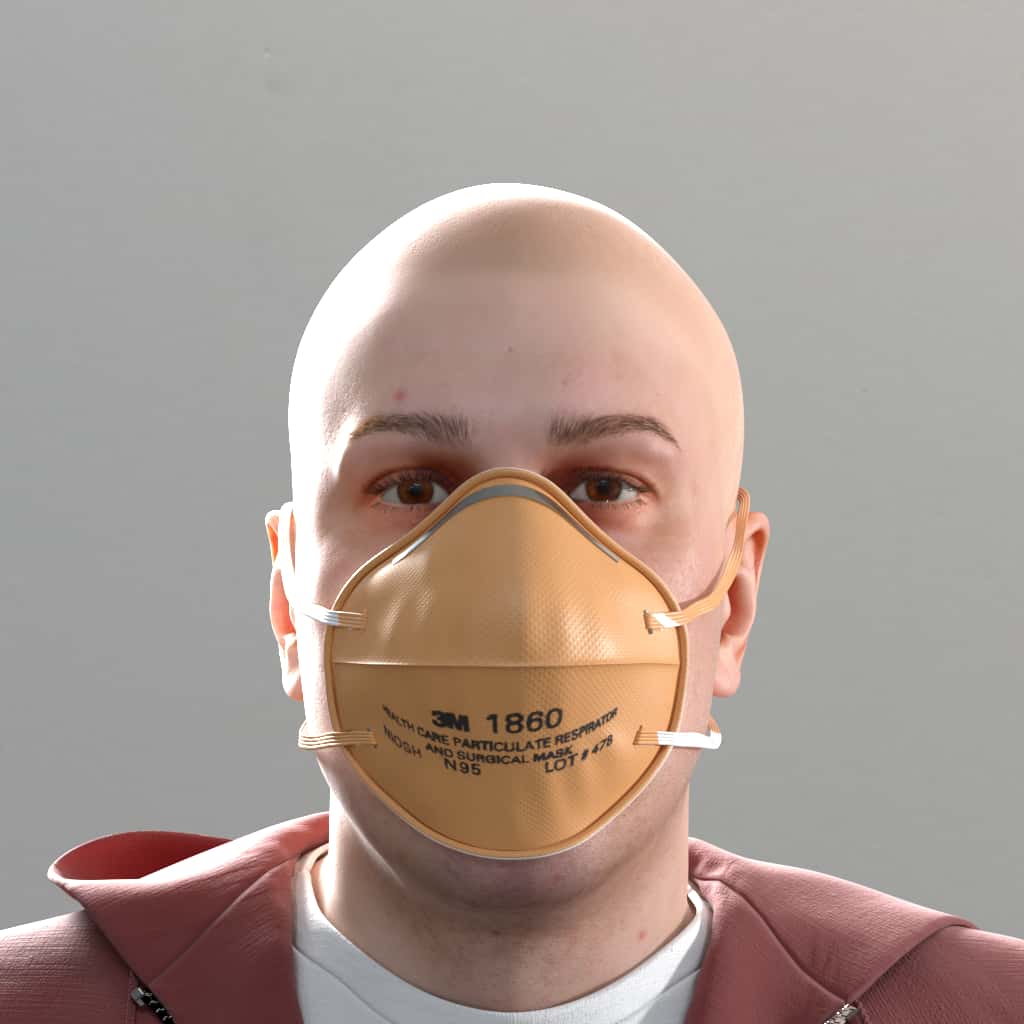

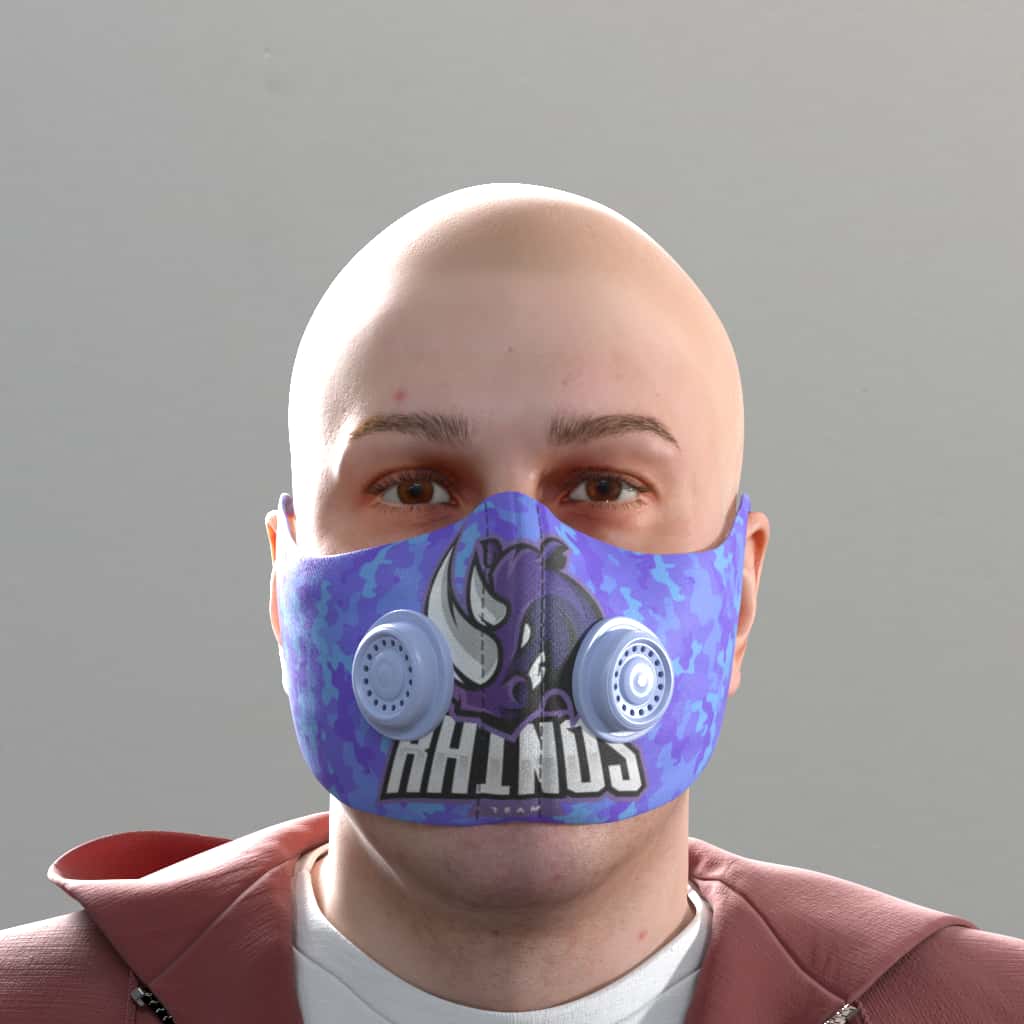

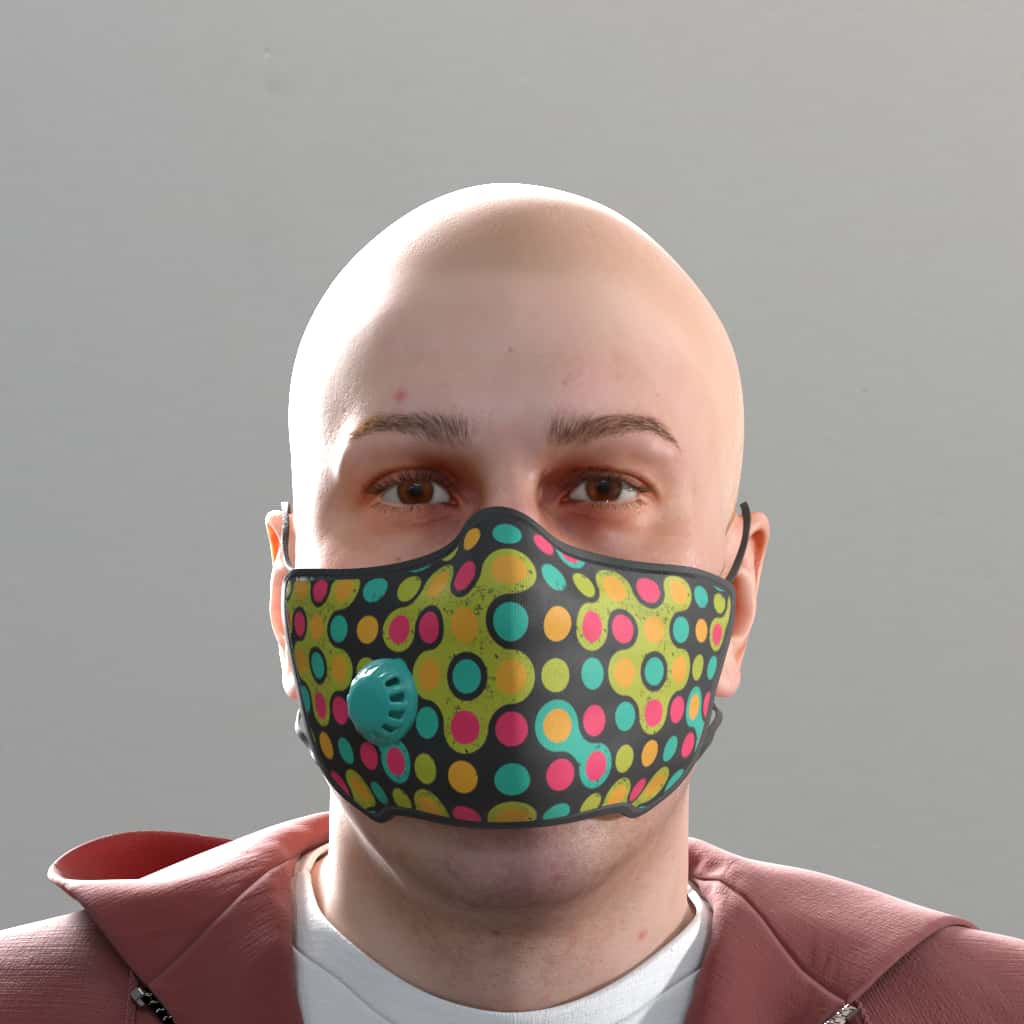

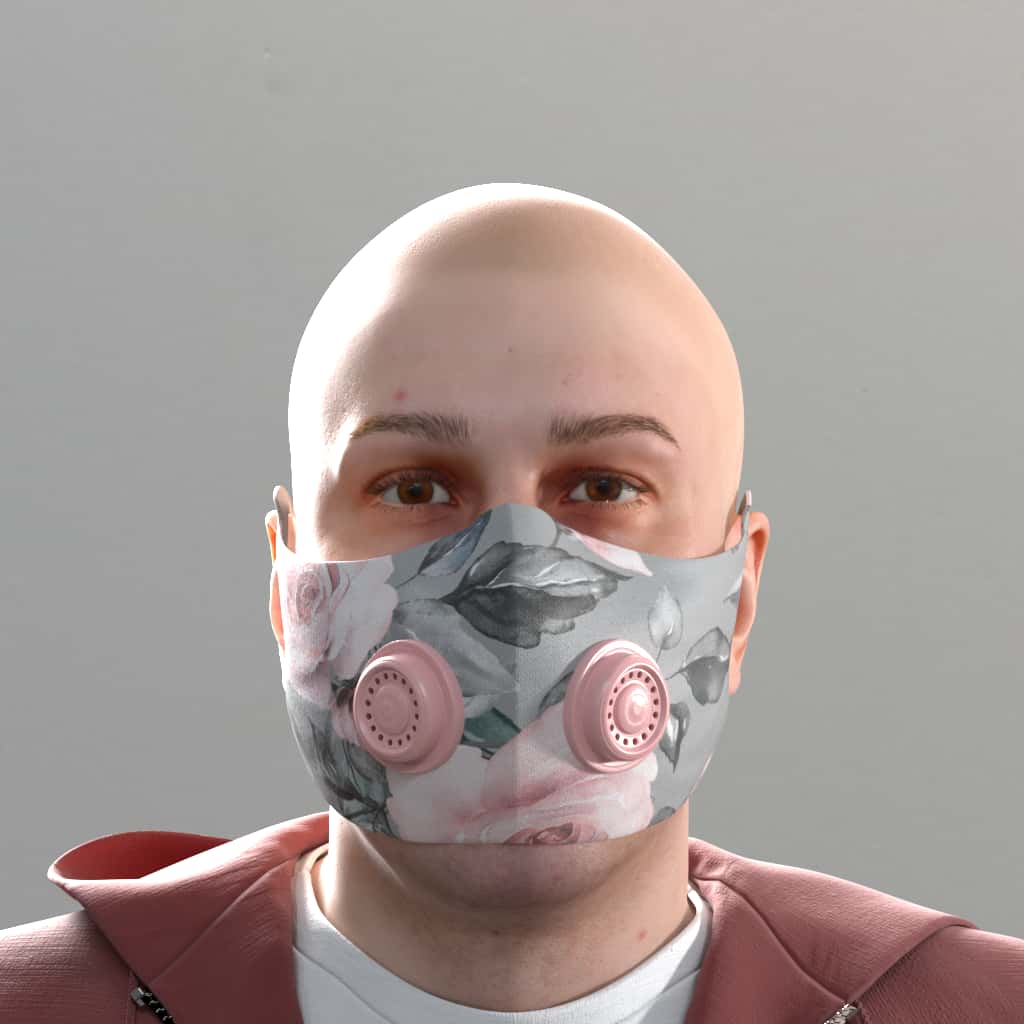

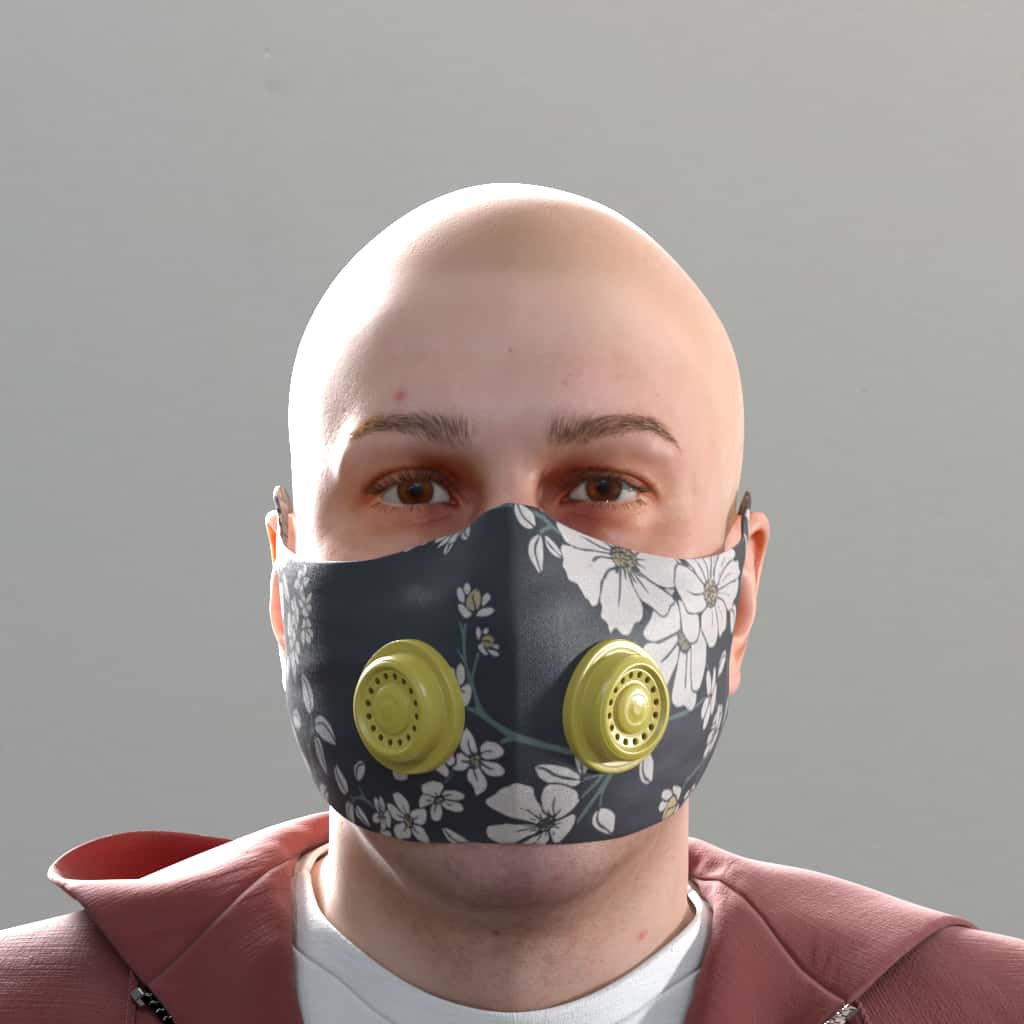

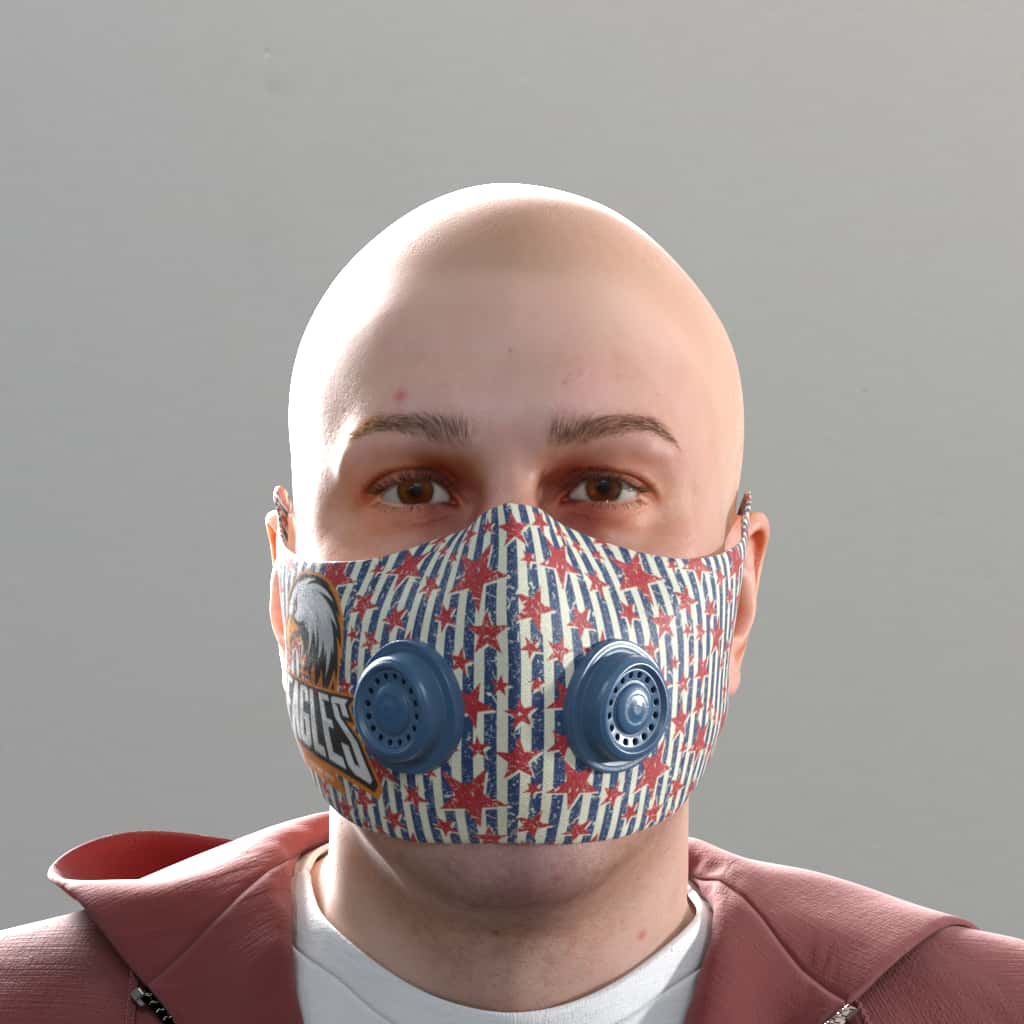

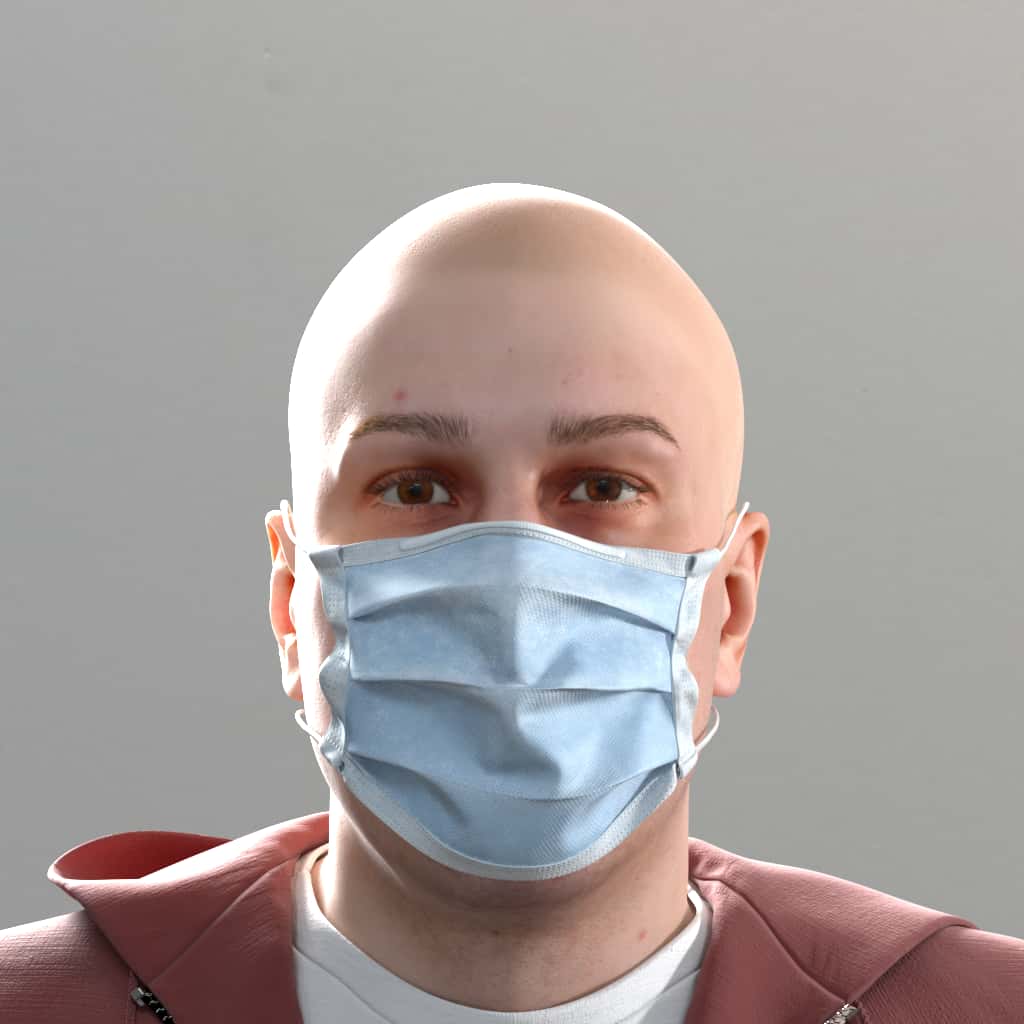

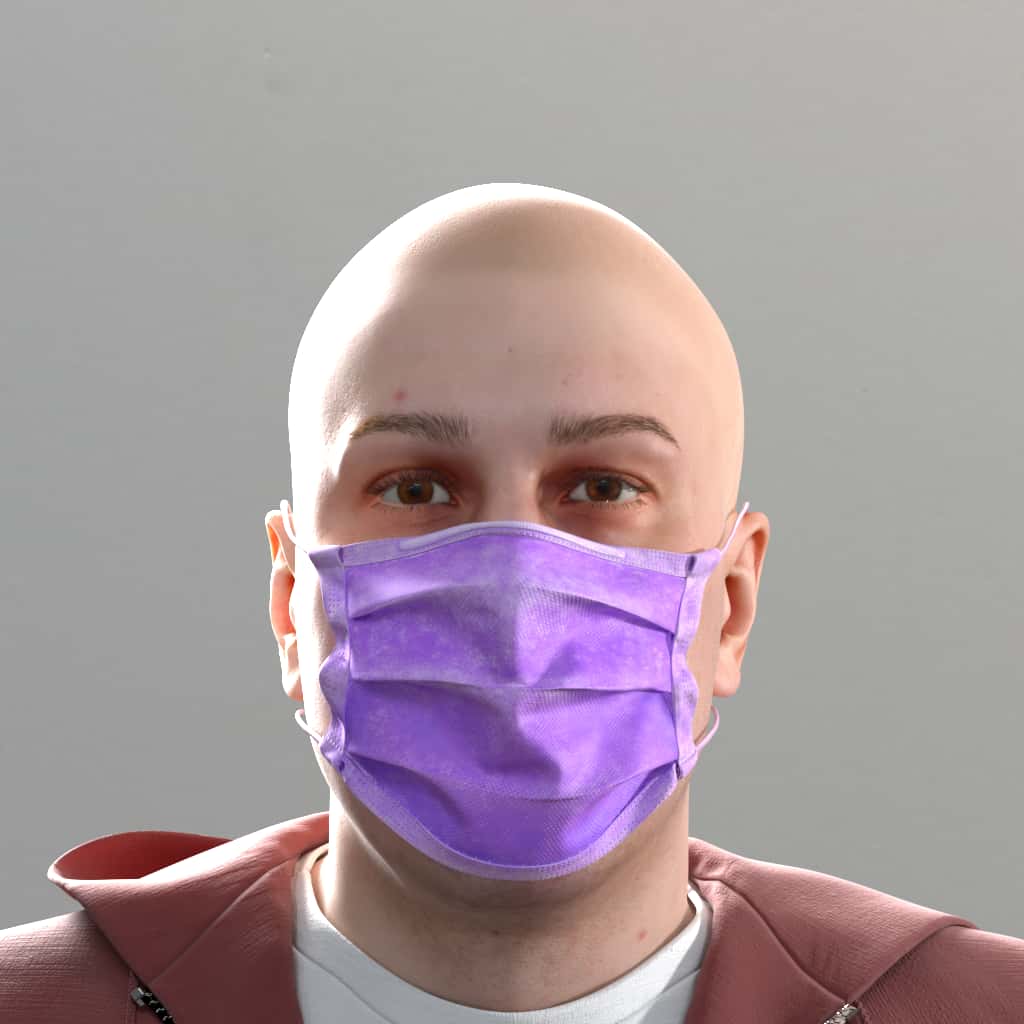

Masks

There are several styles of masks scaled to the size of the head. Simply a style name or list of them is specified. Each mask also has an off-nose version as a different style name. Style names can be found in the documentation appendix.

The position of the mask can be specified as well. It defaults to over the nose, and other options include below the chin. Valid position values can be found in the documentation appendix.

Note: At the moment, facial hair should not be specified if any mask is specified.

Note 2: The all keyword does not contain the none keyword, meaning that if all is requested for styles etc., a none or empty value will not be included.

You can also force the masks to be sex-appropriate for the identity (sex_matched_only). Like most sex-appropriate values, while this option may “feel” more appropriate, we have found that for machine learning, full variance can generalize better (use-case dependent).

Mask styles each have five variants, designated by values ranging from 0 to 4.

style:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

position:

- Default: "2"

- Note: Use ["all"] as a shorthand for every option

variant:

- Default: ["0"]

- Note: Use ["all"] as a shorthand for every option
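As a rough sketch of how the options above combine, the Python helper below builds one masks percent group and rejects variant values outside the documented 0 to 4 range. The name `mask_group` and its error handling are illustrative assumptions, not part of the Synthesis AI CLI. Style and position values are passed through unchecked because their valid values are listed only in the appendix.

```python
import json

# Five variants per style ("0" to "4"), plus the "all" shorthand.
VALID_VARIANTS = {"0", "1", "2", "3", "4", "all"}


def mask_group(styles, positions=("2",), variants=("0",), percent=100):
    """Build one entry for accessories.masks (hypothetical helper)."""
    bad = [v for v in variants if v not in VALID_VARIANTS]
    if bad:
        raise ValueError(f"unknown mask variant(s): {bad}")
    return {
        "style": list(styles),
        "position": list(positions),
        "variant": list(variants),
        "percent": percent,
    }


job = {
    "humans": [{
        "identities": {"ids": [80], "renders_per_identity": 1},
        "accessories": {"masks": [mask_group(["ClothHead"])]},
    }]
}
print(json.dumps(job, indent=2))
```

Generating the JSON from a helper like this keeps the defaults in one place when many mask groups are needed.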

JSON for just selecting the floral pattern:

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"accessories": {

"masks": [{

"style": ["ClothHead"],

"position": ["2"],

"variant": ["0"],

"percent": 100

}]

}

}

]

}

Visual examples of mask styles in position `2` (see also: full input JSON to generate them):

Visual examples of the `N95` style in different positions (see also: full input JSON to generate them):

Visual examples of the mask variants for all styles:

| Mask Name | Variant 0 | Variant 1 | Variant 2 | Variant 3 | Variant 4 |
|---|---|---|---|---|---|
| ClothEar | | | | | |
| ClothHead | | | | | |
| ConeHole | | | | | |
| Duckbill | | | | | |
| DuckbillFlat | | | | | |
| DustEar | | | | | |
| DustHead | | | | | |
| GaitorNeck | | | | | |
| K95 | | | | | |
| N95 | | | | | |
| N95Filter | | | | | |
| N95Surgical | | | | | |
| PolyesterD | | | | | |
| PolyesterFittedF | | | | | |
| PolyesterFittedM | | | | | |
| PolyesterS | | | | | |
| Respirator | | | | | |
| Surgical | | | | | |

Headphones

There are several styles of headphones, each scaled to the size of the head. Specify a single style name or a list of them. Style names can be found in the documentation appendix.

Note: At the moment, hats should not be specified if any headphones are specified.

style:

- Default: ["none"]

- Note: Use ["all"] as a shorthand for every option

Specifying a single headphone style to use:

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"accessories": {

"headphones": [{

"style": ["0006_headsetMic"],

"percent": 100

}]

}

}

]

}

Visual examples of headphones styles (see also: full input JSON to generate them):

Bodies

Bodies appear by default. If only a head and a neck should appear, the enabled property must be set to false.

The API provides bodies of varying shapes and sizes, and modifies clothing to fit over them. By default, height and fat_content are set automatically and consistently for each ID: the settings are the same for a given ID from one image to the next and from one job to the next. They are roughly mapped to the height and BMI of the identity.

For machine learning and other uses requiring randomization of body shape and size, manually set height and associated fat_content to override the defaults. These may result in unusual proportions of head size relative to body size — testing to find a proper balance is recommended.

enabled:

- Default: true

height:

- Default: Per ID

fat_content:

- Default: Per ID

percent:

- Default: 100

You can check the documentation appendix for more info on valid height, valid fat_content, height logic, and BMI logic.

Body enabled and not matched to the identity, requesting the full distributions with all:

{

"humans": [

{

"identities": {"ids": [80],"renders_per_identity": 1},

"body": [{

"height": ["all"],

"fat_content": ["all"],

"percent": 100

}],

"camera_and_light_rigs": [{

"cameras": [{

"relative_location": {

"y": {"type": "list", "values": [-0.5]},

"z": {"type": "list", "values": [6]}

}

}]

}]

}

]

}

Visual examples of varying body fat content (see also: full input JSON to generate them):

| | Lowest | Lower | Median | Higher | Highest |
|---|---|---|---|---|---|
| Weight | | | | | |

Visual examples of varying body heights. Please note that the current camera location logic centers from the head, so changing heights is not immediately noticeable against some backgrounds, but can be seen here in the length of arm remaining in the image (see also: full input JSON to generate them):

| | Shortest | Shorter | Short | Median | Tall | Taller | Tallest |
|---|---|---|---|---|---|---|---|
| Height | | | | | | | |

Clothing 2.0

There are many clothing outfits to choose from. Outfits are normally filtered to gender-normalized choices (for example: no dresses on males, no suit coats on females), but that filter can be dropped by setting sex_matched_only to false. By default, a gender-neutral outfit of jeans, t-shirt, and hoodie is applied if nothing is specified. A new feature, single_style_per_id, overrides a distribution by choosing a single outfit to apply to all of the renders for each selected ID.

There are over 30 available types of clothing outfits, which can be found in our documentation appendix.

NOTE: This feature is in beta and the JSON structure is subject to change.

outfit:

- Default: If no outfit is specified, then each ID will get a predetermined outfit

single_style_per_id:

- Default: false

sex_matched_only:

- Default: true

NOTE: The value of body must be set to enabled for clothing to appear.

An example to start with:

{

"humans": [

{

"identities": {"ids": [80,303],"renders_per_identity": 1},

"clothing": [{

"outfit": ["all"],

"percent": 100

}],

"gesture": [{

"name": ["wave"],

"percent": 100

}],

"camera_and_light_rigs": [{

"type": "manual",

"cameras": [{

"specifications": {

"resolution_w": 1024,

"resolution_h": 1024

},

"relative_location": {

"y": {"type": "list", "values": [1.2]},

"z": {"type": "list", "values": [5.5]}

}

}]

}]

}

]

}

Visual examples of clothing types:

Gesture Animations [BETA]

The gesture section defines body motion sequences and static poses. A gesture is identified by position_seed. By default, keyframe_only is true, so the selected gesture is created as a static pose: a single render with a single frame. When keyframe_only is false, each render is split into multiple frames that can be played back as an animation. Currently, the frames are designed to be played back at 30 fps.

Gestures can be listed as "all" or chosen from the list of gestures found here. The default body gesture is "default", which is a neutral standing position with arms out to the sides lifted away from the body slightly. A video of each gesture can be found here.

name:

- Default: "default"

keyframe_only:

- Default: true

position_seed:

- Default: 0.0

- Min: 0.0

- Max: any positive number
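The following Python sketch illustrates two points from this section: checking that the percent groups of a gesture distribution add up to 100 (the rule stated in the overview), and converting a frame count into playback time at the stated 30 fps. The helper names `check_percent` and `playback_seconds` are hypothetical, and the frame count is an arbitrary illustration, not a value taken from the service.

```python
FPS = 30  # playback rate stated in this section


def check_percent(groups):
    """Return the summed percent of a list of percent groups; raise if it is not 100."""
    total = sum(group["percent"] for group in groups)
    if total != 100:
        raise ValueError(f"percent groups sum to {total}, expected 100")
    return total


def playback_seconds(frame_count, fps=FPS):
    """Length of an animated gesture when played back at the given rate."""
    return frame_count / fps


gesture = [
    {"name": ["all"], "keyframe_only": True, "percent": 50},
    {"name": ["look_right"], "keyframe_only": False, "percent": 50},
]
print(check_percent(gesture))  # 100
print(playback_seconds(90))    # 3.0
```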

Example with a distribution over all gestures: half of the renders are single-frame renders chosen from the entire distribution, and the other half return look_right as an animated gesture:

{

"humans": [

{

"identities": {

"ids": [80],

"renders_per_identity": 1

},

"gesture": [{

"name": ["all"],

"keyframe_only": true,

"position_seed": {"type": "list", "values": [1]},

"percent": 50

},

{

"name": ["look_right"],

"keyframe_only": false,

"position_seed": {"type": "list", "values": [3]},

"percent": 50

}],

"camera_and_light_rigs": [{

"cameras": [{

"relative_location": {

"y": {"type": "list", "values": [-0.5]},

"z": {"type": "list", "values": [6]}

}

}]

}]

}

]

}

Visual examples of available body gestures in their keyframe:

Camera and Light Rigs

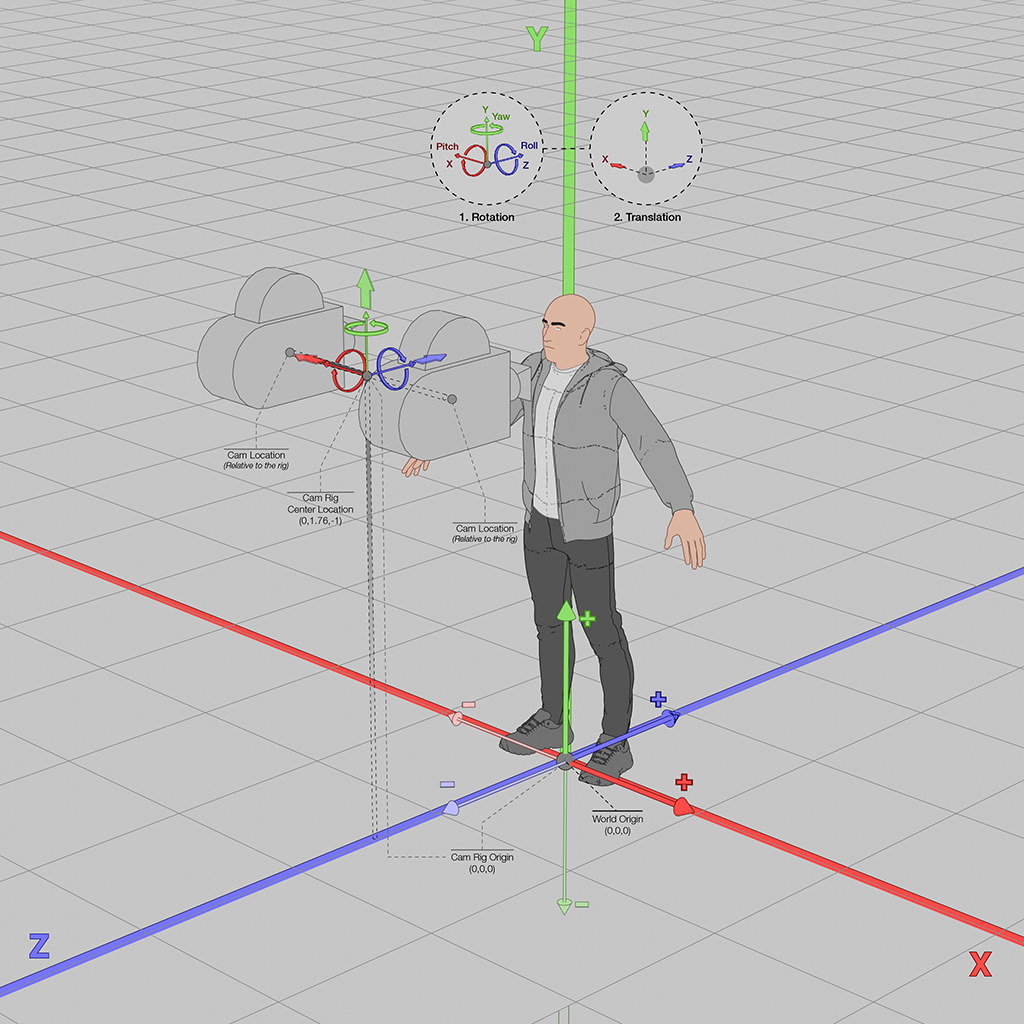

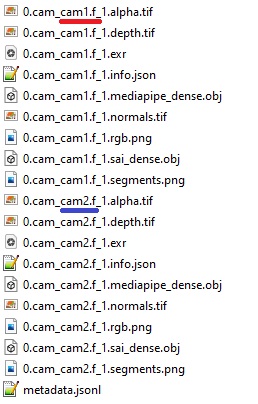

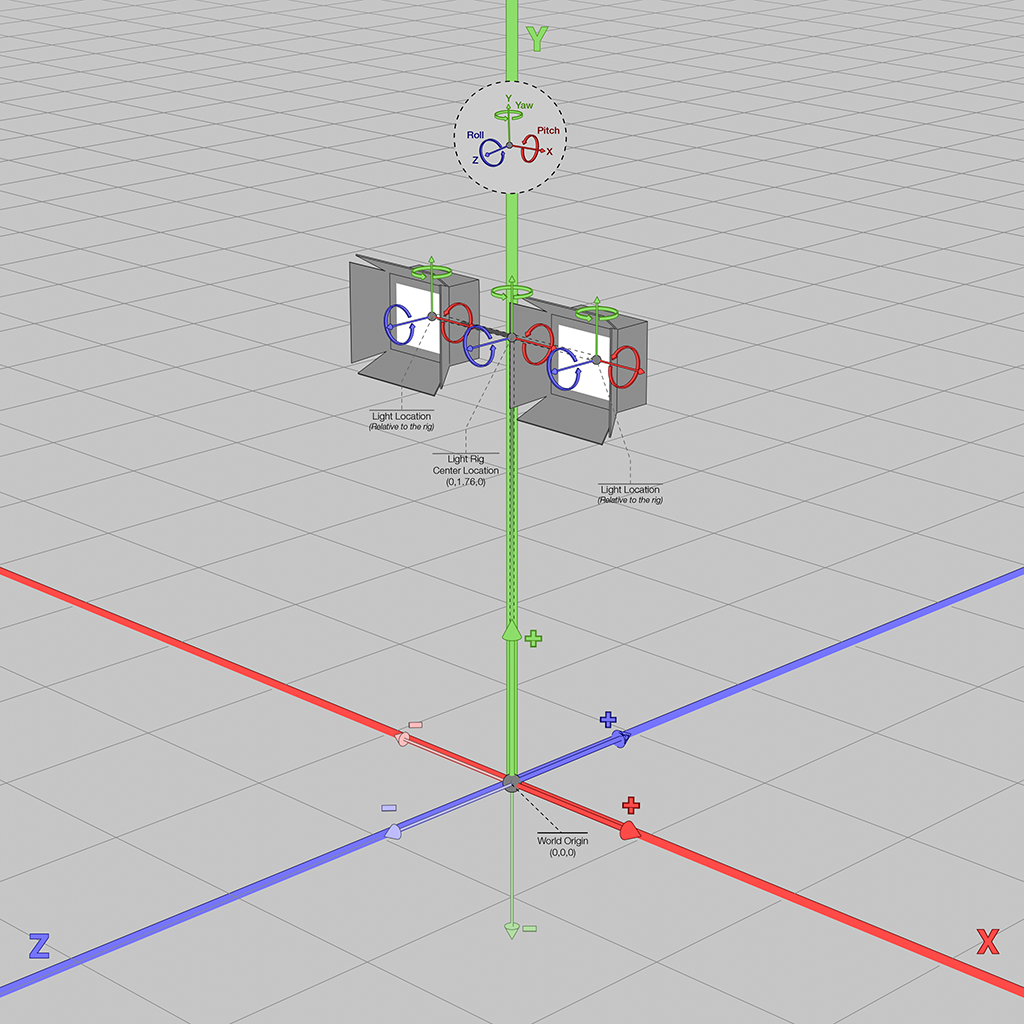

Overview

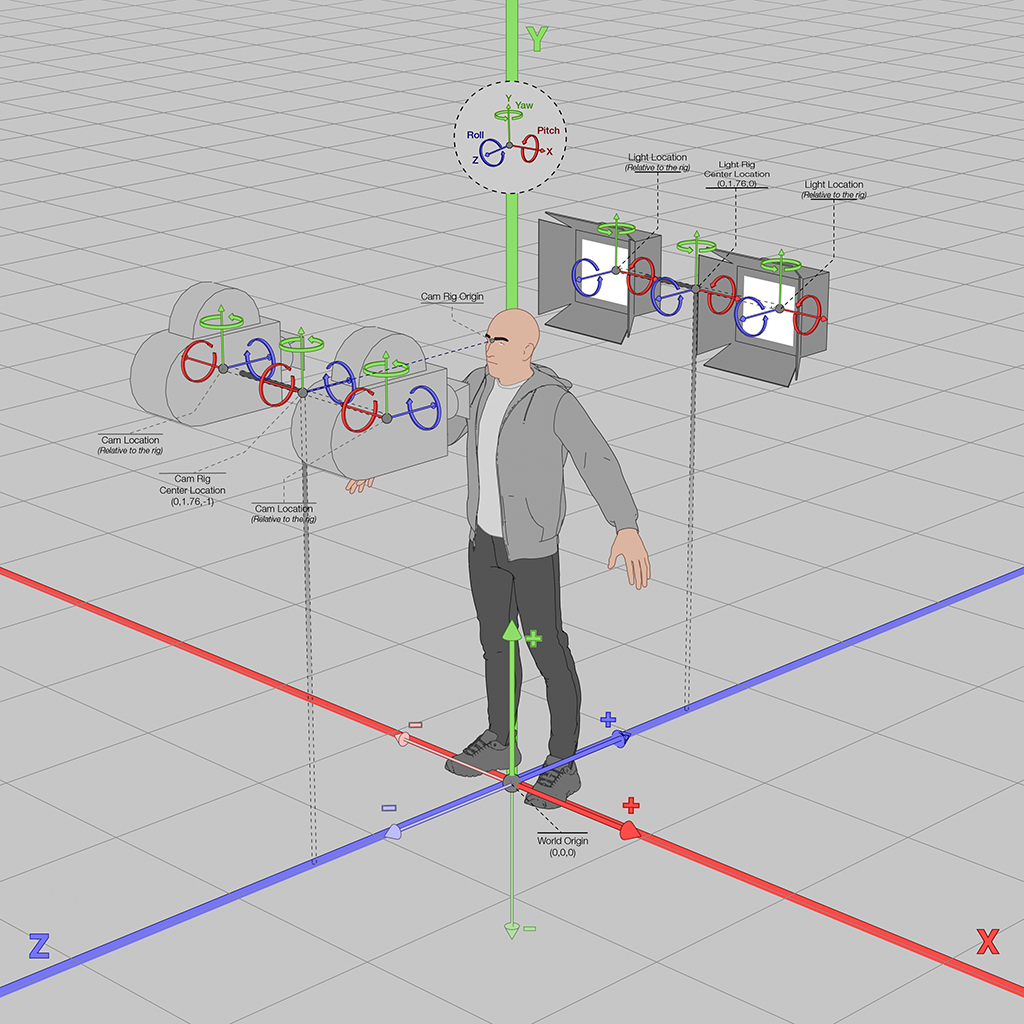

Placing cameras and lights into a virtual scene is based on creating one or more virtual rigs. A rig defines a local space in which the lights and cameras can be placed by setting an initial origin point and orientation towards the subject. It is then moved with a set of positional transforms and rotations along all three axes. How the rig can be moved from or around its origin point is determined by its type.

The top level camera_and_light_rigs is an array and not a distribution group. The array allows for multiple rigs to be placed in the scene. At a minimum, the first rig (0th index of the array) must contain a camera. The additional indexes in the array are useful to place additional lights and cameras in the scene with their own rig coordinates and typed behavior.

Rigs can hold up to five cameras, each able to have custom render settings, specification, and location relative to the rig. Rigs can hold up to three lights each, which are also placed by their relative location to the rig and can be controlled by a number of settings.
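The limits above can be checked before a job is submitted. The sketch below is a minimal, hypothetical validator (`check_rigs` is not part of any Synthesis AI tooling). Several snippets in this document omit `cameras` entirely, so the sketch only flags the first rig when it defines lights but no cameras; how the service treats a rig with no `cameras` key is an assumption to verify.

```python
MAX_CAMERAS_PER_RIG = 5
MAX_LIGHTS_PER_RIG = 3


def check_rigs(rigs):
    """Return a list of problems found in a camera_and_light_rigs array."""
    problems = []
    first = rigs[0] if rigs else {}
    # Assumption: a first rig that only lists lights cannot satisfy the camera rule.
    if first.get("lights") and not first.get("cameras"):
        problems.append("rig 0 defines lights but no camera")
    for index, rig in enumerate(rigs):
        cameras = len(rig.get("cameras", []))
        lights = len(rig.get("lights", []))
        if cameras > MAX_CAMERAS_PER_RIG:
            problems.append(f"rig {index} has {cameras} cameras (max {MAX_CAMERAS_PER_RIG})")
        if lights > MAX_LIGHTS_PER_RIG:
            problems.append(f"rig {index} has {lights} lights (max {MAX_LIGHTS_PER_RIG})")
    return problems


rigs = [
    {"type": "head_orbit", "cameras": [{"name": "cam1"}, {"name": "cam2"}]},
    {"type": "head_orbit", "lights": [{"type": "rect"}, {"type": "rect"}]},
]
print(check_rigs(rigs))  # []
```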

Example corresponding to diagram:

{

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 1},

"camera_and_light_rigs": [

{

"type": "head_orbit",

"location": {"z": {"type": "list", "values": [5]}},

"cameras": [{

"name": "cam1",

"relative_location": {

"x": {"type": "list", "values": [-0.5]}

}

}, {

"name": "cam2",

"relative_location": {

"x": {"type": "list", "values": [0.5]}

}

}]

},

{

"type": "head_orbit",

"location": {

"z": {"type": "list", "values": [5]},

"yaw": {"type": "list", "values": [180]}

},

"lights": [

{

"type": "rect",

"intensity": {"type": "list", "values": [2000]},

"size_meters": {"type": "list", "values": [0.6]},

"relative_location": {

"x": {"type": "list", "values": [-0.5]}

},

"color": {

"red": {"type": "list", "values": [255]},

"green": {"type": "list", "values": [255]},

"blue": {"type": "list", "values": [255]}

}

},

{

"type": "rect",

"intensity": {"type": "list", "values": [2000]},

"size_meters": {"type": "list", "values": [0.6]},

"relative_location": {

"x": {"type": "list", "values": [0.5]}

},

"color": {

"red": {"type": "list", "values": [255]},

"green": {"type": "list", "values": [255]},

"blue": {"type": "list", "values": [255]}

}

}

]

}

]

}

]

}

Rig Types

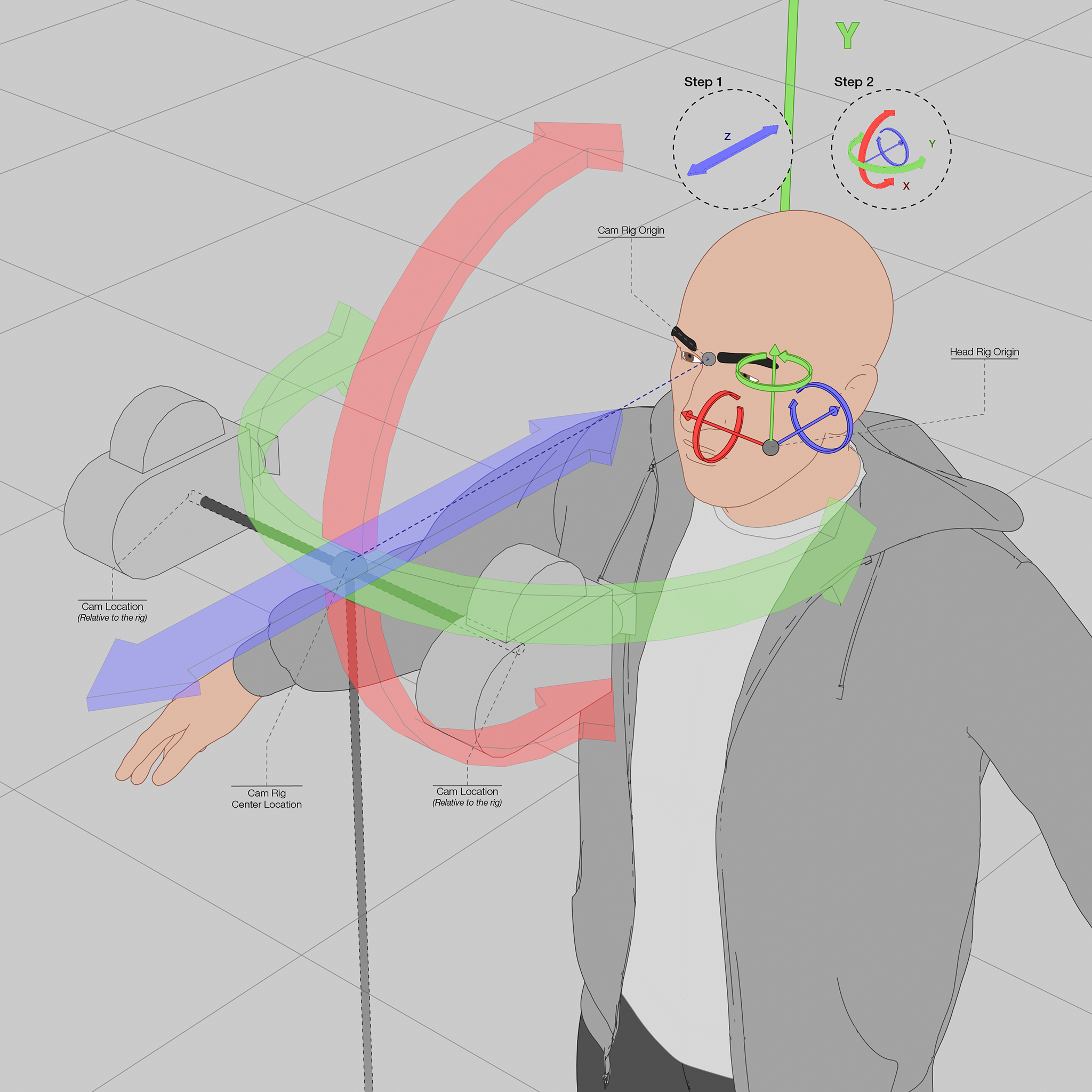

Head Orbit

Description: head_orbit keeps the rig in orbit around the head of the main human. Location transforms along the x and y axes are muted in this mode, and any input for them is ignored. Transform z is the distance from the head.

Origin: 0,0,0 is set at the centroid of the human head.

Transform Specifics: The rig is moved away from the origin by transform z units; it will be facing down the z-axis. Following this transformation, any rotations are applied. Finally the rig is moved closer to the head.

Example: The user wants a 90 degree profile of the head, to be located 5 meters away. The transform would be 0 in the x- and y-axis and 5 for z, with a rotation of yaw: +90.

An example template which uses head orbit rigs is the landmark estimation input JSON.
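To make the order of operations concrete, here is a small Python sketch of the three steps described under Transform Specifics: offset along z, rotate about the origin, then translate to the head. The axis conventions are assumptions made for illustration only (y up, yaw about the y-axis, pitch about the x-axis, pitch applied before yaw); the renderer's actual conventions and rotation signs may differ, and `orbit_position` is a hypothetical helper.

```python
import math


def orbit_position(distance, yaw_deg=0.0, pitch_deg=0.0, head=(0.0, 0.0, 0.0)):
    """Rig position for a head_orbit-style rig under the assumed axis conventions."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Step 1: start on the z-axis, `distance` units away from the origin.
    x, y, z = 0.0, 0.0, distance
    # Step 2a: pitch, modelled here as a rotation about the x-axis (assumption).
    y, z = y * math.cos(pitch) - z * math.sin(pitch), y * math.sin(pitch) + z * math.cos(pitch)
    # Step 2b: yaw, modelled here as a rotation about the y-axis (assumption).
    x, z = x * math.cos(yaw) + z * math.sin(yaw), -x * math.sin(yaw) + z * math.cos(yaw)
    # Step 3: translate so that the orbit is centred on the head.
    return (x + head[0], y + head[1], z + head[2])


# The 90 degree profile from the example: 5 units away, yaw of +90.
print(tuple(round(c, 6) for c in orbit_position(5, yaw_deg=90)))
```

Whatever the rotation, the rig stays `distance` units from the head centroid, which is what makes this an orbit.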

Example of Head Orbit

"camera_and_light_rigs": [{

"type": "head_orbit",

"location": {

"z": {"type": "list","values": [5]}

},

"cameras": [{

"name": "cam1",

"relative_location": {

"x": {"type": "list","values": [-0.5]}

}

},{

"name": "cam2",

"relative_location": {

"x": {"type": "list","values": [0.5]}

}

}]

}],

Head Orbit with Tracking

Description: head_orbit_with_tracking operates exactly like head_orbit except the head joint’s rotation is taken into account and acts as an additional offset. This can be helpful when working with animated gestures. An example use case: the head looks from its left shoulder to its right shoulder over the course of 100 frames. If no rotation is specified, the camera keeps itself oriented directly at the face of the head and will track with it across the whole animation.

Transform Specifics: The rig is moved away from the origin by transform-z units and it will be facing down the z-axis. It is then rotated relative to the head’s rotation — if a rotation offset is specified by the user, the rig is further rotated around the origin. Finally the rig is moved closer to the head.

Example: The user wants a 90 degree profile of the head, to be located 5 meters away. The transform would be 0 in the x- and y-axis and 5 for z, with a rotation of yaw: +90 . This works similarly to head_orbit but this will track with the head when an animation is used.

Example of Head Orbit with Tracking

"camera_and_light_rigs": [

{

"type": "head_orbit_with_tracking",

"location": {

"z": { "type": "list", "values": [6.9]},

"pitch": { "type": "list", "values": [0]},

"yaw": { "type": "list", "values": [90]},

"roll": { "type": "list", "values": [0]},

"y": {"type": "list","values": [-0.9]}

}

}

],

Visual examples of how the camera stays on the head despite the movement:

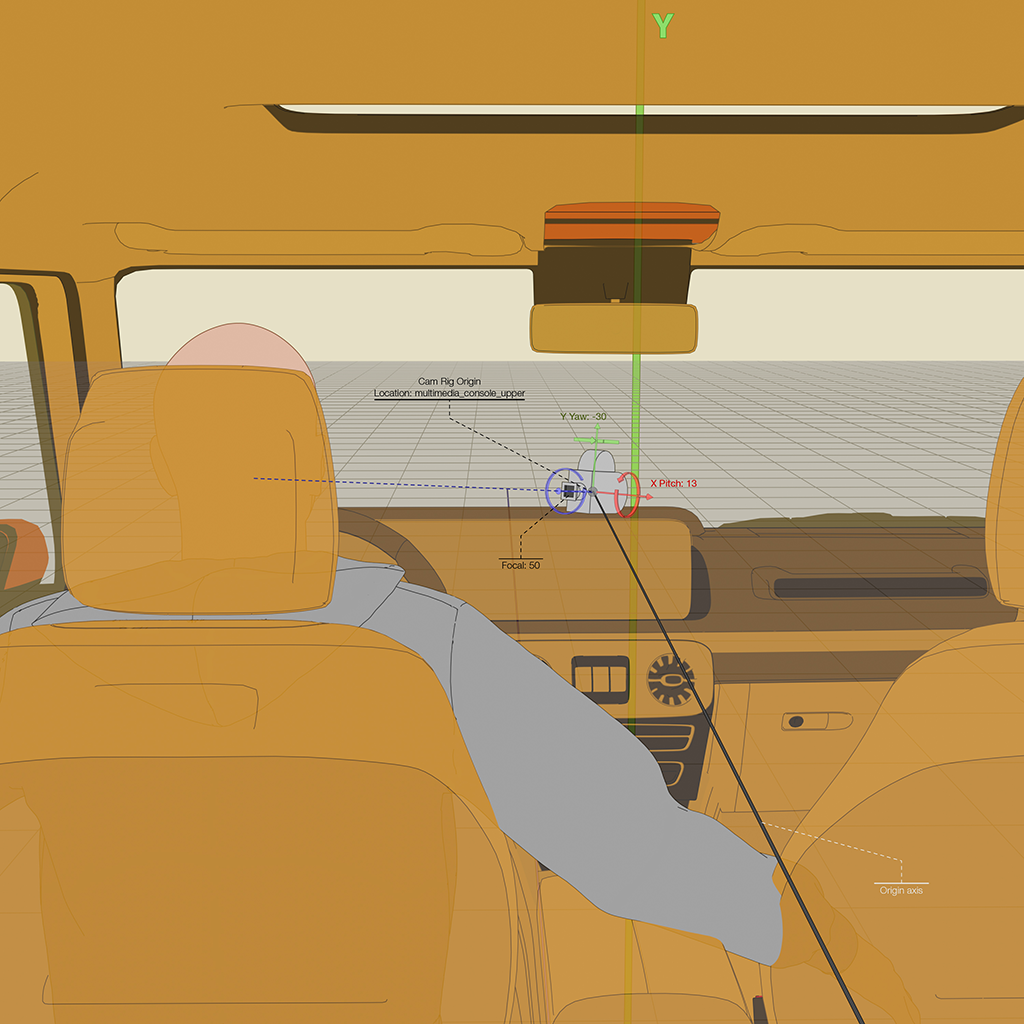

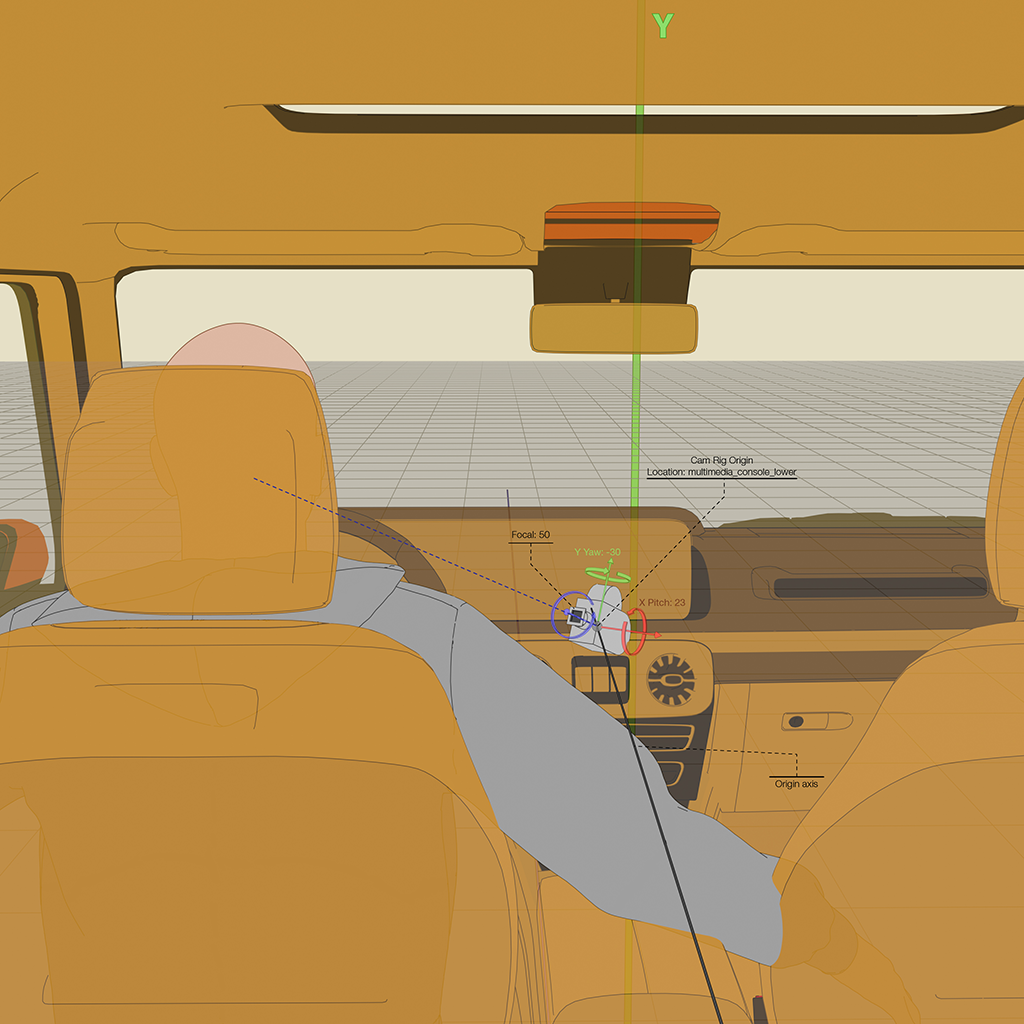

Head Orbit in Car

Description: This operates exactly like head_orbit except the head joint is hard coded in and additional steps are taken to move it down to car level. This limits the orbit of the rig around the head of the human. Transform-x and transform-y are muted in this mode. Transform-z is the distance from the head. Rotation is the orbit rotations.

Origin: 0,0,0 is set at the centroid of the human head.

Transform Specifics: The rig is moved away from the origin by transform z units and it will be facing down the z-axis. Next it is rotated around the origin. Finally the rig is moved to the head.

Example: The user wants a 90 degree profile of the head, located 5 units away. The values entered would be: Transform {0,0,5} and Rotate {0,90,0}

Example of Head Orbit in Car

"camera_and_light_rigs": [{

"type": "head_orbit_in_car",

"location": {

"y": {"type": "list", "values": [-0.0880446]},

"z": {"type": "list", "values": [0.73]}

},

"cameras": [{

"name": "orbit_left",

"relative_location": {

"x": {"type": "list","values": [-0.2]}

},

"specifications": {

"focal_length": {"type": "list", "values": [3.7]},

"sensor_width": {"type": "list", "values": [5.45]}

}

},

{

"name": "orbit_right",

"relative_location": {

"x": {"type": "list","values": [-0.1]}

},

"specifications": {

"focal_length": {"type": "list", "values": [3.7]},

"sensor_width": {"type": "list", "values": [5.45]}

}

}

]

}]

Manual

Description: This permits the user to define where the rig is located within the 3D space. It is the most straightforward of the XformTypes.

Origin: 0,0,0 will set the rig position to the scene origin, which is generally by the feet of the human.

Transform Specifics: Transform is applied after rotation is applied.

Example: What you see is what you get. A rig with Transform {1,5,0} and Rotation {10,0,0} will be placed at 1,5,0 and rotated around the x-axis by ten degrees.

Example of Manual Type

"camera_and_light_rigs": [

{

"type": "manual",

"location": {

"z": { "type": "list", "values": [1] },

"pitch": { "type": "list", "values": [0] },

"yaw": { "type": "list", "values": [0] },

"roll": { "type": "list", "values": [0] }

}

}

],

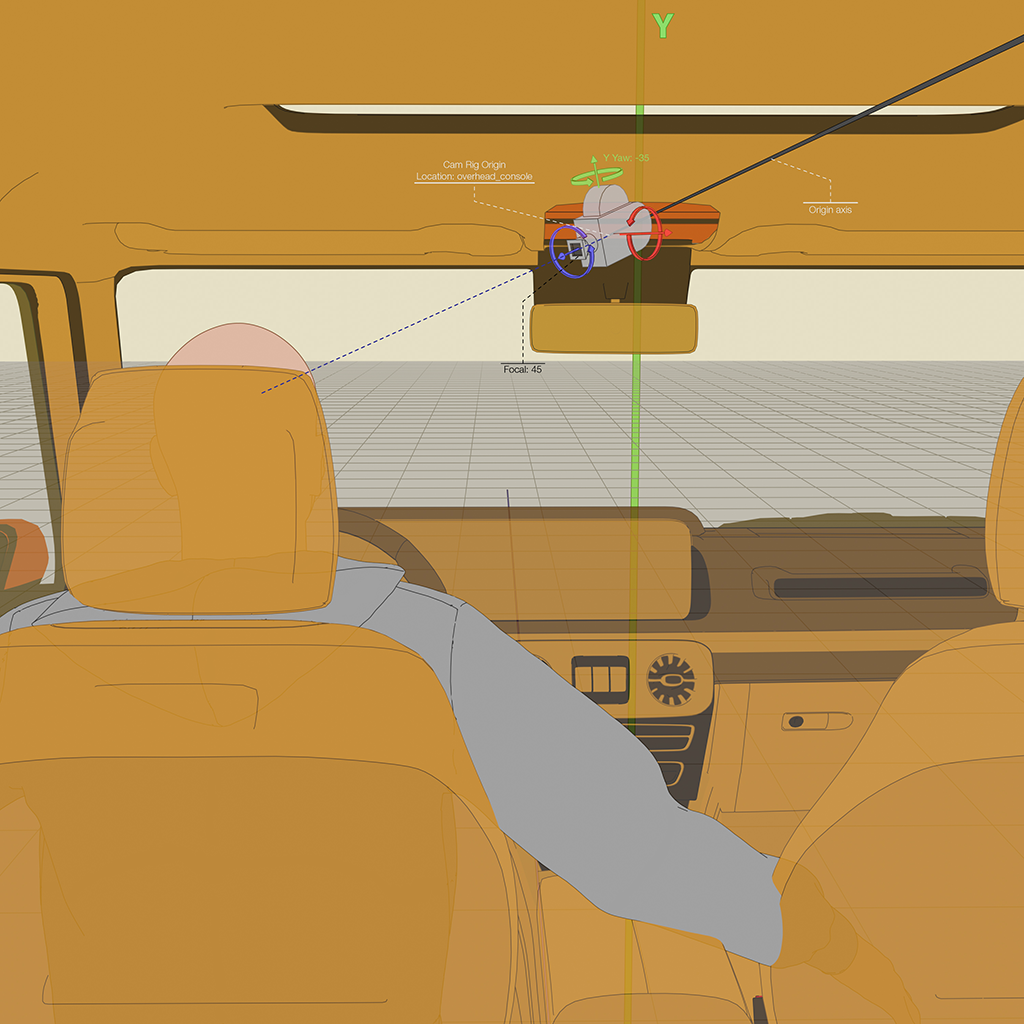

Preset Location

Description: This permits the user to snap the rig to one of the predefined locations within supported 3d_location contexts. Currently, only the vehicles context has preset locations available.

- Presets available to vehicles: rearview_mirror, overhead_console, dashboard, multimedia_console_upper, multimedia_console_lower, pillar_a.

Origin: 0,0,0 will set the rig position to the center position of the preset_location. Adjustment of the preset location is achieved by using the preset_transform. Rig location will still accept pitch, roll, and yaw controls.

Transform Specifics: Transform is applied after rotation is applied.

Example: Placing the rig in the preset location of the rearview mirror and applying a transform of u = 1, which will set the camera closer to the driver horizontally.

Example of Preset Location

"camera_and_light_rigs": [{

"type": "preset_location",

"preset_name": "rearview_mirror",

"cameras": [{

"specifications": {

"focal_length": {"type": "list","values": [30]}

}

}],

"location": {

"yaw": {"type": "list","values": [-30]},

"pitch": {"type": "list","values": [0]}

}

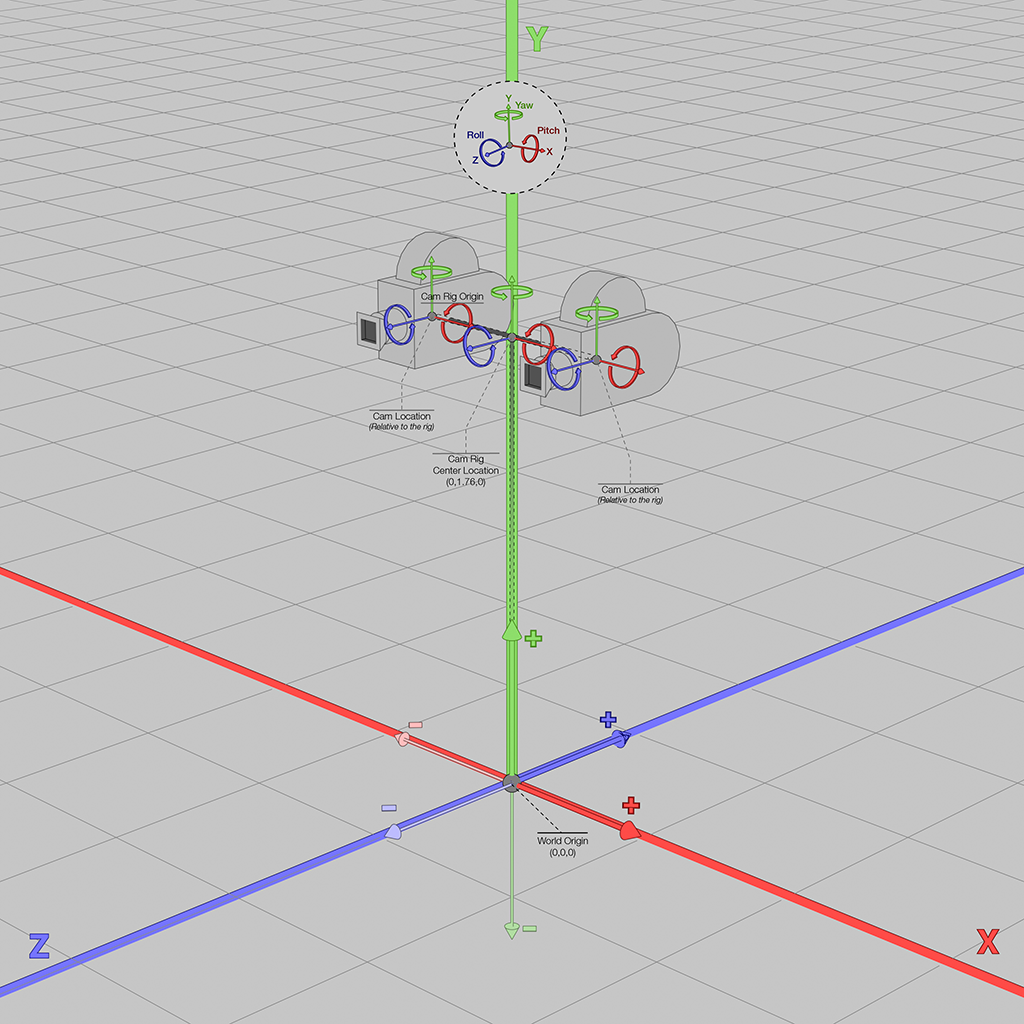

}]Visual example of preset locations:

| Multimedia Console Upper | Multimedia Console Lower | Dashboard | Overhead Console | Rearview Mirror |
|---|---|---|---|---|
| | | | | |

Cameras

Name

A camera name can consist of lowercase alphanumeric characters. It is recommended to name the cameras when more than one are defined in a scene.

name:

- Default: "default"

- Limitations: Must be alphanumeric string, no special characters

Camera Example

"cameras": [

{

"name": "cam1",

"specifications": {

"resolution_h": 1024,

"resolution_w": 1024

}

},

{

"name": "cam2",

"specifications": {

"resolution_h": 1024,

"resolution_w": 1024

}

}

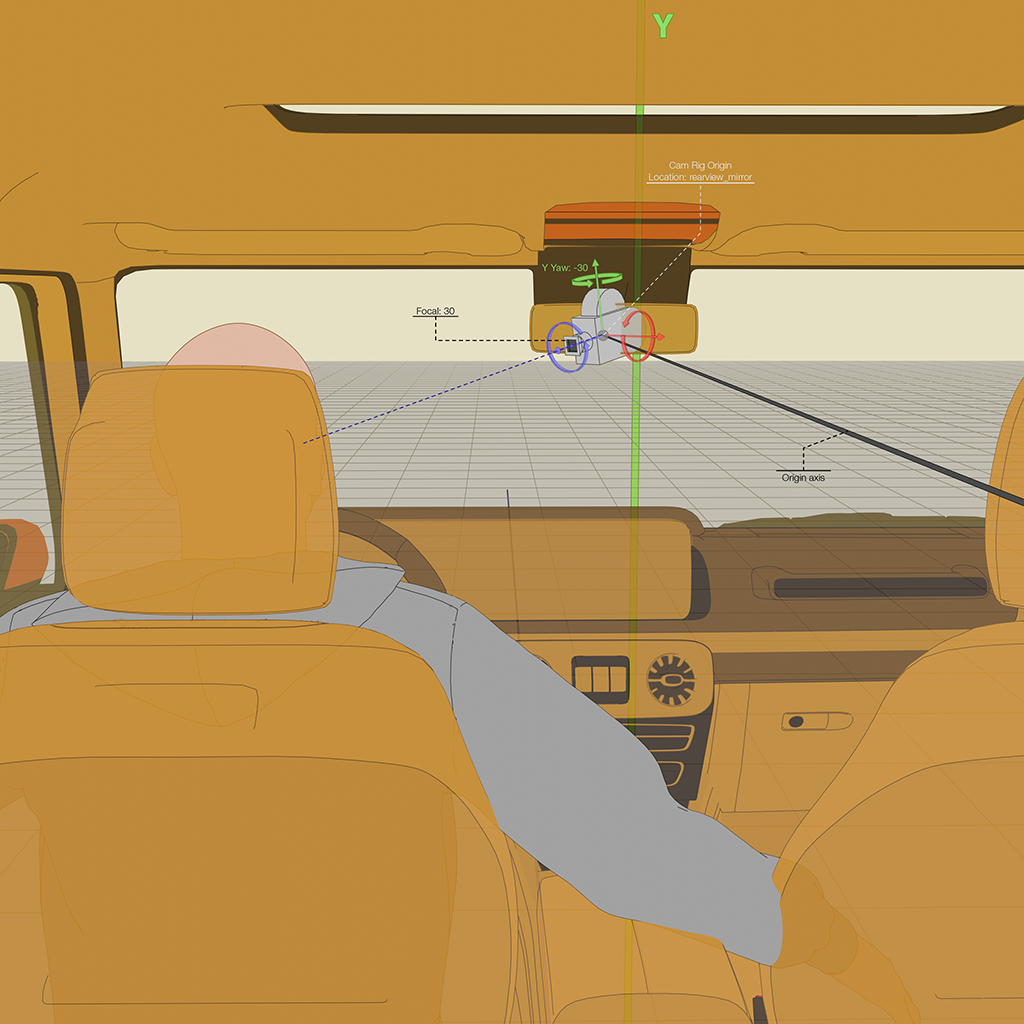

]Visual examples of downloadable content:

Camera Location

Camera relative_location takes the same style of inputs as rig location.

Transform in meters.

x, y and z:

- Default: 0.0

- Min: 0.0

- Max: 1000.0

Rotation in degrees.

pitch, yaw and roll:

- Default: 0.0

- Min: -180

- Max: 180

The order in which the transformation is applied to each camera (relative to the rig) is: pitch, yaw, and then roll.

"camera_and_light_rigs": [{

"location": {

"z": {"type": "list","values": [0]}

},

"cameras": [{

"name": "cam1",

"relative_location": {

"yaw": {"type": "list","values": [180]},

"x": {"type": "list","values": [-0.5]}}

},{

"name": "cam2",

"relative_location": {

"yaw": {"type": "list","values": [180]},

"x": {"type": "list","values": [0.5]}

}

}]

}]

Resolution

The resolution height and width can be set for each camera. The default for a camera is 1024 x 1024 if not specified.

resolution_w and resolution_h:

- Default: 1024

- Min: 256

- Max: 4098

Camera Example

"cameras": [

{

"name": "default",

"specifications": {

"resolution_h": 1024,

"resolution_w": 1024,

"focal_length": { "type": "range", "values": { "min": 100, "max": 100 }},

"sensor_width": { "type": "range", "values": { "min": 33, "max": 33 }},

"wavelength": "visible"

}

}

]

Focal Length

The focal length (power/zoom) of the lens is specified in mm. We follow the standard as used by the vast majority of DSLR camera lenses and CGI rendering engines.

focal_length:

- Default: 100.0

- Min: 1.0

- Max: 300.0

Camera Example

{

"humans": [

{

"identities": {"ids": [80], "renders_per_identity": 1},

"camera_and_light_rigs": [{

"cameras": [{

"specifications": {

"focal_length": {"type": "range", "values": {"min": 40, "max": 190}}

}

}]

}]

}

]

}

Visual examples of camera focal lengths / zoom (see also: full input JSON to generate them):

| | 47.22 | 68.05 | 88.06 | 104.93 | 146.26 | 181.07 |
|---|---|---|---|---|---|---|
| Focal length | | | | | | |

Sensor Width

Camera sensor size may be calculated from its field of view — knowing the focal length of the camera in question is required. Here is the formula for calculating sensor size:

sensor_width = focal_len * tan(hFoV / 2), where hFoV is horizontal FoV and focal_len is in mm (millimeters)

For the purposes of comparison, 1.5mm is a good baseline focal length value for webcams, whereas a standard DSLR camera's focal length is 18mm.

EXAMPLE: Certain webcams may offer 65deg as standard and 90deg as wide-angle. This video demonstrates what different fields of view look like on a Logitech Brio webcam. In the case of a webcam, the formula resolves to:

sensor_width = focal_len * tan(hFoV / 2)

=> sensor_width = 1.5mm * tan(65/2 degrees)

=> sensor_width = 0.955605 mm

sensor_width, measured in millimeters, is the "film back" or "back plate" of the optical sensor.
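The worked example above can be reproduced with a few lines of Python. This sketch implements the formula exactly as it is written in this section; `sensor_width_mm` is a hypothetical helper name. Textbook pinhole-camera derivations usually define the full sensor width as 2 * focal_len * tan(hFoV / 2), so it is worth confirming on a test render which convention the renderer expects.

```python
import math


def sensor_width_mm(focal_len_mm, hfov_deg):
    """sensor_width = focal_len * tan(hFoV / 2), as written in this section."""
    return focal_len_mm * math.tan(math.radians(hfov_deg) / 2)


# Webcam example from the text: 1.5 mm focal length, 65 degree horizontal FoV.
print(round(sensor_width_mm(1.5, 65), 6))  # 0.955605
```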

sensor_width:

- Default: 33.0

- Min: 0.001

- Max: 1000.0
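A camera's specifications block can be checked against the limits listed in the Resolution, Focal Length, and Sensor Width sections before submitting a job. The sketch below is a hypothetical client-side check (`check_specifications` and `candidate_values` are illustrative names); the limits are copied from this document as written, and the service may enforce them differently.

```python
# Documented (min, max) limits for each camera specification.
LIMITS = {
    "resolution_w": (256, 4098),
    "resolution_h": (256, 4098),
    "focal_length": (1.0, 300.0),
    "sensor_width": (0.001, 1000.0),
}


def candidate_values(setting):
    """Flatten a plain number, a list distribution, or a range distribution."""
    if isinstance(setting, dict):
        values = setting["values"]
        if setting["type"] == "range":
            return [values["min"], values["max"]]
        return list(values)
    return [setting]


def check_specifications(spec):
    """Return a message for every documented limit that `spec` violates."""
    problems = []
    for key, (low, high) in LIMITS.items():
        if key not in spec:
            continue
        for value in candidate_values(spec[key]):
            if not low <= value <= high:
                problems.append(f"{key}={value} outside [{low}, {high}]")
    return problems


spec = {
    "resolution_h": 1024,
    "resolution_w": 1024,
    "focal_length": {"type": "range", "values": {"min": 100, "max": 100}},
    "sensor_width": {"type": "range", "values": {"min": 33, "max": 33}},
    "wavelength": "visible",
}
print(check_specifications(spec))  # []
```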

Camera Example

"cameras": [

{

"name": "default",

"specifications": {

"resolution_h": 1024,

"resolution_w": 1024,

"focal_length": { "type": "range", "values": { "min": 100, "max": 100 }},

"sensor_width": { "type": "range", "values": { "min": 33, "max": 33 }},

"wavelength": "visible"

}

}

]

Wavelength

There are two wavelength settings, visible and nir. The default is the visible range of light. When nir is selected, the camera will be set to also accept a range of near infrared wavelengths. At least one light must also have its wavelength set to nir for the render to appear properly. Visible cameras are not affected by near infrared, and NIR cameras will turn out very dark renders without at least one light to emit the NIR wavelengths into the scene.

More examples of NIR images can be found here.

wavelength

- Default: "visible"

- Types: "visible", "nir"
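Because an NIR camera renders very dark without an NIR light, it can help to check a rig array for that pairing before submitting. This is a minimal sketch with hypothetical helper names; it assumes the camera wavelength is found under `specifications` and the light wavelength directly on the light, as in the example in this section.

```python
def uses_nir_camera(rigs):
    """True if any camera in any rig has its wavelength set to nir."""
    return any(
        camera.get("specifications", {}).get("wavelength", "visible") == "nir"
        for rig in rigs
        for camera in rig.get("cameras", [])
    )


def has_nir_light(rigs):
    """True if any light in any rig emits in the nir wavelength."""
    return any(
        light.get("wavelength", "visible") == "nir"
        for rig in rigs
        for light in rig.get("lights", [])
    )


def nir_setup_ok(rigs):
    """True unless an NIR camera is present without any NIR light."""
    return not uses_nir_camera(rigs) or has_nir_light(rigs)


rigs = [
    {"type": "head_orbit", "cameras": [{"specifications": {"wavelength": "nir"}}]},
    {"type": "head_orbit", "lights": [{"type": "omni", "wavelength": "nir"}]},
]
print(nir_setup_ok(rigs))  # True
```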

Camera Example

"camera_and_light_rigs": [

{

"type": "head_orbit",

"cameras": [{"specifications": {"wavelength": "nir"}}],

"location": {

"z": {"type": "list","values": [2.4]},

"yaw": {"type": "list","values": [-20]},

"pitch": {"type": "list","values": [-7]}

}

},

{

"type": "head_orbit",

"lights": [{

"type": "omni",

"color": {

"red": {"type": "list","values": [255]},

"blue": {"type": "list","values": [255]},

"green": {"type": "list","values": [255]}

},

"intensity": {"type": "list","values": [0.0015]},

"wavelength": "nir",

"size_meters": {"type": "list","values": [0.0015]},

"relative_location": {

"x": {"type": "list","values": [-0.1]},

"y": {"type": "list","values": [0.01]},

"z": {"type": "list","values": [-0.77]},

"yaw": {"type": "list","values": [0.1]},

"roll": {"type": "list","values": [0.1]},

"pitch": {"type": "list","values": [0.1]}

}

}],

"location": {"z": {"type": "list","values": [1]}}

}

]

Visual examples of a NIR image:

Stereo Image

It is possible to position two different cameras in order to recreate a stereo image. Stereo images are common in robotic and teleconferencing applications and are meant to emulate how the eyes work: two images taken from slightly different perspectives can be used to infer the distance to an object. This is somewhat binary based on individual needs: an application will either have a stereo camera or not.
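As an illustration of how distance can be inferred from a stereo pair, the sketch below applies the standard rectified-stereo relation depth = focal_px * baseline / disparity. It assumes two parallel cameras that differ only in their x offset (such as -0.1 and 0.1, giving a 0.2 m baseline), that sensor_width is the full sensor width, and the default focal length, sensor width, and resolution listed earlier. The disparity value is made up for the example, and the function names are hypothetical.

```python
def focal_length_px(focal_length_mm, sensor_width_mm, resolution_w):
    """Convert a focal length in mm to pixels (assumes sensor_width is the full width)."""
    return focal_length_mm / sensor_width_mm * resolution_w


def depth_from_disparity(disparity_px, baseline_m, focal_px):
    """Distance in meters to a point seen with the given horizontal disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


baseline = 0.1 - (-0.1)                       # distance between the two cameras, in meters
f_px = focal_length_px(100.0, 33.0, 1024)     # documented defaults
print(depth_from_disparity(400.0, baseline, f_px))
```

A larger baseline or a longer focal length increases the disparity for a given distance, which generally makes depth estimates less sensitive to pixel-level matching errors.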

Camera Example

{

"humans": [{

"identities": { "ids": [80],"renders_per_identity": 1},

"camera_and_light_rigs": [{

"type": "head_orbit",

"location": {

"pitch": {"type": "list","values": [-20, 0, 20]},

"z": {"type": "list","values": [1.5]}

},

"cameras": [

{

"name": "leftone",

"relative_location": {

"x": {"type": "list", "values": [-0.1]}

}

},{

"name": "rightone",

"relative_location": {

"x": {"type": "list", "values": [0.1]}

}

}

]

}]

}]

}

Visual example of a stereo image:

| Stereo Image | Left cam | Right cam |
|---|---|---|
| Pitch: -20, x: -0.1 / Pitch: -20, x: 0.1 | | |
| Pitch: 0, x: -0.1 / Pitch: 0, x: 0.1 | | |
| Pitch: 20, x: -0.1 / Pitch: 20, x: 0.1 | | |

Additional Camera Settings

The advanced specifications section holds a few additional settings:

- Noise Threshold

- Denoise

- Lens Shift / Perspective

- Window Offsets

Denoise and Noise Threshold

denoise controls whether or not denoising is applied; the default is true.

noise_threshold sets the level; the current default is 0.017.

Offsets and Lens Shifts

window_offset_horizontal and window_offset_vertical are V-Ray-specific parameters related to the camera’s intrinsics; see the V-Ray documentation.

window_offset_horizontal and window_offset_vertical:

- Default: 0

- Min: -100

- Max: 100

lens_shift_horizontal and lens_shift_vertical relate to tilt-shift; see the Wikipedia article.

lens_shift_horizontal and lens_shift_vertical:

- Default: 0

- Min: -100

- Max: 100

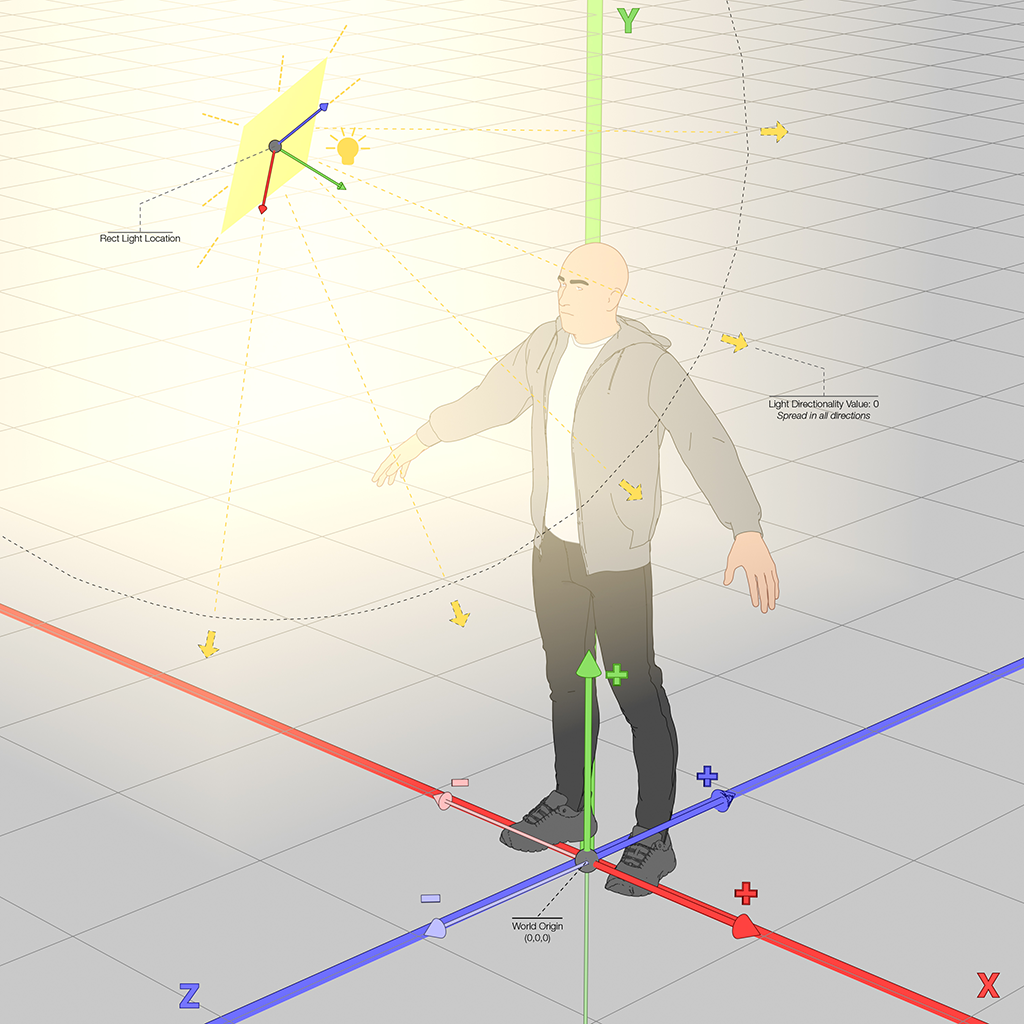

Lights Overview

Lights are able to be customized to a number of different use cases. Five different types are available representing real world configurations such as "rectangle" and "omnidirectional". Each light can be further refined to a size in meters and a brightness intensity. Colors are represented as RGB and allow for variations by setting value ranges. For near infrared setups, the wavelength can be changed to nir. Finally, a light should be given a relative location to offset it from the rig, cameras, and other lights as necessary.

At least one light must have its wavelength set to nir for an NIR render to appear properly. Visible cameras are not affected by near infrared, and NIR cameras will turn out very dark renders without at least one light to emit the NIR wavelengths into the scene.

An example template which uses lights in a rig is the teleconferencing matting input JSON.

type

- Default: "rect"

size_meters

- Default: 0.25

- Min: 0

- Max: 3

intensity

- Default: 0

- Min: 0

- Max: 7000

wavelength

- Default: "visible"

- Types: "visible", "nir"

Example of Spot Light

"lights": [

{

"type": "spot",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

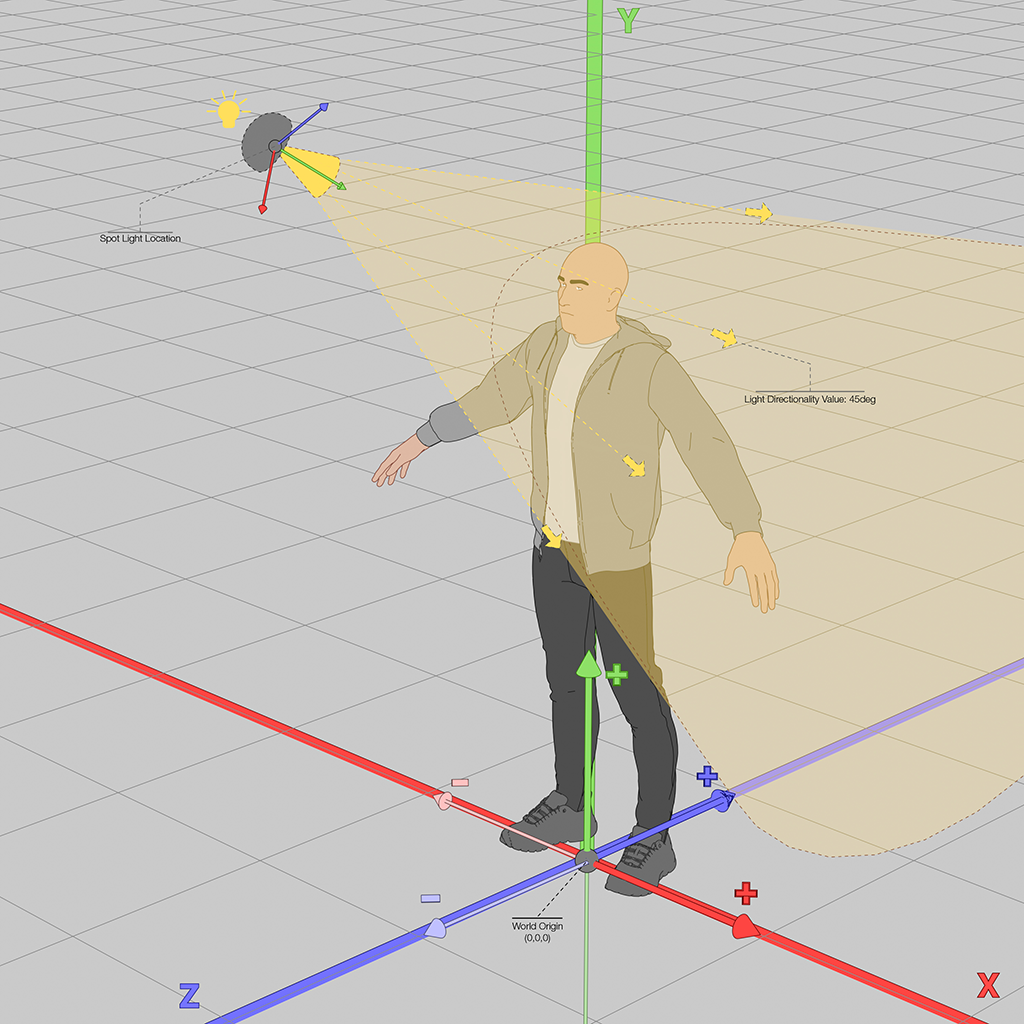

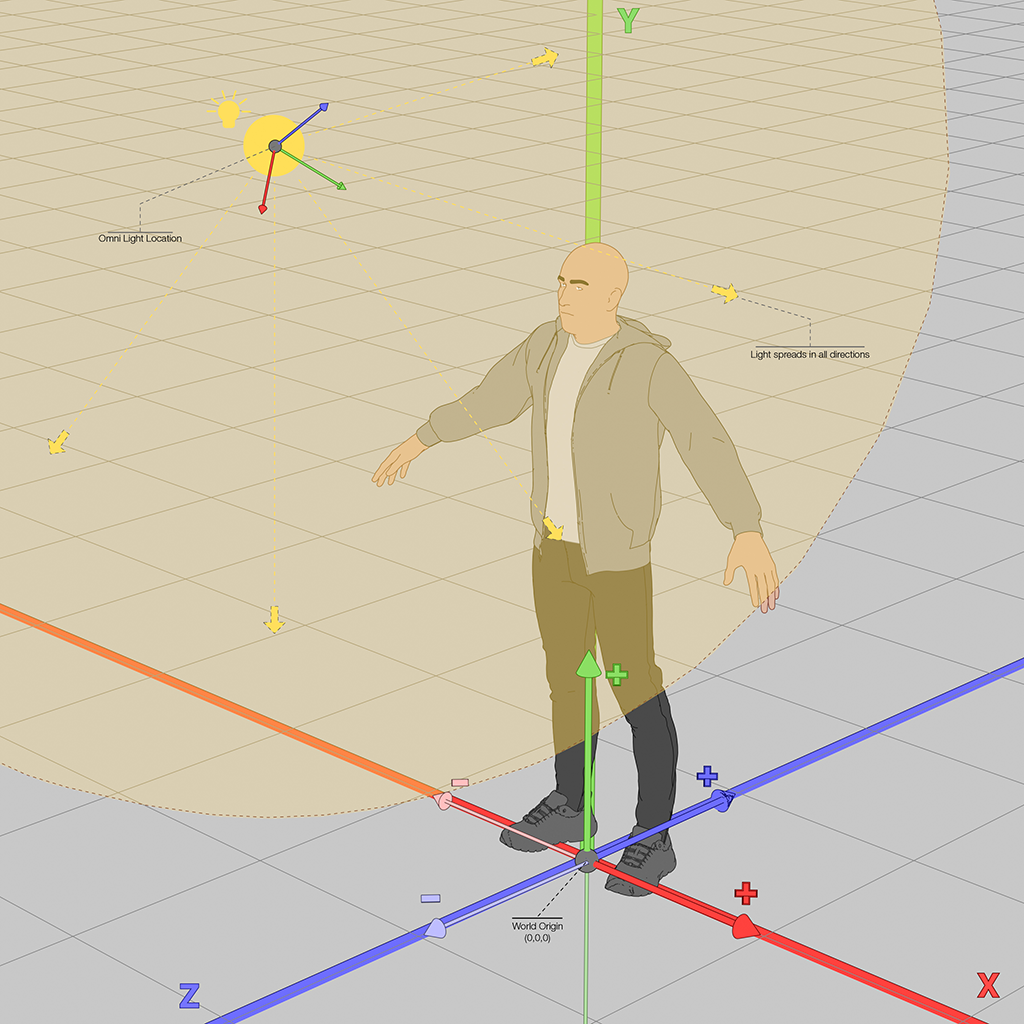

Light Types

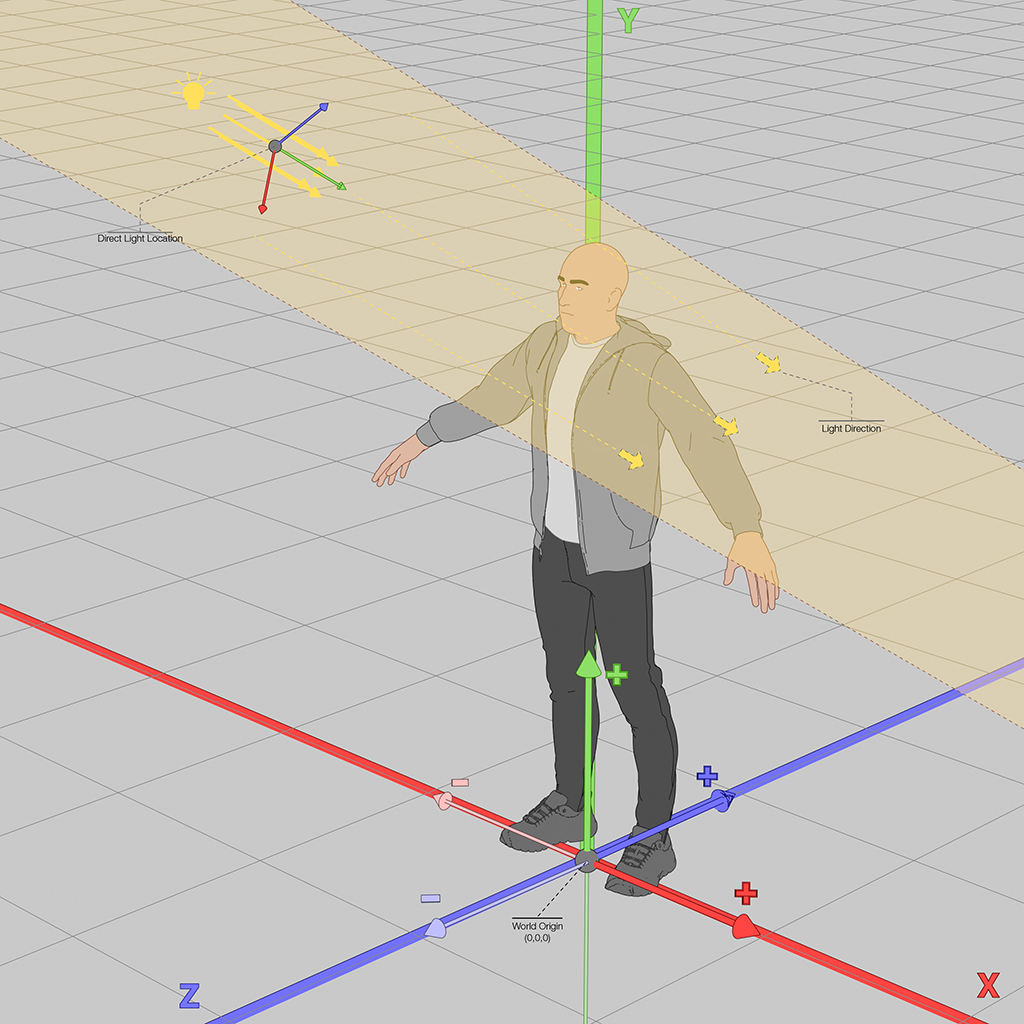

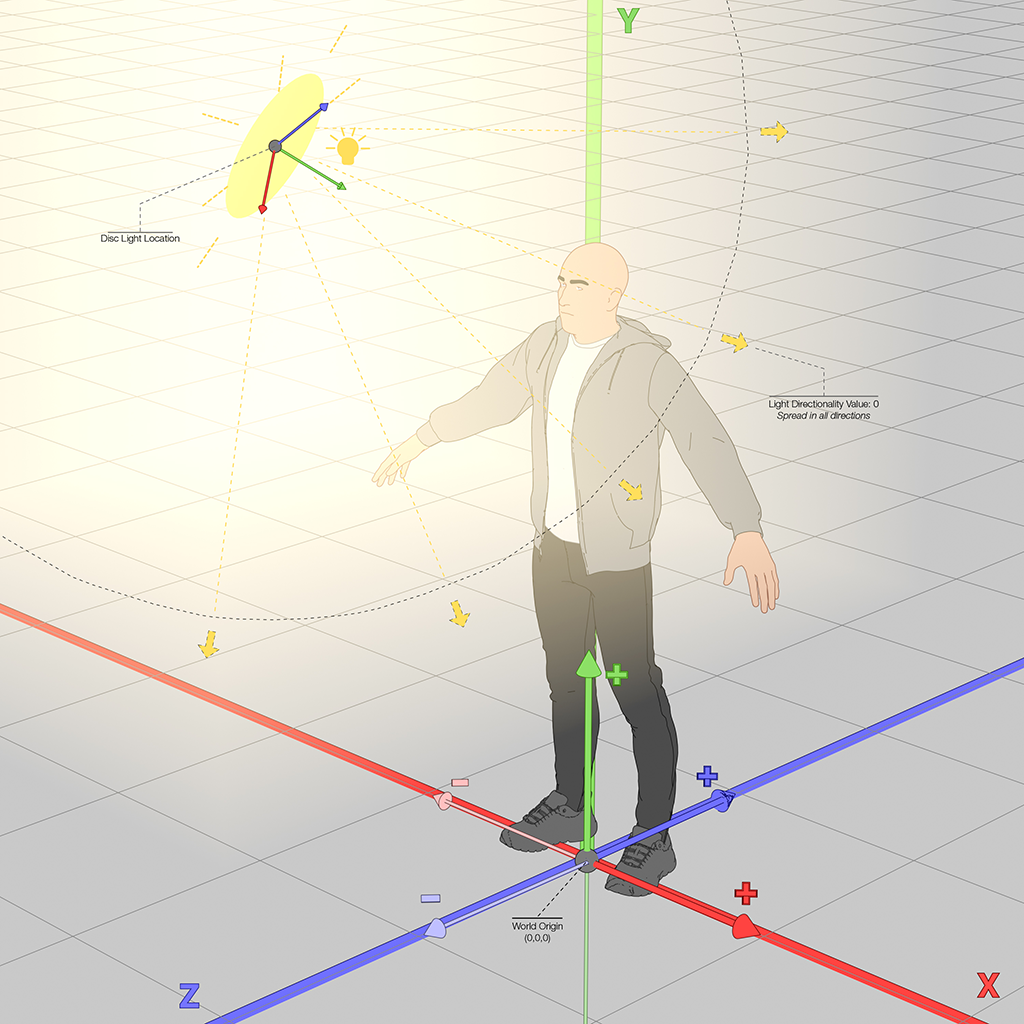

Visual example of light types:

| | Rect | Spot | Omni | Direct | Disc |
|---|---|---|---|---|---|
| Diagram | | | | | |
| Visual example | | | | | |

Rect

Description: This light casts light out from a given area. As the name suggests, that area is defined by a rectangle; for ease of use, however, it is limited to a uniform square of light. The larger the area, the softer the shadows.

Available Intensities: 70-7000

Available Sizes: 0-3 meters

Example of Rect Light

"lights": [

{

"type": "rect",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

Spot

Description: This light casts light only within a cone. Objects outside of this cone will receive no light.

Available Intensities: 10-150

Available Sizes: 0-3 meters

Example of Spot Light

"lights": [

{

"type": "spot",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

Omni

Description: Casts light in all directions uniformly. This is a quick way of illuminating an interior environment. The most common use case for this would be an object in a scene that is giving off light, such as a light bulb.

Available Intensities: 10-150

Available Sizes: 0-3 meters

Example of Omni Light

"lights": [

{

"type": "omni",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

Direct

Description: This light represents a source so far away that it is best described as just a direction in space. The most common use for this light is to replicate the sun.

Available Intensities: 1-40

Available Sizes: 0-3 meters

Example of Direct Light

"lights": [

{

"type": "direct",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

Disc

Description: Just like the rectangle light, this gives off light based on a geometric shape. The shape in this case is a disc. Disc lights are typically quite artistic in use: they are often used as eye highlights, as they give a very nice round highlight that in cinema is referred to as the catch light.

Available Intensities: 70-7000

Available Sizes: 0-3 meters

Example of Disc Light

"lights": [

{

"type": "disc",

"size_meters": { "type": "range", "values": {"min": 0.01, "max": 3.0 }},

"intensity": { "type": "range", "values": {"min": 0.0, "max": 30.0 }},

"wavelength": "visible"

}

]

Lights Color

Color for lights is set as a range for each color to allow for variation.

red, green and blue:

- Default: 255

- Min: 0

- Max: 255

"lights": [

{

"type": "rect",

"size_meters": {"type": "list","values": [0.25]},

"intensity": {"type": "list","values": [1000]},

"relative_location": {

"yaw": {"type": "list","values": [-90]},

"x": {"type": "list","values": [-1]}

},

"color": {

"red": {"type": "list","values": [255]},

"blue": {"type": "list","values": [0]},

"green": {"type": "list","values": [0]}

}

},

{

"type": "rect",

"size_meters": {"type": "list","values": [0.25]},

"intensity": {"type": "list","values": [1000]},

"relative_location": {

"yaw": {"type": "list","values": [90]},

"x": {"type": "list","values": [1]}

},

"color": {

"red": {"type": "list","values": [0]},

"blue": {"type": "list","values": [255]},

"green": {"type": "list","values": [0]}

}

}

]

Visual examples of 2 colored lights:

Lights Location

Transform

x, y and z:

- Default: 0.0

- Min: 0.0

- Max: 1000.0

Rotation

pitch, yaw and roll:

- Default: 0.0

- Min: -180

- Max: 180

"lights": [

{

"type": "disc",

"relative_location": {

"pitch": { "type": "range", "values": { "min": 0, "max": 180 }},

"yaw": { "type": "range", "values": { "min": 0, "max": 180 }},

"roll": { "type": "range", "values": { "min": 0, "max": 180 }},

"x": { "type": "range", "values": { "min": 0, "max": 1000 }},

"y": { "type": "range", "values": { "min": 0, "max": 1000 }},

"z": { "type": "range", "values": { "min": 0, "max": 1000 }}

}

}

]
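The documented ranges for lights (intensity per light type, size in meters, and RGB color) can be collected into a simple pre-submission check. The sketch below uses hypothetical helper names and returns warnings instead of raising errors, because several snippets in this section use intensities outside the "Available Intensities" ranges (for example 0.0 to 30.0 on a rect light), so the service may be more permissive than the listed ranges.

```python
# "Available Intensities" per light type, as listed in the Light Types sections.
INTENSITY_RANGES = {
    "rect": (70, 7000), "spot": (10, 150), "omni": (10, 150),
    "direct": (1, 40), "disc": (70, 7000),
}
SIZE_RANGE = (0, 3)      # meters
COLOR_RANGE = (0, 255)   # per RGB channel


def spread(setting):
    """Lowest and highest value that a list or range distribution can produce."""
    values = setting["values"]
    if setting["type"] == "range":
        return values["min"], values["max"]
    return min(values), max(values)


def light_warnings(light):
    """Return a warning for every setting that leaves its documented range."""
    kind = light.get("type", "rect")  # "rect" is the documented default type
    checks = [("intensity", INTENSITY_RANGES[kind]), ("size_meters", SIZE_RANGE)]
    checks += [(f"color.{channel}", COLOR_RANGE) for channel in ("red", "green", "blue")]
    warnings = []
    for path, (low, high) in checks:
        node = light
        for part in path.split("."):
            node = node.get(part) if isinstance(node, dict) else None
        if node is None:
            continue  # setting not given, so the default applies
        lo, hi = spread(node)
        if lo < low or hi > high:
            warnings.append(f"{kind} {path} {lo}..{hi} outside {low}..{high}")
    return warnings


spot = {"type": "spot", "intensity": {"type": "range", "values": {"min": 0.0, "max": 30.0}}}
print(light_warnings(spot))
```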

Environment

HDRI Map

An environment surrounding the face is determined by an HDRI map. HDRI maps provide the primary lighting for the scene in terms of where the sun and surrounding lights are, and how bright they are, as well as color of the background. There are many to choose from, and they can also be rotated and increased/decreased in level/intensity to brighten the scene. For many use-cases, this is the only type of environment that needs to be specified.

Folder containing all the HDRI images

name:

- Default: ["lauter_waterfall"]

- Note: Use ["all"] as a shorthand for every option

intensity:

- Default: 0.75

- Min: 0.0

- Max: 5.0

rotation:

- Default: 0

- Min: -180

- Max: 180
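One way to vary lighting direction is to sweep the HDRI rotation over evenly spaced angles. The Python sketch below builds an environment block with such a sweep; `hdri_block` and its `steps` parameter are hypothetical conveniences, and only the field names and limits come from the lists above.

```python
import json


def hdri_block(names, intensity=0.75, steps=4):
    """Environment block with `steps` evenly spaced rotations starting at -180 degrees."""
    if not 0.0 <= intensity <= 5.0:
        raise ValueError("intensity must be within 0.0 to 5.0")
    rotations = [-180 + i * 360 / steps for i in range(steps)]
    return {
        "hdri": {
            "name": list(names),
            "intensity": {"type": "list", "values": [intensity]},
            "rotation": {"type": "list", "values": rotations},
        }
    }


print(json.dumps(hdri_block(["lauter_waterfall"]), indent=2))
```

With the default of four steps, the rotation values are -180, -90, 0, and 90 degrees, all within the documented range.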

Higher intensity with static rotation:

{

"humans": [{

"identities": {

"ids": [80],

"renders_per_identity": 1

},

"skin": {"highest_resolution": true},

"environment": {

"hdri": {

"name": ["all"],

"intensity": {"type": "list","values": [0.75]},

"rotation": {"type": "list","values": [120]}

}

}

}]

}

Visual examples of available HDRIs (see also: full input JSON to generate them):

Inputs Reference

| Fields | Type | Enum/Type | Min | Max | Default | ||||

|---|---|---|---|---|---|---|---|---|---|

| "version" | number | 1 | |||||||

|

"humans"

|

|||||||||

|

"identities"

|

|||||||||

| "ids" | array | int | [80] | ||||||

|

"renders_per_identity"

|

integer | 1 | 1000 | 1 | |||||

|

"facial attributes"

|

|||||||||

|

"expression"

|

|||||||||

|

"name"

|

list | Expressions | "none" | ||||||

|

"intensity"

|

list or range | number | 0 | 1 | [0.75] | ||||

|

"gaze"

|

|||||||||

|

"horizontal_angle"

|

list or range | number | -30 | 30 | [0] | ||||

|

"vertical_angle"

|

list or range | number | -30 | 30 | [0] | ||||

|

"head_turn"

|

|||||||||

|

"pitch"

|

list or range | number | -45 | 45 | [0] | ||||

| "yaw" | list or range | number | -45 | 45 | [0] | ||||

| "roll" | list or range | number | -45 | 45 | [0] | ||||

| "hair" | |||||||||

|

"style"

|

list | Styles | "none" | ||||||

|

"color"

|

list | Colors | "chocolate brown" | ||||||

|

"color_seed"

|

list or range | number | [0] | ||||||

|

"relative_length"

|

list or range | number | 0.5 | 1 | [1.0] | ||||

|

"relative_density"

|

list or range | number | 0.5 | 1 | [1.0] | ||||

|

"sex_matched_only"

|

bool | true | |||||||

|

"ethnicity_matched_only"

|

bool | false | |||||||

|

"facial_hair"

|

|||||||||

|

"style"

|

list | Styles | ["none"] | ||||||

|

"color"

|

list | Colors | ["chocolate brown"] | ||||||

|

"color_seed"

|

list or range | number | 0.0 | 1.0 | [1.0] | ||||

|

"relative_length"

|

list or range | number | 0.5 | 1.0 | [1.0] | ||||

|

"relative_density"

|

list or range | number | 0.5 | 1.0 | [1.0] | ||||

|

"match_hair_color"

|

bool | true | |||||||

|

"eyebrows"

|

|||||||||

|

"style"

|

list | Styles | varies by ID | ||||||

|

"relative_density"

|

list or range | number | 0.7 | 1.0 | varies by ID | ||||

|

"relative_length"

|

list or range | number | 0.7 | 1.0 | varies by ID | ||||

|

"color"

|

list | "chocolate brown" | |||||||

|

"color_seed"

|

list or range | number | 0.0 | 0.0 | any postive number | ||||

|

"match_hair_color"

|

bool | true | |||||||

|

"sex_matched_only"

|

bool | true | |||||||

|

"eyes"

|

|||||||||

|

"iris_color"

|

list | Iris Color IDs | Per ID | ||||||

|

"pupil_dilation"

|

list or range | number | 0.0 | 1.0 | [0.5] | ||||

|

"redness"

|

list or range | number | 0.0 | 1.0 | [0.0] | ||||

|

"accessories"

|

|||||||||

|

"glasses"

|

|||||||||

|

"style"

|

list | Styles | |||||||

|

"lens_color"

|

list | Lens Colors | |||||||

|

"transparency"

|

list or range | number | 0 | 1 | [0] | ||||

|

"metalness"

|

list or range | number | 0 | 1 | [1] | ||||

|

"sex_matched_only"

|

bool | true | |||||||

|

"headwear"

|

|||||||||

|

"style"

|

list | Styles | ["none"] | ||||||

|

"sex_matched_only"

|

bool | true | |||||||

|

"masks"

|

|||||||||

|

"style"

|

list | Styles | ["none"] | ||||||

|

"position"

|

list | Positions | ["2"] | ||||||

|

"variant"

|

list | Variants | ["0"] | ||||||

|

"headphones"

|

|||||||||

|

"style"

|

list | Styles | ["none"] | ||||||

|

"environment"

|

|||||||||

| "hdri" | |||||||||

|

"name"

|

list | HDRIs | ["lauter_waterfall"] | ||||||

|

"intensity"

|

list | number | 0.0 | 5 | [0.75] | ||||

|

"rotation"

|

list or range | number | -180 | 180 | [0.0] | ||||

|

"body"

|

|||||||||

|

"enabled"

|

bool | false | |||||||

|

"height"

|

list | Height | Per ID | ||||||

| "fat_content" | list | Fat Content | Per ID | ||||||

|

"skin"

|

|||||||||

|

"highest_resolution"

|

bool | false | |||||||

|

"gesture"

|

|||||||||

|

"name"

|

list | Gestures | ["default"] | ||||||

| "mirrored" | bool | false | |||||||

| "keyframe_only" | bool | true | |||||||

| "position_seed" | list or range | number | 0.0 | 0.0 | any positive number | ||||

|

"clothing"

|

|||||||||

|

"outfit"

|

list | Outfits | Each ID has a predetermined outfit | ||||||

|

"sex_matched_only"

|

bool | true | |||||||

|

"3d_location"

|

list | ||||||||

|

"specifications"

|

object | defaults to an object with Type: Open | |||||||

|

"type"

|

string | see 3D Locations | "open" | ||||||

|

"properties"

|

object | varies by Type | |||||||

|

"camera_and_light_rigs"

|

list | ||||||||

|

"type"

|

string | "head_orbit" | |||||||

|

"location"

|

object | ||||||||

|

"pitch"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"yaw"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"roll"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"x"

|

range | int | -1000 | 1000 | 0 | ||||

|

"y"

|

range | int | -1000 | 1000 | 0 | ||||

|

"z"

|

range | int | -1000 | 1000 | 1 | ||||

|

"cameras"

|

list | ||||||||

|

"name"

|

string | "default" | |||||||

|

"specifications"

|

|||||||||

|

"resolution_h"

|

int | 256 | 4096 | 1024 | |||||

|

"resolution_w"

|

int | 256 | 4096 | 1024 | |||||

|

"focal_length"

|

list or range | number | 1.0 | 300.0 | [100.0] | ||||

|

"sensor_width"

|

list or range | number | 0.0 | 1000.0 | [33.0] | ||||

|

"wavelength"

|

string | "visible" | |||||||

|

"relative_location"

|

|||||||||

|

"pitch"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"yaw"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"roll"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"x"

|

range | int | -1000 | 1000 | 0 | ||||

|

"y"

|

range | int | -1000 | 1000 | 0 | ||||

|

"z"

|

range | int | -1000 | 1000 | 0 | ||||

|

"advanced"

|

|||||||||

|

"lens_shift_horizontal"

|

list or range | number | -100.0 | 100.0 | [0.0] | ||||

|

"lens_shift_vertical"

|

list or range | number | -100.0 | 100.0 | [0.0] | ||||

|

"window_offset_horizontal"

|

list or range | number | -100.0 | 100.0 | [0.0] | ||||

|

"window_offset_vertical"

|

list or range | number | -100.0 | 100.0 | [0.0] | ||||

|

"noise_threshold"

|

list or range | number | 0 | 1 | [0.017] | ||||

|

"denoise"

|

bool | true | |||||||

|

"lights"

|

list | ||||||||

|

"type"

|

|||||||||

| "wavelength" | string | "visible" | |||||||

| "intensity" | list or range | number | 0.0 | 7000.0 | 4 | ||||

| "size_meters" | number | 0.0 | 3.0 | 0.0 | |||||

|

"relative_location"

|

|||||||||

|

"pitch"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"yaw"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"roll"

|

range | number | -180.0 | 180.0 | 0 | ||||

|

"x"

|

range | int | -1000 | 1000 | 0 | ||||

|

"y"

|

range | int | -1000 | 1000 | 0 | ||||

|

"z"

|

range | int | -1000 | 1000 | 0 | ||||

|

"color"

|

int | 0 | |||||||

|

"red"

|

int | 0 | 255 | 0 | |||||

|

"green"

|

int | 0 | 255 | 0 | |||||

|

"blue"

|

int | 0 | 255 | 0 |
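The limits in the table above can be checked locally before a job is submitted. The following is a minimal sketch, not part of the Synthesis AI CLI: the helper names `spec_values` and `check_section` and the `BOUNDS` dictionary are hypothetical, and only a few rows of the table are copied in. It assumes values are written in the `{"type": "list" | "range", "values": ...}` form used in the examples, and that the percents in each section must sum to 100, as the note under Important Concepts states.

```python
# Illustrative pre-submission check; these helpers are hypothetical and
# are not part of the Synthesis AI CLI.

# (min, max) bounds copied from the table above, keyed by section because
# names such as "pitch" and "intensity" have different limits elsewhere.
BOUNDS = {
    "expression": {"intensity": (0, 1)},
    "gaze": {"horizontal_angle": (-30, 30), "vertical_angle": (-30, 30)},
    "head_turn": {"pitch": (-45, 45), "yaw": (-45, 45), "roll": (-45, 45)},
}


def spec_values(spec):
    """Return the numbers to bounds-check for a list- or range-typed value."""
    if spec["type"] == "list":
        return list(spec["values"])
    if spec["type"] == "range":
        return [spec["values"]["min"], spec["values"]["max"]]
    raise ValueError(f"unknown value type: {spec['type']!r}")


def check_section(section, groups):
    """Return a list of problems found in one section's percent groups."""
    problems = []
    total = sum(group.get("percent", 0) for group in groups)
    if total != 100:
        problems.append(f"{section}: percents sum to {total}, expected 100")
    for index, group in enumerate(groups):
        for name, (low, high) in BOUNDS.get(section, {}).items():
            if name not in group:
                continue
            for value in spec_values(group[name]):
                if not low <= value <= high:
                    problems.append(
                        f"{section}[{index}].{name}: {value} outside [{low}, {high}]"
                    )
    return problems


gaze = [
    {
        "horizontal_angle": {"type": "list", "values": [-10, 0, 10]},
        "vertical_angle": {"type": "list", "values": [-10, 0, 10]},
        "percent": 20,
    },
    {
        "horizontal_angle": {"type": "list", "values": [0]},
        "vertical_angle": {"type": "list", "values": [0]},
        "percent": 80,
    },
]
print(check_section("gaze", gaze))  # prints []
```

Keying the bounds by section matters because the same parameter name can carry different limits: "pitch" is limited to -45..45 under "head_turn" but -180..180 under a camera's "relative_location". The service remains the authority on what is valid; a check like this only catches obvious mistakes early.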

Full and Complex Example

The JSON below describes a single image with most of the attributes set.

Note: headwear is not specified because we want hair.

View this JSON on GitHub.

{

"version": 1,

"humans": [{

"identities": {"ids": [27],"renders_per_identity": 1},

"facial_attributes": {

"expression": [{

"name": ["all"],

"intensity": {

"type": "list",

"values": [0.75]

},

"percent": 20

}, {

"name": ["none"],

"intensity": {

"type": "list",

"values": [0.75]

},

"percent": 80

}],

"gaze": [{

"horizontal_angle": {

"type": "list",

"values": [-10, 0, 10]

},

"vertical_angle": {

"type": "list",

"values": [-10, 0, 10]

},

"percent": 20

}, {

"horizontal_angle": {

"type": "list",

"values": [0]

},

"vertical_angle": {

"type": "list",

"values": [0]

},

"percent": 80

}],

"head_turn": [{

"pitch": {

"type": "list",

"values": [-10, 0, 10]

},

"yaw": {

"type": "list",

"values": [-10, 0, 10]

},

"roll": {

"type": "list",

"values": [-10, 0, 10]

},

"percent": 20

}, {

"pitch": {

"type": "list",

"values": [0]

},

"yaw": {

"type": "list",

"values": [0]

},

"roll": {

"type": "list",

"values": [0]

},

"percent": 80

}],

"hair": [{

"style": ["all"],

"color": ["all"],

"color_seed": {

"type": "list",

"values": [0]

},

"relative_density": {

"type": "list",

"values": [1]

},

"relative_length": {

"type": "list",

"values": [1]

},

"sex_matched_only": true,

"ethnicity_matched_only": false,

"percent": 100

}],

"facial_hair": [{

"style": ["none"],

"color": ["all"],

"color_seed": {

"type": "list",

"values": [0]

},

"match_hair_color": true,

"relative_density": {

"type": "list",

"values": [1]

},

"relative_length": {

"type": "list",

"values": [1]

},

"percent": 80

}, {

"style": ["all"],

"color": ["all"],

"color_seed": {

"type": "list",

"values": [0]

},

"match_hair_color": true,

"relative_density": {

"type": "list",

"values": [1]

},

"relative_length": {

"type": "list",

"values": [1]

},

"percent": 20

}],

"eyebrows": [{

"style": ["all"],

"relative_length": {

"type": "range",

"values": {

"min": 1,

"max": 1

}

},

"relative_density": {

"type": "range",

"values": {

"min": 1,

"max": 1

}

},

"color": ["all"],

"color_seed": {

"type": "list",

"values": [0]

},

"match_hair_color": true,

"sex_matched_only": true,

"percent": 100

}],

"eyes": [{

"iris_color": ["all"],

"redness": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"pupil_dilation": {"type": "range", "values": {"min": 0.0, "max": 1.0}},

"percent": 100

}]

},

"accessories": {

"glasses": [{

"style": ["none"],

"lens_color": ["default"],

"transparency": {

"type": "list",

"values": [1]

},

"metalness": {

"type": "list",

"values": [0]

},

"sex_matched_only": true,

"percent": 80

}, {

"style": ["all"],

"lens_color": ["all"],

"transparency": {

"type": "list",

"values": [0.95]

},

"metalness": {

"type": "list",

"values": [0.03]

},

"sex_matched_only": true,

"percent": 10

}, {

"style": ["all"],

"lens_color": ["default"],

"transparency": {

"type": "list",

"values": [1]

},

"metalness": {

"type": "list",

"values": [0]

},

"sex_matched_only": true,

"percent": 10

}],

"headwear": [{

"style": ["none"],

"sex_matched_only": true,

"percent": 80

}, {

"style": ["all"],

"sex_matched_only": true,

"percent": 20

}],

"masks": [{

"style": ["none"],

"position": ["2"],

"variant": ["all"],

"percent": 80

}, {